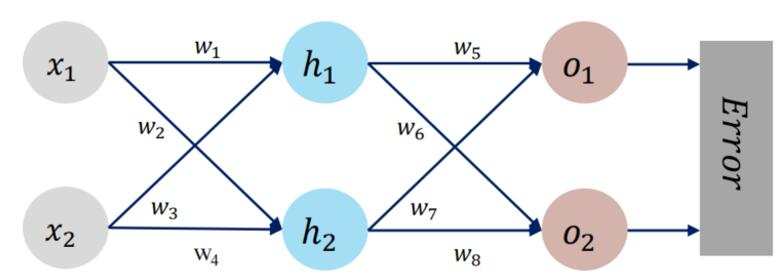

NN model:

Reference: 【Introduction to Artificial Intelligence: Models and Algorithms】 MOOC 8.3 Error Backpropagation (BP) Worked Example 【3rd edition】 - HBU_DAVID - 博客园 (cnblogs.com)

Objective:

Understand the forward propagation process and become familiar with PyTorch programming.

Initial values:

w1, w2, w3, w4, w5, w6, w7, w8 = 0.2, -0.4, 0.5, 0.6, 0.1, -0.5, -0.3, 0.8

x1, x2 = 0.5, 0.3

y1, y2 = 0.23, -0.07
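Working through the forward pass by hand with these initial values shows what the PyTorch code should reproduce. The sketch below uses plain Python and makes two assumptions. First, all biases are zero, as the code sets them. Second, the wiring follows the weight matrices used in the code: h1 uses w1 and w3, h2 uses w2 and w4, o1 uses w5 and w7, and o2 uses w6 and w8.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))

# Initial values from the text above
w1, w2, w3, w4, w5, w6, w7, w8 = 0.2, -0.4, 0.5, 0.6, 0.1, -0.5, -0.3, 0.8
x1, x2 = 0.5, 0.3

# Hidden layer: weighted sums (bias assumed to be 0), then sigmoid
h1_in = w1 * x1 + w3 * x2   # 0.1 + 0.15  = 0.25
h2_in = w2 * x1 + w4 * x2   # -0.2 + 0.18 = -0.02
h1, h2 = sigmoid(h1_in), sigmoid(h2_in)

# Output layer: same pattern with w5..w8 applied to the hidden outputs
o1_in = w5 * h1 + w7 * h2
o2_in = w6 * h1 + w8 * h2
o1, o2 = sigmoid(o1_in), sigmoid(o2_in)

print(f"h_in = ({h1_in:.4f}, {h2_in:.4f}), h_out = ({h1:.4f}, {h2:.4f})")
print(f"o_in = ({o1_in:.4f}, {o2_in:.4f}), o_out = ({o1:.4f}, {o2:.4f})")
```

This gives roughly o1 ≈ 0.477 and o2 ≈ 0.529, which the PyTorch versions below should match.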

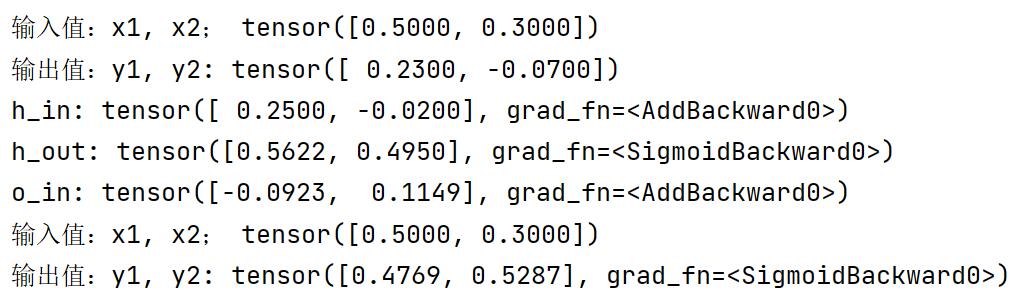

Output:

Source code 1:

import torch
import torch.nn as nn

def forward_propagate(x, w1, w2):  # forward propagation
    h = nn.Linear(2, 2)  # input -> hidden: linear map x @ W.T + b
    h.weight.data = w1
    h.bias.data = torch.zeros(2)  # one zero bias per hidden neuron
    h_out = torch.sigmoid(h(x))

    o = nn.Linear(2, 2)  # hidden -> output: linear map
    o.weight.data = w2
    o.bias.data = torch.zeros(2)  # one zero bias per output neuron
    o_out = torch.sigmoid(o(h_out))

    return o_out

if __name__ == "__main__":
    w1 = torch.Tensor([[0.2, 0.5], [-0.4, 0.6]])
    w2 = torch.Tensor([[0.1, -0.3], [-0.5, 0.8]])
    x = torch.tensor([0.5, 0.3])  # input values
    y = torch.tensor([0.23, -0.07])  # target values

    print("Input x1, x2:", x, "\nTarget y1, y2:", y)
    out_o = forward_propagate(x, w1, w2)
    print("Input x1, x2:", x, "\nOutput o1, o2:", out_o)

Source code 2:

The same network, refactored into the class Net(nn.Module) form in preparation for learning the "model training" code later.
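Why this refactoring prepares for training: when nn.Linear layers are assigned as attributes of an nn.Module, PyTorch registers their weights and biases as parameters, and an optimizer later consumes those parameters. The minimal sketch below illustrates this. The class name TinyNet and the learning rate are illustrative assumptions and do not come from the original.

```python
import torch
import torch.nn as nn

class TinyNet(nn.Module):  # hypothetical name; same 2-2-2 sigmoid shape as Net
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(2, 2)  # weight shape is (out_features, in_features)
        self.fc2 = nn.Linear(2, 2)

    def forward(self, x):
        return torch.sigmoid(self.fc2(torch.sigmoid(self.fc1(x))))

net = TinyNet()

# Layers assigned as attributes are registered automatically
names = [name for name, _ in net.named_parameters()]
n_params = sum(p.numel() for p in net.parameters())
print(names)     # ['fc1.weight', 'fc1.bias', 'fc2.weight', 'fc2.bias']
print(n_params)  # 12 = (4 weights + 2 biases) per layer

# Calling the module runs forward() through nn.Module.__call__
out = net(torch.tensor([0.5, 0.3]))
print(out.shape)  # torch.Size([2])

# An optimizer receives all registered parameters at once (lr chosen arbitrarily)
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)
```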

import torch
import torch.nn as nn

class Net(nn.Module):
    # Define the network structure
    def __init__(self, input_size, hidden_size, num_classes):
        super(Net, self).__init__()
        print(input_size, hidden_size, num_classes)
        self.fc1 = nn.Linear(input_size, hidden_size)  # input -> hidden: linear
        self.sigmoid = nn.Sigmoid()  # activation function: sigmoid
        self.fc2 = nn.Linear(hidden_size, num_classes)  # hidden -> output: linear

    # forward: how data flows through the network
    def forward(self, x, w1, w2):
        # print("x:", x)
        self.fc1.weight.data = w1
        self.fc1.bias.data = torch.zeros(self.fc1.out_features)  # zero bias per neuron
        out = self.fc1(x)
        print("h_in:", out)
        # print(self.fc1.weight.data)
        out = self.sigmoid(out)
        print("h_out:", out)

        self.fc2.weight.data = w2
        self.fc2.bias.data = torch.zeros(self.fc2.out_features)
        out = self.fc2(out)
        print("o_in:", out)
        # print(self.fc2.weight.data)
        out = self.sigmoid(out)
        # print("o_out:", out)
        return out

if __name__ == "__main__":
    net = Net(2, 2, 2)
    x = torch.tensor([0.5, 0.3])
    y = torch.tensor([0.23, -0.07])  # targets y1, y2 = 0.23, -0.07
    # Normally the parameters need not be set by hand; the model learns them.
    # Sometimes setting the initial parameters can improve efficiency.
    w1 = torch.Tensor([[0.2, 0.5], [-0.4, 0.6]])
    w2 = torch.Tensor([[0.1, -0.3], [-0.5, 0.8]])

    print("Input x1, x2:", x, "\nTarget y1, y2:", y)
    out_o = net(x, w1, w2)
    print("Input x1, x2:", x, "\nOutput o1, o2:", out_o)
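A common alternative to passing w1 and w2 into forward on every call is to set the weights once in __init__. Then forward takes only x, which is the shape a training loop expects. The sketch below follows that approach; FixedWeightNet is a hypothetical name. It also checks the result against the plain matrix form x @ W.T, which is what nn.Linear computes when the bias is zero.

```python
import torch
import torch.nn as nn

class FixedWeightNet(nn.Module):  # hypothetical name
    """Same 2-2-2 sigmoid network, but the weights are set once in __init__."""

    def __init__(self, w1, w2):
        super().__init__()
        self.fc1 = nn.Linear(2, 2)
        self.fc2 = nn.Linear(2, 2)
        with torch.no_grad():  # initialization should not be tracked by autograd
            self.fc1.weight.copy_(w1)
            self.fc1.bias.zero_()
            self.fc2.weight.copy_(w2)
            self.fc2.bias.zero_()

    def forward(self, x):
        h_out = torch.sigmoid(self.fc1(x))
        return torch.sigmoid(self.fc2(h_out))

w1 = torch.tensor([[0.2, 0.5], [-0.4, 0.6]])
w2 = torch.tensor([[0.1, -0.3], [-0.5, 0.8]])
x = torch.tensor([0.5, 0.3])

net = FixedWeightNet(w1, w2)
out_o = net(x)

# nn.Linear with zero bias computes x @ W.T, so the same result by hand:
manual = torch.sigmoid(torch.sigmoid(x @ w1.T) @ w2.T)
print(out_o)
print(torch.allclose(out_o, manual))  # True
```

Using copy_ under torch.no_grad() keeps the layers' registered nn.Parameter objects in place. Reassigning .data, as the original does, also works but is generally discouraged in current PyTorch code.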