The paper's author, Qiang Chen (谌强), has written an explanation of it on ReadPaper, which I recommend reading: https://readpaper.com/paper/669120545282228224

1. Research Motivation

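The paper aims to solve two problems that arise when local-window self-attention is applied to vision tasks: (1) a limited receptive field; (2) weak modeling ability along the channel dimension.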

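Several solutions already exist for local-window attention: HaloNet and Focal Transformer enlarge the receptive field by expanding the windows; Swin Transformer uses a shift operation to enlarge the receptive field; and Shuffle Transformer introduces a shuffle operation so that local windows can interact with each other.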
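To make the shift idea concrete, here is a minimal NumPy sketch of a Swin-style cyclic shift, assuming an 8×8 map split into 4×4 windows and a shift of half a window. Each pixel is labelled with the index of its original window; after rolling the map, the top-left window contains pixels from all four original windows, which is how the shift lets information cross window borders. The helper names `window_ids` and `partition_labels` are hypothetical, and Swin's attention mask for the wrapped-around pixels is deliberately omitted.

```python
import numpy as np

def window_ids(H, W, ws):
    """Label every pixel with the index of the ws x ws window it belongs to."""
    rows = np.arange(H)[:, None] // ws
    cols = np.arange(W)[None, :] // ws
    return rows * (W // ws) + cols                       # (H, W)

def partition_labels(lbl, ws):
    """Split an (H, W) label map into non-overlapping windows -> (nW, ws*ws)."""
    H, W = lbl.shape
    return lbl.reshape(H // ws, ws, W // ws, ws).transpose(0, 2, 1, 3).reshape(-1, ws * ws)

H = W = 8
ws = 4
shift = ws // 2

ids = window_ids(H, W, ws)                               # original windows 0..3
# Cyclic shift by half a window (no attention mask applied in this sketch).
shifted = np.roll(ids, shift=(-shift, -shift), axis=(0, 1))
windows = partition_labels(shifted, ws)

print(sorted(set(windows[0].tolist())))                  # [0, 1, 2, 3]
```

Without the shift, `partition_labels(ids, ws)[0]` would contain only label 0, so attention in that window could never see the other windows.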

The paper makes two contributions:

- Through a parallel design, it combines local-window self-attention with depth-wise convolution, fusing information across local windows and enlarging the receptive field.

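- Because the operations in the two branches share weights along different dimensions (local-window self-attention shares weights across channels, depth-wise convolution across spatial positions), the paper proposes a bi-directional interaction module between the parallel branches that fuses information across dimensions and strengthens the model's modeling ability along each of them.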

2. Main Method

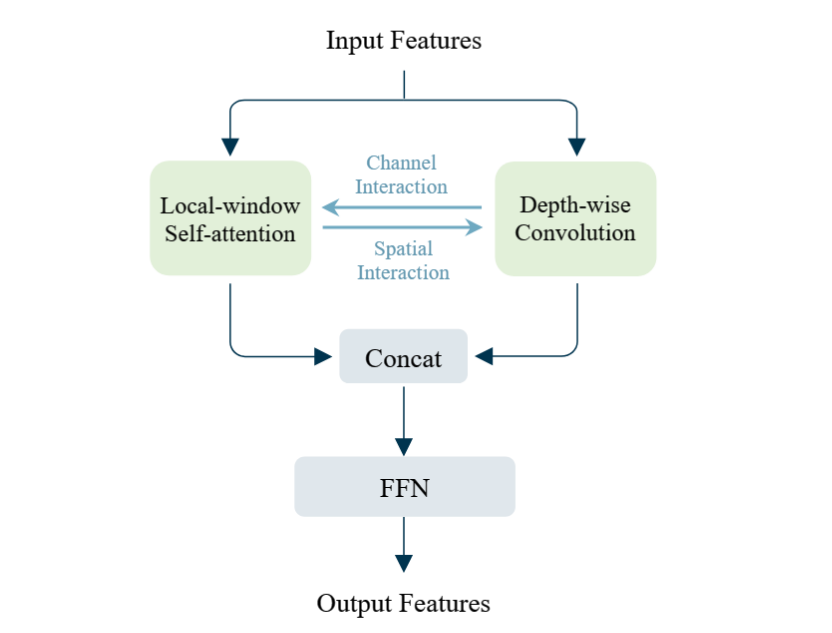

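The core contribution of the paper is the mixing block, whose structure is shown in the figure below. Its key idea is to run a CNN branch (depth-wise convolution) in parallel with local-window attention, with a bi-directional feature interaction between the two branches.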
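The parallel structure can be sketched in NumPy as follows. This is a simplified illustration, not the paper's implementation: it assumes both branches see the same C-channel input, that their outputs are concatenated along channels and linearly projected back to C, and it uses single-head attention with no normalization, activation, or interaction module. The weight names `wq`, `wk`, `wv`, `dw` and `proj` are hypothetical. The demo perturbs the first pixel of the bottom-right window and checks which outputs change.

```python
import numpy as np

def window_attention(x, ws, wq, wk, wv):
    """Single-head self-attention restricted to non-overlapping ws x ws windows."""
    H, W, C = x.shape
    # (H, W, C) -> (nW, ws*ws, C)
    win = x.reshape(H // ws, ws, W // ws, ws, C).transpose(0, 2, 1, 3, 4).reshape(-1, ws * ws, C)
    q, k, v = win @ wq, win @ wk, win @ wv
    a = q @ k.transpose(0, 2, 1) / np.sqrt(C)            # (nW, N, N) scores within each window
    a = np.exp(a - a.max(axis=-1, keepdims=True))        # numerically stable softmax
    a /= a.sum(axis=-1, keepdims=True)
    out = a @ v
    return out.reshape(H // ws, W // ws, ws, ws, C).transpose(0, 2, 1, 3, 4).reshape(H, W, C)

def dwconv3x3(x, k):
    """Depth-wise 3x3 conv with zero padding; k has shape (3, 3, C), one filter per channel."""
    H, W, C = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for di in range(3):
        for dj in range(3):
            out += xp[di:di + H, dj:dj + W, :] * k[di, dj]
    return out

def mixing_block(x, p, ws):
    """Run both branches in parallel, concatenate along channels, project back to C."""
    attn_branch = window_attention(x, ws, p["wq"], p["wk"], p["wv"])
    conv_branch = dwconv3x3(x, p["dw"])
    mixed = np.concatenate([attn_branch, conv_branch], axis=-1)   # (H, W, 2C)
    return mixed @ p["proj"]                                       # (H, W, C)

rng = np.random.default_rng(0)
H = W = 8
C = 4
ws = 4
p = {name: rng.standard_normal((C, C)) for name in ("wq", "wk", "wv")}
p["dw"] = rng.standard_normal((3, 3, C))
p["proj"] = rng.standard_normal((2 * C, C))

x = rng.standard_normal((H, W, C))
x2 = x.copy()
x2[4, 4] += 10.0                     # first pixel of the bottom-right window

attn_y = window_attention(x, ws, p["wq"], p["wk"], p["wv"])
attn_y2 = window_attention(x2, ws, p["wq"], p["wk"], p["wv"])
y, y2 = mixing_block(x, p, ws), mixing_block(x2, p, ws)

print(np.allclose(attn_y[3, 3], attn_y2[3, 3]))   # True: attention alone stops at the window border
print(np.allclose(y[3, 3], y2[3, 3]))             # False: the 3x3 conv at (3, 3) reaches (4, 4)
print(np.allclose(y[0, 0], y2[0, 0]))             # True: neither branch sees (4, 4) from (0, 0)
```

Position (3, 3) lies in the top-left window, so the attention branch alone cannot see the perturbation; the depth-wise convolution branch can, which is how the parallel design lets information flow across window borders.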

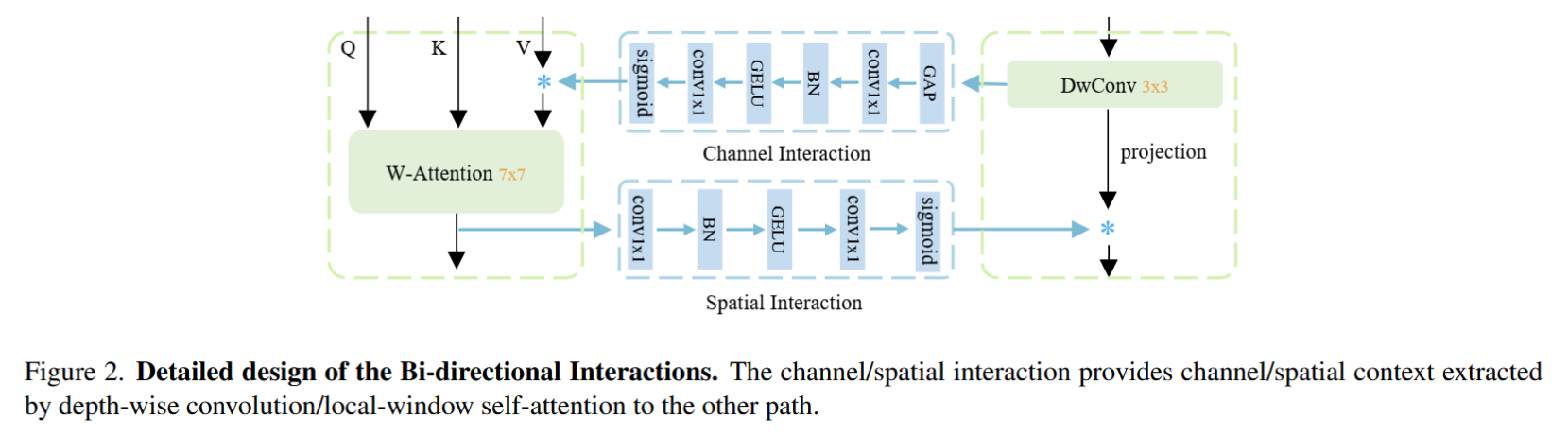

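The details of the bi-directional interaction are shown in the figure below. In essence, it uses an SENet-style module to generate weights that re-weight the features of the other branch. Note that the DwConv branch produces weights over the channel dimension, while the W-Attention branch produces weights over the spatial (HW) dimension.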
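A minimal NumPy sketch of the two interactions, assuming random stand-ins for the two branch outputs. The channel interaction squeezes the DwConv-branch features by global average pooling and passes them through a bottleneck MLP and a sigmoid to get one weight per channel, which re-weights the attention branch. The spatial interaction applies a per-pixel bottleneck down to a single channel and a sigmoid, giving one weight per position that re-weights the DwConv branch. The reduction ratio `r`, the use of ReLU, the absence of normalization layers, and applying the channel weights to the whole attention-branch output are assumptions made for illustration.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def relu(a):
    return np.maximum(a, 0.0)

def channel_interaction(conv_feat, w1, w2):
    """SE-style: (H, W, C) conv-branch features -> (C,) channel weights in (0, 1)."""
    s = conv_feat.mean(axis=(0, 1))             # global average pooling -> (C,)
    return sigmoid(relu(s @ w1) @ w2)           # bottleneck C -> C/r -> C

def spatial_interaction(attn_feat, w1, w2):
    """Per-pixel bottleneck: (H, W, C) attention-branch features -> (H, W, 1) spatial weights."""
    return sigmoid(relu(attn_feat @ w1) @ w2)   # C -> C/r -> 1 at every pixel

rng = np.random.default_rng(0)
H = W = 8
C = 16
r = 4
attn_feat = rng.standard_normal((H, W, C))      # stand-in for the W-Attention branch output
conv_feat = rng.standard_normal((H, W, C))      # stand-in for the DwConv branch output

ch_w = channel_interaction(conv_feat, rng.standard_normal((C, C // r)), rng.standard_normal((C // r, C)))
sp_w = spatial_interaction(attn_feat, rng.standard_normal((C, C // r)), rng.standard_normal((C // r, 1)))

attn_out = attn_feat * ch_w     # DwConv branch re-weights the attention branch per channel
conv_out = conv_feat * sp_w     # attention branch re-weights the DwConv branch per pixel
print(ch_w.shape, sp_w.shape)   # (16,) (8, 8, 1)
```

The two weight tensors have complementary shapes: `ch_w` broadcasts over every pixel, `sp_w` over every channel, which matches the point that each branch supplies the dimension the other one lacks.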

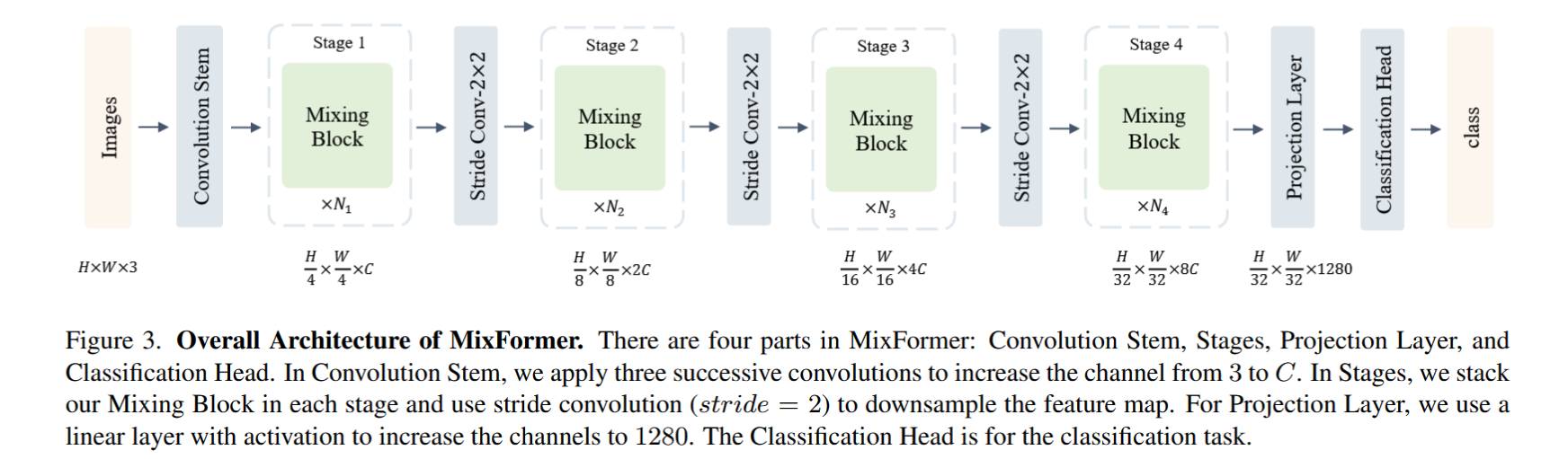

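The network architecture used by the authors is shown in the figure below. At the end, the authors use an FC layer to project the features to H/32 x W/32 x 1280, which then feeds the classification head, whose final fully connected layer maps to the class categories.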
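The shape bookkeeping of this head can be sketched with NumPy, assuming a 224×224 input, a hypothetical last-stage width `C4 = 512`, 1000 classes, and global average pooling between the 1280-channel projection and the classifier (the pooling step is my assumption, not stated above). The FC layer is applied independently at each of the H/32 × W/32 positions.

```python
import numpy as np

rng = np.random.default_rng(0)
H_in = W_in = 224            # assumed input resolution
C4 = 512                     # hypothetical channel count of the last stage
num_classes = 1000           # e.g. ImageNet-1k

feat = rng.standard_normal((H_in // 32, W_in // 32, C4))   # last-stage output: (7, 7, C4)
w_fc = rng.standard_normal((C4, 1280)) * 0.01
proj = feat @ w_fc                                         # per-position FC -> (7, 7, 1280)
pooled = proj.mean(axis=(0, 1))                            # assumed global average pooling -> (1280,)
w_cls = rng.standard_normal((1280, num_classes)) * 0.01
logits = pooled @ w_cls                                    # classifier -> (1000,)
print(feat.shape, proj.shape, pooled.shape, logits.shape)  # (7, 7, 512) (7, 7, 1280) (1280,) (1000,)
```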

I won't go into the experiments here; please refer to the paper.

3. Some Thoughts

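The idea of this paper is very simple, but the DwConv module and the Attention module could be arranged either in parallel or in series; the authors consider this in the supplementary material.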

In addition, the "discussion with related works" section of the supplementary material is very interesting and mentions many similar works:

In MixFormer, we consider two types of information exchanges: (1) across dimensions, (2) across windows.

- For the first type, Conformer [39] also performs information exchange between a transformer branch and a convolution branch. While its motivation is different from ours. Conformer aims to couple local and global features across convolution and transformer branches. MixFormer uses channel and spatial interactions to address the weak modeling ability issues caused by weight sharing on the channel (local-window self-attention) and the spatial (depth-wise convolution) dimensions [17].

- For the second type, Twins (strided convolution + global sub-sampled attention) [6] and Shuffle Transformer (neighbor-window connection (NWC) + random spatial shuffle) [26] construct local and global connections to achieve information exchanges, MSG Transformer (channel shuffle on extra MSG tokens) [12] applies global connection. Our MixFormer achieves this goal by concatenating the parallel features: the non-overlapped window feature and the local-connected feature (output of the dwconv3x3).