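1. Introduction to keepalived

1.1 What is keepalived?

Keepalived was originally designed for the LVS load balancer. Its job was to manage and monitor the state of every service node in an LVS cluster. VRRP support, which provides high availability, was added later. As a result, Keepalived can still manage LVS, and it can also serve as a high-availability solution for other services such as Nginx, HAProxy and MySQL.

Keepalived provides high availability mainly through VRRP, the Virtual Router Redundancy Protocol. VRRP was created to remove the single point of failure in static routing, so that the network keeps running without interruption when an individual node goes down.

Keepalived therefore does two things. It can configure and manage LVS and health-check the nodes behind it, and it can make network services on the system highly available.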

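1.2 Key features of keepalived

keepalived has three important functions:

- Managing the LVS load balancer
- Health-checking the nodes of an LVS cluster
- Providing high availability (failover) for network services on the system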

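1.3 How keepalived failover works

Keepalived fails over between high-availability nodes using VRRP (Virtual Router Redundancy Protocol).

When Keepalived is working normally, the Master node continuously sends heartbeat messages to the Backup node by multicast. These messages announce that the Master is still alive. If the Master fails, it stops sending heartbeats. The Backup notices the missing heartbeats and runs its own takeover procedure, taking over the Master's IP resources and services. When the Master recovers, the Backup releases the IP resources and services it took over and returns to its standby role.

So what is VRRP?

VRRP stands for Virtual Router Redundancy Protocol. It was created to remove the single point of failure in static routing. VRRP uses an election mechanism to decide which VRRP router handles the routing task.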

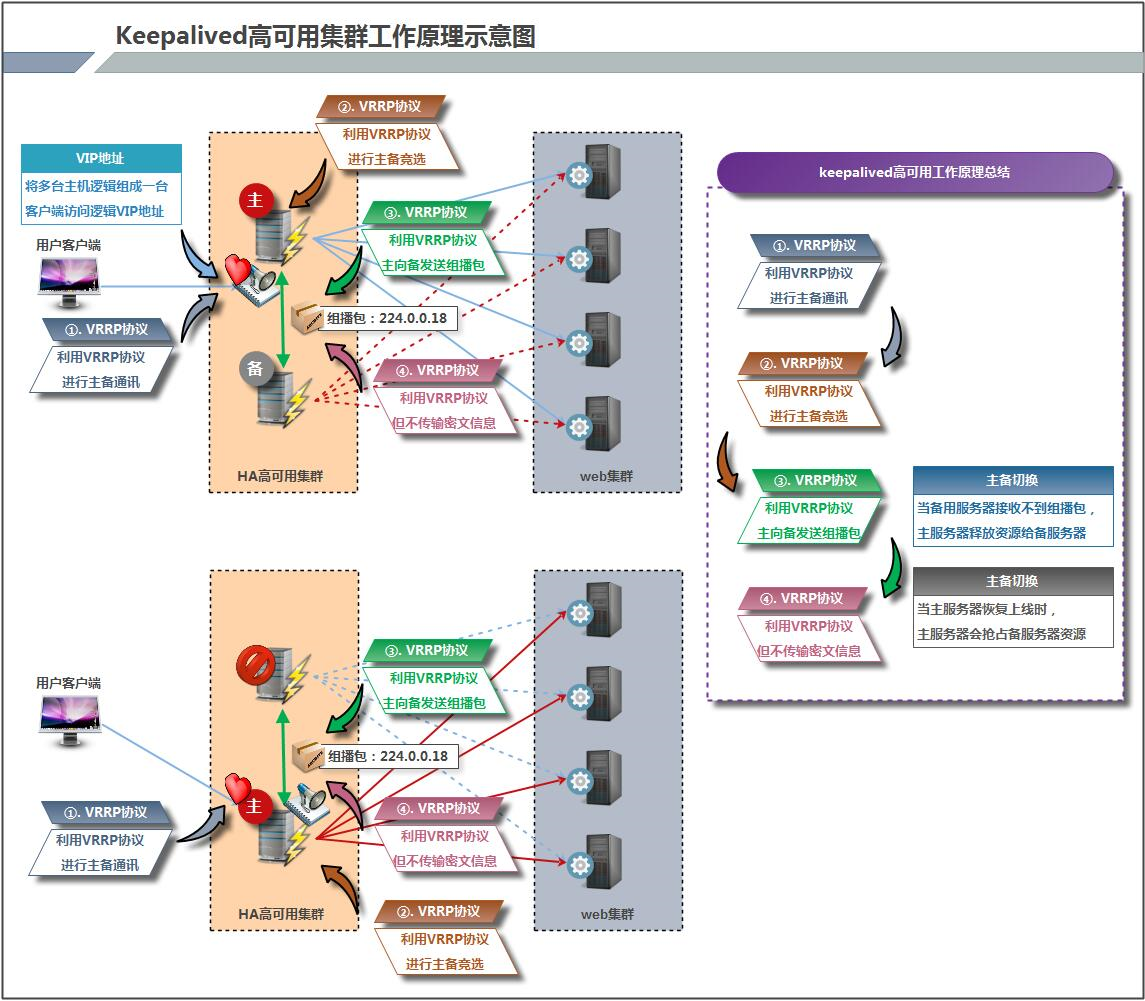
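The election rule can be made concrete with a minimal bash sketch. VRRP picks the router with the highest priority as master. On a priority tie, the router with the higher primary IP address wins (RFC 3768 / RFC 5798). The function names `ip_to_int` and `vrrp_elect` and the `name:priority:ip` input format are hypothetical and exist only for this illustration. This is a simplified model and does not reflect how keepalived implements the election.

```bash
#!/bin/bash
# Simplified model of VRRP master election (hypothetical helpers, not keepalived code).
# Highest priority wins; on a tie, the higher primary IPv4 address wins.

# ip_to_int A.B.C.D: print the address as one integer so it can be compared.
ip_to_int() {
    local IFS=.
    local a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# vrrp_elect name:priority:ip ...: print the name of the elected master.
vrrp_elect() {
    local best_name="" best_prio=-1 best_ip=-1
    local entry name prio addr addr_int
    for entry in "$@"; do
        IFS=: read -r name prio addr <<< "$entry"
        addr_int=$(ip_to_int "$addr")
        if (( prio > best_prio || (prio == best_prio && addr_int > best_ip) )); then
            best_name=$name
            best_prio=$prio
            best_ip=$addr_int
        fi
    done
    echo "$best_name"
}

vrrp_elect node1:150:192.168.136.153 node2:100:192.168.136.152   # prints node1
```

With the priorities used later in this article (150 on the master, 100 on the backup), the priority alone decides the election. The IP tie-break only matters when both nodes have the same priority.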

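1.4 How keepalived works

1.4.1 keepalived high-availability architecture diagram

1.4.2 Description of how keepalived works

Keepalived nodes communicate using VRRP, which uses an election to decide which node is master and which is backup. The master has a higher priority than the backup. While the cluster is running, the master therefore holds all the resources and the backup waits. When the master fails, the backup takes over its resources and serves clients in its place.

Between Keepalived nodes, only the master sends VRRP advertisements (by multicast), which tell the backup that the master is still alive. While these keep arriving, the backup does not try to preempt the master. When the master becomes unavailable, the backup stops receiving its advertisements. It then starts the relevant services and takes over the resources, so the business keeps running. At best, takeover can complete in under one second, which requires a sub-second advertisement interval.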
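The VRRPv2 timers in RFC 3768 determine how quickly the backup notices a dead master. keepalived uses VRRPv2 unless `vrrp_version 3` is set. The formulas are Master_Down_Interval = 3 × Advertisement_Interval + Skew_Time, where Skew_Time = (256 − Priority) / 256 seconds. The bash sketch below evaluates them in integer milliseconds. The function name `master_down_ms` is hypothetical. The configuration used later sets `advert_int 1` and gives the backup priority 100, which works out to roughly 3.6 s. That is why the best-case sub-second takeover time mentioned above needs a much shorter advertisement interval.

```bash
#!/bin/bash
# Master_Down_Interval per RFC 3768 (VRRPv2), computed in integer milliseconds.
#   Skew_Time            = (256 - priority) / 256 seconds
#   Master_Down_Interval = 3 * advert_int + Skew_Time
# Usage: master_down_ms <advert_int_seconds> <backup_priority>
master_down_ms() {
    local advert_int=$1 priority=$2
    # Integer division truncates the fractional millisecond.
    local skew_ms=$(( (256 - priority) * 1000 / 256 ))
    echo $(( 3 * advert_int * 1000 + skew_ms ))
}

master_down_ms 1 100   # prints 3609 (about 3.6 s)
```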

2. High availability for nginx load balancers with keepalived

2.1 Installing keepalived

Install keepalived on the master

//Stop the firewall and disable SELinux

```
[root@localhost ~]# systemctl stop firewalld
[root@localhost ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
[root@localhost ~]# setenforce 0
[root@localhost ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config
```
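The replacement in the SELinux `sed` command must use `\1`, a backreference to the captured `SELINUX=` prefix. If it is written as a bare `1`, sed replaces the whole line with the literal text `1disabled`. A mistake like this is easy to catch by running the substitution on a scratch copy first. The sketch below does that, using a temporary file made up for the illustration.

```bash
#!/bin/bash
# Try the SELinux substitution on a scratch file instead of /etc/selinux/config.
tmp=$(mktemp)
printf 'SELINUXTYPE=targeted\nSELINUX=enforcing\n' > "$tmp"

# \1 re-inserts the captured "SELINUX=" prefix; SELINUXTYPE= does not match.
sed -ri 's/^(SELINUX=).*/\1disabled/g' "$tmp"

# Expected: SELINUXTYPE=targeted unchanged, SELINUX=enforcing -> SELINUX=disabled
cat "$tmp"
```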

//Configure the network yum repositories

```
[root@localhost yum.repos.d]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
[root@localhost yum.repos.d]# sed -i 's#$releasever#7#g' /etc/yum.repos.d/CentOS-Base.repo
[root@localhost yum.repos.d]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
```

//Install keepalived

```
[root@localhost ~]# yum -y install keepalived
```

Install keepalived on the backup server in the same way

//Stop the firewall and disable SELinux

```
[root@liping ~]# systemctl stop firewalld
[root@liping ~]# systemctl disable firewalld
Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
[root@liping ~]# setenforce 0
[root@liping ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config
```

//Configure the network yum repositories

```
[root@liping ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
[root@liping ~]# sed -i 's#$releasever#7#g' /etc/yum.repos.d/CentOS-Base.repo
[root@liping ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
```

//Install keepalived

```
[root@liping ~]# yum -y install keepalived
```

2.2 Installing nginx on both the master and the backup

Install nginx on the master

```
[root@localhost ~]# yum -y install nginx
[root@localhost ~]# cd /usr/share/nginx/html/
[root@localhost html]# ls
404.html 50x.html en-US icons img index.html nginx-logo.png poweredby.png
[root@localhost html]# mv index.html{,.bak}
[root@localhost html]# echo 'master' > index.html
[root@localhost html]# ls
404.html 50x.html en-US icons img index.html index.html.bak nginx-logo.png poweredby.png
[root@localhost html]# systemctl start nginx
[root@localhost html]# systemctl enable nginx
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
[root@localhost html]# ss -antl
State      Recv-Q Send-Q  Local Address:Port   Peer Address:Port
LISTEN     0      128     *:80                 *:*
LISTEN     0      128     *:22                 *:*
LISTEN     0      100     127.0.0.1:25         *:*
LISTEN     0      25      *:514                *:*
LISTEN     0      128     :::80                :::*
LISTEN     0      128     :::22                :::*
LISTEN     0      100     ::1:25               :::*
LISTEN     0      25      :::514               :::*
```

Install nginx on the slave

```
[root@liping ~]# yum -y install nginx
[root@liping ~]# cd /usr/share/nginx/html/
[root@liping html]# ls
404.html 50x.html en-US icons img index.html nginx-logo.png poweredby.png
[root@liping html]# mv index.html{,.bak}
[root@liping html]# echo 'slave' > index.html
[root@liping html]# ls
404.html 50x.html en-US icons img index.html index.html.bak nginx-logo.png poweredby.png
[root@liping html]# systemctl start nginx
[root@liping html]# systemctl enable nginx
Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.
[root@liping html]# ss -antl
State      Recv-Q Send-Q  Local Address:Port   Peer Address:Port
LISTEN     0      128     *:80                 *:*
LISTEN     0      128     *:22                 *:*
LISTEN     0      100     127.0.0.1:25         *:*
LISTEN     0      128     :::80                :::*
LISTEN     0      128     :::22                :::*
LISTEN     0      100     ::1:25               :::*
```

Open the page in a browser to make sure the nginx service on the master is reachable.
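The same check can also be done from the command line, which is handy during the failover tests later on. This is a minimal sketch that assumes `curl` is installed. `check_page` is a hypothetical helper name, and the address in the example is the master's IP from this setup.

```bash
#!/bin/bash
# check_page URL EXPECTED: succeed only if the page body equals EXPECTED.
check_page() {
    local url=$1 expected=$2 body
    # -f: fail on HTTP errors, -s: silent, --max-time: do not hang forever.
    body=$(curl -fs --max-time 3 "$url") || return 1
    # $(...) strips the trailing newline written by: echo 'master' > index.html
    [ "$body" = "$expected" ]
}

# Example (assumes the master from this article is reachable):
# check_page http://192.168.136.153/ master && echo "master nginx OK"
```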

2.3 Configuring keepalived

2.3.1 Configuring the master keepalived

```
[root@localhost html]# cd /etc/keepalived
[root@localhost keepalived]# mv keepalived.conf{,-bak}
[root@localhost keepalived]# ls
keepalived.conf-bak
[root@localhost keepalived]# vim keepalived.conf
```

```
global_defs {
    router_id node1
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.136.250 dev ens33
    }
}

virtual_server 192.168.136.250 80 {
    delay_loop 3
    lvs_sched rr
    lvs_method DR
    protocol TCP
    real_server 192.168.136.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.136.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

```
[root@localhost keepalived]# systemctl start keepalived
[root@localhost keepalived]# systemctl enable keepalived
```

2.3.2 Configuring the backup keepalived

```
[root@liping html]# cd /etc/keepalived/
[root@liping keepalived]# ls
keepalived.conf
[root@liping keepalived]# mv keepalived.conf{,-bak}
[root@liping keepalived]# ls
keepalived.conf-bak
[root@liping keepalived]# vim keepalived.conf
```

```
global_defs {
    router_id node2
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.136.250 dev ens33
    }
}

virtual_server 192.168.136.250 80 {
    delay_loop 3
    lvs_sched rr
    lvs_method DR
    protocol TCP
    real_server 192.168.136.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.136.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

```
[root@liping keepalived]# systemctl restart keepalived
[root@liping keepalived]# systemctl enable keepalived
```
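The master and backup must agree on three settings: `virtual_router_id`, the authentication password and the VIP. The master also needs the higher `priority`. A typo in any of these is a common reason why both nodes end up claiming the VIP. Below is a rough bash/awk sketch that compares two copies of the file. It assumes the simple one-setting-per-line layout used here and is not a real keepalived config parser. `conf_value`, `conf_vip` and `compare_confs` are hypothetical helper names.

```bash
#!/bin/bash
# Rough consistency check between a master and a backup keepalived.conf.
# Assumes one setting per line, as in this article; not a real config parser.

# conf_value FILE KEY: print the value on the first line whose first word is KEY.
conf_value() {
    awk -v key="$2" '$1 == key { print $2; exit }' "$1"
}

# conf_vip FILE: print the first address listed inside virtual_ipaddress { }.
conf_vip() {
    awk '/virtual_ipaddress/ { found = 1; next } found && NF { print $1; exit }' "$1"
}

# compare_confs MASTER_CONF BACKUP_CONF: print problems, return 1 if any.
compare_confs() {
    local m=$1 b=$2 key rc=0 pm pb
    for key in virtual_router_id auth_pass; do
        if [ "$(conf_value "$m" "$key")" != "$(conf_value "$b" "$key")" ]; then
            echo "mismatch: $key"
            rc=1
        fi
    done
    if [ "$(conf_vip "$m")" != "$(conf_vip "$b")" ]; then
        echo "mismatch: virtual_ipaddress"
        rc=1
    fi
    pm=$(conf_value "$m" priority)
    pb=$(conf_value "$b" priority)
    if (( ${pm:-0} <= ${pb:-0} )); then
        echo "master priority ($pm) is not higher than backup priority ($pb)"
        rc=1
    fi
    return "$rc"
}
```

For example, copy the backup's file to the master with `scp 192.168.136.152:/etc/keepalived/keepalived.conf /tmp/backup.conf`. Then run `compare_confs /etc/keepalived/keepalived.conf /tmp/backup.conf` on the master.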

2.3.3 Checking which node holds the VIP

On the MASTER:

```
[root@localhost keepalived]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:00:52:6e brd ff:ff:ff:ff:ff:ff
    inet 192.168.136.153/24 brd 192.168.136.255 scope global dynamic ens33
       valid_lft 1395sec preferred_lft 1395sec
    inet 192.168.136.250/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::cb81:16ba:de26:872d/64 scope link
       valid_lft forever preferred_lft forever
```

On the SLAVE:

```
[root@liping keepalived]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:26:a4:52 brd ff:ff:ff:ff:ff:ff
    inet 192.168.136.152/24 brd 192.168.136.255 scope global dynamic ens33
       valid_lft 1508sec preferred_lft 1508sec
    inet6 fe80::ff7a:d77e:ff2:64bd/64 scope link
       valid_lft forever preferred_lft forever
```
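Reading the full `ip a` listing gets tedious when you repeat failover tests. The sketch below answers only one question: does this node hold the VIP? `holds_vip` is a hypothetical helper. It reads `ip` output from standard input, so it can also be tried against saved output.

```bash
#!/bin/bash
# holds_vip VIP: read "ip addr" output on stdin; succeed if VIP is assigned.
holds_vip() {
    # -F: fixed string; the trailing "/" keeps 192.168.136.25 from matching .250.
    grep -qF "inet $1/"
}

# On a node (interface name as used in this article):
# ip -4 addr show dev ens33 | holds_vip 192.168.136.250 && echo "VIP is here"
```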

Access the site through the VIP

Stop keepalived on the MASTER, then access the site through the VIP again

```
[root@localhost ~]# systemctl stop keepalived
[root@localhost ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:00:52:6e brd ff:ff:ff:ff:ff:ff
    inet 192.168.136.153/24 brd 192.168.136.255 scope global dynamic ens33
       valid_lft 1103sec preferred_lft 1103sec
    inet6 fe80::cb81:16ba:de26:872d/64 scope link
       valid_lft forever preferred_lft forever

[root@liping ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:26:a4:52 brd ff:ff:ff:ff:ff:ff
    inet 192.168.136.152/24 brd 192.168.136.255 scope global dynamic ens33
       valid_lft 1235sec preferred_lft 1235sec
    inet 192.168.136.250/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::ff7a:d77e:ff2:64bd/64 scope link
       valid_lft forever preferred_lft forever
```

2.4 Letting keepalived monitor the nginx load balancer

keepalived monitors the state of the nginx load balancer through scripts.

Write the scripts on the master

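```
[root@localhost ~]# mkdir /scripts
[root@localhost ~]# cd /scripts/
[root@localhost scripts]# vim check_n.sh
```

```bash
#!/bin/bash
# Count nginx processes, excluding the grep itself and this script ($0).
nginx_status=$(ps -ef | grep -Ev "grep|$0" | grep 'nginx' | wc -l)

# No nginx process left: stop keepalived so the VIP moves to the backup.
if [ "$nginx_status" -lt 1 ]; then
    systemctl stop keepalived
fi
```

```
[root@localhost scripts]# chmod +x check_n.sh
```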
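Counting `ps -ef | grep` lines works, but the count has to exclude the grep process and the script itself. `pgrep -x` matches the process name exactly and reports the result through its exit status, so that filtering is unnecessary. The following is a minimal alternative sketch, not the author's script. `proc_running` is a hypothetical name, and the keepalived action is left commented out so the snippet is safe to try.

```bash
#!/bin/bash
# proc_running NAME: exit 0 if a process whose name is exactly NAME exists.
proc_running() {
    pgrep -x "$1" > /dev/null
}

# Same logic as check_n.sh: stop keepalived when no nginx process is left.
# if ! proc_running nginx; then
#     systemctl stop keepalived
# fi
```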

```
[root@localhost scripts]# vim notify.sh
```

```bash
#!/bin/bash
# Called by keepalived as: notify.sh master|backup VIP
VIP=$2

# Send a mail announcing that this node has become master.
sendmail() {
    subject="${VIP}'s server keepalived state has changed"
    content="$(date +'%F %T'): $(hostname)'s state changed to master"
    echo "$content" | mail -s "$subject" 1470044516@qq.com
}

case "$1" in
master)
    # Becoming master: make sure nginx is running, then notify.
    nginx_status=$(ps -ef | grep -Ev "grep|$0" | grep 'nginx' | wc -l)
    if [ "$nginx_status" -lt 1 ]; then
        systemctl start nginx
    fi
    sendmail
    ;;
backup)
    # Becoming backup: stop nginx if it is still running.
    nginx_status=$(ps -ef | grep -Ev "grep|$0" | grep 'nginx' | wc -l)
    if [ "$nginx_status" -gt 0 ]; then
        systemctl stop nginx
    fi
    ;;
*)
    echo "Usage: $0 master|backup VIP"
    ;;
esac
```

```
[root@localhost scripts]# chmod +x notify.sh
```

Write the script on the slave

```
[root@liping ~]# mkdir /scripts
[root@liping ~]# cd /scripts/
[root@liping scripts]# vim notify.sh
```

```bash
#!/bin/bash
# Called by keepalived as: notify.sh master|backup VIP
VIP=$2

# Send a mail announcing that this node has become master.
sendmail() {
    subject="${VIP}'s server keepalived state has changed"
    content="$(date +'%F %T'): $(hostname)'s state changed to master"
    echo "$content" | mail -s "$subject" 1470044516@qq.com
}

case "$1" in
master)
    # Becoming master: make sure nginx is running, then notify.
    nginx_status=$(ps -ef | grep -Ev "grep|$0" | grep 'nginx' | wc -l)
    if [ "$nginx_status" -lt 1 ]; then
        systemctl start nginx
    fi
    sendmail
    ;;
backup)
    # Becoming backup: stop nginx if it is still running.
    nginx_status=$(ps -ef | grep -Ev "grep|$0" | grep 'nginx' | wc -l)
    if [ "$nginx_status" -gt 0 ]; then
        systemctl stop nginx
    fi
    ;;
*)
    echo "Usage: $0 master|backup VIP"
    ;;
esac
```

```
[root@liping scripts]# chmod +x notify.sh
```

2.5 Adding the monitoring scripts to the keepalived configuration

Configure the master keepalived

```
[root@localhost ~]# vim /etc/keepalived/keepalived.conf
```

```
global_defs {
    router_id node1
}

vrrp_script nginx_check {
    script "/scripts/check_n.sh"
    interval 1
    weight -20
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 52
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.136.250 dev ens33
    }
    # track_script and the notify_* hooks belong inside vrrp_instance
    track_script {
        nginx_check
    }
    notify_master "/scripts/notify.sh master 192.168.136.250"
    notify_backup "/scripts/notify.sh backup 192.168.136.250"
}

virtual_server 192.168.136.250 80 {
    delay_loop 3
    lvs_sched rr
    lvs_method DR
    protocol TCP
    real_server 192.168.136.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.136.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

```
[root@localhost ~]# systemctl restart keepalived
```
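With `weight -20`, a failing check script lowers the master's priority by 20 for as long as the check keeps failing. Note the arithmetic with the priorities used here. 150 − 20 = 130 is still above the backup's 100, so the weight on its own would not move the VIP. That is presumably why check_n.sh stops keepalived outright instead of relying on the weight. For the weight alone to cause failover with these priorities, it would have to be more negative than −50. The bash sketch below checks a given weight; `would_fail_over` is a hypothetical helper. Equal priorities are resolved by IP address in VRRP, and this simplification ignores that case.

```bash
#!/bin/bash
# would_fail_over MASTER_PRIO BACKUP_PRIO WEIGHT
# With a negative vrrp_script weight, a failing check lowers the master's
# priority by |WEIGHT|; the backup takes over only if it then ranks higher.
# (Ties are decided by IP address in VRRP; this sketch treats them as "no".)
would_fail_over() {
    local master=$1 backup=$2 weight=$3
    local effective=$(( master + weight ))
    echo "effective master priority: $effective (backup: $backup)"
    (( effective < backup ))
}

would_fail_over 150 100 -20   # effective 130: still above 100, no failover
would_fail_over 150 100 -60   # effective 90: the backup wins
```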

Configure the backup keepalived

```
[root@liping ~]# vim /etc/keepalived/keepalived.conf
```

```
global_defs {
    router_id node2
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 52
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        192.168.136.250 dev ens33
    }
    notify_master "/scripts/notify.sh master 192.168.136.250"
    notify_backup "/scripts/notify.sh backup 192.168.136.250"
}

virtual_server 192.168.136.250 80 {
    delay_loop 3
    lvs_sched rr
    lvs_method DR
    protocol TCP
    real_server 192.168.136.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.136.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

//Stop nginx on the slave, then stop keepalived on the master

```
[root@liping ~]# systemctl stop nginx
[root@liping ~]# ss -antl
State      Recv-Q Send-Q  Local Address:Port   Peer Address:Port
LISTEN     0      128     *:22                 *:*
LISTEN     0      100     127.0.0.1:25         *:*
LISTEN     0      128     :::22                :::*
LISTEN     0      100     ::1:25               :::*

[root@localhost ~]# systemctl stop keepalived
```

//nginx on the slave is started automatically (by notify.sh, through notify_master)

```
[root@liping ~]# ss -antl
State      Recv-Q Send-Q  Local Address:Port   Peer Address:Port
LISTEN     0      128     *:80                 *:*
LISTEN     0      128     *:22                 *:*
LISTEN     0      100     127.0.0.1:25         *:*
LISTEN     0      128     :::80                :::*
LISTEN     0      128     :::22                :::*
LISTEN     0      100     ::1:25               :::*
[root@liping ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:26:a4:52 brd ff:ff:ff:ff:ff:ff
    inet 192.168.136.152/24 brd 192.168.136.255 scope global dynamic ens33
       valid_lft 1413sec preferred_lft 1413sec
    inet 192.168.136.250/32 scope global ens33
       valid_lft forever preferred_lft forever
    inet6 fe80::ff7a:d77e:ff2:64bd/64 scope link
       valid_lft forever preferred_lft forever
```

Access the website through the VIP