Reposted from the Kunpeng Forum

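This post shares the problems encountered, and the lessons learned, during a Kunpeng migration by a full-stack solution provider that specializes in key industrial internet technologies and product development. Its two core businesses are industrial internet applications for manufacturing and big data services for industrial enterprises.

The first topic is compiling and installing the big data component CDH. CDH is written in Java and is configured by default to run on x86 machines, so it has to be modified before it will run on Kunpeng cloud servers. We hit a number of pitfalls along the way and record them here for reference.

CDH is billed as the most complete, tested, and popular distribution of Apache Hadoop and related projects. It provides the core elements of Hadoop (scalable storage and distributed computing) along with a web-based user interface and important enterprise features. CDH is open source under the Apache license and is billed as the only Hadoop solution to offer unified batch processing, interactive SQL, interactive search, and role-based access control.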

Environment:

| Category | Item | Version |
| --- | --- | --- |
| OS | CentOS | 7.5 |
| OS | Kernel | 4.14 |
| Software | CDH-hadoop | hadoop-2.6.0-cdh5.13.3 |
| Software | Maven | 3.5.4 |
| Software | Ant | 1.9.4 |
| Software | Git | 1.8.3.1 |
| Software | GCC | 4.8.5 |
| Software | Cmake | 3.11.2 |
| Software | JDK | 1.8 |

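Installing dependencies

yum -y install gcc-c++ autoconf automake libtool cmake svn openssl-devel ncurses-devel

Compiling and installing components

Download the installation packages

1. Install jdk-1.8.0

See the "Huawei Cloud Kunpeng Cloud Services Best Practices: JDK-1.8.0 Installation and Configuration Guide".

2. Install Maven

Overview: Maven is a project management tool that manages a project's build, reporting, and documentation from a short piece of descriptive information (the POM).

Package: apache-maven-3.5.4-bin.tar.gz

wget https://archive.apache.org/dist/maven/maven-3/3.5.4/binaries/apache-maven-3.5.4-bin.tar.gz

Extract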

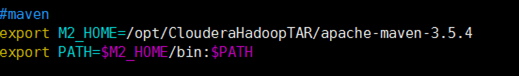

tar -zxvf apache-maven-3.5.4-bin.tar.gz -C /opt/ClouderaHadoopTAR/

Configure the Maven environment variables

vim /etc/profile

export M2_HOME=/opt/ClouderaHadoopTAR/apache-maven-3.5.4

export PATH=$M2_HOME/bin:$PATH

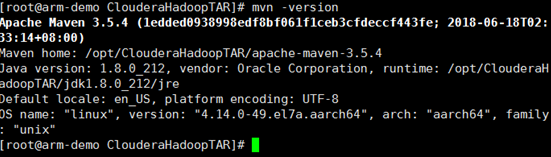

source /etc/profile

Verify the installation

mvn -v
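The two export lines above are added to /etc/profile by hand. As a rough sketch of doing the same thing without duplicating lines on a re-run, the helper below appends a line only when it is not already present. The names `append_once` and `PROFILE_FILE` are hypothetical, and the default target is a scratch file rather than the real /etc/profile.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
# append_once is a hypothetical helper, not part of Maven or CentOS.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -x: match the whole line, -F: treat the line as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Assumption: set PROFILE_FILE=/etc/profile only when you are ready to change
# the real system profile; the default here is a scratch file.
PROFILE_FILE="${PROFILE_FILE:-$(mktemp)}"

append_once "$PROFILE_FILE" 'export M2_HOME=/opt/ClouderaHadoopTAR/apache-maven-3.5.4'
append_once "$PROFILE_FILE" 'export PATH=$M2_HOME/bin:$PATH'
```

Running the script a second time against the same file leaves it unchanged, which is the point of the whole-line match.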

3. Install Findbugs

Package: findbugs-3.0.1.tar.gz

wget http://prdownloads.sourceforge.net/findbugs/findbugs-3.0.1.tar.gz

Extract

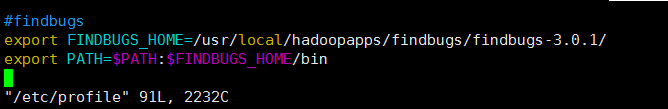

tar -zxvf findbugs-3.0.1.tar.gz -C /usr/local/hadoopapps/findbugs/

Configure the Findbugs environment variables

export FINDBUGS_HOME=/usr/local/hadoopapps/findbugs/findbugs-3.0.1/

export PATH=$PATH:$FINDBUGS_HOME/bin

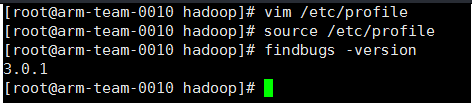

Apply the environment variables

source /etc/profile

Verify the installation

findbugs -version

4. Install Protocol Buffers

Install it directly with yum

yum install protobuf-compiler.aarch64

Verify the installation

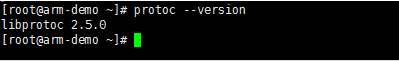

protoc --version
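The build later requires protoc to match the version that Hadoop pins (2.5.0, according to the cdh-root POM shown in step (7) further down). A minimal sketch of checking that up front is given here; `check_protoc_version` is a hypothetical helper name, and the only assumption about protoc is that `protoc --version` prints a string of the form "libprotoc 2.5.0".

```shell
#!/usr/bin/env bash
# Compare a `protoc --version` string with the version the build expects.
# check_protoc_version is a hypothetical helper name.
check_protoc_version() {
  local reported="$1" expected="$2"
  # `protoc --version` prints e.g. "libprotoc 2.5.0"; keep the last field.
  local actual="${reported##* }"
  if [ "$actual" = "$expected" ]; then
    echo "protoc $actual matches"
  else
    echo "protoc $actual does not match required $expected" >&2
    return 1
  fi
}

# Usage on a real machine (assumes protoc is on PATH):
#   check_protoc_version "$(protoc --version)" 2.5.0
```

Passing the version string in as an argument keeps the helper usable on a machine where protoc is not installed yet.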

5. Install Snappy

Overview: Snappy is a C++ library for compression and decompression.

Package: snappy-1.1.3.tar.gz

wget https://github.com/google/snappy/releases/download/1.1.3/snappy-1.1.3.tar.gz

Extract

tar -zxvf snappy-1.1.3.tar.gz

Enter the snappy directory

cd snappy-1.1.3/

Compile and install

./configure

make

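make install

Verify the installation by listing the Snappy libraries

ls -lh /usr/local/lib | grep snappy

With the dependencies installed, the CDH build can begin.

Build target: hadoop-2.6.0-cdh5.13.3

wget http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.13.3.tar.gz

Extract

tar -zxvf hadoop-2.6.0-cdh5.13.3.tar.gz

Enter the hadoop-2.6.0-cdh5.13.3 directory

cd hadoop-2.6.0-cdh5.13.3/src # the directory that contains pom.xml

Changes required to build with JDK 8

(1) Change the JDK version that Hadoop depends on

sed -i "s/1.7/1.8/g" `grep javaVersion -rl /usr/local/src/hadoop-2.6.0-cdh5.13.3/pom.xml`

Otherwise the build fails with the following error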

[WARNING] Rule 1: org.apache.maven.plugins.enforcer.RequireJavaVersion failed with message:

Detected JDK Version: 1.8.0-131 is not in the allowed range [1.7.0,1.7.1000}].

(2) Replace the jdk.tools version dependency

sed -i "s/1.7/1.8/g" `grep 1.7 -rl /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/pom.xml`

Otherwise the build fails with the following error

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:2.5.1:compile (default-compile) on project hadoop-annotations: Compilation failure: Compilation failure:

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/tools/ExcludePrivateAnnotationsJDiffDoclet.java:[20,22] error: package com.sun.javadoc does not exist

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/tools/ExcludePrivateAnnotationsJDiffDoclet.java:[21,22] error: package com.sun.javadoc does not exist

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/tools/ExcludePrivateAnnotationsJDiffDoclet.java:[22,22] error: package com.sun.javadoc does not exist

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/tools/ExcludePrivateAnnotationsJDiffDoclet.java:[35,16] error: cannot find symbol

[ERROR] symbol: class LanguageVersion

[ERROR] location: class ExcludePrivateAnnotationsJDiffDoclet

(3) Remove the malformed tag

sed -i "s#</ul>##g" `grep "</ul>" -l /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/InterfaceStability.java`

Otherwise the build fails with the following error

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-javadoc-plugin:2.8.1:jar (module-javadocs) on project hadoop-annotations: MavenReportException: Error while creating archive:

[ERROR] Exit code: 1 - /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/src/main/java/org/apache/hadoop/classification/InterfaceStability.java:27: error: unexpected end tag: </ul>

[ERROR] * </ul>

[ERROR] ^

[ERROR]

[ERROR] Command line was: /usr/local/jdk1.8.0_131/jre/../bin/javadoc @options @packages

[ERROR]

[ERROR] Refer to the generated Javadoc files in '/usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-annotations/target' dir.

[ERROR]
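The substitutions in steps (1) and (2) rewrite every string that matches 1.7, and because the dot is unescaped it matches any character. The pattern in step (3) itself contains a slash, which collides with the usual sed delimiter. The sketch below shows a narrower form of both edits on scratch files. It assumes GNU sed (as on CentOS), and the sample file contents are illustrative stand-ins, not copies of the Hadoop sources.

```shell
#!/usr/bin/env bash
work="$(mktemp -d)"

# Illustrative stand-in for a pom.xml; not copied from the Hadoop source tree.
cat > "$work/pom.xml" <<'EOF'
<properties>
  <javaVersion>1.7</javaVersion>
  <some.other.version>2.1.7</some.other.version>
</properties>
EOF

# Escape the dot and anchor on the element name so only javaVersion changes.
sed -i 's|<javaVersion>1\.7</javaVersion>|<javaVersion>1.8</javaVersion>|' "$work/pom.xml"

# Illustrative stand-in for a Javadoc comment with a stray closing tag.
cat > "$work/Sample.java" <<'EOF'
/**
 * Some description.
 * </ul>
 */
EOF

# The pattern contains "/", so use "#" as the sed delimiter instead of "/".
sed -i 's#</ul>##g' "$work/Sample.java"
```

Anchoring on the element name leaves unrelated version strings such as 2.1.7 alone, which a blanket replacement would also rewrite.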

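(4) Disable DocLint

Java 8 introduced DocLint, which checks Javadoc comments for errors during development, before the Javadoc is generated, and links the findings back to the source code. If the comments contain errors, no Javadoc is produced.

DocLint is enabled by default. Adding -Xdoclint:none to the configuration turns it off, so Javadoc is generated without these checks.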
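The article makes this change by hand in an editor, as the next step describes. As a non-interactive alternative, the sketch below inserts the property right after the first `<properties>` opening tag. `add_doclint_property` is a hypothetical helper name, and the approach assumes GNU sed, whose `0,/re/` address form and `\n` in the replacement are used here.

```shell
#!/usr/bin/env bash
# add_doclint_property is a hypothetical helper; it assumes GNU sed.
add_doclint_property() {
  local pom="$1"
  # Do nothing if the property is already present.
  grep -q '<additionalparam>-Xdoclint:none</additionalparam>' "$pom" && return 0
  # 0,/re/ limits the substitution to the first matching line (GNU sed).
  sed -i '0,/<properties>/s|<properties>|<properties>\n    <additionalparam>-Xdoclint:none</additionalparam>|' "$pom"
}

# Usage with the path given in this article:
#   add_doclint_property /usr/local/src/hadoop-2.6.0-cdh5.13.3/pom.xml
```

It is worth inspecting the result before building, since the sketch only touches the first `<properties>` element it finds.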

vim /usr/local/src/hadoop-2.6.0-cdh5.13.3/pom.xml

(5) Add the following property between <properties> and </properties>

<additionalparam>-Xdoclint:none</additionalparam>

Otherwise the build fails with the following error

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-javadoc-plugin:2.8.1:jar (module-javadocs) on project hadoop-nfs: MavenReportException: Error while creating archive:

[ERROR] Exit code: 1 - /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-nfs/src/main/java/org/apache/hadoop/oncrpc/RpcCallCache.java:126: warning: no @return

[ERROR] public String getProgram() {

[ERROR] ^

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-common-project/hadoop-nfs/src/main/java/org/apache/hadoop/oncrpc/RpcCallCache.java:131: warning: no @param for clientId

(6) Apply a bug fix

Reference: https://issues.apache.org/jira/browse/YARN-3400

Replace two files:

./hadoop-2.6.0-cdh5.13.3/src/hadoop-mapreduce-project/hadoop-mapreduce-client/hadoop-mapreduce-client-nativetask/src/main/native/src/lib/primitives.h

./hadoop-2.6.0-cdh5.13.3/src/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-common/src/main/java/org/apache/hadoop/yarn/ipc/RPCUtil.java

The replacement files are provided in the attachment. Because of upload restrictions their file names were changed, so rename them to primitives.h and RPCUtil.java respectively.

Otherwise the build fails with:

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:2.5.1:compile (default-compile) on project hadoop-yarn-common: Compilation failure: Compilation failure:

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-common/src/main/java/org/apache/hadoop/yarn/ipc/RPCUtil.java:[101,10] error: unreported exception Throwable; must be caught or declared to be thrown

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-common/src/main/java/org/apache/hadoop/yarn/ipc/RPCUtil.java:[104,10] error: unreported exception Throwable; must be caught or declared to be thrown

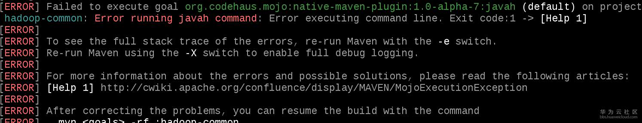

[ERROR] /usr/local/src/hadoop-2.6.0-cdh5.13.3/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-common/src/main/java/org/apache/hadoop/yarn/ipc/RPCUtil.java:[107,10] error: unreported exception Throwable; must be caught or declared to be thrown

(7) Install protobuf

The version must match the one Hadoop expects; otherwise the build fails with:

[WARNING] [protoc, --version] failed: java.io.IOException: Cannot run program "protoc": error=2, No such file or directory

[ERROR] stdout: []

Check which protobuf version this Hadoop release specifies

grep "<cdh.protobuf.version>" /root/.m2/repository/com/cloudera/cdh/cdh-root/5.13.3/cdh-root-5.13.3.pom

<cdh.protobuf.version>2.5.0</cdh.protobuf.version>

Replace the yum repository

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-6.repo

Run the build

mvn package -DskipTests -Pdist,native -Dtar -Dsnappy.lib=/usr/local/lib -Dbundle.snappy -Dmaven.javadoc.skip=true

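The build output is under ./hadoop-dist/target/

hadoop-2.6.0-cdh5.13.3.tar.gz is the tarball

hadoop-2.6.0-cdh5.13.3 is the unpacked directory

1. A javah error may occur during the build

Setting the JAVA_HOME path resolves it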

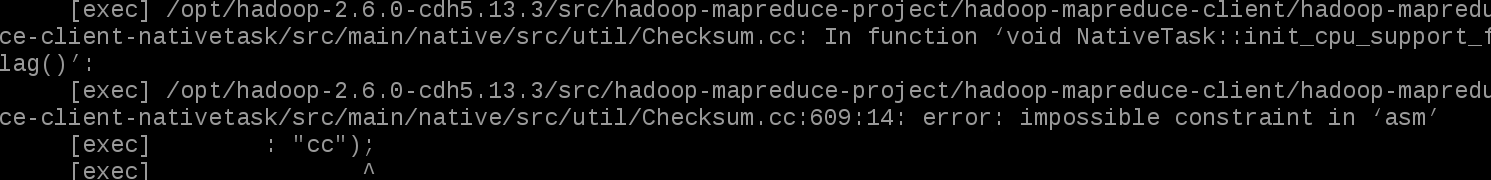

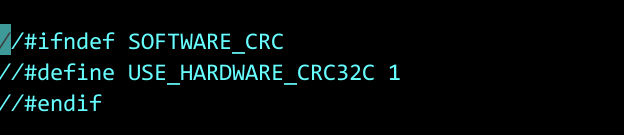
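For the javah error, JAVA_HOME has to point at the JDK root, not at a JRE. One way to derive it from the javac binary is sketched below. `java_home_from_javac` is a hypothetical helper name, and the usage line assumes javac is on PATH and that `readlink -f` is available (it is in GNU coreutils).

```shell
#!/usr/bin/env bash
# java_home_from_javac is a hypothetical helper: given the resolved path of a
# javac binary, strip the trailing /bin/javac to get the JDK root.
java_home_from_javac() {
  local javac_path="$1"
  printf '%s\n' "${javac_path%/bin/javac}"
}

# Usage on a real machine (assumes javac is on PATH and readlink -f exists):
#   export JAVA_HOME="$(java_home_from_javac "$(readlink -f "$(command -v javac)")")"
```

Resolving symlinks first matters because javac on PATH is often a link into an alternatives directory.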

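2. Problem with Checksum.cc

Comment out the hardware CRC32 implementation so that the software implementation is used

Edit /opt/hadoop-2.6.0-cdh5.13.3/src/hadoop-mapreduce-project/hadoop-mapreduce-client/hadoop-mapreduce-client-nativetask/src/main/native/src/util/Checksum.cc

Comment out lines 582, 583, and 584, and the build can continue.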
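Commenting out a fixed line range can also be scripted. The sketch below prefixes `//` to an inclusive range of lines and keeps a backup copy; `comment_out_lines` is a hypothetical helper name. The line numbers 582 to 584 come from this article, so check that they still point at the hardware CRC32 code in your copy of the file before running it.

```shell
#!/usr/bin/env bash
# comment_out_lines is a hypothetical helper that prefixes "// " to an
# inclusive range of lines, keeping a .bak copy of the original file.
comment_out_lines() {
  local file="$1" first="$2" last="$3"
  sed -i.bak "${first},${last}s|^|// |" "$file"
}

# Usage with the numbers given in this article (path shortened on purpose;
# substitute the full path to Checksum.cc):
#   comment_out_lines Checksum.cc 582 584
```

The `.bak` file makes it easy to restore the original if the range turns out to be wrong.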

Compiling libtorrent-rasterbar.so

1. Install dependencies

yum install -y boost boost-devel

2. Download the source

wget https://github.com/arvidn/libtorrent/releases/download/libtorrent-1_0_6/libtorrent-rasterbar-1.0.6.tar.gz

3. Compile the source

tar -zxvf libtorrent-rasterbar-1.0.6.tar.gz

cd libtorrent-rasterbar-1.0.6

./configure

make -j4

make install

4. Locate the libtorrent-rasterbar.so file

Wrapping C/C++ with SWIG into a .so shared library callable from Python

1. Install swig

yum install -y swig

2. Download the source

git clone https://github.com/AGWA/parentdeathutils.git

3. Modify the source

cd parentdeathutils

cp diewithparent.c diewithparent.i

vim diewithparent.i

/*** Add the following at the top of the file ***/

%module diewithparent

%{

#define SWIG_FILE_WITH_INIT

#include <sys/prctl.h>

#include <signal.h>

#include <unistd.h>

#include <stdio.h>

#include <stdlib.h>

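%}

4. Build the Python module by running swig with the -python option

swig -python diewithparent.i

The command generates two files: diewithparent_wrap.c and diewithparent.py

5. Build the shared library with distutils

First define a configuration file named setup.py

vim setup.py

The contents of setup.py are as follows: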

# -*- coding: utf-8 -*-

from distutils.core import setup, Extension

diewithparent_module = Extension('_diewithparent', sources=['diewithparent_wrap.c', 'diewithparent.c'])

setup(name='diewithparent', version='1.0', author="SWIG Docs", description="Simple swig diewithparent from docs", ext_modules=[diewithparent_module], py_modules=["diewithparent"])

6. Build the _diewithparent.so shared library

python setup.py build_ext --inplace

Author: 南七技校林书豪