1. Importing the JAR files

This walkthrough uses Eclipse, so the JAR files have to be imported manually.

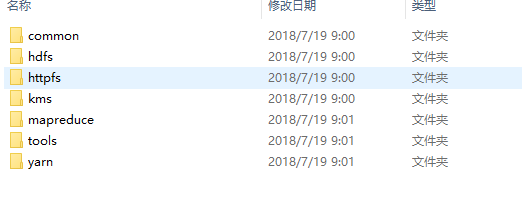

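common contains the core classes. For this walkthrough, import every JAR under both the common and hdfs folders (the three JARs written by the Hadoop authors as well as every JAR under lib).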

    // Imports used by the test methods in this post (JUnit 4 is assumed)
    import java.io.*;
    import java.net.*;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;
    import org.junit.Test;

    // Connect to the HDFS service
    @Test
    public void connect() {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://192.168.0.32:9000");
        try {
            FileSystem fileSystem = FileSystem.get(conf);
            FileStatus fileStatus = fileSystem.getFileStatus(new Path("/UPLOAD"));
            System.out.println(fileStatus.isFile());      // is it a file?
            System.out.println(fileStatus.isDirectory()); // is it a directory?
            System.out.println(fileStatus.getPath());     // full path
            System.out.println(fileStatus.getLen());      // size in bytes
            fileSystem.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

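If a test fails with a permission error, run `hadoop fs -chmod 777 /` on the cluster to grant every user full access to the root directory, then run the test again.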
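For reference, each octal digit in a mode such as 777 encodes read (4), write (2), and execute (1) for the owner, the group, and everyone else, in that order. The following is a minimal sketch that decodes a mode into rwx notation. The class and method names are hypothetical and are not part of Hadoop.

```java
// Hypothetical helper for illustration only; not a Hadoop API.
class ModeSketch {

    /** Converts a three-digit octal mode (e.g. "755") to rwx notation. */
    static String toSymbolic(String octal) {
        if (!octal.matches("[0-7]{3}")) {
            throw new IllegalArgumentException("expected three octal digits: " + octal);
        }
        StringBuilder sb = new StringBuilder();
        // Digits are ordered owner, group, others.
        for (char c : octal.toCharArray()) {
            int bits = c - '0';
            sb.append((bits & 4) != 0 ? 'r' : '-');
            sb.append((bits & 2) != 0 ? 'w' : '-');
            sb.append((bits & 1) != 0 ? 'x' : '-');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic("777")); // prints rwxrwxrwx
        System.out.println(toSymbolic("755")); // prints rwxr-xr-x
    }
}
```

Mode 777 therefore lets any user read, write, and list the root directory, which is why the tests stop failing once it is applied.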

    // Rename
    @Test
    public void mv() {
        Configuration conf = new Configuration();
        try {
            FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.0.32:9000"), conf); // connect
            boolean rename = fileSystem.rename(
                    new Path("/UPLOAD/jdk-8u221-linux-x64.tar.gz"),
                    new Path("/UPLOAD/jdk1.8.tar.gz"));
            System.out.println(rename ? "Rename succeeded" : "Rename failed");
            fileSystem.close(); // disconnect
        } catch (IOException | URISyntaxException e) {
            e.printStackTrace();
        }
    }

    // Create directories
    @Test
    public void mkdir() {
        Configuration conf = new Configuration();
        try {
            FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.0.32:9000"), conf); // connect
            fileSystem.mkdirs(new Path("/test"));
            fileSystem.mkdirs(new Path("/other/hello/world")); // missing parents are created too
            fileSystem.close(); // disconnect
        } catch (IOException | URISyntaxException e) {
            e.printStackTrace();
        }
    }

    // File upload
    @Test
    public void upload() {
        Configuration conf = new Configuration();
        try {
            FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.0.32:9000"), conf);
            FSDataOutputStream out = fileSystem.create(new Path("/hadoop.jar"));
            FileInputStream in = new FileInputStream(new File("D:\\hadoop.jar"));
            byte[] b = new byte[1024];
            int len = 0;
            while ((len = in.read(b)) != -1) {
                out.write(b, 0, len);
            }
            // Close the streams only after the whole file has been copied
            in.close();
            out.close();
            fileSystem.close();
        } catch (IOException | URISyntaxException e) {
            e.printStackTrace();
        }
    }
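The upload is a plain read/write loop over two streams, and both streams should be closed only after the loop has finished. Below is a minimal sketch of that loop using only java.io, with in-memory streams standing in for the local file and the HDFS stream. The class and method names are hypothetical. Because FSDataInputStream and FSDataOutputStream are subclasses of the standard InputStream and OutputStream, a helper like this should also accept them, though that pairing is not tested here.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper for illustration only; not a Hadoop API.
class StreamCopySketch {

    /** Copies everything from in to out in 1 KB chunks and returns the byte count. */
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[1024];
        long total = 0;
        int len;
        // read() returns -1 once the end of the stream is reached
        while ((len = in.read(buffer)) != -1) {
            out.write(buffer, 0, len);
            total += len;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = new byte[3000]; // stand-in for the file contents
        // try-with-resources closes both streams after the copy, even on failure
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out);
            System.out.println(copied + " bytes copied"); // prints 3000 bytes copied
        }
    }
}
```

Using try-with-resources instead of explicit close() calls keeps the closing logic out of the loop entirely, which avoids closing a stream while data is still being copied.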

File download

    // File download
    @Test
    public void download() {
        Configuration conf = new Configuration();
        try {
            FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.0.32:9000"), conf);
            FSDataInputStream in = fileSystem.open(new Path("/hadoop.jar"));
            FileOutputStream out = new FileOutputStream(new File("D:\\soft\\hadoop.jar"));
            byte[] b = new byte[1024];
            int len = 0;
            while ((len = in.read(b)) != -1) {
                out.write(b, 0, len);
            }
            in.close();
            out.close();
            fileSystem.close();
        } catch (IOException | URISyntaxException e) {
            e.printStackTrace();
        }
    }

    // Traverse the file system recursively
    @Test
    public void lsr() {
        Configuration conf = new Configuration();
        try {
            FileSystem fileSystem = FileSystem.get(new URI("hdfs://192.168.0.32:9000"), conf);
            FileStatus[] status = fileSystem.listStatus(new Path("/"));
            for (FileStatus f : status) {
                judge(fileSystem, f);
            }
            fileSystem.close();
        } catch (IOException | URISyntaxException e) {
            e.printStackTrace();
        }
    }

    // Print one entry and, if it is a directory, descend into it
    public void judge(FileSystem fileSystem, FileStatus s) {
        // Path relative to the root, e.g. "UPLOAD/jdk1.8.tar.gz"
        String name = s.getPath().toUri().getPath().substring(1);
        if (s.isDirectory()) {
            System.out.println("Directory: " + name);
            try {
                for (FileStatus f : fileSystem.listStatus(s.getPath())) {
                    judge(fileSystem, f);
                }
            } catch (IllegalArgumentException | IOException e) {
                e.printStackTrace();
            }
        } else {
            System.out.println("File: " + name);
        }
    }
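The name printed for each entry is the path component of its full hdfs:// URI. As a minimal sketch using only java.net.URI, that component can be extracted without hard-coding the NameNode address. The class and method names are hypothetical and are not part of Hadoop, and the sketch assumes the path contains no characters that are illegal in a URI, such as spaces.

```java
import java.net.URI;

// Hypothetical helper for illustration only; not a Hadoop API.
class HdfsPathSketch {

    /** Returns the path of a full URI relative to the root, without the leading slash. */
    static String relativeName(String fullUri) {
        // getPath() drops the scheme, host, and port, leaving e.g. "/UPLOAD/jdk1.8.tar.gz"
        String path = URI.create(fullUri).getPath();
        return path.startsWith("/") ? path.substring(1) : path;
    }

    public static void main(String[] args) {
        // prints UPLOAD/jdk1.8.tar.gz
        System.out.println(relativeName("hdfs://192.168.0.32:9000/UPLOAD/jdk1.8.tar.gz"));
        // The same call works unchanged for a different (made-up) host and port
        // prints other/hello/world
        System.out.println(relativeName("hdfs://example-host:8020/other/hello/world"));
    }
}
```

Parsing the URI rather than splitting on a fixed prefix means the traversal keeps working if the cluster address changes.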