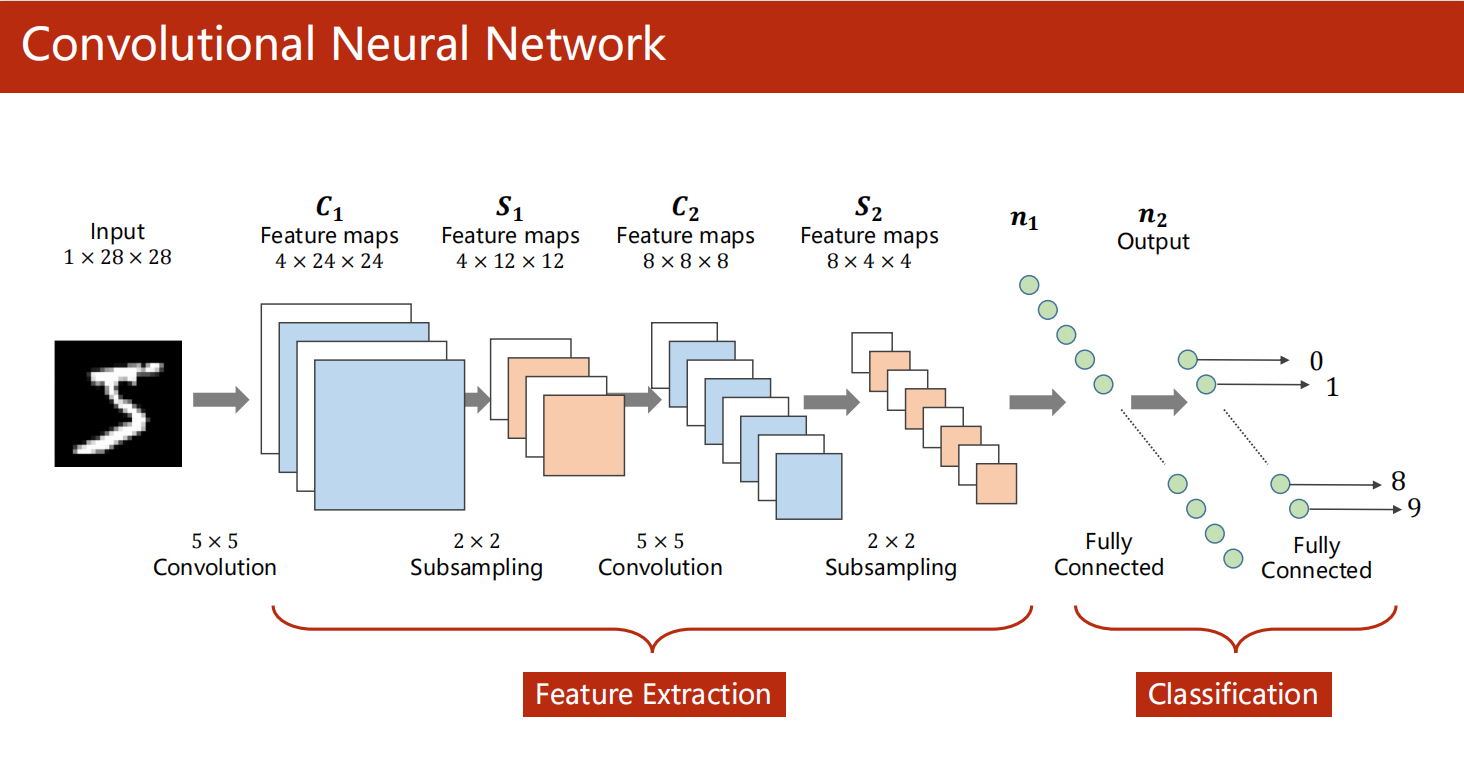

Convolutional Neural Networks

CNN

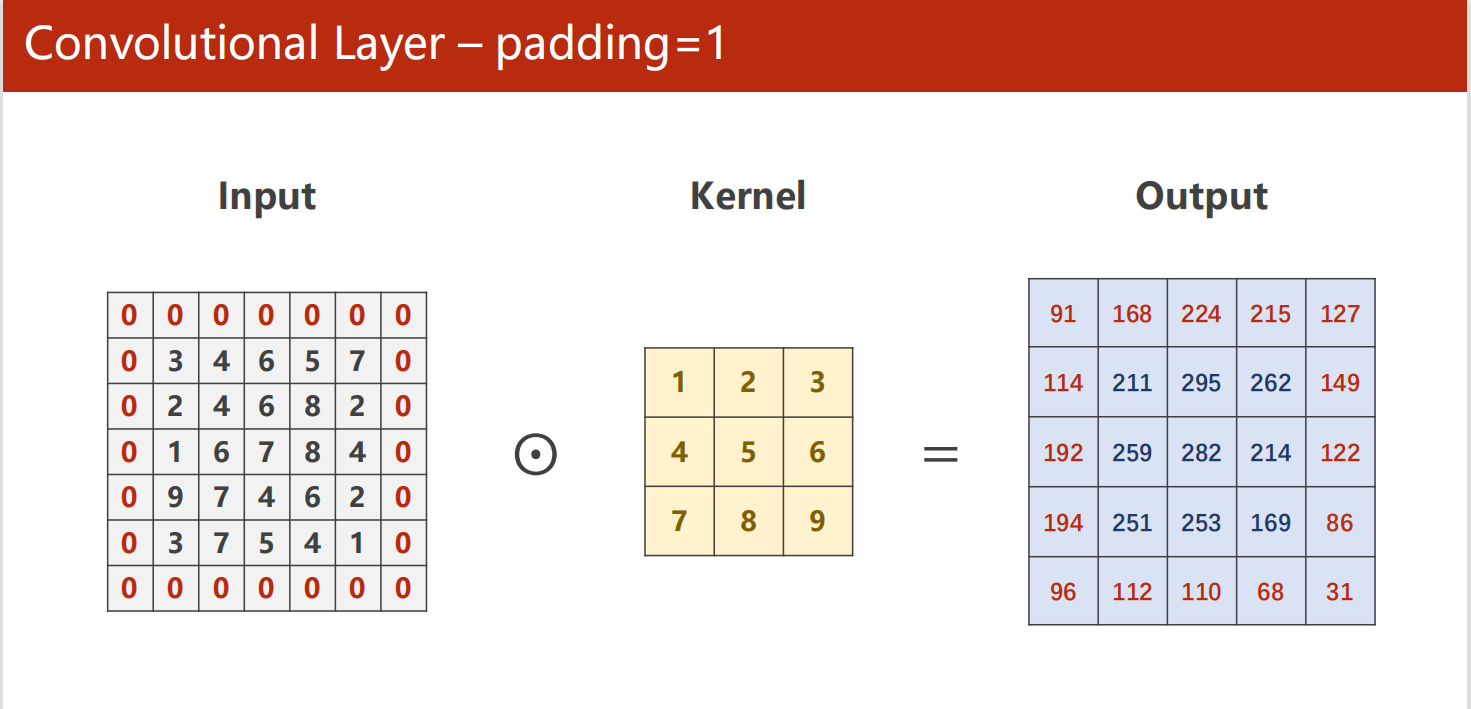

padding

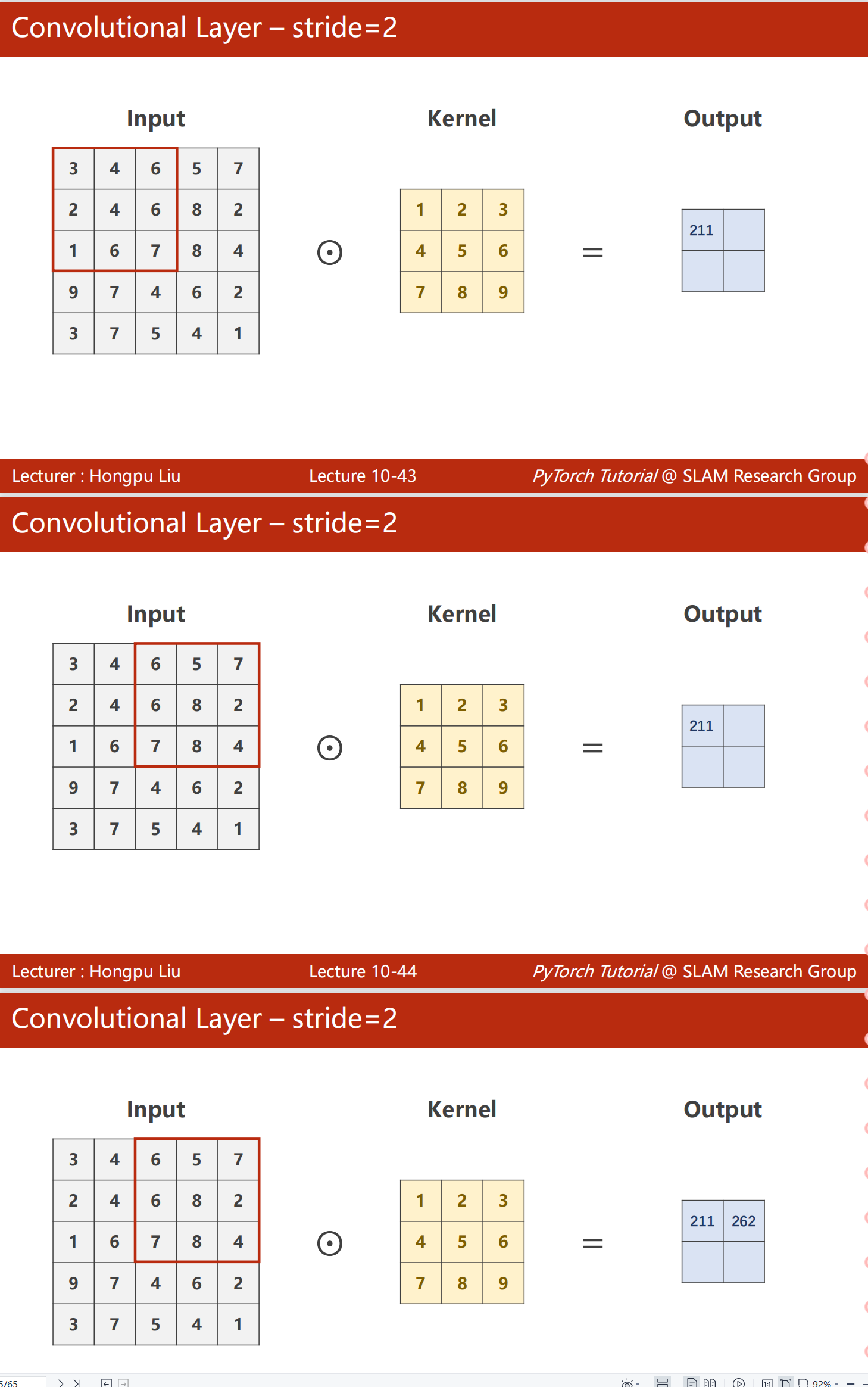
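
The effect of padding on the output size can be checked with the standard output-size formula for a convolution, `out = floor((n + 2p - k) / s) + 1`. The sketch below is a minimal illustration; the helper name `conv_output_size` is introduced here and is not part of the original notes, and dilation is assumed to be 1.

```python
def conv_output_size(n, kernel_size, padding=0, stride=1):
    """Output length along one dimension of a convolution (dilation assumed 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1


# A 5x5 input with a 3x3 kernel shrinks to 3x3 without padding.
no_padding = conv_output_size(5, kernel_size=3)

# With padding=1 the output keeps the 5x5 input size.
with_padding = conv_output_size(5, kernel_size=3, padding=1)

print(no_padding, with_padding)
```

The same formula gives 24 for a 28-pixel MNIST side with a 5x5 kernel and no padding, which is the size that appears after the first convolution in the network later in these notes.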

stride
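
As a rough illustration of stride, the sketch below slides a kernel over a small square input in plain Python, moving `stride` cells per step. The function `conv2d_naive` is a hypothetical helper written for this example (single channel, no padding, square input assumed); it is not how PyTorch implements convolution.

```python
def conv2d_naive(image, kernel, stride=1):
    """Slide `kernel` over a square `image` (lists of lists), `stride` cells per step."""
    k = len(kernel)
    out_size = (len(image) - k) // stride + 1
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            top, left = i * stride, j * stride
            # Element-wise product of the kernel and the window under it, summed
            total = sum(
                image[top + a][left + b] * kernel[a][b]
                for a in range(k)
                for b in range(k)
            )
            row.append(total)
        output.append(row)
    return output


image = [[1] * 5 for _ in range(5)]   # 5x5 input of ones
kernel = [[1] * 3 for _ in range(3)]  # 3x3 kernel of ones

stride1 = conv2d_naive(image, kernel, stride=1)  # 3x3 output
stride2 = conv2d_naive(image, kernel, stride=2)  # 2x2 output
print(len(stride1), len(stride2))
```

A larger stride skips positions, so the output shrinks faster: the same 5x5 input yields a 3x3 map at stride 1 and a 2x2 map at stride 2.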

MaxPooling
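
Max pooling keeps only the largest value in each window. The following plain-Python sketch assumes non-overlapping windows (stride equal to the window size, which is also the default of `torch.nn.MaxPool2d`); the helper `max_pool2d` is a name made up for this example.

```python
def max_pool2d(image, size=2):
    """Max over non-overlapping size x size windows of a list-of-lists image."""
    rows = len(image) // size
    cols = len(image[0]) // size
    return [
        [
            max(
                image[i * size + a][j * size + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(cols)
        ]
        for i in range(rows)
    ]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
pooled = max_pool2d(image)
print(pooled)  # [[6, 8], [14, 16]]
```

A 2x2 window halves each spatial dimension, so a 4x4 input becomes 2x2.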

CNN

Code:

    import torch
    from torchvision import transforms
    from torchvision import datasets
    from torch.utils.data import DataLoader
    import torch.nn.functional as F
    import torch.optim as optim

    batch_size = 64

    # Convert images to tensors, then normalize with the MNIST mean and std
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])

    train_dataset = datasets.MNIST(root='../dataset/mnist', train=True, download=True, transform=transform)
    train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

    test_dataset = datasets.MNIST(root='../dataset/mnist', train=False, download=True, transform=transform)
    # Shuffling is not needed for evaluation
    test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

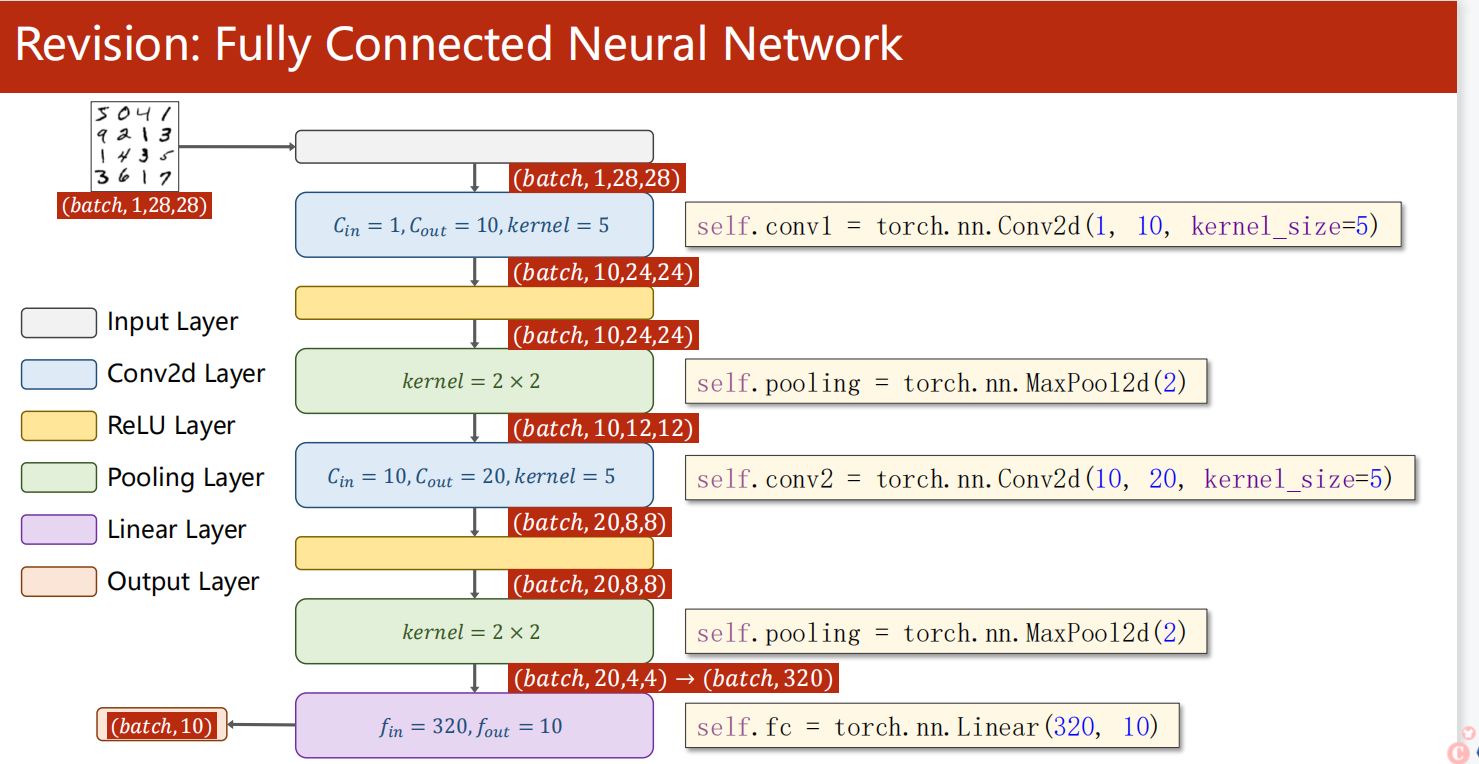

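    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
            self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
            self.pooling = torch.nn.MaxPool2d(2)
            # 20 channels * 4 * 4 spatial positions = 320 features
            self.fc = torch.nn.Linear(320, 10)

        def forward(self, x):
            # x: (batch, 1, 28, 28)
            batch_size = x.size(0)
            x = self.pooling(torch.relu(self.conv1(x)))  # -> (batch, 10, 12, 12)
            x = self.pooling(torch.relu(self.conv2(x)))  # -> (batch, 20, 4, 4)
            x = x.view(batch_size, -1)  # flatten to (batch, 320)
            x = self.fc(x)  # raw logits; CrossEntropyLoss applies softmax itself
            return x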
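
The input size of 320 for the fully connected layer can be verified by pushing a dummy tensor through the same convolution and pooling layers and printing the shapes. This is only a sketch: the layers are rebuilt here with the hyperparameters from `Net`, and the all-zero input is an assumption that stands in for one MNIST image.

```python
import torch

# Same layer hyperparameters as in Net
conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
pooling = torch.nn.MaxPool2d(2)

x = torch.zeros(1, 1, 28, 28)  # one dummy MNIST-sized image

x = pooling(torch.relu(conv1(x)))
shape_after_block1 = tuple(x.shape)  # 28 -> 24 (conv) -> 12 (pool)

x = pooling(torch.relu(conv2(x)))
shape_after_block2 = tuple(x.shape)  # 12 -> 8 (conv) -> 4 (pool)

flat_features = x.view(1, -1).size(1)  # 20 * 4 * 4
print(shape_after_block1, shape_after_block2, flat_features)
```

Running a dummy input like this is a convenient way to find the right `in_features` value whenever the convolutional part of a network changes.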

    model = Net()
    # Use the first GPU if one is available, otherwise fall back to the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)

    criterion = torch.nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


    def train(epoch):
        running_loss = 0.0
        for batch_idx, data in enumerate(train_loader, 0):
            inputs, target = data
            inputs = inputs.to(device)
            target = target.to(device)
            optimizer.zero_grad()

            # Forward pass, backward pass, parameter update
            outputs = model(inputs)
            loss = criterion(outputs, target)
            loss.backward()
            optimizer.step()

            running_loss += loss.item()

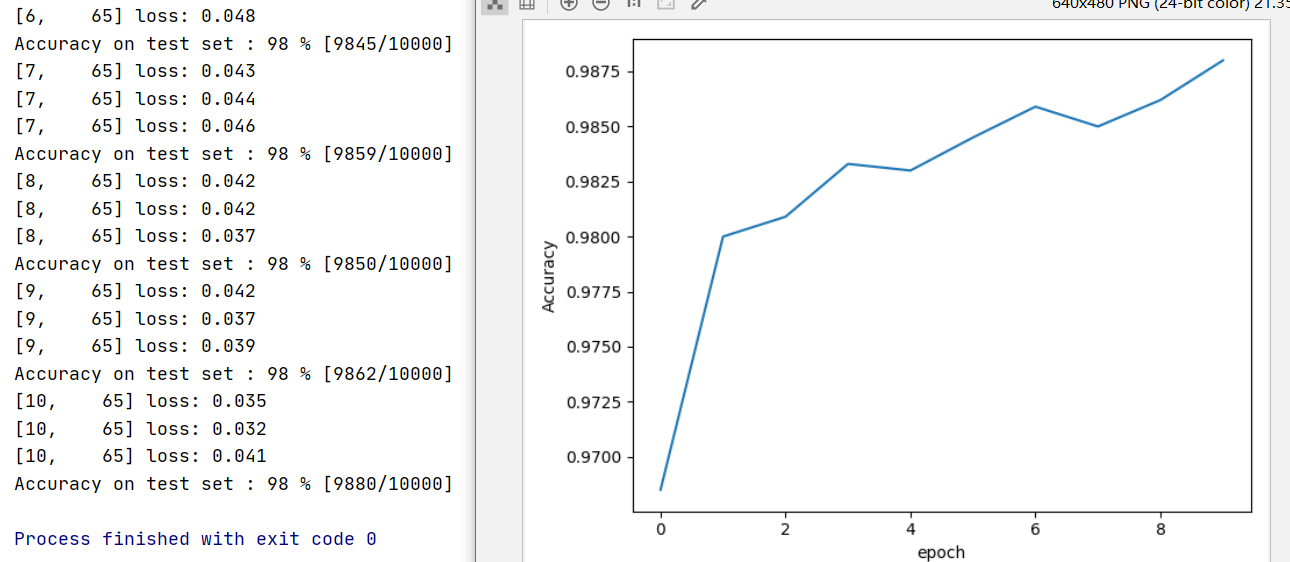

            # Report the mean loss over the last 300 batches
            if batch_idx % 300 == 299:
                print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
                running_loss = 0.0

    def test():
        correct = 0
        total = 0
        with torch.no_grad():  # gradients are not needed for evaluation
            for data in test_loader:
                images, labels = data
                images, labels = images.to(device), labels.to(device)
                outputs = model(images)
                # The predicted class is the index of the largest logit
                _, predicted = torch.max(outputs.data, dim=1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()
        # `accuracy` is the module-level list created in the main block
        accuracy.append(correct / total)
        print('Accuracy on test set : %d %% [%d/%d]' % (100 * correct / total, correct, total))
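
The prediction step in `test()` can be seen in isolation on a tiny batch. The logits and labels below are made-up values chosen for illustration; only `torch.max(..., dim=1)` and the comparison mirror the code above.

```python
import torch

# Hypothetical logits for a batch of 3 samples and 4 classes
outputs = torch.tensor([[0.1, 2.0, 0.3, 0.0],
                        [1.5, 0.2, 0.1, 0.4],
                        [0.0, 0.1, 0.2, 3.0]])
labels = torch.tensor([1, 0, 2])

# torch.max along dim=1 returns (largest value, its index) for each row
_, predicted = torch.max(outputs, dim=1)
correct = (predicted == labels).sum().item()
print(predicted.tolist(), correct)  # [1, 0, 3] 2
```

The index of the largest logit is taken as the predicted class, so two of the three samples count as correct here.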

    import matplotlib.pyplot as plt

    if __name__ == '__main__':
        epoch_x = []
        accuracy = []  # filled by test() once per epoch
        for epoch in range(10):
            train(epoch)
            test()
            epoch_x.append(epoch)

        # Plot test accuracy against epoch
        fig = plt.figure()
        ax = fig.add_subplot(1, 1, 1)
        ax.set_xlabel("epoch")
        ax.set_ylabel("Accuracy")
        ax.plot(epoch_x, accuracy)
        plt.show()

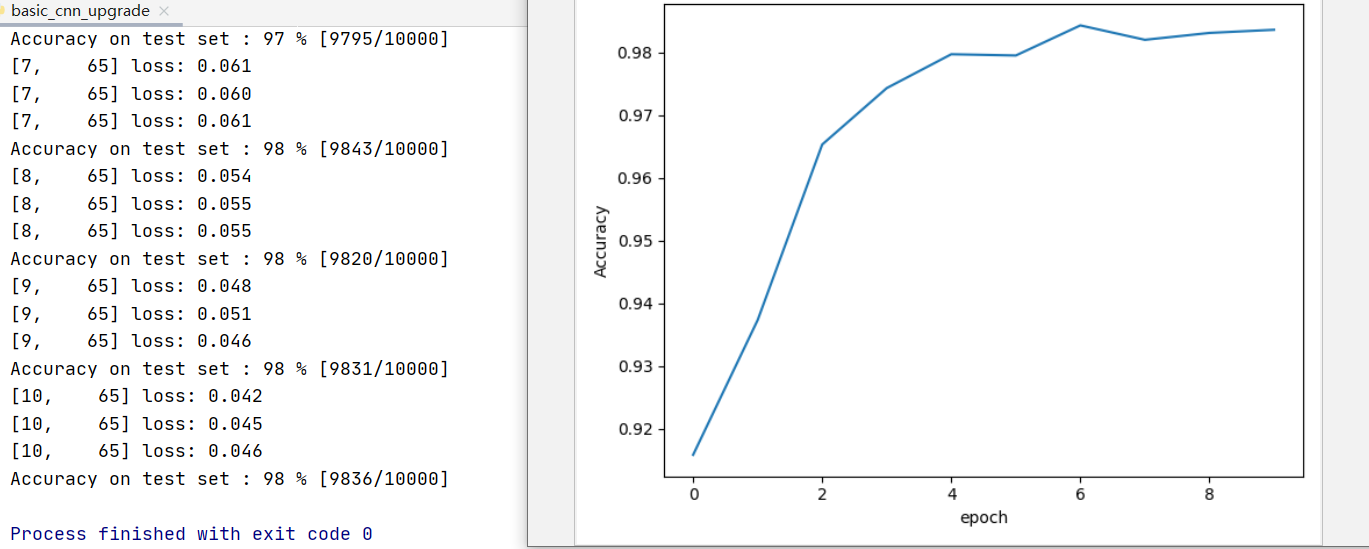

Results:

Exercise

Code:

    import torch
    from torchvision import transforms
    from torchvision import datasets
    from torch.utils.data import DataLoader
    import torch.nn.functional as F
    import torch.optim as optim

    batch_size = 64

    # Convert images to tensors, then normalize with the MNIST mean and std
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])

    train_dataset = datasets.MNIST(root='../dataset/mnist', train=True, download=True, transform=transform)
    train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)

    test_dataset = datasets.MNIST(root='../dataset/mnist', train=False, download=True, transform=transform)
    # Shuffling is not needed for evaluation
    test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

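    class Net(torch.nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
            self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=3)
            self.conv3 = torch.nn.Conv2d(20, 40, kernel_size=3)
            self.pooling = torch.nn.MaxPool2d(2)
            self.pooling2 = torch.nn.MaxPool2d(3)
            # 40 channels * 1 * 1 spatial position = 40 features
            self.l1 = torch.nn.Linear(40, 30)
            self.l2 = torch.nn.Linear(30, 20)
            self.l3 = torch.nn.Linear(20, 10)

        def forward(self, x):
            # x: (batch, 1, 28, 28)
            batch_size = x.size(0)
            x = self.pooling(torch.relu(self.conv1(x)))   # -> (batch, 10, 12, 12)
            x = self.pooling(torch.relu(self.conv2(x)))   # -> (batch, 20, 5, 5)
            x = self.pooling2(torch.relu(self.conv3(x)))  # -> (batch, 40, 1, 1)
            x = x.view(batch_size, -1)  # flatten to (batch, 40)
            # ReLU between the linear layers; without a nonlinearity the
            # three layers would collapse into a single linear map
            x = torch.relu(self.l1(x))
            x = torch.relu(self.l2(x))
            return self.l3(x)  # raw logits for CrossEntropyLoss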
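
The `Linear(40, 30)` input size follows from tracing the spatial size through each layer of the exercise network. The sketch below does this with plain arithmetic; the helper `out_size` and the `layers` list are written for this example and assume no padding, with each pooling layer using a stride equal to its kernel size (the `MaxPool2d` default).

```python
def out_size(n, kernel_size, stride=1):
    """Output length for an unpadded convolution or pooling window."""
    return (n - kernel_size) // stride + 1


# (layer name, kernel size, stride) in forward order
layers = [
    ("conv1", 5, 1), ("pooling", 2, 2),
    ("conv2", 3, 1), ("pooling", 2, 2),
    ("conv3", 3, 1), ("pooling2", 3, 3),
]

size = 28  # MNIST images are 28x28
sizes = []
for name, kernel_size, stride in layers:
    size = out_size(size, kernel_size, stride)
    sizes.append(size)
    print(f"{name}: {size}x{size}")

flattened = 40 * size * size  # conv3 has 40 output channels
print("flattened features:", flattened)
```

The spatial size goes 28, 24, 12, 10, 5, 3, 1, so only the 40 channels remain after the last pooling step.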

    model = Net()
    # Use the first GPU if one is available, otherwise fall back to the CPU
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)

    criterion = torch.nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)


    def train(epoch):
        running_loss = 0.0
        for batch_idx, data in enumerate(train_loader, 0):
            inputs, target = data
            inputs = inputs.to(device)
            target = target.to(device)
            optimizer.zero_grad()

            # Forward pass, backward pass, parameter update
            outputs = model(inputs)
            loss = criterion(outputs, target)
            loss.backward()
            optimizer.step()

            running_loss += loss.item()
            # Report the mean loss over the last 300 batches
            if batch_idx % 300 == 299:
                print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
                running_loss = 0.0

    def test():
        correct = 0
        total = 0
        with torch.no_grad():  # gradients are not needed for evaluation
            for data in test_loader:
                images, labels = data
                images, labels = images.to(device), labels.to(device)
                outputs = model(images)
                # The predicted class is the index of the largest logit
                _, predicted = torch.max(outputs.data, dim=1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()
        # `accuracy` is the module-level list created in the main block
        accuracy.append(correct / total)
        print('Accuracy on test set : %d %% [%d/%d]' % (100 * correct / total, correct, total))

    import matplotlib.pyplot as plt

    if __name__ == '__main__':
        epoch_x = []
        accuracy = []  # filled by test() once per epoch
        for epoch in range(10):
            train(epoch)
            test()
            epoch_x.append(epoch)

        # Plot test accuracy against epoch
        fig = plt.figure()
        ax = fig.add_subplot(1, 1, 1)
        ax.set_xlabel("epoch")
        ax.set_ylabel("Accuracy")
        ax.plot(epoch_x, accuracy)
        plt.show()

Results: