Crawler task:

Crawl the jokes (段子) section of Qiushibaike (https://www.qiushibaike.com/) and collect every joke, its vote count, and its top replies (神回复).

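Steps:

- Page through the listing to find the URL pattern, then build the list of page URLs

- Inspect the elements: all of the page content is present in the Elements panel, so the pages can be requested directly

- Extract the required content with XPath

- Save the data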
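The URL-building step can be sketched with the standard library alone. The listing pages follow the pattern `https://www.qiushibaike.com/text/page/{}/` (taken from the spider code in this post), so the outer URL list is just that pattern formatted with page numbers. The detail links found on a listing page are site-relative, so they need the site root in front of them. This is a minimal sketch: the helper names `build_page_urls` and `absolutize` and the sample href are hypothetical, and `urllib.parse.urljoin` is used instead of plain string concatenation.

```python
from typing import List
from urllib.parse import urljoin

# Listing-page URL pattern (from the spider code in this post); {} is the page number
PAGE_PATTERN = "https://www.qiushibaike.com/text/page/{}/"
SITE_ROOT = "https://www.qiushibaike.com"


def build_page_urls(page_count: int) -> List[str]:
    """Return listing-page URLs for pages 1..page_count (hypothetical helper)."""
    return [PAGE_PATTERN.format(n) for n in range(1, page_count + 1)]


def absolutize(hrefs: List[str]) -> List[str]:
    """Turn site-relative hrefs into absolute URLs (hypothetical helper)."""
    return [urljoin(SITE_ROOT, href) for href in hrefs]


if __name__ == "__main__":
    print(build_page_urls(3))
    print(absolutize(["/article/123"]))  # sample href, not real data
    # prints ['https://www.qiushibaike.com/article/123']
```

Unlike concatenation, `urljoin` also leaves a link that is already absolute unchanged.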

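Logic:

- Build the outer URL list (listing pages) and iterate over it

- Request each outer URL and get the response

- Extract the list of inner URLs (detail pages)

- Iterate over the inner URLs

- Request each inner URL and get the response

- Extract the required data (joke text, vote count, top replies)

- Save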
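The outer/inner loop described above can be sketched independently of any particular site by passing the fetching, extracting and saving steps in as functions. This is a minimal sketch, not the post's code: `crawl` and its parameter names are hypothetical, and the fake in-memory site stands in for real HTTP requests. Catching errors per detail page is a design choice, so one failing item is skipped without losing the rest of its listing page.

```python
from typing import Callable, Dict, Iterable, List


def crawl(
    outer_urls: Iterable[str],
    fetch: Callable[[str], str],
    extract_inner_urls: Callable[[str], List[str]],
    extract_item: Callable[[str], Dict],
    save: Callable[[Dict], None],
) -> int:
    """Visit listing pages, then every detail page they link to; return items saved."""
    saved = 0
    for page_no, outer_url in enumerate(outer_urls, start=1):
        try:
            inner_urls = extract_inner_urls(fetch(outer_url))
        except Exception as exc:  # a bad listing page should not stop the whole crawl
            print(f"page {page_no}: {exc!r}")
            continue
        for item_no, inner_url in enumerate(inner_urls, start=1):
            try:
                item = extract_item(fetch(inner_url))
            except Exception as exc:  # skip only the failing detail page
                print(f"page {page_no}, item {item_no}: {exc!r}")
                continue
            save(item)
            saved += 1
    return saved


if __name__ == "__main__":
    # Hypothetical in-memory "site" with made-up data
    fake_site = {
        "list/1": "detail/a detail/b",
        "list/2": "detail/c",
        "detail/a": "joke A",
        "detail/b": "joke B",
        "detail/c": "joke C",
    }
    results: List[Dict] = []
    count = crawl(
        ["list/1", "list/2"],
        fetch=fake_site.__getitem__,   # a dict lookup plays the role of an HTTP GET
        extract_inner_urls=str.split,  # "links" are whitespace-separated here
        extract_item=lambda html: {"text": html},
        save=results.append,
    )
    print(count, results)
    # prints 3 [{'text': 'joke A'}, {'text': 'joke B'}, {'text': 'joke C'}]
```

In a real spider, `fetch` would wrap `requests.get` and the two extractors would be the XPath-based parsing functions.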

Code:

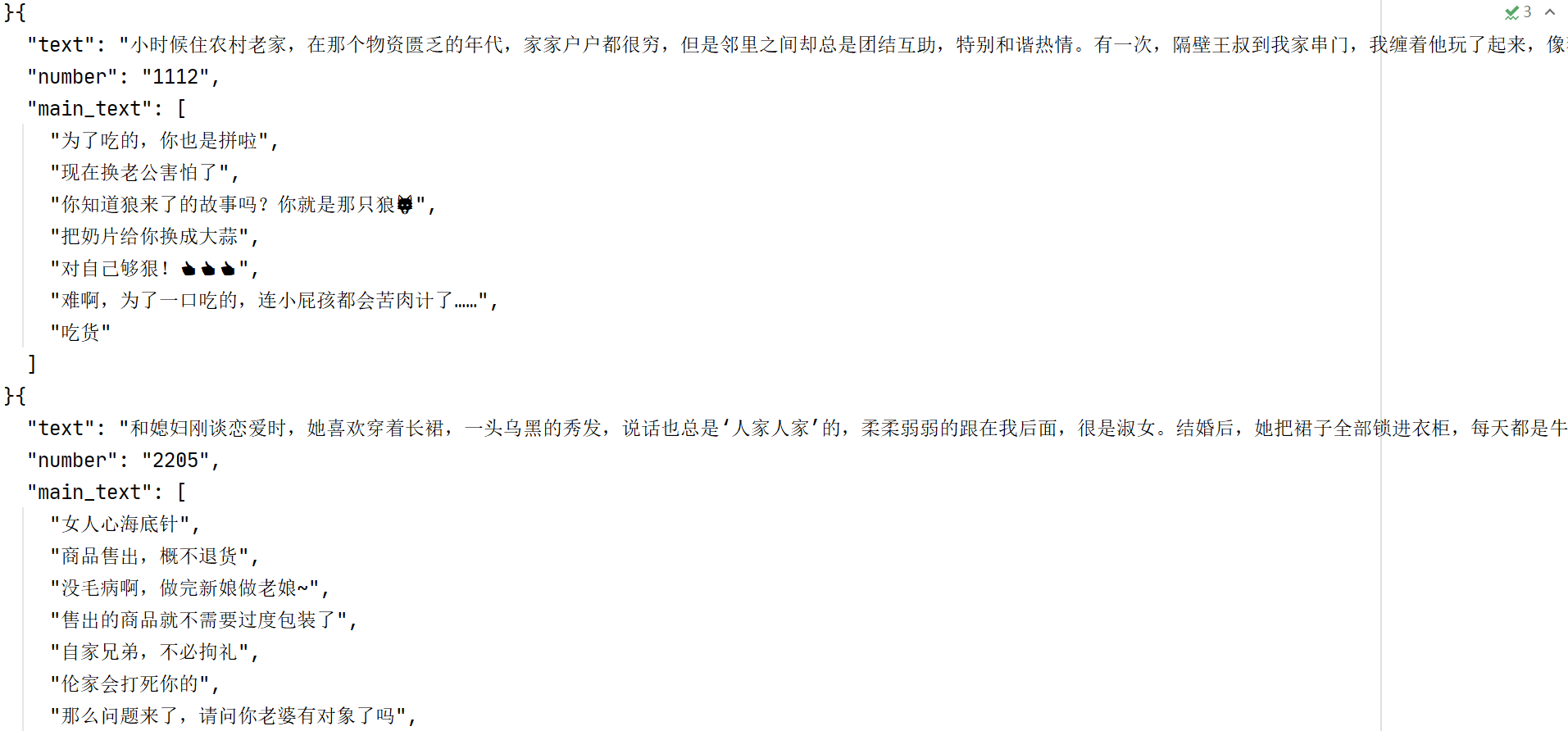

```python
import json

import requests
from lxml import etree


class QiuShiSpider:
    def __init__(self):
        # Listing pages of the jokes section; {} is the page number
        self.start_url = "https://www.qiushibaike.com/text/page/{}/"
        self.base_url = "https://www.qiushibaike.com"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/86.0.4240.11"
        }

    def parse_url(self, url):
        """Send a GET request and return the decoded HTML."""
        res = requests.get(url, headers=self.headers, timeout=10)
        res.raise_for_status()  # treat 4xx/5xx responses as errors
        return res.content.decode()

    def get_urls_inner(self, html_str):
        """Extract the detail-page links from a listing page."""
        html = etree.HTML(html_str)
        # "contentHerf" (sic) matches the class name in the site's own markup
        urls_inner = html.xpath("//a[@class='contentHerf']/@href")
        return [self.base_url + href for href in urls_inner]

    def get_contents(self, html_str):
        """Extract the joke text, vote count and top replies from a detail page."""
        html = etree.HTML(html_str)
        text = "".join(html.xpath("//div[@class='content']/text()")).strip()
        votes = html.xpath("//span[@class='stats-vote']/i/text()")
        number = votes[0] if votes else None  # None when the page has no vote count
        main_text_list = html.xpath("//div/span[@class='body']/text()")
        return text, number, main_text_list

    def save(self, content_dic):
        # One JSON object per line, so the appended file stays parseable
        with open("qs4.txt", "a", encoding="utf-8") as f:
            f.write(json.dumps(content_dic, ensure_ascii=False) + "\n")

    def run(self):
        # Build the listing-page URLs (pages 1-13) and visit each one
        urls_outer = [self.start_url.format(n) for n in range(1, 14)]
        for page_no, url_outer in enumerate(urls_outer, start=1):
            try:
                urls_inner = self.get_urls_inner(self.parse_url(url_outer))
            except Exception as e:
                print("Page {} failed: {}".format(page_no, e))
                continue
            for item_no, url_inner in enumerate(urls_inner, start=1):
                try:
                    text, number, main_text_list = self.get_contents(self.parse_url(url_inner))
                except Exception as e:
                    # A failing detail page skips only that item
                    print("Page {}, item {} failed: {}".format(page_no, item_no, e))
                    continue
                self.save({"text": text, "number": number, "main_text": main_text_list})
                print("Page {}, item {}".format(page_no, item_no))


if __name__ == "__main__":
    qs = QiuShiSpider()
    qs.run()
```
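When each scraped record is appended to the output file separately, a convenient format is JSON Lines: one compact JSON object per line. Concatenated `indent=2` dumps with no separator do not form a valid JSON document, whereas a JSON Lines file can be appended to freely and read back line by line. A minimal sketch, assuming that format; `append_record`, `load_records` and the sample record are hypothetical.

```python
import json
import os
import tempfile
from typing import Dict, List


def append_record(path: str, record: Dict) -> None:
    """Append one record as a single compact JSON line (hypothetical helper)."""
    with open(path, "a", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese text readable in the file
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_records(path: str) -> List[Dict]:
    """Read every non-empty line back as a JSON object (hypothetical helper)."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "qs.jsonl")
    append_record(path, {"text": "sample joke", "number": "123", "main_text": []})
    append_record(path, {"text": "another", "number": None, "main_text": ["reply"]})
    print(load_records(path))
```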