References:

- Install guide: https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide: https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

- Ceph: http://docs.ceph.com/docs/master/start/intro/

X. Nova Controller Node Cluster

1. Create the nova databases

# Create the databases on any one controller node; the data is replicated to the other nodes automatically. controller01 is used as the example.
# The nova service uses four databases, all granted to the nova user.
# placement handles resource tracking; its most commonly used APIs fetch allocation candidates and claim resources.
[root@controller01 ~]# mysql -u root -pmysql_pass
MariaDB [(none)]> CREATE DATABASE nova_api;
MariaDB [(none)]> CREATE DATABASE nova;
MariaDB [(none)]> CREATE DATABASE nova_cell0;
MariaDB [(none)]> CREATE DATABASE nova_placement;
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_placement.* TO 'nova'@'localhost' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_placement.* TO 'nova'@'%' IDENTIFIED BY 'nova_dbpass';
MariaDB [(none)]> flush privileges;
MariaDB [(none)]> exit;
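The twelve statements above follow one pattern (four databases, two host patterns each), so they can be generated rather than typed. The following is a minimal sketch, not part of the original procedure: the function name `gen_nova_sql` and the `NOVA_DBPASS` variable are illustrative, and `IF NOT EXISTS` is added only so that a re-run does not fail. It only prints SQL; nothing is sent to the database.

```shell
#!/usr/bin/env bash
# Sketch: print the CREATE/GRANT statements for the four nova databases.
# NOVA_DBPASS is a hypothetical variable; it defaults to the placeholder
# password used in this guide.
NOVA_DBPASS="${NOVA_DBPASS:-nova_dbpass}"

gen_nova_sql() {
    local db host
    for db in nova_api nova nova_cell0 nova_placement; do
        echo "CREATE DATABASE IF NOT EXISTS ${db};"
        # Grant to both the local socket user and remote hosts.
        for host in localhost '%'; do
            echo "GRANT ALL PRIVILEGES ON ${db}.* TO 'nova'@'${host}' IDENTIFIED BY '${NOVA_DBPASS}';"
        done
    done
    echo "FLUSH PRIVILEGES;"
}

# Dry run: show the statements without executing them.
gen_nova_sql
```

After reviewing the output, it could be piped to the client, for example `gen_nova_sql | mysql -u root -p`.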

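2. Create the nova/placement API

# Run on any one controller node; controller01 is used as the example.
# Calling nova services requires credentials; sourcing the environment script is enough.
[root@controller01 ~]# . admin-openrc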

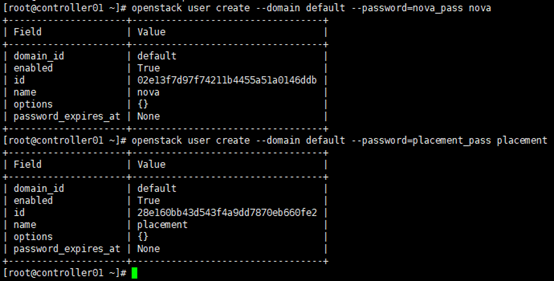

1) Create the nova/placement users

# The service project was already created in the glance chapter.
# The nova/placement users belong to the "default" domain.
[root@controller01 ~]# openstack user create --domain default --password=nova_pass nova
[root@controller01 ~]# openstack user create --domain default --password=placement_pass placement

2) Grant roles to nova/placement

# Give the nova/placement users the admin role
[root@controller01 ~]# openstack role add --project service --user nova admin
[root@controller01 ~]# openstack role add --project service --user placement admin

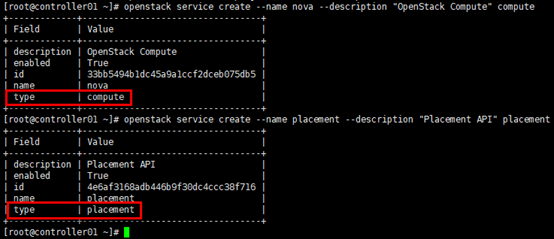

3) Create the nova/placement service entities

# The nova service entity has type "compute".
# The placement service entity has type "placement".
[root@controller01 ~]# openstack service create --name nova --description "OpenStack Compute" compute
[root@controller01 ~]# openstack service create --name placement --description "Placement API" placement

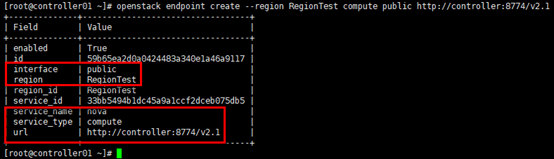

4) Create the nova/placement API endpoints

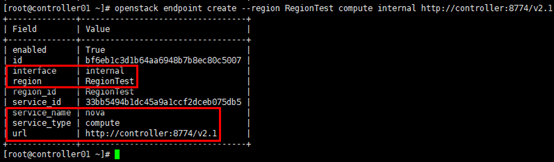

# Make sure --region matches the region created when the admin user was bootstrapped.
# All API addresses use the VIP; if public/internal/admin use different VIPs, take care to distinguish them.
# The nova-api service type is compute; the placement-api service type is placement.
# nova public api
[root@controller01 ~]# openstack endpoint create --region RegionTest compute public http://controller:8774/v2.1

# nova internal api
[root@controller01 ~]# openstack endpoint create --region RegionTest compute internal http://controller:8774/v2.1

# nova admin api
[root@controller01 ~]# openstack endpoint create --region RegionTest compute admin http://controller:8774/v2.1

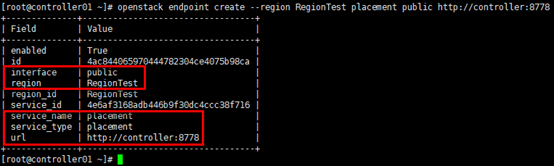

# placement public api
[root@controller01 ~]# openstack endpoint create --region RegionTest placement public http://controller:8778

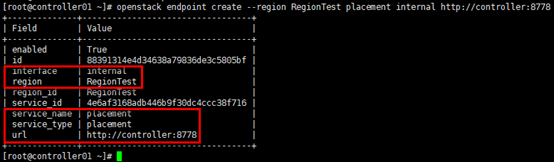

# placement internal api
[root@controller01 ~]# openstack endpoint create --region RegionTest placement internal http://controller:8778

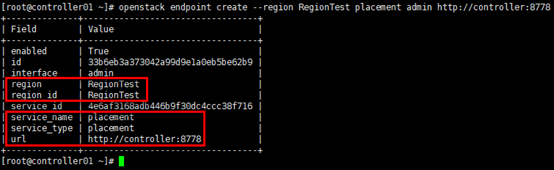

# placement admin api
[root@controller01 ~]# openstack endpoint create --region RegionTest placement admin http://controller:8778
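The six endpoint commands differ only in service type, interface, and URL, so a loop keeps the region and URLs consistent. This is a minimal sketch under the assumptions of this guide (region `RegionTest`, one VIP hostname `controller` for all three interfaces); the function name `gen_endpoint_cmds` and the `REGION` variable are illustrative. It prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: print the six "openstack endpoint create" commands.
# REGION is a hypothetical variable; it must match the region created
# when the admin user was bootstrapped.
REGION="${REGION:-RegionTest}"

gen_endpoint_cmds() {
    local iface
    # nova-api: service type "compute", port 8774
    for iface in public internal admin; do
        echo "openstack endpoint create --region ${REGION} compute ${iface} http://controller:8774/v2.1"
    done
    # placement-api: service type "placement", port 8778
    for iface in public internal admin; do
        echo "openstack endpoint create --region ${REGION} placement ${iface} http://controller:8778"
    done
}

# Dry run: list the commands for review.
gen_endpoint_cmds
```

If public, internal, and admin use different VIPs, the URL would need to vary per interface, which this sketch does not handle.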

3. Install nova

# Install the nova services on all controller nodes; controller01 is used as the example.
[root@controller01 ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api -y

4. Configure nova.conf

# Run on all controller nodes; controller01 is used as the example.
# Note the "my_ip" parameter; change it for each node.
# Note the ownership of nova.conf: root:nova
[root@controller01 ~]# cp /etc/nova/nova.conf /etc/nova/nova.conf.bak
[root@controller01 ~]# egrep -v "^$|^#" /etc/nova/nova.conf
[DEFAULT]
my_ip=172.30.200.31
use_neutron=true
firewall_driver=nova.virt.firewall.NoopFirewallDriver
enabled_apis=osapi_compute,metadata
osapi_compute_listen=$my_ip
osapi_compute_listen_port=8774
metadata_listen=$my_ip
metadata_listen_port=8775
# When rabbitmq is reached through haproxy, services hit connection timeouts and reconnect; this is visible in the logs of each service and of rabbitmq.
# transport_url=rabbit://openstack:rabbitmq_pass@controller:5673
# rabbitmq has its own clustering mechanism, and the official documentation recommends connecting to the rabbitmq cluster directly. With this method services sometimes report errors at startup, for reasons unknown; if you do not see this, connecting directly to the cluster rather than through haproxy is strongly recommended.
transport_url=rabbit://openstack:rabbitmq_pass@controller01:5672,controller02:5672,controller03:5672
[api]
auth_strategy=keystone
[api_database]
connection=mysql+pymysql://nova:nova_dbpass@controller/nova_api
[barbican]
[cache]
backend=oslo_cache.memcache_pool
enabled=True
memcache_servers=controller01:11211,controller02:11211,controller03:11211
[cells]
[cinder]
[compute]
[conductor]
[console]
[consoleauth]
[cors]
[crypto]
[database]
connection = mysql+pymysql://nova:nova_dbpass@controller/nova
[devices]
[ephemeral_storage_encryption]
[filter_scheduler]
[glance]
api_servers = http://controller:9292
[guestfs]
[healthcheck]
[hyperv]
[ironic]
[key_manager]
[keystone]
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller01:11211,controller02:11211,controller03:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = nova
password = nova_pass
[libvirt]
[matchmaker_redis]
[metrics]
[mks]
[neutron]
[notifications]
[osapi_v21]
[oslo_concurrency]
lock_path=/var/lib/nova/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[pci]
[placement]
region_name = RegionTest
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:35357/v3
username = placement
password = placement_pass
[quota]
[rdp]
[remote_debug]
[scheduler]
[serial_console]
[service_user]
[spice]
[upgrade_levels]
[vault]
[vendordata_dynamic_auth]
[vmware]
[vnc]
enabled=true
server_listen=$my_ip
server_proxyclient_address=$my_ip
novncproxy_base_url=http://$my_ip:6080/vnc_auto.html
novncproxy_host=$my_ip
novncproxy_port=6080
[workarounds]
[wsgi]
[xenserver]
[xvp]

5. Configure 00-nova-placement-api.conf

# Run on all controller nodes; controller01 is used as the example.
# Change the listen address to match each node.
[root@controller01 ~]# cp /etc/httpd/conf.d/00-nova-placement-api.conf /etc/httpd/conf.d/00-nova-placement-api.conf.bak
[root@controller01 ~]# sed -i "s/Listen 8778/Listen 172.30.200.31:8778/g" /etc/httpd/conf.d/00-nova-placement-api.conf
[root@controller01 ~]# sed -i "s/*:8778/172.30.200.31:8778/g" /etc/httpd/conf.d/00-nova-placement-api.conf
[root@controller01 ~]# echo "

#Placement API
<Directory /usr/bin>
<IfVersion >= 2.4>
Require all granted
</IfVersion>
<IfVersion < 2.4>
Order allow,deny
Allow from all
</IfVersion>
</Directory>
" >> /etc/httpd/conf.d/00-nova-placement-api.conf
# Restart httpd so that placement-api starts listening on its port
[root@controller01 ~]# systemctl restart httpd
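Because the listen address differs on every controller, the two `sed` substitutions above can be wrapped in a small function that takes the file and the node IP as arguments. This is a sketch only: the function name `set_placement_listen` is illustrative, it assumes GNU sed (as shipped on CentOS) for `-i`, and it anchors the first pattern to the start of the line so that a second run leaves an already-rewritten `Listen` line alone.

```shell
#!/usr/bin/env bash
# Sketch: bind the placement vhost to one node's address.
# Usage (hypothetical helper): set_placement_listen <conf-file> <node-ip>
set_placement_listen() {
    local conf="$1" ip="$2"
    # Keep the first backup only, so a re-run does not overwrite it.
    [ -e "${conf}.bak" ] || cp -p "$conf" "${conf}.bak"
    # Rewrite "Listen 8778" and the "*:8778" in the VirtualHost line.
    sed -i \
        -e "s/^Listen 8778/Listen ${ip}:8778/" \
        -e "s/\*:8778/${ip}:8778/" \
        "$conf"
}
```

On controller01 this would be called as `set_placement_listen /etc/httpd/conf.d/00-nova-placement-api.conf 172.30.200.31`, followed by `systemctl restart httpd`.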

6. Sync the nova databases

1) Sync the nova databases

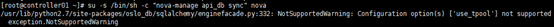

# Run on any one controller node.
# Sync the nova-api database
[root@controller01 ~]# su -s /bin/sh -c "nova-manage api_db sync" nova
# Register the cell0 database
[root@controller01 ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
# Create the cell1 cell
[root@controller01 ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
# Sync the nova database; ignore the "deprecated" messages
[root@controller01 ~]# su -s /bin/sh -c "nova-manage db sync" nova

Note:

In this version, importing the tables during the database sync reports the following:

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported
exception.NotSupportedWarning

The fix is as follows:

bug: https://bugs.launchpad.net/nova/+bug/1746530

patch: https://github.com/openstack/oslo.db/commit/c432d9e93884d6962592f6d19aaec3f8f66ac3a2

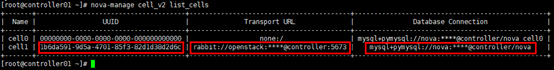

2) Verify

# cell0 and cell1 are registered correctly
[root@controller01 ~]# nova-manage cell_v2 list_cells

# Inspect the tables
[root@controller01 ~]# mysql -h controller01 -u nova -pnova_dbpass -e "use nova_api;show tables;"
[root@controller01 ~]# mysql -h controller01 -u nova -pnova_dbpass -e "use nova;show tables;"
[root@controller01 ~]# mysql -h controller01 -u nova -pnova_dbpass -e "use nova_cell0;show tables;"

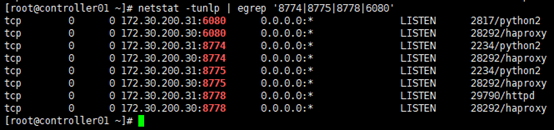

7. Start the services

# Run on all controller nodes; controller01 is used as the example.
# Enable at boot
[root@controller01 ~]# systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
# Start
[root@controller01 ~]# systemctl restart openstack-nova-api.service
[root@controller01 ~]# systemctl restart openstack-nova-consoleauth.service
[root@controller01 ~]# systemctl restart openstack-nova-scheduler.service
[root@controller01 ~]# systemctl restart openstack-nova-conductor.service
[root@controller01 ~]# systemctl restart openstack-nova-novncproxy.service
# Check status
[root@controller01 ~]# systemctl status openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
# Check ports
[root@controller01 ~]# netstat -tunlp | egrep '8774|8775|8778|6080'
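The `egrep` port check above shows which ports are listening but leaves it to the reader to spot a missing one. As a hedged sketch, the filter below reads a listener listing on standard input and prints only the expected ports that are absent; the function name `missing_ports` is illustrative, and it assumes the `address:port` followed by whitespace layout that `netstat -tunlp` produces.

```shell
#!/usr/bin/env bash
# Sketch: report which expected ports do not appear in a listener listing.
# Usage (hypothetical helper): netstat -tunlp | missing_ports 8774 8775 8778 6080
missing_ports() {
    local listing port
    listing="$(cat)"    # read the whole listing from stdin once
    for port in "$@"; do
        # Match ":<port>" followed by whitespace, i.e. a local address column.
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
            echo "$port"
        fi
    done
}
```

An empty result means all four ports (nova-api 8774, metadata 8775, placement 8778, novncproxy 6080) were found.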

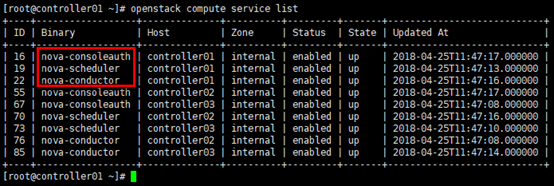

8. Verify

[root@controller01 ~]# . admin-openrc
# List the service components and check their state; "nova service-list" also works
[root@controller01 ~]# openstack compute service list

# Show the API endpoints
[root@controller01 ~]# openstack catalog list

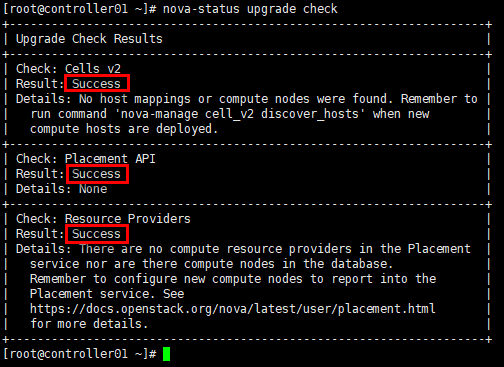

# Check that the cells and the placement API are working
[root@controller01 ~]# nova-status upgrade check

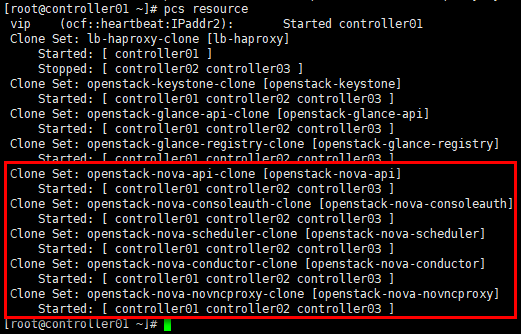

9. Configure pcs resources

# Run on any one controller node.
# Add the resources openstack-nova-api, openstack-nova-consoleauth, openstack-nova-scheduler, openstack-nova-conductor and openstack-nova-novncproxy
[root@controller01 ~]# pcs resource create openstack-nova-api systemd:openstack-nova-api --clone interleave=true
[root@controller01 ~]# pcs resource create openstack-nova-consoleauth systemd:openstack-nova-consoleauth --clone interleave=true
[root@controller01 ~]# pcs resource create openstack-nova-scheduler systemd:openstack-nova-scheduler --clone interleave=true
[root@controller01 ~]# pcs resource create openstack-nova-conductor systemd:openstack-nova-conductor --clone interleave=true
[root@controller01 ~]# pcs resource create openstack-nova-novncproxy systemd:openstack-nova-novncproxy --clone interleave=true
# Based on testing, stateless services such as openstack-nova-api, openstack-nova-consoleauth, openstack-nova-conductor and openstack-nova-novncproxy are best run in active/active mode.
# Services such as openstack-nova-scheduler run in active/passive mode.
# List the pcs resources
[root@controller01 ~]# pcs resource
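The five `pcs resource create` commands above share one template. A minimal sketch that generates them from the list of service suffixes follows; the function name `gen_pcs_cmds` is illustrative, and the commands are only printed so they can be reviewed before being run on one controller node.

```shell
#!/usr/bin/env bash
# Sketch: print one "pcs resource create" command per nova service,
# using the same cloned systemd resource template as the commands above.
gen_pcs_cmds() {
    local svc
    for svc in api consoleauth scheduler conductor novncproxy; do
        echo "pcs resource create openstack-nova-${svc} systemd:openstack-nova-${svc} --clone interleave=true"
    done
}

# Dry run: list the commands without touching the cluster.
gen_pcs_cmds
```

Like the original commands, this creates every service, including openstack-nova-scheduler, as a clone; it does not set up an active/passive resource.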