Lasso and me

For a long time I was wrong about lasso.

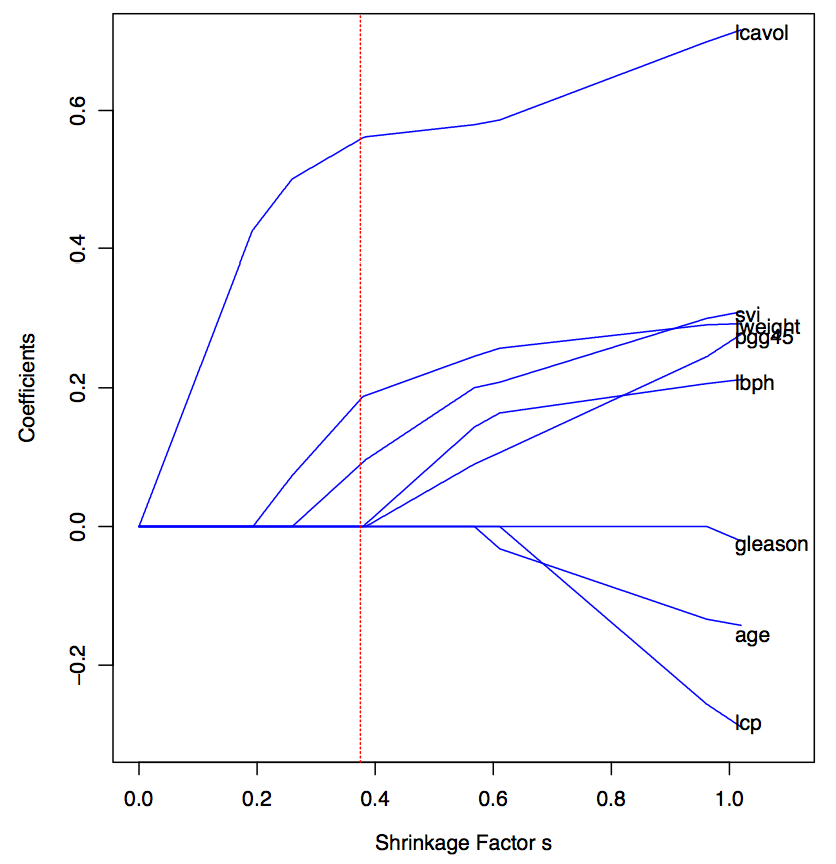

Lasso (“least absolute shrinkage and selection operator”) is a regularization procedure that shrinks regression coefficients toward zero, and in its basic form is equivalent to maximum penalized likelihood estimation with a penalty function that is proportional to the sum of the absolute values of the regression coefficients.
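In symbols, for the linear regression case with normal errors, the criterion is usually written as below. The notation is an assumption made here for illustration: $y_i$ is the outcome, $x_{ij}$ the predictors, $\beta_j$ the coefficients, and $\lambda \ge 0$ the weight on the penalty. The intercept is left out to keep things short.

$$
\hat{\beta}^{\text{lasso}} = \arg\min_{\beta} \left\{ \frac{1}{2} \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{p} x_{ij}\beta_j \Big)^2 + \lambda \sum_{j=1}^{p} |\beta_j| \right\}
$$

With $\lambda = 0$ this is ordinary least squares; larger values of $\lambda$ pull the coefficients harder toward zero.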

I first heard about lasso from a talk that Rob Tibshirani gave at Berkeley in 1994 or 1995. He demonstrated that it shrank regression coefficients to zero. I wasn’t impressed, first because it seemed like no big deal (if that’s the prior you use, that’s the shrinkage you get) and second because, from a Bayesian perspective, I don’t want to shrink things all the way to zero. In the sorts of social and environmental science problems I’ve worked on, just about nothing is zero. I’d like to control my noisy estimates but there’s nothing special about zero. At the end of the talk I stood up and asked Rob why he thought it was a good idea to have zero estimates, and Trevor Hastie stood up and said something about how it can be costly to keep track of lots of predictors so it could be efficient to set a bunch of coefficients to zero. I didn’t buy it: if cost is a consideration, I’d think cost should be in the calculation, the threshold for setting a coefficient to zero should depend on the cost of the variable, and so on.
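To make "shrinks all the way to zero" concrete, here is a minimal sketch in Python, under a simplifying assumption that is not from the talk: the predictors are orthonormal. In that special case the lasso estimate is just a soft-thresholded version of the least-squares estimate, and it is also the posterior mode under independent double-exponential (Laplace) priors with known unit error variance. The numbers, the penalty weight `lam`, and the function name `soft_threshold` are all made up for illustration. Ridge (a normal prior) is shown for contrast: it shrinks but never lands exactly on zero.

```python
import numpy as np

def soft_threshold(b, lam):
    """Lasso solution for an orthonormal design: shrink each least-squares
    coefficient toward zero by lam, and set it to exactly zero if |b| <= lam."""
    return np.sign(b) * np.maximum(np.abs(b) - lam, 0.0)

# Hypothetical least-squares estimates (made-up numbers, for illustration only).
ols_est = np.array([3.0, -1.5, 0.4, -0.2])
lam = 0.5  # hypothetical penalty weight

# Lasso: minimizes 0.5 * ||y - X b||^2 + lam * sum(|b_j|), assuming X'X = I.
lasso_est = soft_threshold(ols_est, lam)

# Ridge: minimizes 0.5 * ||y - X b||^2 + 0.5 * lam * sum(b_j^2), assuming X'X = I.
# Every coefficient is scaled by the same factor, so none becomes exactly zero.
ridge_est = ols_est / (1.0 + lam)

print("least squares:", ols_est)
print("lasso:        ", lasso_est)
print("ridge:        ", ridge_est)
```

In this sketch the two small coefficients are set to exactly zero by the lasso rule, while the ridge rule only scales them down. The single threshold `lam` applies to every coefficient alike, which is the feature questioned above: nothing in the rule knows what any particular variable costs to keep.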

In my skepticism, I was right in the short term but way way wrong in the medium-term (and we’ll never know about the long term). What I mean is, I think my reactions made sense: lasso corresponds to one particular penalty function and, if the goal is reducing cost of saving variables in a typical social-science regression problem, there’s no reason to use some single threshold. But I was wrong in the medium term, for two reasons. First, whether or not lasso is just an implementation of Bayes, the fact is that mainstream Bayesians weren’t doing much of it. We didn’t have anything like lasso in the first or the second edition of Bayesian Data Analysis, and in my applied work I had real difficulties with regression coefficients getting out of control (see Table 2 of this article from 2003, for example). I could go around smugly thinking that lasso was a trivial implementation of a prior distribution, coupled with a silly posterior-mode summary. Meanwhile Hastie, Tibshirani, and others were moving far ahead, going past simple regressions to work on ever-larger problems. Why didn’t I try some of these prior distributions in my own work or even mention them in my textbooks? I don’t know. Finally in 2008 we published something on regularized logistic regression, but even there we were pretty apologetic about it. Somehow there had developed a tradition for Bayesians to downplay the use of prior information. In much of my own work there was a lot of talk about how the prior distribution was pretty much just estimated from the data, and other Bayesians such as Raftery worked around various problems arising from the use of flat priors. Somehow it took the Stanford school of open-minded non-Bayesians to regularize in the way that Bayesians always could—but didn’t.

So, yes, lasso was a great idea, and I didn’t get the point. I’m still amazed in retrospect that as late as 2003, I was fitting uncontrolled regressions with lots of predictors and not knowing what to do. Or, I should say, as late as 2013, considering I still haven’t fully integrated these ideas into my work. I do use bayesglm() routinely, but that has very weak priors.

And it’s not just the Bayesians like me who’ve been slow to pick up on this. I see routine regression analysis all the time that does no regularization and as a result suffers from the usual problem of noisy estimates and dramatic overestimates of the magnitudes of effect. Just look at the tables of regression coefficients in just about any quantitative empirical paper in political science or economics or public health. So there’s a ways to go.

New lasso research!

Yesterday I had the idea of writing this post. I’d been thinking about the last paragraph here and how lasso has been so important and how I was so slow to catch on, and it seemed worth writing about. So many new ideas are like this, I think. They enter the world in some ragged, incompletely-justified format, then prove themselves through many applications, and then only gradually get fully understood. I’ve seen this happen enough with my own ideas, and I’ve discussed many of these cases on this blog, so this seemed like a perfect opportunity to demonstrate the principle from the other direction.

And then, just by coincidence, I received the following email from Rob Tibshirani:

Over the past few months I [Tibshirani] have been consumed with a new piece of work [with Richard Lockhart, Jonathan Taylor, and Ryan Tibshirani] that’s finally done. Here’s the paper, the slides (easier to read), and the R package.

I’m very excited about this. We have discovered a test statistic for the lasso that has a very simple Exp(1) asymptotic distribution, accounting for the adaptive fitting. In a sense, it’s the natural analogue of the drop in RSS chi-squared (or F) statistic for adaptive regression!

It also could help bring the lasso into the mainstream. It shows how a basic adaptive (frequentist) inference, difficult to do in standard least squares regression, falls out naturally in the lasso paradigm.

My quick reaction was: Don’t worry, Rob, lasso already is in the statistical mainstream. I looked it up on Google Scholar and the 1996 lasso paper has over 7000 citations, with dozens of other papers on lasso having hundreds of citations each. Lasso is huge.

What fascinates me is that the lasso world is a sort of parallel Bayesian world. I don’t particularly care whether the methods are “frequentist” or not; really, I think we all have the same inferential goals, which are to make predictions and to estimate parameters (where “parameters” are those aspects of a model that generalize to new data).

Bayesians have traditionally felt (and continue to feel) free to optimize, to tinker, to improve a model here or there and slightly improve inference. In contrast, it’s been my impression that statistical researchers working within classical paradigms have felt more constrained. So one thing I like about the lasso world is that it frees a whole group of researchers (those who, for whatever reason, feel uncomfortable with Bayesian methods) to act in what I consider an open-ended Bayesian way. I’m not thinking so much of Hastie and Tibshirani here: ever since their work on generalized additive models (if not before), they’ve always worked in a middle ground where they’ve felt free to play with ideas. Rather, I’m thinking of more theoretically-minded researchers who may have been inspired by lasso to think more broadly.

To get back to the paper that Rob sent me: I already think that lasso (by itself, and as an inspiration for Bayesian regularization) is a great idea. I’m not a big fan of significance tests. So for my own practice I don’t see the burning need for this paper. But if it will help a new group of applied researchers hop on to the regularization bandwagon, I’m all for it. And I’ve always been interested in methods for counting parameters and adjusting chi-squared tests. This came up in Hastie and Tibshirani’s 1990 book, it was in my Ph.D. thesis, and it was in my 1996 paper with Meng and Stern. So I’m always happy to see progress in this area. I wonder how the ideas in Tibshirani et al.’s new paper connect to research on predictive error measures such as AIC and WAIC.

So, although I don’t have any applied or technical comments on the paper at hand (except for feeling strongly that Tables 2 and 3 should really really really be made into a graph, that Figure 4 would be improved by bounding the y-axis between 0 and 1, and that really Tables 1, 4, and 5 would be better as graphs too; remember “coefplot”? and do we really care that a certain number is “315.216”?), I welcome it inasmuch as it will motivate a new group of users to try lasso, and I recommend it in particular to theoretically-minded researchers and those who have a particular interest in significance tests.

P.S. The discussion below is excellent. So much better than those silly debates about how best to explain p-values (or, worse, the sidebar about whether it’s kosher to call a p-value a conditional probability). I’d much rather we get 100 comments on the topics being discussed here.