今天完成的是第二部数据的清洗,由于在网上查阅了相关资料,进一步了解了有关mapreduce的运作机理,所以在做起来时,较以前简单了许多。

import java.io.IOException; 2 import org.apache.hadoop.conf.Configuration; 3 import org.apache.hadoop.fs.Path; 4 import org.apache.hadoop.io.Text; 5 import org.apache.hadoop.mapreduce.Job; 6 import org.apache.hadoop.mapreduce.Mapper; 7 import org.apache.hadoop.mapreduce.Reducer; 8 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 9 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 10 11 public class text_2_1 { 12 public static class Map extends Mapper<Object,Text,Text,Text>{ 13 private static Text newKey = new Text(); 14 private static Text newvalue = new Text("1"); 15 public void map(Object key,Text value,Context context) throws IOException, InterruptedException{ 16 String line = value.toString(); 17 String arr[] = line.split(" "); 18 newKey.set(arr[5]); 19 context.write(newKey,newvalue); 20 } 21 } 22 public static class Reduce extends Reducer<Text, Text, Text, Text> { 23 private static Text newkey = new Text(); 24 private static Text newvalue = new Text(); 25 protected void reduce(Text key, Iterable<Text> values, Context context)throws IOException, InterruptedException { 26 int num = 0; 27 for(Text text : values){ 28 num++; 29 } 30 newkey.set(""+num); 31 newvalue.set(key); 32 context.write(newkey,newvalue); 33 } 34 } 35 public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException { 36 Configuration conf = new Configuration(); 37 conf.set("mapred.textoutputformat.separator", " "); 38 System.out.println("start"); 39 Job job=Job.getInstance(conf); 40 job.setJarByClass(text_2_1.class); 41 job.setMapperClass(Map.class); 42 job.setReducerClass(Reduce.class); 43 job.setOutputKeyClass(Text.class); 44 job.setOutputValueClass(Text.class); 45 Path in=new Path("hdfs://localhost:9000/text/in/data"); 46 Path out=new Path("hdfs://localhost:9000/text/out1"); 47 FileInputFormat.addInputPath(job, in); 48 FileOutputFormat.setOutputPath(job, out); 49 boolean flag = job.waitForCompletion(true); 50 System.out.println(flag); 51 System.exit(flag? 0 : 1); 52 } 53 }

import java.io.IOException; 2 import org.apache.hadoop.conf.Configuration; 3 import org.apache.hadoop.fs.Path; 4 import org.apache.hadoop.io.IntWritable; 5 import org.apache.hadoop.io.Text; 6 import org.apache.hadoop.mapreduce.Job; 7 import org.apache.hadoop.mapreduce.Mapper; 8 import org.apache.hadoop.mapreduce.Reducer; 9 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 10 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 11 public class m { 12 public static class Map extends Mapper<Object,Text,IntWritable,Text>{ 13 private static IntWritable newKey = new IntWritable (); 14 private static Text newvalue = new Text (); 15 public void map(Object key,Text value,Context context) throws IOException, InterruptedException{ 16 String line = value.toString(); 17 String arr[] = line.split(" "); 18 newKey.set(Integer.parseInt(arr[0])); 19 newvalue.set(arr[1]); 20 context.write(newKey,newvalue); 21 } 22 } 23 public static class Reduce extends Reducer<IntWritable, Text, IntWritable, Text> { 24 protected void reduce(IntWritable key, Iterable<Text> values, Context context)throws IOException, InterruptedException { 25 for(Text text : values){ 26 context.write(key,text); 27 } 28 } 29 } 30 public static class IntWritableDecreasingComparator extends IntWritable.Comparator 31 { 32 public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) 33 { 34 return -super.compare(b1, s1, l1, b2, s2, l2); 35 } 36 } 37 public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException { 38 Configuration conf = new Configuration(); 39 conf.set("mapred.textoutputformat.separator", " "); 40 System.out.println("start"); 41 Job job=Job.getInstance(conf); 42 job.setJarByClass(m.class); 43 job.setMapperClass(Map.class); 44 job.setReducerClass(Reduce.class); 45 job.setOutputKeyClass(IntWritable.class); 46 job.setOutputValueClass(Text.class); 47 job.setSortComparatorClass(IntWritableDecreasingComparator.class); 48 Path in=new Path("hdfs://localhost:9000/text/out1/part-r-00000"); 49 Path out=new Path("hdfs://localhost:9000/text/out2"); 50 FileInputFormat.addInputPath(job, in); 51 FileOutputFormat.setOutputPath(job, out); 52 boolean flag = job.waitForCompletion(true); 53 System.out.println(flag); 54 System.exit(flag? 0 : 1); 55 } 56 }

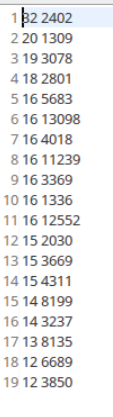

运行结果如下: