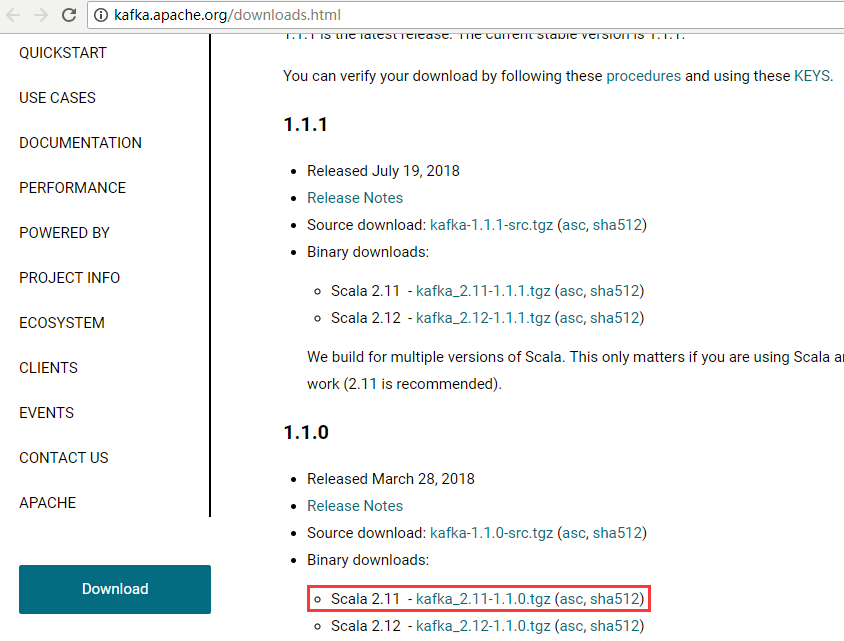

一、下载

下载地址:

http://kafka.apache.org/downloads.html 我这里下载的是Scala 2.11对应的 kafka_2.11-1.1.0.tgz

二、集群规划

| IP | 节点名称 | Kafka | Zookeeper | Jdk | Scala |

| 192.168.100.21 | node21 | Kafka | Zookeeper | Jdk | Scala |

| 192.168.100.22 | node22 | Kafka | Zookeeper | Jdk | Scala |

| 192.168.100.23 | node23 | Kafka | Zookeeper | Jdk | Scala |

Zookeeper集群安装参考: CentOS7.5搭建Zookeeper3.4.12集群与命令行操作

三、安装

2.1 上传解压缩

[admin@node21 software]$ tar zxvf kafka_2.11-1.1.0.tgz -C /opt/module/

2.2 创建日志目录

[admin@node21 software]$ cd /opt/module/kafka_2.11-1.1.0 [admin@node21 kafka_2.11-1.1.0]$ mkdir logs

2.3 修改配置文件

进入kafka的安装配置目录

[admin@node21 kafka_2.11-1.1.0]$ cd config/ [admin@node21 config]$ vi server.properties

主要关注:server.properties 这个文件即可,发现在配置目录下也有Zookeeper文件,我们可以根据Kafka内置的zk集群来启动,但是建议使用独立的zk集群。

server.properties(broker.id和listeners每个节点都不相同)

#是否允许删除topic,默认false不能手动删除 delete.topic.enable=true #当前机器在集群中的唯一标识,和zookeeper的myid性质一样 broker.id=0 #当前kafka服务侦听的地址和端口,端口默认是9092 listeners = PLAINTEXT://192.168.100.21:9092 #这个是borker进行网络处理的线程数 num.network.threads=3 #这个是borker进行I/O处理的线程数 num.io.threads=8 #发送缓冲区buffer大小,数据不是一下子就发送的,先会存储到缓冲区到达一定的大小后在发送,能提高性能 socket.send.buffer.bytes=102400 #kafka接收缓冲区大小,当数据到达一定大小后在序列化到磁盘 socket.receive.buffer.bytes=102400 #这个参数是向kafka请求消息或者向kafka发送消息的请请求的最大数,这个值不能超过java的堆栈大小 socket.request.max.bytes=104857600 #消息日志存放的路径 log.dirs=/opt/module/kafka_2.11-1.1.0/logs #默认的分区数,一个topic默认1个分区数 num.partitions=1 #每个数据目录用来日志恢复的线程数目 num.recovery.threads.per.data.dir=1 #默认消息的最大持久化时间,168小时,7天 log.retention.hours=168 #这个参数是:因为kafka的消息是以追加的形式落地到文件,当超过这个值的时候,kafka会新起一个文件 log.segment.bytes=1073741824 #每隔300000毫秒去检查上面配置的log失效时间 log.retention.check.interval.ms=300000 #是否启用log压缩,一般不用启用,启用的话可以提高性能 log.cleaner.enable=false #设置zookeeper的连接端口 zookeeper.connect=node21:2181,node22:2181,node23:2181 #设置zookeeper的连接超时时间 zookeeper.connection.timeout.ms=6000

2.4分发安装包到其他节点

[admin@node21 module]$ scp -r kafka_2.11-1.1.0 admin@node22:/opt/module/ [admin@node21 module]$ scp -r kafka_2.11-1.1.0 admin@node23:/opt/module/

修改node22,node23节点kafka配置文件conf/server.properties里面的broker.id和listeners的值。

2.5 添加环境变量

[admin@node21 module]$ vi /etc/profile #KAFKA_HOME export KAFKA_HOME=/opt/module/kafka_2.11-1.1.0 export PATH=$PATH:$KAFKA_HOME/bin

保存使其立即生效

[admin@node21 module]$ source /etc/profile

三、kafka集群启动

3.1 首先启动zookeeper集群

所有zookeeper节点都需要执行

[admin@node21 ~]$ zkServer.sh start

3.2 后台启动Kafka集群服务

所有Kafka节点都需要执行

[admin@node21 kafka_2.11-1.1.0]$ bin/kafka-server-start.sh config/server.properties &

四、kafka命令行操作

kafka-broker-list:node21:9092,node22:9092,node23:9092 zookeeper.connect-list: node21:2181,node22:2181,node23:2181

4.1 创建新topic

[admin@node22 kafka_2.11-1.1.0]$ bin/kafka-topics.sh Create, delete, describe, or change a topic. Option Description ------ ----------- --alter Alter the number of partitions, replica assignment, and/or configuration for the topic. --config <String: name=value> A topic configuration override for the topic being created or altered.The following is a list of valid configurations: cleanup.policy compression.type delete.retention.ms file.delete.delay.ms flush.messages flush.ms follower.replication.throttled. replicas index.interval.bytes leader.replication.throttled.replicas max.message.bytes message.format.version message.timestamp.difference.max.ms message.timestamp.type min.cleanable.dirty.ratio min.compaction.lag.ms min.insync.replicas preallocate retention.bytes retention.ms segment.bytes segment.index.bytes segment.jitter.ms segment.ms unclean.leader.election.enable See the Kafka documentation for full details on the topic configs. --create Create a new topic. --delete Delete a topic --delete-config <String: name> A topic configuration override to be removed for an existing topic (see the list of configurations under the --config option). --describe List details for the given topics. --disable-rack-aware Disable rack aware replica assignment --force Suppress console prompts --help Print usage information. --if-exists if set when altering or deleting topics, the action will only execute if the topic exists --if-not-exists if set when creating topics, the action will only execute if the topic does not already exist --list List all available topics. --partitions <Integer: # of partitions> The number of partitions for the topic being created or altered (WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected --replica-assignment <String: A list of manual partition-to-broker broker_id_for_part1_replica1 : assignments for the topic being broker_id_for_part1_replica2 , created or altered. broker_id_for_part2_replica1 : broker_id_for_part2_replica2 , ...> --replication-factor <Integer: The replication factor for each replication factor> partition in the topic being created. --topic <String: topic> The topic to be create, alter or describe. Can also accept a regular expression except for --create option --topics-with-overrides if set when describing topics, only show topics that have overridden configs --unavailable-partitions if set when describing topics, only show partitions whose leader is not available --under-replicated-partitions if set when describing topics, only show under replicated partitions --zookeeper <String: hosts> REQUIRED: The connection string for the zookeeper connection in the form host:port. Multiple hosts can be given to allow fail-over.

在node21节点上创建一个新的Topic

[admin@node21 kafka_2.11-1.1.0]$ bin/kafka-topics.sh --create --zookeeper node21:2181,node22:2181,node23:2181 --replication-factor 3 --partitions 3 --topic TestTopic

选项说明:

--topic 定义topic名

--replication-factor 定义副本数

--partitions 定义分区数

4.2 查看topic副本信息

[admin@node21 kafka_2.11-1.1.0]$ bin/kafka-topics.sh --describe --zookeeper node21:2181,node22:2181,node23:2181 --topic TestTopic

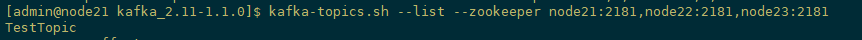

4.3 查看已经创建的topic信息

[admin@node21 kafka_2.11-1.1.0]$ kafka-topics.sh --list --zookeeper node21:2181,node22:2181,node23:2181

4.4 测试生产者发送消息

[admin@node22 kafka_2.11-1.1.0]$ bin/kafka-console-producer.sh Read data from standard input and publish it to Kafka. Option Description ------ ----------- --batch-size <Integer: size> Number of messages to send in a single batch if they are not being sent synchronously. (default: 200) --broker-list <String: broker-list> REQUIRED: The broker list string in the form HOST1:PORT1,HOST2:PORT2. --compression-codec [String: The compression codec: either 'none', compression-codec] 'gzip', 'snappy', or 'lz4'.If specified without value, then it defaults to 'gzip' --key-serializer <String: The class name of the message encoder encoder_class> implementation to use for serializing keys. (default: kafka. serializer.DefaultEncoder) --line-reader <String: reader_class> The class name of the class to use for reading lines from standard in. By default each line is read as a separate message. (default: kafka. tools. ConsoleProducer$LineMessageReader) --max-block-ms <Long: max block on The max time that the producer will send> block for during a send request (default: 60000) --max-memory-bytes <Long: total memory The total memory used by the producer in bytes> to buffer records waiting to be sent to the server. (default: 33554432) --max-partition-memory-bytes <Long: The buffer size allocated for a memory in bytes per partition> partition. When records are received which are smaller than this size the producer will attempt to optimistically group them together until this size is reached. (default: 16384) --message-send-max-retries <Integer> Brokers can fail receiving the message for multiple reasons, and being unavailable transiently is just one of them. This property specifies the number of retires before the producer give up and drop this message. (default: 3) --metadata-expiry-ms <Long: metadata The period of time in milliseconds expiration interval> after which we force a refresh of metadata even if we haven't seen any leadership changes. (default: 300000) --old-producer Use the old producer implementation. --producer-property <String: A mechanism to pass user-defined producer_prop> properties in the form key=value to the producer. --producer.config <String: config file> Producer config properties file. Note that [producer-property] takes precedence over this config. --property <String: prop> A mechanism to pass user-defined properties in the form key=value to the message reader. This allows custom configuration for a user- defined message reader. --queue-enqueuetimeout-ms <Integer: Timeout for event enqueue (default: queue enqueuetimeout ms> 2147483647) --queue-size <Integer: queue_size> If set and the producer is running in asynchronous mode, this gives the maximum amount of messages will queue awaiting sufficient batch size. (default: 10000) --request-required-acks <String: The required acks of the producer request required acks> requests (default: 1) --request-timeout-ms <Integer: request The ack timeout of the producer timeout ms> requests. Value must be non-negative and non-zero (default: 1500) --retry-backoff-ms <Integer> Before each retry, the producer refreshes the metadata of relevant topics. Since leader election takes a bit of time, this property specifies the amount of time that the producer waits before refreshing the metadata. (default: 100) --socket-buffer-size <Integer: size> The size of the tcp RECV size. (default: 102400) --sync If set message send requests to the brokers are synchronously, one at a time as they arrive. --timeout <Integer: timeout_ms> If set and the producer is running in asynchronous mode, this gives the maximum amount of time a message will queue awaiting sufficient batch size. The value is given in ms. (default: 1000) --topic <String: topic> REQUIRED: The topic id to produce messages to. --value-serializer <String: The class name of the message encoder encoder_class> implementation to use for serializing values. (default: kafka. serializer.DefaultEncoder)

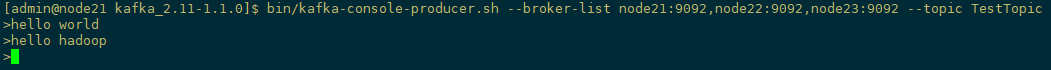

在node21上生产消息

[admin@node21 kafka_2.11-1.1.0]$ bin/kafka-console-producer.sh --broker-list node21:9092,node22:9092,node23:9092 --topic TestTopic

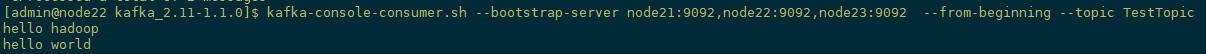

4.5 测试消费者消费消息

[admin@node22 kafka_2.11-1.1.0]$ bin/kafka-console-consumer.sh The console consumer is a tool that reads data from Kafka and outputs it to standard output. Option Description ------ ----------- --blacklist <String: blacklist> Blacklist of topics to exclude from consumption. --bootstrap-server <String: server to REQUIRED (unless old consumer is connect to> used): The server to connect to. --consumer-property <String: A mechanism to pass user-defined consumer_prop> properties in the form key=value to the consumer. --consumer.config <String: config file> Consumer config properties file. Note that [consumer-property] takes precedence over this config. --csv-reporter-enabled If set, the CSV metrics reporter will be enabled --delete-consumer-offsets If specified, the consumer path in zookeeper is deleted when starting up --enable-systest-events Log lifecycle events of the consumer in addition to logging consumed messages. (This is specific for system tests.) --formatter <String: class> The name of a class to use for formatting kafka messages for display. (default: kafka.tools. DefaultMessageFormatter) --from-beginning If the consumer does not already have an established offset to consume from, start with the earliest message present in the log rather than the latest message. --group <String: consumer group id> The consumer group id of the consumer. --isolation-level <String> Set to read_committed in order to filter out transactional messages which are not committed. Set to read_uncommittedto read all messages. (default: read_uncommitted) --key-deserializer <String: deserializer for key> --max-messages <Integer: num_messages> The maximum number of messages to consume before exiting. If not set, consumption is continual. --metrics-dir <String: metrics If csv-reporter-enable is set, and directory> this parameter isset, the csv metrics will be output here --new-consumer Use the new consumer implementation. This is the default, so this option is deprecated and will be removed in a future release. --offset <String: consume offset> The offset id to consume from (a non- negative number), or 'earliest' which means from beginning, or 'latest' which means from end (default: latest) --partition <Integer: partition> The partition to consume from. Consumption starts from the end of the partition unless '--offset' is specified. --property <String: prop> The properties to initialize the message formatter. --skip-message-on-error If there is an error when processing a message, skip it instead of halt. --timeout-ms <Integer: timeout_ms> If specified, exit if no message is available for consumption for the specified interval. --topic <String: topic> The topic id to consume on. --value-deserializer <String: deserializer for values> --whitelist <String: whitelist> Whitelist of topics to include for consumption. --zookeeper <String: urls> REQUIRED (only when using old consumer): The connection string for the zookeeper connection in the form host:port. Multiple URLS can be given to allow fail-over.

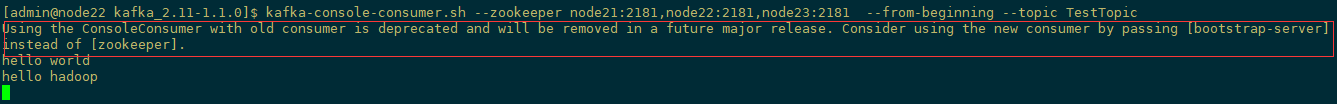

在node22上消费消息(旧命令操作)

[admin@node22 kafka_2.11-1.1.0]$ kafka-console-consumer.sh --zookeeper node21:2181,node22:2181,node23:2181 --from-beginning --topic TestTopic

--from-beginning:会把TestTopic主题中以往所有的数据都读取出来。根据业务场景选择是否增加该配置。

新消费者命令

[admin@node22 kafka_2.11-1.1.0]$ kafka-console-consumer.sh --bootstrap-server node21:9092,node22:9092,node23:9092 --from-beginning --topic TestTopic

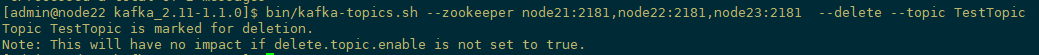

4.6删除topic

[admin@node22 kafka_2.11-1.1.0]$ bin/kafka-topics.sh --zookeeper node21:2181,node22:2181,node23:2181 --delete --topic TestTopic

需要server.properties中设置delete.topic.enable=true否则只是标记删除或者直接重启。

4.7 停止Kafka服务

[admin@node21 kafka_2.11-1.1.0]$ bin/kafka-server-stop.sh stop

4.8 编写kafka启动脚本

[admin@node21 kafka_2.11-1.1.0]$ cd bin [admin@node21 bin]$ vi start-kafka.sh #!/bin/bash nohup /opt/module/kafka_2.11-1.1.0/bin/kafka-server-start.sh /opt/module/kafka_2.11-1.1.0/config/server.properties >/opt/module/kafka_2.11-1.1.0/logs/kafka.log 2>&1 &

赋权限给脚本:chmod +x start-kafka.sh

六 Kafka配置信息详解

5.1 Broker配置信息

|

属性 |

默认值 |

描述 |

|

broker.id |

必填参数,broker的唯一标识 |

|

|

log.dirs |

/tmp/kafka-logs |

Kafka数据存放的目录。可以指定多个目录,中间用逗号分隔,当新partition被创建的时会被存放到当前存放partition最少的目录。 |

|

port |

9092 |

BrokerServer接受客户端连接的端口号 |

|

zookeeper.connect |

null |

Zookeeper的连接串,格式为:hostname1:port1,hostname2:port2,hostname3:port3。可以填一个或多个,为了提高可靠性,建议都填上。注意,此配置允许我们指定一个zookeeper路径来存放此kafka集群的所有数据,为了与其他应用集群区分开,建议在此配置中指定本集群存放目录,格式为:hostname1:port1,hostname2:port2,hostname3:port3/chroot/path 。需要注意的是,消费者的参数要和此参数一致。 |

|

message.max.bytes |

1000000 |

服务器可以接收到的最大的消息大小。注意此参数要和consumer的maximum.message.size大小一致,否则会因为生产者生产的消息太大导致消费者无法消费。 |

|

num.io.threads |

8 |

服务器用来执行读写请求的IO线程数,此参数的数量至少要等于服务器上磁盘的数量。 |

|

queued.max.requests |

500 |

I/O线程可以处理请求的队列大小,若实际请求数超过此大小,网络线程将停止接收新的请求。 |

|

socket.send.buffer.bytes |

100 * 1024 |

The SO_SNDBUFF buffer the server prefers for socket connections. |

|

socket.receive.buffer.bytes |

100 * 1024 |

The SO_RCVBUFF buffer the server prefers for socket connections. |

|

socket.request.max.bytes |

100 * 1024 * 1024 |

服务器允许请求的最大值, 用来防止内存溢出,其值应该小于 Java heap size. |

|

num.partitions |

1 |

默认partition数量,如果topic在创建时没有指定partition数量,默认使用此值,建议改为5 |

|

log.segment.bytes |

1024 * 1024 * 1024 |

Segment文件的大小,超过此值将会自动新建一个segment,此值可以被topic级别的参数覆盖。 |

|

log.roll.{ms,hours} |

24 * 7 hours |

新建segment文件的时间,此值可以被topic级别的参数覆盖。 |

|

log.retention.{ms,minutes,hours} |

7 days |

Kafka segment log的保存周期,保存周期超过此时间日志就会被删除。此参数可以被topic级别参数覆盖。数据量大时,建议减小此值。 |

|

log.retention.bytes |

-1 |

每个partition的最大容量,若数据量超过此值,partition数据将会被删除。注意这个参数控制的是每个partition而不是topic。此参数可以被log级别参数覆盖。 |

|

log.retention.check.interval.ms |

5 minutes |

删除策略的检查周期 |

|

auto.create.topics.enable |

true |

自动创建topic参数,建议此值设置为false,严格控制topic管理,防止生产者错写topic。 |

|

default.replication.factor |

1 |

默认副本数量,建议改为2。 |

|

replica.lag.time.max.ms |

10000 |

在此窗口时间内没有收到follower的fetch请求,leader会将其从ISR(in-sync replicas)中移除。 |

|

replica.lag.max.messages |

4000 |

如果replica节点落后leader节点此值大小的消息数量,leader节点就会将其从ISR中移除。 |

|

replica.socket.timeout.ms |

30 * 1000 |

replica向leader发送请求的超时时间。 |

|

replica.socket.receive.buffer.bytes |

64 * 1024 |

The socket receive buffer for network requests to the leader for replicating data. |

|

replica.fetch.max.bytes |

1024 * 1024 |

The number of byes of messages to attempt to fetch for each partition in the fetch requests the replicas send to the leader. |

|

replica.fetch.wait.max.ms |

500 |

The maximum amount of time to wait time for data to arrive on the leader in the fetch requests sent by the replicas to the leader. |

|

num.replica.fetchers |

1 |

Number of threads used to replicate messages from leaders. Increasing this value can increase the degree of I/O parallelism in the follower broker. |

|

fetch.purgatory.purge.interval.requests |

1000 |

The purge interval (in number of requests) of the fetch request purgatory. |

|

zookeeper.session.timeout.ms |

6000 |

ZooKeeper session 超时时间。如果在此时间内server没有向zookeeper发送心跳,zookeeper就会认为此节点已挂掉。 此值太低导致节点容易被标记死亡;若太高,.会导致太迟发现节点死亡。 |

|

zookeeper.connection.timeout.ms |

6000 |

客户端连接zookeeper的超时时间。 |

|

zookeeper.sync.time.ms |

2000 |

H ZK follower落后 ZK leader的时间。 |

|

controlled.shutdown.enable |

true |

允许broker shutdown。如果启用,broker在关闭自己之前会把它上面的所有leaders转移到其它brokers上,建议启用,增加集群稳定性。 |

|

auto.leader.rebalance.enable |

true |

If this is enabled the controller will automatically try to balance leadership for partitions among the brokers by periodically returning leadership to the “preferred” replica for each partition if it is available. |

|

leader.imbalance.per.broker.percentage |

10 |

The percentage of leader imbalance allowed per broker. The controller will rebalance leadership if this ratio goes above the configured value per broker. |

|

leader.imbalance.check.interval.seconds |

300 |

The frequency with which to check for leader imbalance. |

|

offset.metadata.max.bytes |

4096 |

The maximum amount of metadata to allow clients to save with their offsets. |

|

connections.max.idle.ms |

600000 |

Idle connections timeout: the server socket processor threads close the connections that idle more than this. |

|

num.recovery.threads.per.data.dir |

1 |

The number of threads per data directory to be used for log recovery at startup and flushing at shutdown. |

|

unclean.leader.election.enable |

true |

Indicates whether to enable replicas not in the ISR set to be elected as leader as a last resort, even though doing so may result in data loss. |

|

delete.topic.enable |

false |

启用deletetopic参数,建议设置为true。 |

|

offsets.topic.num.partitions |

50 |

The number of partitions for the offset commit topic. Since changing this after deployment is currently unsupported, we recommend using a higher setting for production (e.g., 100-200). |

|

offsets.topic.retention.minutes |

1440 |

Offsets that are older than this age will be marked for deletion. The actual purge will occur when the log cleaner compacts the offsets topic. |

|

offsets.retention.check.interval.ms |

600000 |

The frequency at which the offset manager checks for stale offsets. |

|

offsets.topic.replication.factor |

3 |

The replication factor for the offset commit topic. A higher setting (e.g., three or four) is recommended in order to ensure higher availability. If the offsets topic is created when fewer brokers than the replication factor then the offsets topic will be created with fewer replicas. |

|

offsets.topic.segment.bytes |

104857600 |

Segment size for the offsets topic. Since it uses a compacted topic, this should be kept relatively low in order to facilitate faster log compaction and loads. |

|

offsets.load.buffer.size |

5242880 |

An offset load occurs when a broker becomes the offset manager for a set of consumer groups (i.e., when it becomes a leader for an offsets topic partition). This setting corresponds to the batch size (in bytes) to use when reading from the offsets segments when loading offsets into the offset manager’s cache. |

|

offsets.commit.required.acks |

-1 |

The number of acknowledgements that are required before the offset commit can be accepted. This is similar to the producer’s acknowledgement setting. In general, the default should not be overridden. |

|

offsets.commit.timeout.ms |

5000 |

The offset commit will be delayed until this timeout or the required number of replicas have received the offset commit. This is similar to the producer request timeout. |

5.2 Producer配置信息

|

属性 |

默认值 |

描述 |

|

metadata.broker.list |

启动时producer查询brokers的列表,可以是集群中所有brokers的一个子集。注意,这个参数只是用来获取topic的元信息用,producer会从元信息中挑选合适的broker并与之建立socket连接。格式是:host1:port1,host2:port2。 |

|

|

request.required.acks |

0 |

参见3.2节介绍 |

|

request.timeout.ms |

10000 |

Broker等待ack的超时时间,若等待时间超过此值,会返回客户端错误信息。 |

|

producer.type |

sync |

同步异步模式。async表示异步,sync表示同步。如果设置成异步模式,可以允许生产者以batch的形式push数据,这样会极大的提高broker性能,推荐设置为异步。 |

|

serializer.class |

kafka.serializer.DefaultEncoder |

序列号类,.默认序列化成 byte[] 。 |

|

key.serializer.class |

Key的序列化类,默认同上。 |

|

|

partitioner.class |

kafka.producer.DefaultPartitioner |

Partition类,默认对key进行hash。 |

|

compression.codec |

none |

指定producer消息的压缩格式,可选参数为: “none”, “gzip” and “snappy”。关于压缩参见4.1节 |

|

compressed.topics |

null |

启用压缩的topic名称。若上面参数选择了一个压缩格式,那么压缩仅对本参数指定的topic有效,若本参数为空,则对所有topic有效。 |

|

message.send.max.retries |

3 |

Producer发送失败时重试次数。若网络出现问题,可能会导致不断重试。 |

|

retry.backoff.ms |

100 |

Before each retry, the producer refreshes the metadata of relevant topics to see if a new leader has been elected. Since leader election takes a bit of time, this property specifies the amount of time that the producer waits before refreshing the metadata. |

|

topic.metadata.refresh.interval.ms |

600 * 1000 |

The producer generally refreshes the topic metadata from brokers when there is a failure (partition missing, leader not available…). It will also poll regularly (default: every 10min so 600000ms). If you set this to a negative value, metadata will only get refreshed on failure. If you set this to zero, the metadata will get refreshed after each message sent (not recommended). Important note: the refresh happen only AFTER the message is sent, so if the producer never sends a message the metadata is never refreshed |

|

queue.buffering.max.ms |

5000 |

启用异步模式时,producer缓存消息的时间。比如我们设置成1000时,它会缓存1秒的数据再一次发送出去,这样可以极大的增加broker吞吐量,但也会造成时效性的降低。 |

|

queue.buffering.max.messages |

10000 |

采用异步模式时producer buffer 队列里最大缓存的消息数量,如果超过这个数值,producer就会阻塞或者丢掉消息。 |

|

queue.enqueue.timeout.ms |

-1 |

当达到上面参数值时producer阻塞等待的时间。如果值设置为0,buffer队列满时producer不会阻塞,消息直接被丢掉。若值设置为-1,producer会被阻塞,不会丢消息。 |

|

batch.num.messages |

200 |

采用异步模式时,一个batch缓存的消息数量。达到这个数量值时producer才会发送消息。 |

|

send.buffer.bytes |

100 * 1024 |

Socket write buffer size |

|

client.id |

“” |

The client id is a user-specified string sent in each request to help trace calls. It should logically identify the application making the request. |

5.3 Consumer配置信息

|

属性 |

默认值 |

描述 |

|

group.id |

Consumer的组ID,相同goup.id的consumer属于同一个组。 |

|

|

zookeeper.connect |

Consumer的zookeeper连接串,要和broker的配置一致。 |

|

|

consumer.id |

null |

如果不设置会自动生成。 |

|

socket.timeout.ms |

30 * 1000 |

网络请求的socket超时时间。实际超时时间由max.fetch.wait + socket.timeout.ms 确定。 |

|

socket.receive.buffer.bytes |

64 * 1024 |

The socket receive buffer for network requests. |

|

fetch.message.max.bytes |

1024 * 1024 |

查询topic-partition时允许的最大消息大小。consumer会为每个partition缓存此大小的消息到内存,因此,这个参数可以控制consumer的内存使用量。这个值应该至少比server允许的最大消息大小大,以免producer发送的消息大于consumer允许的消息。 |

|

num.consumer.fetchers |

1 |

The number fetcher threads used to fetch data. |

|

auto.commit.enable |

true |

如果此值设置为true,consumer会周期性的把当前消费的offset值保存到zookeeper。当consumer失败重启之后将会使用此值作为新开始消费的值。 |

|

auto.commit.interval.ms |

60 * 1000 |

Consumer提交offset值到zookeeper的周期。 |

|

queued.max.message.chunks |

2 |

用来被consumer消费的message chunks 数量, 每个chunk可以缓存fetch.message.max.bytes大小的数据量。 |

|

auto.commit.interval.ms |

60 * 1000 |

Consumer提交offset值到zookeeper的周期。 |

|

queued.max.message.chunks |

2 |

用来被consumer消费的message chunks 数量, 每个chunk可以缓存fetch.message.max.bytes大小的数据量。 |

|

fetch.min.bytes |

1 |

The minimum amount of data the server should return for a fetch request. If insufficient data is available the request will wait for that much data to accumulate before answering the request. |

|

fetch.wait.max.ms |

100 |

The maximum amount of time the server will block before answering the fetch request if there isn’t sufficient data to immediately satisfy fetch.min.bytes. |

|

rebalance.backoff.ms |

2000 |

Backoff time between retries during rebalance. |

|

refresh.leader.backoff.ms |

200 |

Backoff time to wait before trying to determine the leader of a partition that has just lost its leader. |

|

auto.offset.reset |

largest |

What to do when there is no initial offset in ZooKeeper or if an offset is out of range ;smallest : automatically reset the offset to the smallest offset; largest : automatically reset the offset to the largest offset;anything else: throw exception to the consumer |

|

consumer.timeout.ms |

-1 |

若在指定时间内没有消息消费,consumer将会抛出异常。 |

|

exclude.internal.topics |

true |

Whether messages from internal topics (such as offsets) should be exposed to the consumer. |

|

zookeeper.session.timeout.ms |

6000 |

ZooKeeper session timeout. If the consumer fails to heartbeat to ZooKeeper for this period of time it is considered dead and a rebalance will occur. |

|

zookeeper.connection.timeout.ms |

6000 |

The max time that the client waits while establishing a connection to zookeeper. |

|

zookeeper.sync.time.ms |

2000 |

How far a ZK follower can be behind a ZK leader |