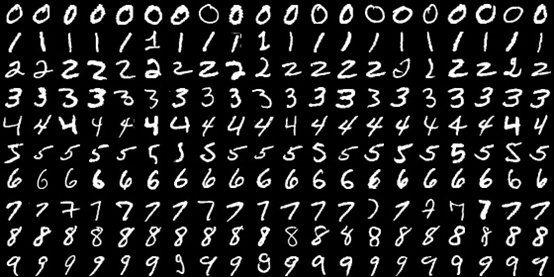

PyTorch is one of the most popular deep learning frameworks. This post uses the classic MNIST dataset as a quick hands-on introduction to PyTorch.

Import libraries

```python
from torchvision.datasets import MNIST
from torchvision.transforms import ToTensor, Compose, Normalize
from torch.utils.data import DataLoader
import torch
import torch.nn.functional as F
import torch.nn as nn
import os
import numpy as np
```

Prepare the dataset

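```python
path = './data'

# Use Compose to chain the two preprocessing steps:
# ToTensor() converts each image to a float tensor scaled to [0, 1],
# Normalize() then standardizes it with the MNIST mean and std
transform_fn = Compose([
    ToTensor(),
    Normalize(mean=(0.1307,), std=(0.3081,))
])

# download=True fetches MNIST into ./data on the first run
mnist_dataset = MNIST(root=path, train=True, transform=transform_fn, download=True)
data_loader = DataLoader(dataset=mnist_dataset, batch_size=2, shuffle=True)
```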
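Per pixel, the two transforms reduce to simple arithmetic: ToTensor() divides the 8-bit value by 255, and Normalize() subtracts the mean and divides by the std. The pure-Python sketch below mimics this for a single pixel; `normalize_pixel` is a hypothetical helper for illustration, not part of torchvision.

```python
MEAN, STD = 0.1307, 0.3081  # the MNIST statistics passed to Normalize above


def normalize_pixel(raw):
    """Hypothetical helper: mimic ToTensor() + Normalize() for one 8-bit pixel."""
    scaled = raw / 255.0          # ToTensor(): [0, 255] -> [0.0, 1.0]
    return (scaled - MEAN) / STD  # Normalize(): (x - mean) / std


print(round(normalize_pixel(0), 4))    # black background pixel
print(round(normalize_pixel(255), 4))  # white stroke pixel
```

After normalization the black background sits at about -0.42 and fully white strokes at about 2.82, instead of the original [0, 1] range.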

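```python
# 1. Wrap dataset loading and preprocessing in a reusable function
BATCH_SIZE = 128
TEST_BATCH_SIZE = 1000

def get_dataloader(train=True, batch_size=BATCH_SIZE):
    '''
    train=True: return the training-set loader
    train=False: return the test-set loader
    '''
    transform_fn = Compose([
        ToTensor(),
        Normalize(mean=(0.1307,), std=(0.3081,))
    ])
    dataset = MNIST(root='./data', train=train, transform=transform_fn, download=True)
    # Pass the batch_size argument (not the global BATCH_SIZE) so that
    # test() really gets batches of TEST_BATCH_SIZE; shuffle only for training
    data_loader = DataLoader(dataset=dataset, batch_size=batch_size, shuffle=train)
    return data_loader
```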
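With batch_size=128 the 60,000 training images do not divide evenly, so DataLoader (with its default drop_last=False) yields a smaller final batch. The sketch below reproduces the batch sizes in plain Python; `batch_sizes` is a hypothetical helper, assuming only the dataset sizes (60,000 training and 10,000 test images) and the batch sizes defined above.

```python
import math


def batch_sizes(num_samples, batch_size):
    """Hypothetical helper: sizes of the batches DataLoader yields with drop_last=False."""
    if num_samples == 0:
        return []
    num_batches = math.ceil(num_samples / batch_size)
    sizes = [batch_size] * (num_batches - 1)
    sizes.append(num_samples - batch_size * (num_batches - 1))  # remainder batch
    return sizes


train_sizes = batch_sizes(60000, 128)   # training set with BATCH_SIZE
test_sizes = batch_sizes(10000, 1000)   # test set with TEST_BATCH_SIZE
print(len(train_sizes), train_sizes[-1])
print(len(test_sizes), test_sizes[-1])
```

So one training epoch is 469 steps, the last of which sees only 96 images, while the test set splits into 10 equal batches.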

Build the model

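```python
class MnistModel(nn.Module):
    def __init__(self):
        super().__init__()  # call the nn.Module constructor
        self.fc1 = nn.Linear(1*28*28, 28)  # fully connected layer: 784 -> 28
        self.fc2 = nn.Linear(28, 10)       # output layer: 28 -> 10 classes

    def forward(self, input):
        x = input.view(-1, 1*28*28)  # flatten [N, 1, 28, 28] into [N, 784]
        x = self.fc1(x)
        x = F.relu(x)
        out = self.fc2(x)
        # log_softmax combined with nll_loss computes the cross-entropy loss
        return F.log_softmax(out, dim=-1)
```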
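The claim that log_softmax followed by nll_loss equals cross-entropy can be checked numerically. The pure-Python sketch below uses hypothetical helpers that only mirror what F.log_softmax and F.nll_loss (default reduction='mean') compute, and compares them with the textbook definition on a toy batch.

```python
import math


def log_softmax(logits):
    """log(softmax(z)), computed stably by subtracting the max logit."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]


def nll_loss(log_probs, targets):
    """Mean negative log-probability of the target class (like reduction='mean')."""
    return -sum(lp[t] for lp, t in zip(log_probs, targets)) / len(targets)


def cross_entropy(logits_batch, targets):
    """Textbook cross-entropy: mean of -log(p_target), p from a plain softmax."""
    total = 0.0
    for logits, t in zip(logits_batch, targets):
        exps = [math.exp(z) for z in logits]
        total += -math.log(exps[t] / sum(exps))
    return total / len(targets)


logits_batch = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]  # toy scores for 3 classes
targets = [0, 2]
combined = nll_loss([log_softmax(z) for z in logits_batch], targets)
print(combined, cross_entropy(logits_batch, targets))
```

This is also why F.cross_entropy applied to the raw scores of fc2 would give the same loss as the F.log_softmax + F.nll_loss pair used in this model.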

Train the model

```python
mnist_model = MnistModel()
optimizer = torch.optim.Adam(params=mnist_model.parameters(), lr=0.001)

# Make sure the checkpoint directory exists, then resume from a saved
# model and optimizer state if one is present
os.makedirs('./model', exist_ok=True)
if os.path.exists('model/mnist_model.pkl'):
    mnist_model.load_state_dict(torch.load('model/mnist_model.pkl'))
    optimizer.load_state_dict(torch.load('model/optimizer.pkl'))

def train(epoch):
    mnist_model.train()  # make sure the model is in training mode
    data_loader = get_dataloader()
    for index, (data, target) in enumerate(data_loader):
        optimizer.zero_grad()  # clear gradients left over from the previous step
        output = mnist_model(data)
        loss = F.nll_loss(output, target)
        loss.backward()   # backpropagate the loss to compute gradients
        optimizer.step()  # update the parameters using those gradients
        if index % 100 == 0:
            # Save a checkpoint of the model and optimizer
            torch.save(mnist_model.state_dict(), 'model/mnist_model.pkl')
            torch.save(optimizer.state_dict(), 'model/optimizer.pkl')
            print('Train Epoch: {} [{}/{} ({:.0f}%)] Loss: {:.6f}'.format(
                epoch, index * len(data), len(data_loader.dataset),
                100. * index / len(data_loader), loss.item()))

for i in range(5):  # train for 5 epochs
    train(i)
```

```
Train Epoch: 0 [0/60000 (0%)] Loss: 0.023078
Train Epoch: 0 [12800/60000 (21%)] Loss: 0.019347
Train Epoch: 0 [25600/60000 (43%)] Loss: 0.105870
Train Epoch: 0 [38400/60000 (64%)] Loss: 0.050866
Train Epoch: 0 [51200/60000 (85%)] Loss: 0.097995
Train Epoch: 1 [0/60000 (0%)] Loss: 0.108337
Train Epoch: 1 [12800/60000 (21%)] Loss: 0.071196
Train Epoch: 1 [25600/60000 (43%)] Loss: 0.022856
Train Epoch: 1 [38400/60000 (64%)] Loss: 0.028392
Train Epoch: 1 [51200/60000 (85%)] Loss: 0.070508
Train Epoch: 2 [0/60000 (0%)] Loss: 0.037416
Train Epoch: 2 [12800/60000 (21%)] Loss: 0.075977
Train Epoch: 2 [25600/60000 (43%)] Loss: 0.024356
Train Epoch: 2 [38400/60000 (64%)] Loss: 0.042203
Train Epoch: 2 [51200/60000 (85%)] Loss: 0.020883
Train Epoch: 3 [0/60000 (0%)] Loss: 0.023487
Train Epoch: 3 [12800/60000 (21%)] Loss: 0.024403
Train Epoch: 3 [25600/60000 (43%)] Loss: 0.073619
Train Epoch: 3 [38400/60000 (64%)] Loss: 0.074042
Train Epoch: 3 [51200/60000 (85%)] Loss: 0.036283
Train Epoch: 4 [0/60000 (0%)] Loss: 0.021305
Train Epoch: 4 [12800/60000 (21%)] Loss: 0.062750
Train Epoch: 4 [25600/60000 (43%)] Loss: 0.016911
Train Epoch: 4 [38400/60000 (64%)] Loss: 0.039599
Train Epoch: 4 [51200/60000 (85%)] Loss: 0.026689
```
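The bracketed numbers in each log line follow directly from the format string in train(): with 469 batches of 128 per epoch, batch index i has processed i * 128 samples, and the percentage is 100 * i / 469. The pure-Python sketch below rebuilds that part of the log, assuming every logged index is a full batch of 128 (true here, since only the final batch is smaller); `progress` is a hypothetical helper and omits the loss.

```python
NUM_SAMPLES = 60000
BATCH_SIZE = 128
NUM_BATCHES = -(-NUM_SAMPLES // BATCH_SIZE)  # ceiling division


def progress(epoch, index):
    """Hypothetical helper: rebuild the progress part of a train() log line."""
    return 'Train Epoch: {} [{}/{} ({:.0f}%)]'.format(
        epoch, index * BATCH_SIZE, NUM_SAMPLES, 100. * index / NUM_BATCHES)


for index in range(0, NUM_BATCHES, 100):  # the indices where train() logs
    print(progress(0, index))
```

The five lines it prints match the sample sizes and percentages of each epoch in the log above (0%, 21%, 43%, 64%, 85%).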

Test the model

```python
def test():
    loss_list = []
    acc_list = []
    test_loader = get_dataloader(train=False, batch_size=TEST_BATCH_SIZE)
    mnist_model.eval()  # switch to evaluation mode
    with torch.no_grad():  # no gradients are needed for evaluation
        for index, (data, target) in enumerate(test_loader):
            out = mnist_model(data)
            loss = F.nll_loss(out, target)
            loss_list.append(loss.item())
            pred = out.argmax(dim=1)  # class with the largest log-probability
            # eq() compares two tensors element-wise and returns a boolean tensor
            acc = pred.eq(target).float().mean()
            acc_list.append(acc.item())
    print('Mean accuracy, mean loss:', np.mean(acc_list), np.mean(loss_list))

test()
```

```
Mean accuracy, mean loss: 0.9662777 0.12309619
```
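In test(), argmax over dim 1 picks the predicted class and pred.eq(target).float().mean() is the fraction of correct predictions in one batch. When every batch has the same size (TEST_BATCH_SIZE = 1000 splits the 10,000 test images into 10 equal batches), the plain mean of the per-batch accuracies equals the overall accuracy; with uneven batches, for example 128 per batch, which leaves a final batch of 16, the small last batch would be over-weighted. The pure-Python sketch below mirrors the computation on made-up log-probabilities; all helper names are hypothetical.

```python
def argmax(row):
    """Index of the largest value, like tensor.argmax(dim=1) for one row."""
    return max(range(len(row)), key=lambda i: row[i])


def batch_accuracy(outputs, targets):
    """Fraction of rows whose argmax equals the target, like pred.eq(target).float().mean()."""
    correct = sum(1 for row, t in zip(outputs, targets) if argmax(row) == t)
    return correct / len(targets)


def mean(values):
    return sum(values) / len(values)


# Two toy batches of log-probabilities over 3 classes (made-up numbers)
batches = [
    ([[-0.1, -2.5, -3.0], [-2.0, -0.2, -2.2]], [0, 1]),  # both predictions correct
    ([[-0.3, -1.5, -2.8], [-1.9, -2.1, -0.4]], [1, 2]),  # only the second is correct
]
acc_list = [batch_accuracy(out, tgt) for out, tgt in batches]
print(acc_list, mean(acc_list))
```

Both batches hold two samples, so the mean of the batch accuracies matches the overall 3 correct out of 4.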