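1. Introduction

Clusters used to be built behind an nginx reverse proxy, but there is now a better option: K8S (Kubernetes). I won't start with K8S concepts, because there are quite a few of them. Instead I'll start with building a cluster, which is how I became familiar with the concepts myself, and I hope it helps you too. Setting up a K8S cluster is moderately difficult. There are many tutorials online, but I ran into problems of one kind or another while following them, so here is the procedure I ended up with. This article covers installing K8S from the binary packages.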

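2. Cluster components

| Node | IP | Components |
| --- | --- | --- |
| master | 192.168.8.201 | etcd: key-value store that holds the cluster state, including node information; kube-apiserver: the API entry point the other components talk to (configured in section 5.1); kubectl: command-line client used to control the cluster; kube-controller-manager: runs the controllers that watch cluster state, such as node health, and work to bring it back to the desired state; kube-scheduler: picks a suitable node for each newly created pod and records the assignment, which ends up in etcd |
| node | 192.168.8.202 | kube-proxy: handles traffic between services and pods; kubelet: picks up the pods that kube-scheduler has assigned to this node and creates them as specified; docker: container runtime |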

3. Install etcd

    yum install etcd -y
    vi /etc/etcd/etcd.conf

Edit etcd.conf so that it contains:

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Start etcd and enable it at boot:

systemctl start etcd

systemctl enable etcd
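To confirm that etcd is actually answering on the client URL configured above, one option is to query its HTTP `/health` endpoint. The following is a minimal sketch, assuming `curl` is installed and that etcd replies with a small JSON body containing a `health` field; the function names `etcd_health_ok` and `check_etcd` are made up for this example.

```shell
#!/bin/bash
# Returns 0 if a /health response body reports a healthy member.
# Several spellings are accepted because the exact JSON differs between etcd versions.
etcd_health_ok() {
    case "$1" in
        *'"health":"true"'* | *'"health": "true"'* | *'"health":true'*) return 0 ;;
        *) return 1 ;;
    esac
}

# Queries ENDPOINT/health (defaults to the master address used in this article).
check_etcd() {
    local endpoint="${1:-http://192.168.8.201:2379}"
    local body
    body="$(curl -s --max-time 3 "${endpoint}/health")" || return 1
    etcd_health_ok "$body"
}

# Usage (on any machine that can reach the master):
#   check_etcd http://192.168.8.201:2379 && echo "etcd is healthy"
```

Keeping the parsing separate from the network call makes it easy to try the check against a saved response first.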

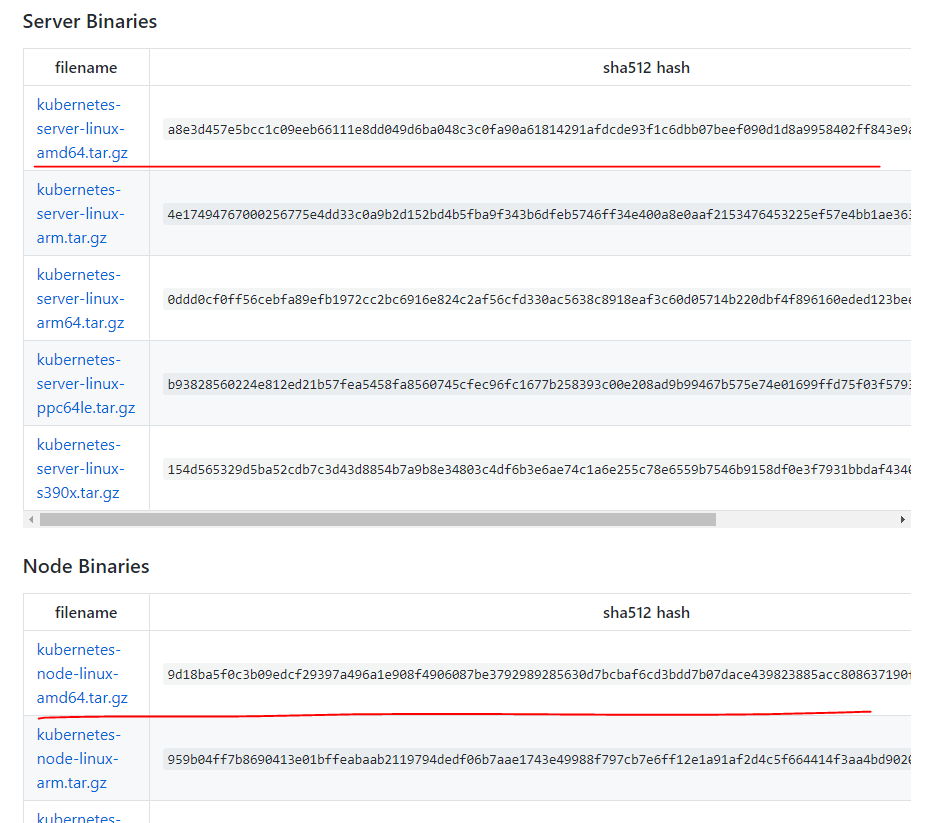

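4. Download the K8S packages

Open the Kubernetes repository on GitHub and choose a release.

Click CHANGELOG-1.13.md, then download the server package for the master node and the node package for the node.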

5. Install the server package on the master node

    tar zxvf kubernetes-server-linux-amd64.tar.gz    # extract
    mkdir -p /opt/kubernetes/{bin,cfg}               # create the directories
    # move the binaries into the directory created in the previous step
    mv kubernetes/server/bin/{kube-apiserver,kube-scheduler,kube-controller-manager,kubectl} /opt/kubernetes/bin
    chmod +x /opt/kubernetes/bin/*

5.1 Configure apiserver

    cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \
    --v=4 \
    --etcd-servers=http://192.168.8.201:2379 \
    --insecure-bind-address=0.0.0.0 \
    --insecure-port=8080 \
    --advertise-address=192.168.8.201 \
    --allow-privileged=true \
    --service-cluster-ip-range=10.10.10.0/24 \
    --service-node-port-range=30000-50000 \
    --admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ResourceQuota"
    EOF

    # 'EOF' is quoted so that $KUBE_APISERVER_OPTS is written literally and expanded later by systemd
    cat <<'EOF' >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
    ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF
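One detail is worth checking whenever a unit file is written through a heredoc: with an unquoted delimiter the shell expands `$KUBE_APISERVER_OPTS` while writing the file, so an unset variable leaves `ExecStart` with no options at all. The sketch below demonstrates the difference in a temporary directory. It touches nothing under /usr/lib/systemd, and the file names are made up for the demonstration.

```shell
#!/bin/bash
# Demonstration only: writes two throwaway files and compares them.
tmp="$(mktemp -d)"
unset KUBE_APISERVER_OPTS   # the variable is normally not set in the installing shell

# Unquoted delimiter: the shell substitutes the (empty) variable right now.
cat <<EOF >"$tmp/unquoted.service"
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
EOF

# Quoted delimiter: the text is written verbatim, and systemd expands it at start-up
# from the EnvironmentFile.
cat <<'EOF' >"$tmp/quoted.service"
ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
EOF

unquoted_line="$(cat "$tmp/unquoted.service")"
quoted_line="$(cat "$tmp/quoted.service")"
echo "unquoted: [$unquoted_line]"
echo "quoted:   [$quoted_line]"
rm -rf "$tmp"
```

The same consideration applies to every unit file in this article that refers to a `$..._OPTS` variable. Escaping the dollar sign as `\$` inside an unquoted heredoc has the same effect as quoting the delimiter.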

5.2 Configure kube-controller-manager

    cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
    --v=4 \
    --master=127.0.0.1:8080 \
    --leader-elect=true \
    --address=127.0.0.1"
    EOF

    cat <<'EOF' >/usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
    ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

5.3 Configure kube-scheduler

    cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
    KUBE_SCHEDULER_OPTS="--logtostderr=true \
    --v=4 \
    --master=127.0.0.1:8080 \
    --leader-elect"
    EOF

    cat <<'EOF' >/usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
    ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

5.4 Start kube-apiserver, kube-controller-manager, and kube-scheduler

vim ku.sh   # create a script with the following content

    #!/bin/bash
    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl restart kube-apiserver
    systemctl enable kube-controller-manager
    systemctl restart kube-controller-manager
    systemctl enable kube-scheduler
    systemctl restart kube-scheduler

Run the script:

    chmod +x *.sh   # make it executable
    ./ku.sh         # run it
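After the script has run, it can be convenient to get a one-line status per service instead of reading through `ps` output. A minimal sketch, assuming systemd's `systemctl is-active` is available; `is_active` and `report_services` are helper names invented for this example.

```shell
#!/bin/bash
# Thin wrapper around systemctl so the check can be replaced (for example in a dry run).
is_active() {
    systemctl is-active --quiet "$1"
}

# report_services CHECKER SERVICE...
# Prints one line per service and returns non-zero if any service is not active.
report_services() {
    local checker="$1"
    shift
    local failed=0 svc
    for svc in "$@"; do
        if "$checker" "$svc"; then
            echo "$svc: active"
        else
            echo "$svc: NOT active (see: journalctl -u $svc)"
            failed=1
        fi
    done
    return "$failed"
}

# Usage on the master:
#   report_services is_active kube-apiserver kube-controller-manager kube-scheduler
```

The same function can be reused on the node by passing `kubelet kube-proxy` as the service list.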

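5.5 Add kubectl to PATH for convenience

    # single quotes keep $PATH unexpanded in /etc/profile, so it is resolved at login time
    echo 'export PATH=$PATH:/opt/kubernetes/bin' >> /etc/profile
    source /etc/profile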
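Running the `echo ... >> /etc/profile` command more than once appends the same line each time. If the setup is likely to be repeated, a small guard avoids the duplicates. This is only a sketch; `append_path_once` is a made-up helper name, and it takes the profile path as a parameter so it can be tried on a scratch file first.

```shell
#!/bin/bash
# append_path_once PROFILE DIR
# Adds "export PATH=$PATH:DIR" to PROFILE unless that exact line is already there.
append_path_once() {
    local profile="$1" dir="$2"
    # \$PATH keeps the variable unexpanded, so it is resolved when the profile is sourced
    local line="export PATH=\$PATH:${dir}"
    if ! grep -qxF "$line" "$profile" 2>/dev/null; then
        printf '%s\n' "$line" >> "$profile"
    fi
}

# Usage:
#   append_path_once /etc/profile /opt/kubernetes/bin
#   source /etc/profile
```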

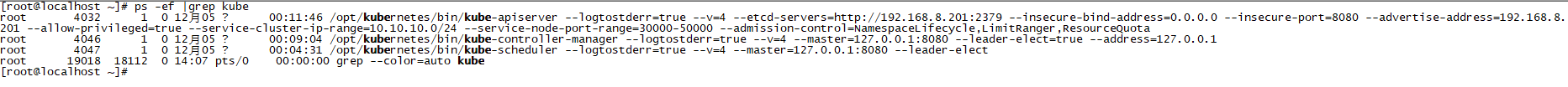

The server installation is now complete. Check that the processes started with:

ps -ef |grep kube

If a service failed to start, inspect its logs with:

journalctl -u kube-apiserver
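A process showing up in `ps` does not yet mean the API server is serving requests. One way to check is to poll its `/healthz` endpoint on the insecure port configured in 5.1, which answers with the plain text `ok` once it is ready. The sketch below assumes `curl` is installed; `wait_until` and `apiserver_healthy` are helper names invented here.

```shell
#!/bin/bash
# wait_until ATTEMPTS DELAY COMMAND [ARGS...]
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY seconds after each failure.
wait_until() {
    local attempts="$1" delay="$2" i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}

# Succeeds when the API server at the master address from this article reports "ok".
apiserver_healthy() {
    [ "$(curl -s --max-time 2 http://192.168.8.201:8080/healthz)" = "ok" ]
}

# Usage:
#   wait_until 30 2 apiserver_healthy && echo "kube-apiserver is up" \
#       || journalctl -u kube-apiserver --no-pager | tail -n 20
```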

6. Install the node

6.1 Install docker

    sudo yum install -y yum-utils device-mapper-persistent-data lvm2
    sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    sudo yum makecache fast
    sudo yum -y install docker-ce
    sudo systemctl start docker

6.2 Extract the node package

    tar zxvf kubernetes-node-linux-amd64.tar.gz
    mkdir -p /opt/kubernetes/{bin,cfg}
    mv kubernetes/node/bin/{kubelet,kube-proxy} /opt/kubernetes/bin/
    chmod +x /opt/kubernetes/bin/*

6.3 Create the configuration files

vim /opt/kubernetes/cfg/kubelet.kubeconfig

    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        server: http://192.168.8.201:8080
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
      name: default-context
    current-context: default-context

vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        server: http://192.168.8.201:8080
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
      name: default-context
    current-context: default-context
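The two kubeconfig files have identical content, so they can also be generated from one template instead of being typed twice in vim. A minimal sketch, where `write_kubeconfig` is a made-up helper that takes the target path and the API server URL:

```shell
#!/bin/bash
# write_kubeconfig PATH SERVER_URL
# Writes the same minimal kubeconfig used in this section to PATH.
write_kubeconfig() {
    local path="$1" server="$2"
    cat >"$path" <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: ${server}
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
  name: default-context
current-context: default-context
EOF
}

# Usage on the node:
#   write_kubeconfig /opt/kubernetes/cfg/kubelet.kubeconfig    http://192.168.8.201:8080
#   write_kubeconfig /opt/kubernetes/cfg/kube-proxy.kubeconfig http://192.168.8.201:8080
```

Here the unquoted heredoc delimiter is intentional, because `${server}` should be substituted when the file is written.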

    cat <<EOF >/opt/kubernetes/cfg/kubelet
    KUBELET_OPTS="--logtostderr=true \
    --v=4 \
    --address=192.168.8.202 \
    --hostname-override=192.168.8.202 \
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
    --allow-privileged=true \
    --cluster-dns=10.10.10.2 \
    --cluster-domain=cluster.local \
    --fail-swap-on=false \
    --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"
    EOF

    cat <<'EOF' >/usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    Requires=docker.service
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kubelet
    ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS
    Restart=on-failure
    KillMode=process
    [Install]
    WantedBy=multi-user.target
    EOF

    cat <<EOF >/opt/kubernetes/cfg/kube-proxy
    KUBE_PROXY_OPTS="--logtostderr=true \
    --v=4 \
    --hostname-override=192.168.8.202 \
    --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    EOF

    cat <<'EOF' >/usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Proxy
    After=network.target
    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
    ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF

6.4 Start kube-proxy and kubelet

vim ku.sh

    #!/bin/bash
    systemctl daemon-reload
    systemctl enable kubelet
    systemctl restart kubelet
    systemctl enable kube-proxy
    systemctl restart kube-proxy

The node installation is now complete. Check whether it succeeded.

If it failed, check the logs:

journalctl -u kubelet

7. Verify on the master that the node has registered

Check the cluster health status.

The master and the node are now installed successfully.

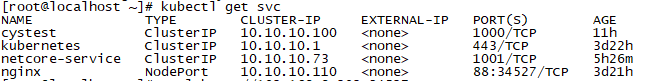
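The checks in this step can also be run from a script rather than read off the screen. The sketch below counts nodes whose STATUS column is exactly `Ready` in the output of `kubectl get nodes --no-headers`. `count_ready_nodes` is a helper name invented here, and it assumes the default column layout in which STATUS is the second column.

```shell
#!/bin/bash
# Reads "kubectl get nodes --no-headers" output on stdin and prints how many
# nodes have STATUS exactly "Ready" (a cordoned node such as
# "Ready,SchedulingDisabled" is deliberately not counted).
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | count_ready_nodes
#   kubectl get componentstatuses     # health of scheduler, controller-manager, etcd
```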

8. Run an nginx example

    kubectl run nginx --image=nginx --replicas=3
    kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

Verify

Open it in a browser
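Because the service is of type NodePort, the port to open in the browser is the one Kubernetes picked from the node port range, not 88. A small sketch of how it might be looked up: `node_port_of` is a made-up helper that extracts the node port from a `PORT(S)` value as printed by `kubectl get svc`, and the port number 31234 in the comments is purely illustrative.

```shell
#!/bin/bash
# node_port_of PORTS
# Extracts the node port from a PORT(S) value such as "88:31234/TCP".
# For a multi-port service only the first mapping is returned.
node_port_of() {
    local ports="$1"
    ports="${ports#*:}"          # drop everything up to the first colon
    printf '%s\n' "${ports%%/*}" # drop the protocol suffix
}

# Usage on the master (PORT(S) is the fifth column of "kubectl get svc"):
#   ports="$(kubectl get svc nginx --no-headers | awk '{print $5}')"
#   curl -I "http://192.168.8.202:$(node_port_of "$ports")"
# Alternatively, ask kubectl for the field directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```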

9. Install the dashboard

vim kube.yaml

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      labels:
        app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: kubernetes-dashboard
      template:
        metadata:
          labels:
            app: kubernetes-dashboard
          annotations:
            scheduler.alpha.kubernetes.io/tolerations: |
              [
                {
                  "key": "dedicated",
                  "operator": "Equal",
                  "value": "master",
                  "effect": "NoSchedule"
                }
              ]
        spec:
          containers:
          - name: kubernetes-dashboard
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.7.0
            imagePullPolicy: Always
            ports:
            - containerPort: 9090
              protocol: TCP
            args:
            - --apiserver-host=http://192.168.8.201:8080
            livenessProbe:
              httpGet:
                path: /
                port: 9090
              initialDelaySeconds: 30
              timeoutSeconds: 30
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 80
        targetPort: 9090
      selector:
        app: kubernetes-dashboard

Create the resources:

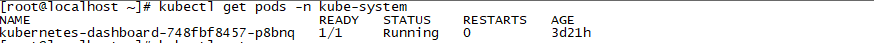

kubectl create -f kube.yaml

View the pod

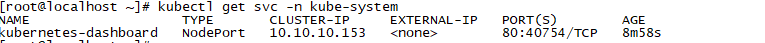

View the port

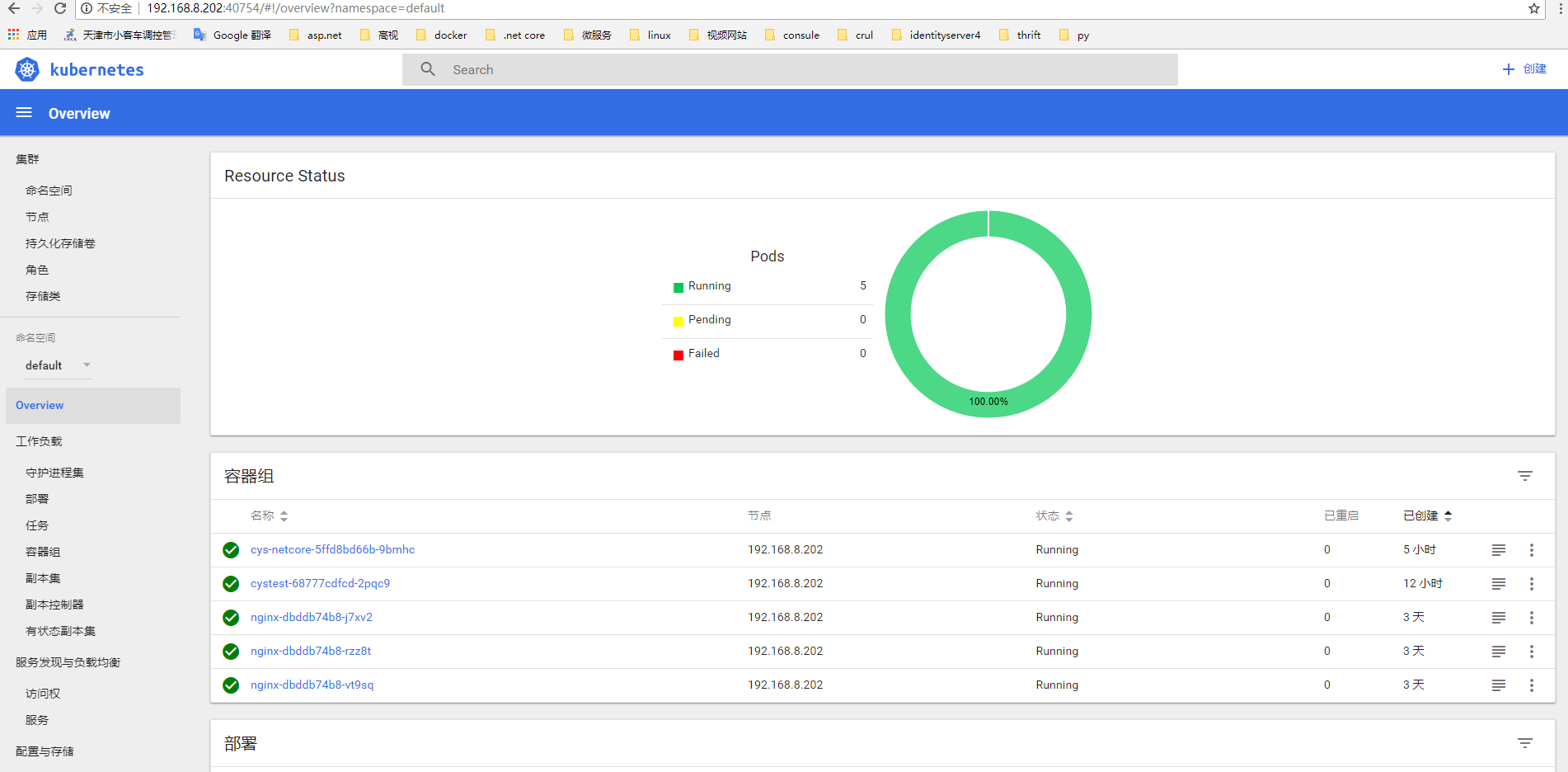

Access the dashboard

The cluster setup is now complete.