A few examples from the official Kafka website:

Environment: a Kafka cluster, plus Eclipse on Windows 10

pom.xml

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.12</artifactId>
    <version>1.0.0</version>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-streams</artifactId>
    <version>1.0.0</version>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
    <version>1.0.0</version>
</dependency>
```

1. Pipe

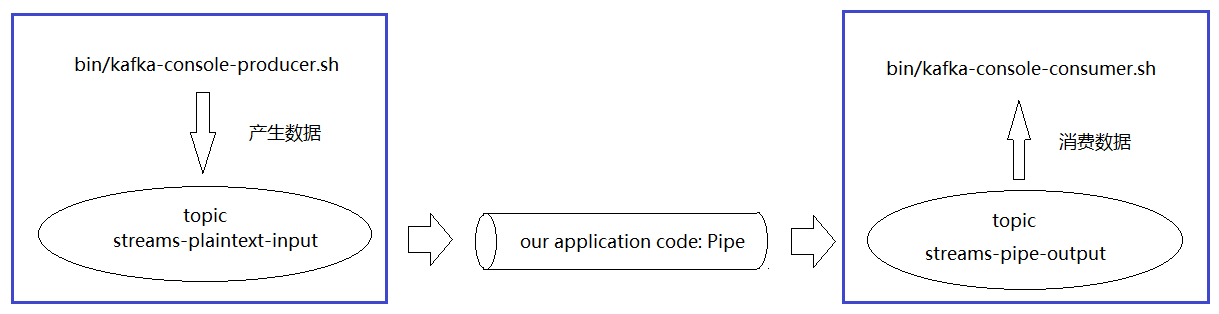

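This example reads text written to the topic streams-plaintext-input, and the code we write forwards it unchanged to the topic streams-pipe-output.

Kafka creates the two topics streams-plaintext-input and streams-pipe-output automatically when they are first used, provided the brokers run with auto.create.topics.enable=true (the default).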

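You can inspect the topics with:

```
bin/kafka-topics.sh --describe --zookeeper node1,node2,node3
```

Without --topic, this describes every topic in the cluster, including its partitions and replicas.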

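The data flow looks like this:

The parts shown in blue in the figure are typed by hand in a shell. The commands are as follows (open two PuTTY sessions):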

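In the first PuTTY session, start a console producer that writes each typed line to streams-plaintext-input:

```
bin/kafka-console-producer.sh --broker-list node1:9092,node2:9092,node3:9092 --topic streams-plaintext-input
```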

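In the second PuTTY session, start a console consumer that prints what arrives in streams-pipe-output, i.e. the producer's input after it has passed through the Pipe application (--from-beginning also prints records written before the consumer started):

```
bin/kafka-console-consumer.sh --bootstrap-server node3:9092 --from-beginning --topic streams-pipe-output
```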
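To make the data flow concrete without a cluster, here is a toy in-memory model in plain Java. It is only an illustration, not Kafka code: each topic is modeled as a `LinkedBlockingQueue`, and the class name `PipeFlowModel` and the `END` marker are hypothetical. Real topics are partitioned, persistent logs with no end marker.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class PipeFlowModel {
    // Hypothetical end-of-input marker for this toy model; real Kafka topics have no such marker.
    static final String END = "__END__";

    // Models producer -> streams-plaintext-input -> Pipe -> streams-pipe-output -> consumer,
    // and returns the values the consumer would print.
    static List<String> run(List<String> typedLines) throws InterruptedException {
        BlockingQueue<String> plaintextInput = new LinkedBlockingQueue<>();
        BlockingQueue<String> pipeOutput = new LinkedBlockingQueue<>();

        // The Pipe application: take each value from the input "topic" and forward it unchanged.
        Thread pipe = new Thread(() -> {
            try {
                String record;
                while (!(record = plaintextInput.take()).equals(END)) {
                    pipeOutput.put(record);
                }
                pipeOutput.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "pipe");
        pipe.start();

        // The console producer: every typed line becomes one record.
        for (String line : typedLines) {
            plaintextInput.put(line);
        }
        plaintextInput.put(END);

        // The console consumer: read the output "topic" until the end marker.
        List<String> consumed = new ArrayList<>();
        String received;
        while (!(received = pipeOutput.take()).equals(END)) {
            consumed.add(received);
        }
        pipe.join();
        return consumed;
    }

    public static void main(String[] args) throws InterruptedException {
        // prints [hello kafka streams, all streams lead to kafka]
        System.out.println(run(Arrays.asList("hello kafka streams", "all streams lead to kafka")));
    }
}
```

The model preserves order end to end; in real Kafka, ordering is guaranteed only within a single partition.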

Java code (runs in Eclipse on Windows 10):

```java
package ex.pipe;

import java.util.Properties;
import java.util.concurrent.CountDownLatch;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.Topology;
import org.apache.kafka.streams.kstream.KStream;

public class Pipe {

    public static void main(String[] args) throws Exception {
        Properties config = new Properties();
        config.put(StreamsConfig.APPLICATION_ID_CONFIG, "stream-pipe");
        // specify a list of host/port pairs used to connect to the Kafka cluster
        config.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,
                "192.168.1.121:9092,192.168.1.122:9092,192.168.1.123:9092");
        // keys and values are read and written as Strings
        config.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        config.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        final StreamsBuilder builder = new StreamsBuilder();

        // a source stream from the Kafka topic named streams-plaintext-input
        KStream<String, String> source = builder.stream("streams-plaintext-input");

        // write every record unchanged to the streams-pipe-output topic
        source.to("streams-pipe-output");

        // the two lines above can be concatenated into a single line:
        // builder.stream("streams-plaintext-input").to("streams-pipe-output");

        // inspect what kind of topology is created from this builder
        final Topology topology = builder.build();
        System.out.println(topology.describe());

        final KafkaStreams streams = new KafkaStreams(topology, config);
        final CountDownLatch latch = new CountDownLatch(1);

        // on JVM shutdown (e.g. Ctrl+C), close the streams client and release the main thread
        Runtime.getRuntime().addShutdownHook(new Thread("streams-shutdown-hook") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        try {
            streams.start();
            latch.await();
        } catch (Throwable e) {
            System.exit(1);
        }
        System.exit(0);
    }
}
```
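The end of `main` uses a common shutdown pattern: the main thread blocks on a `CountDownLatch`, and a JVM shutdown hook closes the streams client and then counts the latch down. The plain-Java sketch below isolates that pattern. `FakeStreams` is a hypothetical stand-in for `KafkaStreams`, and the hook thread is started by hand to simulate a shutdown instead of being registered with `Runtime.getRuntime().addShutdownHook`.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

public class LatchShutdownDemo {
    // Hypothetical stand-in for KafkaStreams: it only tracks whether it is running.
    static final class FakeStreams {
        private final AtomicBoolean running = new AtomicBoolean(false);
        void start() { running.set(true); }
        void close() { running.set(false); }
        boolean isRunning() { return running.get(); }
    }

    // Same body as the shutdown hook in Pipe: close first, then release the waiting main thread.
    static Thread shutdownHook(FakeStreams streams, CountDownLatch latch) {
        return new Thread(() -> {
            streams.close();
            latch.countDown();
        }, "streams-shutdown-hook");
    }

    public static void main(String[] args) throws InterruptedException {
        FakeStreams streams = new FakeStreams();
        CountDownLatch latch = new CountDownLatch(1);
        Thread hook = shutdownHook(streams, latch);

        streams.start();
        // Simulate Ctrl+C by running the hook thread directly (it is not registered with the JVM here).
        hook.start();

        boolean released = latch.await(5, TimeUnit.SECONDS);
        // prints released=true, running=false
        System.out.println("released=" + released + ", running=" + streams.isRunning());
    }
}
```

Calling `close()` before `countDown()` means the main thread only continues once the streams client has been shut down.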