I. Installing Logstash

1.1 Download the package

[root@node1 ~]# cd /usr/local/src/

[root@node1 src]# wget https://artifacts.elastic.co/downloads/logstash/logstash-7.4.2.tar.gz

[root@node1 src]# tar -xf logstash-7.4.2.tar.gz

[root@node1 src]# mv logstash-7.4.2 /usr/local/logstash

[root@node1 src]# cd /usr/local/logstash

1.2 Check the Java environment

[root@node1 logstash]# java -version

    openjdk version "1.8.0_232"
    OpenJDK Runtime Environment (build 1.8.0_232-b09)
    OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode)

1.3 A quick startup test

A minimal Logstash example:

[root@node1 logstash]# ./bin/logstash -e 'input {stdin {} } output { stdout {}}'

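Startup is relatively slow.

Once it is running, anything you type on the console is printed back to the console as an event. This pipeline has no filter section, so no filtering is done.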
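The same pipeline can also be saved to a file and started with `-f`, which is how the later sections run Logstash. This is a minimal sketch. The file name `stdin-stdout.conf` is hypothetical, and `codec => rubydebug` is spelled out even though the output above suggests `stdout` already uses it by default:

```conf
# stdin-stdout.conf (hypothetical file name)
input {
  stdin { }                       # one event per line typed on the console
}

output {
  stdout { codec => rubydebug }   # print each event as a Ruby-style hash
}
```

Start it with `bin/logstash -f stdin-stdout.conf`. Adding `--config.test_and_exit` only checks the file's syntax and then exits. Given how slowly Logstash starts, that is a quicker way to catch typos.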

    hello
    {
        "@timestamp" => 2019-11-30T06:38:30.431Z,
          "@version" => "1",
              "host" => "node1",
           "message" => "hello"
    }
    nihao
    {
        "@timestamp" => 2019-11-30T06:39:23.965Z,
          "@version" => "1",
              "host" => "node1",
           "message" => "nihao"
    }
    logstash test
    {
        "@timestamp" => 2019-11-30T06:39:36.067Z,
          "@version" => "1",
              "host" => "node1",
           "message" => "logstash test"
    }

II. Configuring Logstash

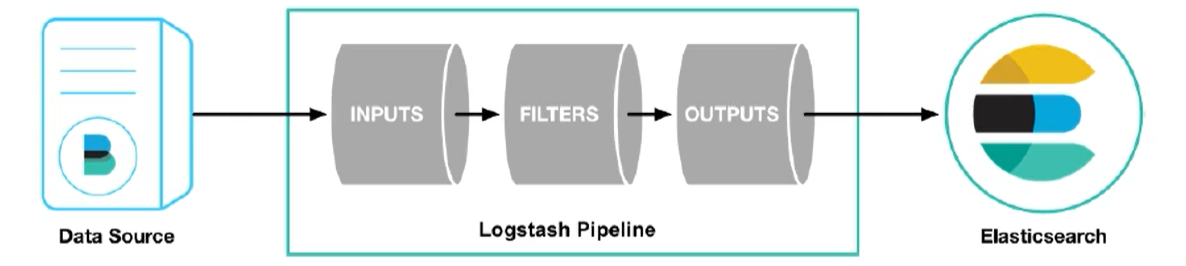

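2.1 Structure of a Logstash configuration file

A Logstash configuration file has a separate section for each type of plugin you want to add to the event processing pipeline: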

    # This is a comment. You should use comments to describe
    # parts of your configuration.
    input {              # input
      stdin { ... }      # standard input
    }

    filter {             # filtering: parse, extract, and otherwise process the data
      ...
    }

    output {             # output
      stdout { ... }     # standard output
    }

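Each section contains the configuration options for one or more plugins. If you specify multiple filters, they are applied in the order in which they appear in the configuration file.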
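Because filters run from top to bottom, a later filter can rely on what an earlier one produced. The sketch below shows that ordering with two filters used later in this article. The field name `first_part` is hypothetical:

```conf
filter {
  # Runs first: turns "a|b|c" in message into the array ["a", "b", "c"]
  mutate { split => { "message" => "|" } }

  # Runs second: message is already an array here,
  # so [message][0] refers to its first element
  mutate { add_field => { "first_part" => "%{[message][0]}" } }
}
```

If the two blocks were swapped, `add_field` would run while `message` is still a single string, so the reference would not pick out the first segment.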

2.2 插件配置

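A plugin's configuration consists of the plugin name followed by a block of settings for that plugin. For example, this input section configures two file inputs: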

    input {
      file {
        path => "/var/log/messages"
        type => "syslog"
      }
      file {
        path => "/var/log/apache/access.log"
        type => "apache"
      }
    }

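The `codec` setting names the Logstash codec used to represent the data. Codecs can be used in both inputs and outputs.

An input codec is a convenient way to decode your data before it enters the input. An output codec is a convenient way to encode your data before it leaves the output. With an input or output codec, you don't need a separate filter in your Logstash pipeline.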

codec => "json"
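The sketch below uses codecs at both ends of a pipeline. It assumes input arrives on stdin as one JSON document per line. `json_lines` is another codec shipped with Logstash and is used here only for illustration:

```conf
input {
  # Decode each incoming line as JSON; its keys become event fields,
  # so no separate json filter is needed
  stdin { codec => "json" }
}

output {
  # Encode each event as one line of JSON instead of the rubydebug format
  stdout { codec => "json_lines" }
}
```

Typing `{"user": "kurt", "response": 301}` should then produce an event with `user` and `response` fields. A line that is not valid JSON is kept in `message` and tagged `_jsonparsefailure`.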

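2.3 An example from the official documentation

The following examples show how to configure Logstash to filter events, process Apache logs and syslog messages, and use conditionals to control which events are processed by a filter or an output.

Configuring filters

Filters are an in-line processing mechanism that lets you slice and dice your data to fit your needs. Let's look at a few filters in action. The following configuration file sets up the grok and date filters: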

[root@node1 logstash]# vi logstash-filter.conf

    input { stdin { } }

    filter {
      grok {
        match => { "message" => "%{COMBINEDAPACHELOG}" }
      }
      date {
        match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
    }

    output {
      elasticsearch { hosts => ["localhost:9200"] }
      stdout { codec => rubydebug }
    }

Run it:

[root@node1 logstash]# bin/logstash -f logstash-filter.conf

Paste the following line into your terminal and press Enter. It will be processed by the stdin input:

127.0.0.1 - - [11/Dec/2013:00:01:45 -0800] "GET /xampp/status.php HTTP/1.1" 200 3891 "http://cadenza/xampp/navi.php" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.9; rv:25.0) Gecko/20100101 Firefox/25.0"

You should see something like the following returned to stdout:

    127.0.0.1 - - [11/Dec/2013:00:01:45 -0800] "GET /xampp/status.php HTTP/1.1" 200 3891 "http://cadenza/xampp/navi.php" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.9; rv:25.0) Gecko/20100101 Firefox/25.0"
    {
           "clientip" => "127.0.0.1",
               "auth" => "-",
               "verb" => "GET",
            "request" => "/xampp/status.php",
              "bytes" => "3891",
               "host" => "node1",
         "@timestamp" => 2013-12-11T08:01:45.000Z,
            "message" => "127.0.0.1 - - [11/Dec/2013:00:01:45 -0800] \"GET /xampp/status.php HTTP/1.1\" 200 3891 \"http://cadenza/xampp/navi.php\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10.9; rv:25.0) Gecko/20100101 Firefox/25.0\"",
           "@version" => "1",
           "response" => "200",
              "ident" => "-",
        "httpversion" => "1.1",
           "referrer" => "\"http://cadenza/xampp/navi.php\"",
              "agent" => "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10.9; rv:25.0) Gecko/20100101 Firefox/25.0\"",
          "timestamp" => "11/Dec/2013:00:01:45 -0800"
    }

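As you can see, Logstash (with help from the grok filter) parsed the log line, which happens to be in the Apache "combined log" format, and broke it into many discrete pieces of information. The date filter also replaced `@timestamp` with the time taken from the log line, converted to UTC: 00:01:45 at -0800 becomes 2013-12-11T08:01:45.000Z. This becomes very useful once you start querying and analyzing your log data. For example, you can easily run reports on HTTP response codes, IP addresses, referrers, and so on. Logstash ships with many ready-made grok patterns. If you need to parse a common log format, it is quite likely that someone has already done the work.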
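Named patterns such as `%{COMBINEDAPACHELOG}` are built from smaller ones using the syntax `%{PATTERN:field}`. The sketch below shows how that composition works with a hand-written, simplified approximation of the Apache common log format. The shipped `COMMONAPACHELOG`/`COMBINEDAPACHELOG` definitions are more lenient (for example, they also accept `-` as the byte count), so prefer them in practice:

```conf
filter {
  grok {
    # Simplified approximation, not the shipped pattern.
    # Single quotes let the double quotes around the request line stay unescaped.
    # The referrer and user agent at the end of the line are not captured.
    match => {
      "message" => '%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{NOTSPACE:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response} %{NUMBER:bytes}'
    }
  }
}
```

On the sample line above, this should extract the same `clientip`, `verb`, `request`, `response`, and `bytes` values as the full pattern.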

2.4 Processing Apache logs

[root@node1 logstash]# vi logstash-apache.conf

    input {
      file {
        path => "/tmp/access_log"
        start_position => "beginning"
      }
    }

    filter {
      if [path] =~ "access" {
        mutate { replace => { "type" => "apache_access" } }
        grok {
          match => { "message" => "%{COMBINEDAPACHELOG}" }
        }
      }
      date {
        match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
    }

    output {
      elasticsearch {
        hosts => ["localhost:9200"]
      }
      stdout { codec => rubydebug }
    }
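The `if [path] =~ "access"` condition above only guards filters, but the same conditional syntax also works in the output section. Below is a sketch of a variant output section, not the one run below. It sends only events tagged `apache_access` to Elasticsearch and just prints everything else:

```conf
output {
  if [type] == "apache_access" {
    # Only events whose type was set by the mutate/replace filter
    elasticsearch { hosts => ["localhost:9200"] }
  } else {
    # Everything else, e.g. lines from a path not containing "access"
    stdout { codec => rubydebug }
  }
}
```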

Run it:

[root@node1 logstash]# bin/logstash -f logstash-apache.conf

    [2019-11-30T02:28:42,685][INFO ][filewatch.observingtail ][main] START, creating Discoverer, Watch with file and sincedb collections
    [2019-11-30T02:28:43,130][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Then feed it the following log entries (or some from your own web server):

    71.141.244.242 - kurt [18/May/2011:01:48:10 -0700] "GET /admin HTTP/1.1" 301 566 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3"
    134.39.72.245 - - [18/May/2011:12:40:18 -0700] "GET /favicon.ico HTTP/1.1" 200 1189 "-" "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; InfoPath.2; .NET4.0C; .NET4.0E)"
    98.83.179.51 - - [18/May/2011:19:35:08 -0700] "GET /css/main.css HTTP/1.1" 200 1837 "http://www.safesand.com/information.htm" "Mozilla/5.0 (Windows NT 6.0; WOW64; rv:2.0.1) Gecko/20100101 Firefox/4.0.1"

Append them to /tmp/access_log:

[root@node1 ~]# echo '71.141.244.242 - kurt [18/May/2011:01:48:10 -0700] "GET /admin HTTP/1.1" 301 566 "-" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.9.2.3) Gecko/20100401 Firefox/3.6.3"' >> /tmp/access_log

[root@node1 ~]# echo '134.39.72.245 - - [18/May/2011:12:40:18 -0700] "GET /favicon.ico HTTP/1.1" 200 1189 "-" "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; InfoPath.2; .NET4.0C; .NET4.0E)"' >> /tmp/access_log

[root@node1 ~]# echo '98.83.179.51 - - [18/May/2011:19:35:08 -0700] "GET /css/main.css HTTP/1.1" 200 1837 "http://www.safesand.com/information.htm" "Mozilla/5.0 (Windows NT 6.0; WOW64; rv:2.0.1) Gecko/20100101 Firefox/4.0.1"' >> /tmp/access_log

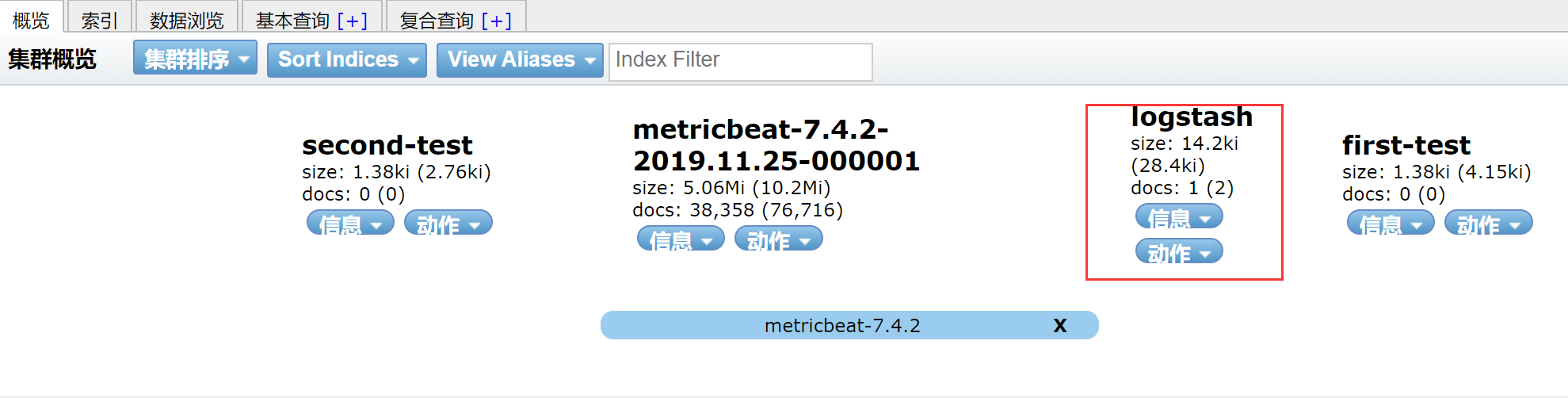

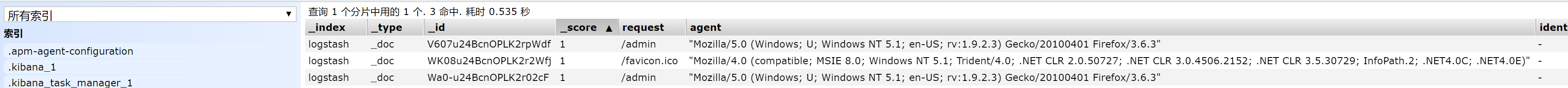

Check the data that was just indexed. The raw document of one of the events looks like this:

    {
      "_index": "logstash",
      "_type": "_doc",
      "_id": "WK08u24BcnOPLK2r2Wfj",
      "_version": 1,
      "_score": 1,
      "_source": {
        "request": "/favicon.ico",
        "agent": "\"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; InfoPath.2; .NET4.0C; .NET4.0E)\"",
        "ident": "-",
        "clientip": "134.39.72.245",
        "verb": "GET",
        "type": "apache_access",
        "path": "/tmp/access_log",
        "@version": "1",
        "message": "134.39.72.245 - - [18/May/2011:12:40:18 -0700] \"GET /favicon.ico HTTP/1.1\" 200 1189 \"-\" \"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; InfoPath.2; .NET4.0C; .NET4.0E)\"",
        "timestamp": "18/May/2011:12:40:18 -0700",
        "bytes": "1189",
        "referrer": "\"-\"",
        "auth": "-",
        "httpversion": "1.1",
        "response": "200",
        "host": "node1",
        "@timestamp": "2011-05-18T19:40:18.000Z"
      }
    }
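The `filewatch` log line printed at startup mentions sincedb. The file input records how far it has read each file, and `start_position => "beginning"` only applies to files it has not seen before. When the same file is reused across experiments, lines that were already read are not processed again. That happens with `/tmp/access_log` in the next section. The sketch below is an input meant for repeated tests only; it discards that bookkeeping:

```conf
input {
  file {
    path => "/tmp/access_log"
    start_position => "beginning"
    # Testing only: do not persist read positions, so each restart
    # reads /tmp/access_log from the beginning again
    sincedb_path => "/dev/null"
  }
}
```

Leave `sincedb_path` at its default in production. Otherwise every restart re-ingests the whole file.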

The official documentation has many more examples. I will work through them gradually later.

III. Reading logs with a custom structure

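Earlier we used Filebeat to read nginx logs. Logs with a custom structure have to be parsed before they are usable, and this is where Logstash comes in: its processing capabilities can handle a wide variety of scenarios.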

3.1 Log structure

2019-03-15 21:21:21 ERROR|读取数据出错|参数:id=1002

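As you can see, the fields in this log line are separated by "|". In English they read roughly "ERROR | failed to read data | parameters: id=1002". We therefore need to split the data when processing it.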
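Because the delimiters are fixed, the dissect filter included in the default Logstash distribution is an alternative to the mutate `split` used below. It cuts the line at literal delimiters and names each piece directly. Here is a sketch for the sample line, with hypothetical field names:

```conf
filter {
  dissect {
    # Delimiters, in order: " ", " ", "|", "|" (copied from the sample line)
    mapping => {
      "message" => "%{date} %{time} %{level}|%{result}|%{params}"
    }
  }
}
```

For the line above, this should give `date` = `2019-03-15`, `time` = `21:21:21`, `level` = `ERROR`, `result` = `读取数据出错`, and `params` = `参数:id=1002`. The `message` field itself stays a string.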

3.2 Split the log with a configuration file

[root@node1 logstash]# vi pipeline.conf

    input {
      file {
        path => "/tmp/access_log"
        start_position => "beginning"
      }
    }

    filter {
      mutate {
        split => { "message" => "|" }
      }
    }

    output {
      stdout { codec => rubydebug }
    }

Start the test:

[root@node1 logstash]# bin/logstash -f pipeline.conf

    [2019-11-30T03:19:10,634][INFO ][filewatch.observingtail ][main] START, creating Discoverer, Watch with file and sincedb collections
    [2019-11-30T03:19:11,079][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    /usr/local/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

The `Fixnum is deprecated` warning comes from the bundled awesome_print gem, which the rubydebug codec uses for printing. It does not affect the pipeline.

Append a log line:

[root@node1 ~]# echo "2019-03-15 21:21:21 ERROR|读取数据出错|参数:id=1002" >>/tmp/access_log

Output:

    {
           "message" => [
            [0] "2019-03-15 21:21:21 ERROR",
            [1] "读取数据出错",
            [2] "参数:id=1002"
        ],
          "@version" => "1",
              "host" => "node1",
        "@timestamp" => 2019-11-30T08:20:06.666Z,
              "path" => "/tmp/access_log"
    }

3.3 Give the split fields names

[root@node1 logstash]# vi pipeline.conf

    input {
      file {
        path => "/tmp/access_log"
        start_position => "beginning"
      }
    }

    filter {
      mutate {
        split => { "message" => "|" }
      }
      mutate {
        add_field => {
          "Time"   => "%{message[0]}"
          "result" => "%{message[1]}"
          "userID" => "%{message[2]}"
        }
      }
    }

    output {
      stdout { codec => rubydebug }
    }

[root@node1 logstash]# bin/logstash -f pipeline.conf

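This configuration produces the error below, and the added fields never appear. The cause is that `message[0]` is not valid field-reference syntax, so the mutate filter rejects it: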

[2019-11-30T04:13:11,739][WARN ][logstash.filters.mutate ][main] Exception caught while applying mutate filter {:exception=>"Invalid FieldReference: `message[0]`"}

Modify pipeline.conf as follows, addressing the array elements with the field-reference syntax `[message][0]`, `[message][1]`, and so on:

    input {
      file {
        path => "/tmp/access_log"
        start_position => "beginning"
      }
    }

    filter {
      mutate {
        split => { "message" => "|" }
      }
      mutate {
        add_field => {
          "Date"   => "%{[message][0]}"
          "Level"  => "%{[message][1]}"
          "result" => "%{[message][2]}"
          "userID" => "%{[message][3]}"
        }
      }
    }

    output {
      stdout { codec => rubydebug }
    }

Run it:

[root@node1 logstash]# bin/logstash -f pipeline.conf

Append a log line. This time the timestamp is also followed by "|", so the message splits into four parts:

[root@node1 ~]# echo "2019-03-15 21:21:21| ERROR|读取数据出错|参数:id=1002" >>/tmp/access_log

Console output:

    {
              "path" => "/tmp/access_log",
          "@version" => "1",
              "Date" => "2019-03-15 21:21:21",
              "host" => "node1",
           "message" => [
            [0] "2019-03-15 21:21:21",
            [1] " ERROR",
            [2] "读取数据出错",
            [3] "参数:id=1002"
        ],
        "@timestamp" => 2019-11-30T09:18:19.569Z,
             "Level" => " ERROR",
            "result" => "读取数据出错",
            "userID" => "参数:id=1002"
    }
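Two details in this output may need more work. The level value keeps its leading space (`" ERROR"`), and `@timestamp` is still the time Logstash read the line, not the time in the log. The sketch below extends the filter section to handle both. It assumes the log times are China Standard Time; `Asia/Shanghai` is an assumption, since the log line carries no time zone:

```conf
filter {
  mutate { split => { "message" => "|" } }
  mutate {
    add_field => {
      "Date"   => "%{[message][0]}"
      "Level"  => "%{[message][1]}"
      "result" => "%{[message][2]}"
      "userID" => "%{[message][3]}"
    }
  }
  # Separate block: add_field is applied only after a mutate block's own
  # operations, so strip must come in a later block to see the new field
  mutate { strip => ["Level"] }

  # Parse Date into @timestamp; the zone is assumed, not read from the log
  date {
    match    => ["Date", "yyyy-MM-dd HH:mm:ss"]
    timezone => "Asia/Shanghai"
  }
}
```

Under that assumption, `2019-03-15 21:21:21` should be stored as `@timestamp` 2019-03-15T13:21:21.000Z, since Logstash keeps timestamps in UTC.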

That's all for the Logstash experiments for now. Later I will combine these pieces into a more complete experiment.