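Introduction: An earlier post gave a brief overview of Ceph, but it covered too much ground to be concrete. This post uses ceph-deploy to deploy a Ceph cluster and carries out some operations and maintenance tasks, to get a deeper understanding of how Ceph works and of its workflow.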

I. Environment preparation

The test runs on virtual machines with a minimal install of CentOS 7.6: CentOS Linux release 7.6.1810 (Core)

1.1 Host planning:

| Node | Role | IP | CPU | Memory |
|---|---|---|---|---|
| ceph1 | Deployment and admin node | 172.25.254.130 | 2 C | 4 G |
| ceph2 | Monitor, OSD | 172.25.254.131 | 2 C | 4 G |
| ceph3 | OSD | 172.25.254.132 | 2 C | 4 G |
| ceph4 | OSD | 172.25.254.133 | 2 C | 4 G |
| ceph5 | client | 172.25.254.134 | 2 C | 4 G |

1.2 功能实现

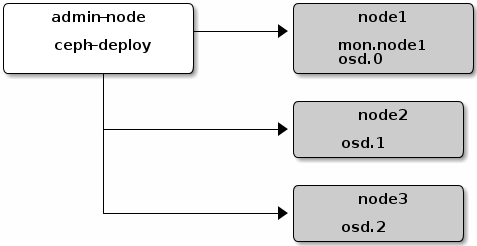

Ceph集群:monitor、manager、osd*3

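1.3 Host preparation

Do the following on every node.

Set the hostname and install the required software

hostnamectl set-hostname username #replace username with this node's hostname, e.g. ceph1

hostname username

yum install -y net-tools wget vim

yum update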

Configure the Aliyun mirrors

rm -f /etc/yum.repos.d/*

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

sed -i '/aliyuncs.com/d' /etc/yum.repos.d/*.repo

echo '#Aliyun Ceph repo
[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64/
gpgcheck=0
[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch/
gpgcheck=0
[ceph-source]
name=ceph-source
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS/
gpgcheck=0
#'>/etc/yum.repos.d/ceph.repo

yum clean all && yum makecache

yum install deltarpm
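The repo file above hard-codes the release name in three places, so switching releases means editing each one. As a minimal sketch, the same file can be generated from a single release argument. The function name `ceph_repo` and its arguments are hypothetical helpers, and the repo `name=` values are simplified to match the section ids.

```shell
# Print a ceph.repo file on stdout.
# $1: Ceph release name (default: luminous, as used in this post)
# $2: mirror base URL   (default: the Aliyun mirror used in this post)
ceph_repo() {
    release="${1:-luminous}"
    mirror="${2:-http://mirrors.aliyun.com/ceph}"
    # Each pair is "<repo id>:<directory under el7/>".
    for pair in ceph:x86_64 ceph-noarch:noarch ceph-source:SRPMS; do
        id="${pair%%:*}"
        dir="${pair##*:}"
        printf '[%s]\nname=%s\nbaseurl=%s/rpm-%s/el7/%s/\ngpgcheck=0\n\n' \
            "$id" "$id" "$mirror" "$release" "$dir"
    done
}

# Preview only; as root, redirect to /etc/yum.repos.d/ceph.repo to apply.
ceph_repo luminous
```

Printing to stdout first makes it easy to review the result before overwriting anything under /etc/yum.repos.d.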

Configure time synchronization

yum install ntp ntpdate ntp-doc

II. Deployment node preparation

2.1 Configure hostname resolution on the deployment node

[root@ceph1 ~]# vi /etc/hosts

172.25.254.130 ceph1

172.25.254.131 ceph2

172.25.254.132 ceph3

172.25.254.133 ceph4

172.25.254.134 ceph5

172.25.254.135 ceph6
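Because the addresses follow a simple pattern (cephN is 172.25.254.129+N), the entries can also be generated rather than typed by hand. This is a small sketch; `hosts_entries` is a hypothetical helper and the defaults mirror the host plan in section 1.1.

```shell
# Print /etc/hosts entries for ceph1..cephN.
# $1: number of nodes (default 5)
# $2: first three octets of the subnet (default 172.25.254)
# $3: last octet of ceph1 (default 130)
hosts_entries() {
    count="${1:-5}"
    prefix="${2:-172.25.254}"
    first="${3:-130}"
    i=1
    while [ "$i" -le "$count" ]; do
        printf '%s.%d ceph%d\n' "$prefix" "$((first + i - 1))" "$i"
        i=$((i + 1))
    done
}

# Preview; as root, append with: hosts_entries 5 >> /etc/hosts
hosts_entries 5
```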

2.2 Configure passwordless login from the deployment node to all OSD nodes

[root@ceph1 ~]# useradd cephuser #create a non-root user

[root@ceph1 ~]# echo redhat | passwd --stdin cephuser

[root@ceph1 ~]# for i in {2..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'useradd -d /home/cephuser -m cephuser; echo "redhat" | passwd --stdin cephuser'; done #create the cephuser user on all OSD nodes

[root@ceph1 ~]# for i in {1..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'echo "cephuser ALL = (root) NOPASSWD:ALL" > /etc/sudoers.d/cephuser'; done

[root@ceph1 ~]# for i in {1..5}; do echo "====ceph${i}====";ssh root@ceph${i} 'chmod 0440 /etc/sudoers.d/cephuser'; done

2.3 Set SELinux to permissive and disable the firewall

[root@ceph1 ~]# sed -i '/^SELINUX=.*/c SELINUX=permissive' /etc/selinux/config

Leave SELINUXTYPE at its default (targeted): it selects the policy rather than the mode, and disabled is not a valid value for it.

[root@ceph1 ~]# grep --color=auto '^SELINUX' /etc/selinux/config

SELINUX=permissive

SELINUXTYPE=targeted

[root@ceph1 ~]# setenforce 0
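A misspelled mode in /etc/selinux/config is easy to miss, since sed writes whatever it is given. The sketch below validates the mode before editing and touches only the SELINUX= line. `set_selinux_mode` is a hypothetical helper, and `sed -i` is used in its GNU form, as shipped on CentOS.

```shell
# Set the SELinux mode in a config file, rejecting unknown mode names.
# $1: mode (enforcing, permissive or disabled)
# $2: config file (default /etc/selinux/config)
set_selinux_mode() {
    mode="$1"
    file="${2:-/etc/selinux/config}"
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
    esac
    # "^SELINUX=" does not match the SELINUXTYPE= line, which is left alone.
    sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}

# Demonstration on a scratch copy instead of the real config file.
tmp=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$tmp"
set_selinux_mode permissive "$tmp"
cat "$tmp"
rm -f "$tmp"
```

On a real node the call would be `set_selinux_mode permissive` as root, followed by `setenforce 0` for the running system.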

[root@ceph1 ~]# firewall-cmd --list-all

public (active)

target: default

icmp-block-inversion: no

interfaces: ens33

sources:

services: ssh dhcpv6-client

ports:

protocols:

masquerade: no

forward-ports:

source-ports:

icmp-blocks:

rich rules:

[root@ceph1 ~]# systemctl stop firewalld

[root@ceph1 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
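Stopping firewalld is fine for a throwaway lab. An alternative, sketched here under assumptions, is to keep it running and open only the ports Ceph uses: 6789/tcp for monitors and 6800-7300/tcp for OSD and manager daemons are the documented defaults, and 8443/tcp is the dashboard port shown later in this post. The helper `ceph_firewall_cmds` is hypothetical and only prints the commands so they can be reviewed first.

```shell
# Print the firewall-cmd invocations needed for the given Ceph roles.
# Roles: mon, osd, mgr, dashboard
ceph_firewall_cmds() {
    base="firewall-cmd --zone=public --permanent"
    for role in "$@"; do
        case "$role" in
            mon)       echo "$base --add-port=6789/tcp" ;;
            osd|mgr)   echo "$base --add-port=6800-7300/tcp" ;;
            dashboard) echo "$base --add-port=8443/tcp" ;;
            *) echo "unknown role: $role" >&2; return 1 ;;
        esac
    done
    # Permanent rules only take effect after a reload.
    echo "firewall-cmd --reload"
}

# Commands for a node like ceph2 (monitor plus OSD); pipe to "sudo sh" to apply.
ceph_firewall_cmds mon osd
```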

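2.4 Install pip on the deployment node

[root@ceph1 ~]# curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

CentOS 7 ships Python 2.7. If this script refuses to run on Python 2.7, download https://bootstrap.pypa.io/pip/2.7/get-pip.py instead.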

[root@ceph1 ~]# python get-pip.py

[root@ceph1 ~]# su - cephuser

[cephuser@ceph1 ~]$ ssh-keygen -f ~/.ssh/id_rsa -N ''

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.130

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.131

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.132

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.133

[cephuser@ceph1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@172.25.254.134

2.5 Edit the SSH config file on the deployment node

[cephuser@ceph1 ~]$ vi ~/.ssh/config

Host node1
Hostname ceph1
User cephuser

Host node2
Hostname ceph2
User cephuser

Host node3
Hostname ceph3
User cephuser

Host node4
Hostname ceph4
User cephuser

Host node5
Hostname ceph5
User cephuser

[cephuser@ceph1 ~]$ chmod 600 .ssh/config
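The five Host blocks differ only in their number, so a mistyped hostname in one of them is an easy slip. As a sketch, the file can be generated in a loop. `ssh_config_entries` is a hypothetical helper, and the node and host naming follows the config above.

```shell
# Print ~/.ssh/config entries mapping nodeN to cephN.
# $1: number of nodes (default 5)
# $2: remote user (default cephuser)
ssh_config_entries() {
    count="${1:-5}"
    user="${2:-cephuser}"
    i=1
    while [ "$i" -le "$count" ]; do
        printf 'Host node%d\n    Hostname ceph%d\n    User %s\n' "$i" "$i" "$user"
        i=$((i + 1))
    done
}

# Preview; write with: ssh_config_entries 5 > ~/.ssh/config && chmod 600 ~/.ssh/config
ssh_config_entries 5
```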

Test:

[cephuser@ceph1 ~]$ ssh cephuser@ceph2

[cephuser@ceph2 ~]$ exit

III. Create the cluster

All of the following steps are performed on the deployment node.

[root@ceph1 ~]# su - cephuser

[cephuser@ceph1 ~]$ sudo yum install yum-plugin-priorities

[cephuser@ceph1 ~]$ sudo yum install ceph-deploy

3.1 Create a directory for the configuration files

[cephuser@ceph1 ~]$ cd

[cephuser@ceph1 ~]$ mkdir my-cluster

[cephuser@ceph1 ~]$ cd my-cluster

3.2 Create the monitor node

[cephuser@ceph1 my-cluster]$ ceph-deploy new ceph2

[cephuser@ceph1 my-cluster]$ ll

-rw-rw-r--. 1 cephuser cephuser 197 Mar 14 23:13 ceph.conf

-rw-rw-r--. 1 cephuser cephuser 3166 Mar 14 23:13 ceph-deploy-ceph.log

-rw-------. 1 cephuser cephuser 73 Mar 14 23:13 ceph.mon.keyring

The directory now contains a Ceph configuration file, a monitor keyring, and a log file.
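This is the point where the generated ceph.conf can be adjusted before anything is installed. The sketch below appends two settings commonly used for small test clusters: a lower default replica count and an explicit public network. Both values are assumptions, not taken from this deployment; in particular the /24 netmask is a guess based on the host plan. `add_conf_option` is a hypothetical helper, and it relies on the generated file containing only a [global] section, so appended lines land there.

```shell
# Append "key = value" to a ceph.conf unless the key is already present.
# $1: config file, $2: option name, $3: value
add_conf_option() {
    file="$1"
    key="$2"
    value="$3"
    if grep -q "^$key *=" "$file"; then
        return 0    # already set; leave the existing value untouched
    fi
    printf '%s = %s\n' "$key" "$value" >> "$file"
}

# Demonstration on a scratch file standing in for my-cluster/ceph.conf.
conf=$(mktemp)
printf '[global]\nfsid = example\n' > "$conf"
add_conf_option "$conf" "osd pool default size" 2
add_conf_option "$conf" "public network" "172.25.254.0/24"
cat "$conf"
rm -f "$conf"
```

Skipping keys that already exist keeps the helper safe to rerun after a failed deployment attempt.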

3.3 Install Ceph

[cephuser@ceph1 my-cluster]$ ceph-deploy install ceph2 ceph3 ceph4

[cephuser@ceph2 my-cluster]$ sudo ceph --version

ceph version 13.2.5 (cbff874f9007f1869bfd3821b7e33b2a6ffd4988) mimic (stable)

Note that the installed release is mimic (13.2.5), not the luminous release configured in ceph.repo in section 1.3. By default, ceph-deploy install sets up its own Ceph repository on the target nodes; its --release and --no-adjust-repos options change that behavior.

3.4 Initialize the monitor node

[cephuser@ceph1 my-cluster]$ ceph-deploy mon create-initial

[cephuser@ceph1 my-cluster]$ ll

-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-mds.keyring

-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-mgr.keyring

-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-osd.keyring

-rw-------. 1 cephuser cephuser 71 Mar 14 23:29 ceph.bootstrap-rgw.keyring

-rw-------. 1 cephuser cephuser 63 Mar 14 23:29 ceph.client.admin.keyring

-rw-rw-r--. 1 cephuser cephuser 197 Mar 14 23:29 ceph.conf

-rw-rw-r--. 1 cephuser cephuser 310977 Mar 14 23:30 ceph-deploy-ceph.log

-rw-------. 1 cephuser cephuser 73 Mar 14 23:29 ceph.mon.keyring

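3.5 Deploy MGR (create the manager daemons)

[cephuser@ceph1 my-cluster]$ ceph-deploy mgr create ceph2 ceph3 ceph4

Note: this step sometimes hangs for a long time and may report errors or other problems. After repeating the same operation, a later attempt succeeded; the cause is unknown.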

[ceph4][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph4 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph4/keyring

[ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.6.1810 Core

[ceph2][INFO ] Running command: sudo systemctl enable ceph.target

[ceph2][INFO ] Running command: sudo systemctl enable ceph-mon@ceph2

[ceph2][INFO ] Running command: sudo systemctl start ceph-mon@ceph2

[ceph2][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph2.asok mon_status

[ceph2][INFO ] monitor: mon.ceph2 is running

[ceph2][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph2.asok mon_status

3.6 Distribute the configuration files

[cephuser@ceph1 my-cluster]$ ceph-deploy admin ceph2 ceph3 ceph4

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph2

[ceph2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

3.7 Add OSDs

List all disks:

[cephuser@ceph1 my-cluster]$ ceph-deploy disk list ceph2 ceph3 ceph4

[ceph2][INFO ] Disk /dev/sda: 21.5 GB, 21474836480 bytes, 41943040 sectors

[ceph2][INFO ] Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

[ceph2][INFO ] Disk /dev/mapper/centos-root: 18.2 GB, 18249416704 bytes, 35643392 sectors

[ceph2][INFO ] Disk /dev/mapper/centos-swap: 2147 MB, 2147483648 bytes, 4194304 sectors

……

[cephuser@ceph1 my-cluster]$ ceph-deploy osd create --data /dev/sdb ceph2

[ceph_deploy.osd][DEBUG ] Host ceph4 is now ready for osd use

[cephuser@ceph1 my-cluster]$ ceph-deploy osd create --data /dev/sdb ceph3

[ceph3][WARNIN] No data was received after 300 seconds, disconnecting...

[ceph3][INFO ] checking OSD status...

[ceph3][DEBUG ] find the location of an executable

[ceph3][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json

[cephuser@ceph1 my-cluster]$ ceph-deploy osd create --data /dev/sdb ceph4

[ceph4][WARNIN] No data was received after 300 seconds, disconnecting...

[ceph4][INFO ] checking OSD status...

[ceph4][DEBUG ] find the location of an executable

[ceph4][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json

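The same thing happened here. The first OSD was added normally, but adding the second one failed. The command eventually reported success, yet a later check showed only the first OSD, while the cluster status still reported healthy. My guess is that the virtual machines were under too much memory and CPU pressure and simply needed more time. To be safe, after adding the first OSD I left the virtual machines running for a few hours before trying to add the second OSD, to see whether that works.

3.8 Check the cluster status: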

[cephuser@ceph1 my-cluster]$ ssh ceph2 sudo ceph health

HEALTH_OK

[cephuser@ceph1 my-cluster]$ ssh ceph2 sudo ceph -s

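cluster:

id: 2835ab5a-32fe-40df-8965-32bbe4991222

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph2

mgr: ceph2(active)

osd: 1 osds: 1 up, 1 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 1.0 GiB used, 19 GiB / 20 GiB avail

pgs:

The output above shows only one OSD, even though three disks were added. The error reported was as follows, and the ceph4 node had the same problem:

[ceph3][WARNIN] No data was received after 300 seconds, disconnecting...

[ceph3][INFO ] checking OSD status...

[ceph3][DEBUG ] find the location of an executable

[ceph3][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json

According to what I found, this is a problem with the package mirror, but I did not manage to fix it. I continued with the next steps and will come back to it later.

In effect, what is running is a single-node Ceph cluster on ceph2, and the rest of the experiment continues on this cluster.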
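Since HEALTH_OK was reported even with OSDs missing, it helps to compare the OSD count against the number that was expected. The sketch below reads `ceph -s` output on stdin and checks the `osd:` line. It assumes the line format shown in this post; `osd_counts` and `check_osds` are hypothetical helpers.

```shell
# Read `ceph -s` output on stdin and print "<total> <up> <in>" from the osd line.
osd_counts() {
    sed -n 's/^ *osd: *\([0-9][0-9]*\) osds: *\([0-9][0-9]*\) up[^,]*, *\([0-9][0-9]*\) in.*/\1 \2 \3/p'
}

# Compare the reported OSD total with the expected number ($1).
# Returns 0 on a match, 1 on a mismatch, 2 if no osd line was found.
check_osds() {
    expected="$1"
    counts=$(osd_counts)
    if [ -z "$counts" ]; then
        echo "no osd line found in input" >&2
        return 2
    fi
    total="${counts%% *}"
    if [ "$total" -ne "$expected" ]; then
        echo "expected $expected OSDs, cluster reports $total" >&2
        return 1
    fi
    echo "all $expected OSDs registered"
}

# Demonstration with the osd line from the status output in this post.
printf '    osd: 1 osds: 1 up, 1 in\n' | osd_counts
```

Against the live cluster this would be run as `ssh ceph2 sudo ceph -s | check_osds 3`, which would have flagged the missing OSDs here.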

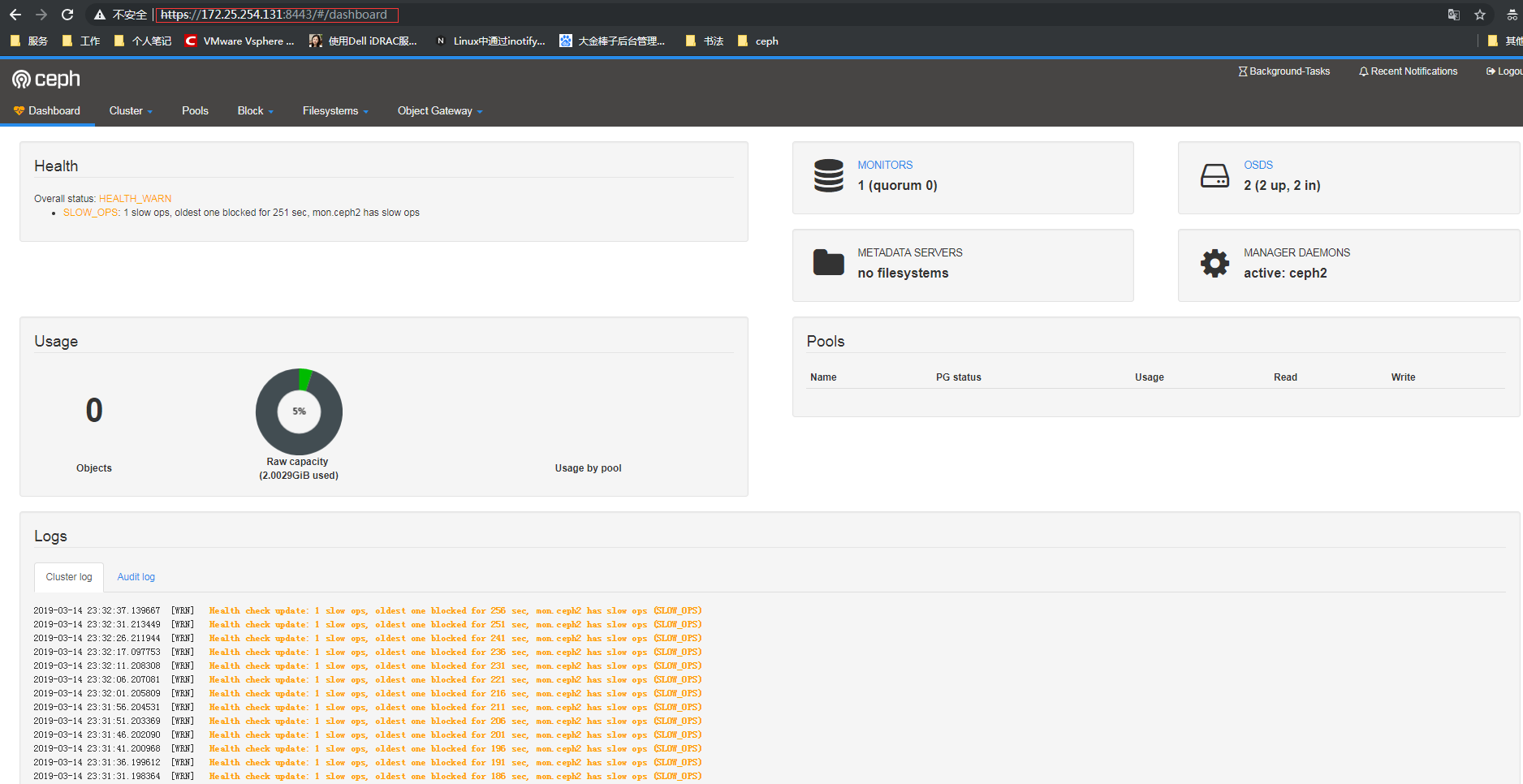

3.9 Enable the dashboard

[root@ceph2 ~]# ceph mgr module enable dashboard

[root@ceph2 ~]# ceph dashboard create-self-signed-cert

Self-signed certificate created

[root@ceph2 ~]# ceph dashboard set-login-credentials admin admin

Username and password updated

[root@ceph2 ~]# ceph mgr services

{ "dashboard": "https://ceph2:8443/" }

Stop the firewall and test access from a browser

[root@ceph2 ~]# systemctl stop firewalld
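Before opening a browser, the dashboard URL can be pulled out of the `ceph mgr services` output and probed from the command line. This is a rough sketch that assumes the single-line JSON shape shown above; `dashboard_url` is a hypothetical helper, and a JSON parser would be more robust than sed for anything beyond a quick check.

```shell
# Read `ceph mgr services` output on stdin and print the dashboard URL.
dashboard_url() {
    sed -n 's/.*"dashboard": *"\([^"]*\)".*/\1/p'
}

# Demonstration with the output shown in this post.
printf '{ "dashboard": "https://ceph2:8443/" }\n' | dashboard_url

# On the cluster, print only the HTTP status code of the dashboard
# (-k accepts the self-signed certificate created earlier):
#   curl -k -s -o /dev/null -w '%{http_code}\n' "$(ceph mgr services | dashboard_url)"
```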

3.10 Remove all configuration

[cephuser@ceph1 my-cluster]$ ceph-deploy purge ceph1 ceph2 ceph3 ceph4 ceph5

[cephuser@ceph1 my-cluster]$ ceph-deploy purgedata ceph1 ceph2 ceph3 ceph4 ceph5

[cephuser@ceph1 my-cluster]$ ceph-deploy forgetkeys

[cephuser@ceph1 my-cluster]$ rm ceph.*

Problem encountered:

[cephuser@ceph1 my-cluster]$ ceph-deploy install ceph1 ceph2 ceph3 ceph4 ceph5

Delta RPMs disabled because /usr/bin/applydeltarpm not installed

Solution:

[cephuser@ceph1 my-cluster]$ yum provides '*/applydeltarpm'

[cephuser@ceph1 my-cluster]$ sudo yum install deltarpm

[cephuser@ceph1 my-cluster]$ ceph-deploy mon create-initial

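This step reported errors as well.

Most of the errors above came down to hardware and network problems. I reset the environment about ten times, which was a real pain!

References: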

https://willireamangel.github.io/2018/06/07/Ceph%E9%83%A8%E7%BD%B2%E6%95%99%E7%A8%8B%EF%BC%88luminous%EF%BC%89/

https://www.cnblogs.com/itzgr/p/10275863.html