No preamble; straight to the practical details.

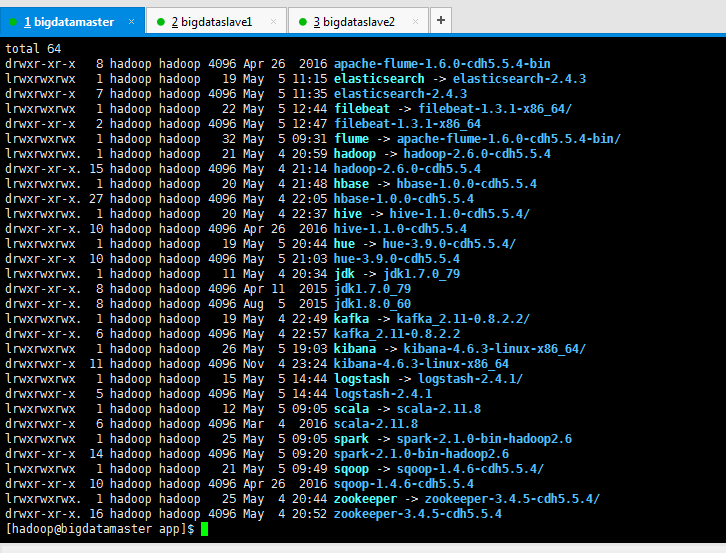

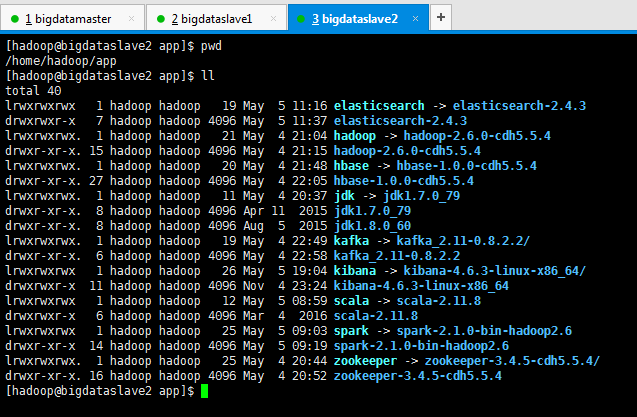

My cluster consists of three machines: bigdatamaster (192.168.80.10), bigdataslave1 (192.168.80.11), and bigdataslave2 (192.168.80.12).

Everything is installed under /home/hadoop/app.

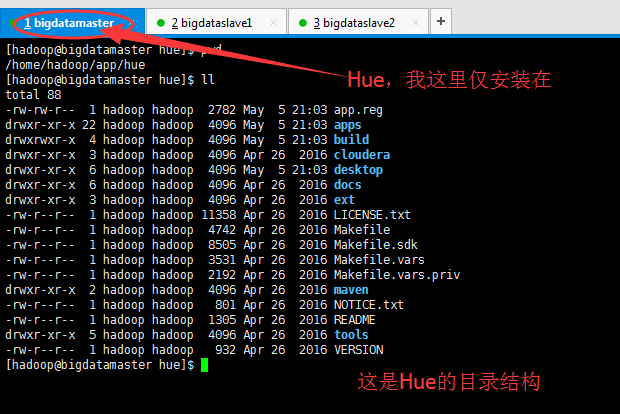

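The official recommendation is to install Hue on the master machine, and I do the same here: Hue is installed on bigdatamaster.

Hue version: hue-3.9.0-cdh5.5.4

It must be compiled before use, and the build requires network access.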

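A word of advice: if your machine has enough resources, do install Cloudera Manager. Hue and CDH come from the same family, after all.

I have been through this myself: some component versions run into problems during installation, and tracking them down can take most of a day, so be mentally prepared. I am currently a graduate student and my laptop tops out at 8 GB of RAM, so I can only practice with a manual installation.

This post is written purely for the students around me who have limited resources and no high-end machine. That said, I have already set up both Cloudera Manager and Ambari on the lab's machine cluster.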

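The two most mainstream cluster management tools in big data: Ambari and Cloudera Manager

Installing and deploying a big data cluster with Cloudera (illustrated, in five steps) (strongly recommended by the author)

Installing and deploying a big data cluster with Ambari (illustrated, in five steps) (strongly recommended by the author)

Earlier posts

Setting up Hue on a CDH big data cluster (hadoop-2.6.0-cdh5.5.4.gz + hue-3.9.0-cdh5.5.4.tar.gz) (recommended by the author)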

    [hadoop@bigdatamaster hue]$ pwd
    /home/hadoop/app/hue
    [hadoop@bigdatamaster hue]$ ll
    total 88
    -rw-rw-r--  1 hadoop hadoop  2782 May  5 21:03 app.reg
    drwxr-xr-x 22 hadoop hadoop  4096 May  5 21:03 apps
    drwxrwxr-x  4 hadoop hadoop  4096 May  5 21:03 build
    drwxr-xr-x  3 hadoop hadoop  4096 Apr 26  2016 cloudera
    drwxr-xr-x  6 hadoop hadoop  4096 May  5 21:03 desktop
    drwxr-xr-x  6 hadoop hadoop  4096 Apr 26  2016 docs
    drwxr-xr-x  3 hadoop hadoop  4096 Apr 26  2016 ext
    -rw-r--r--  1 hadoop hadoop 11358 Apr 26  2016 LICENSE.txt
    -rw-r--r--  1 hadoop hadoop  4742 Apr 26  2016 Makefile
    -rw-r--r--  1 hadoop hadoop  8505 Apr 26  2016 Makefile.sdk
    -rw-r--r--  1 hadoop hadoop  3531 Apr 26  2016 Makefile.vars
    -rw-r--r--  1 hadoop hadoop  2192 Apr 26  2016 Makefile.vars.priv
    drwxr-xr-x  2 hadoop hadoop  4096 Apr 26  2016 maven
    -rw-r--r--  1 hadoop hadoop   801 Apr 26  2016 NOTICE.txt
    -rw-r--r--  1 hadoop hadoop  1305 Apr 26  2016 README
    drwxr-xr-x  5 hadoop hadoop  4096 Apr 26  2016 tools
    -rw-r--r--  1 hadoop hadoop   932 Apr 26  2016 VERSION
    [hadoop@bigdatamaster hue]$

First, make sure you take a careful look at my 3-node cluster setup! (If you skip this, you will regret it later.)

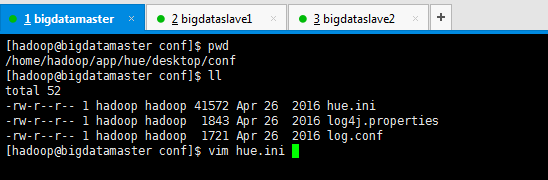

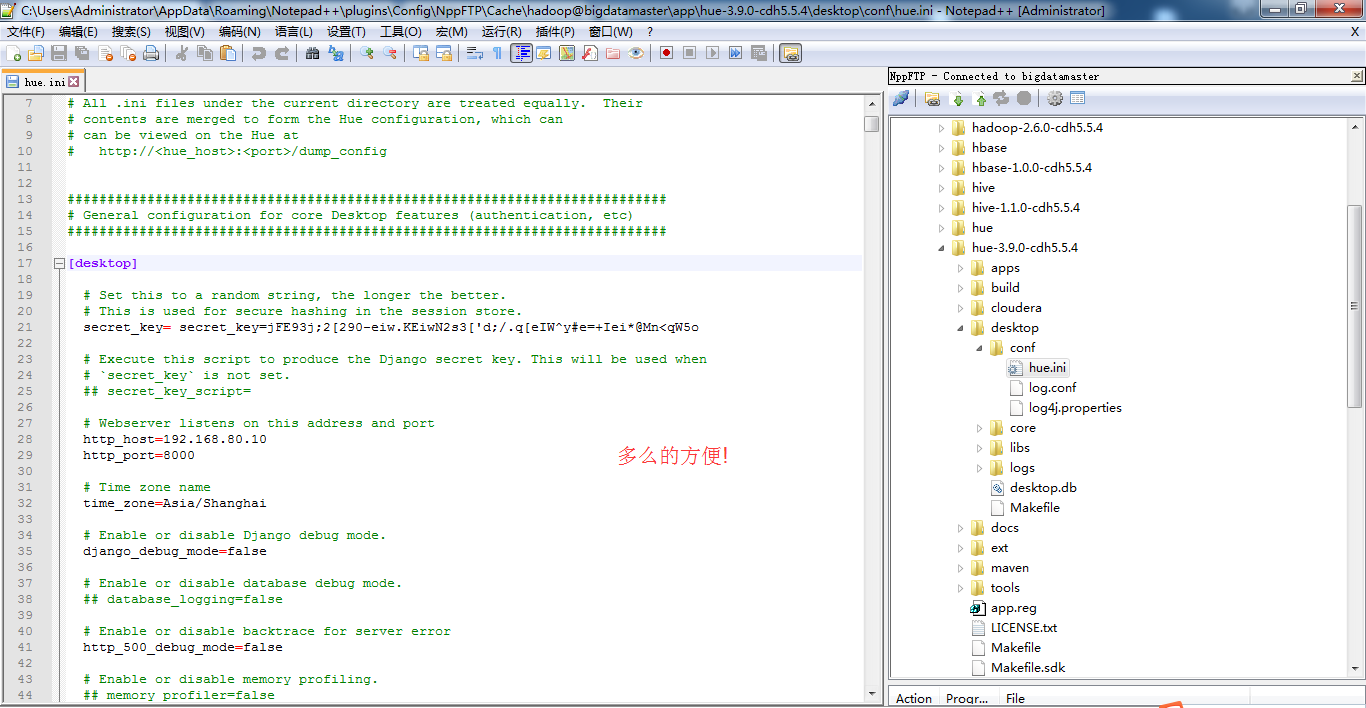

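Open $HUE_HOME/desktop/conf/hue.ini

Here is a useful tip for this step:

Tips for handling configuration files when setting up big data subprojects (for CentOS and Ubuntu) (recommended by the author)

Below is my configuration file (note that it is not the default one), for your reference. Its values depend on the machines involved, so adjust them to match your own cluster.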
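Because the file is long and most lines are commented-out defaults, it can help to list only the settings that are actually active. The following is a minimal sketch, not a Hue tool: `active_settings` is a hypothetical helper that does a simple line scan, and it assumes that every line starting with `#` is a comment and that section nesting is given by the number of square brackets.

```python
import re


def active_settings(ini_text):
    """Yield (section_path, key, value) for every uncommented setting."""
    path = []  # current section path, e.g. ["hadoop", "hdfs_clusters", "default"]
    for raw in ini_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments, including "## key=value" defaults
        header = re.fullmatch(r"(\[+)([^\[\]]+)(\]+)", line)
        if header and len(header.group(1)) == len(header.group(3)):
            depth = len(header.group(1))  # [x] is depth 1, [[x]] is depth 2, ...
            path = path[:depth - 1] + [header.group(2).strip()]
            continue
        if "=" in line:
            key, value = line.split("=", 1)
            yield "/".join(path), key.strip(), value.strip()


# A small excerpt in the same style as the file below.
sample = """
[desktop]
http_host=192.168.80.10
## database_logging=false
[[smtp]]
host=localhost
[hadoop]
[[hdfs_clusters]]
[[[default]]]
fs_defaultfs=hdfs://bigdatamaster:9000
"""

for section, key, value in active_settings(sample):
    print(f"{section}: {key} = {value}")
```

To run it against a real file, read the text with `open(path).read()`, where `path` points at your own `hue.ini`.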

# Hue configuration file

# ===================================

#

# For complete documentation about the contents of this file, run

# $ <hue_root>/build/env/bin/hue config_help

#

# All .ini files under the current directory are treated equally. Their

# contents are merged to form the Hue configuration, which

# can be viewed on the Hue at

# http://<hue_host>:<port>/dump_config

###########################################################################

# General configuration for core Desktop features (authentication, etc)

###########################################################################

[desktop]

# Set this to a random string, the longer the better.

# This is used for secure hashing in the session store.

secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn<qW5o

# Execute this script to produce the Django secret key. This will be used when

# `secret_key` is not set.

## secret_key_script=
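The comment above asks for a long random string rather than a copied one. As a rough sketch, a value could be generated with Python's standard `secrets` module and pasted into `secret_key`. The helper name `make_secret_key`, the length of 60, and the excluded characters are my own assumptions, chosen as a precaution against characters that may be awkward inside an ini value.

```python
import secrets
import string

# Characters left out as a precaution (an assumption, not a documented Hue rule).
EXCLUDED = "#;'\"\\%"


def make_secret_key(length=60):
    """Return a random key built from letters, digits and punctuation."""
    alphabet = "".join(
        c for c in string.ascii_letters + string.digits + string.punctuation
        if c not in EXCLUDED
    )
    # secrets.choice draws from a cryptographically strong random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


print(make_secret_key())
```

Each run prints a different key, so generate one per installation and keep it private.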

# Webserver listens on this address and port

http_host=192.168.80.10

http_port=8000

# Time zone name

time_zone=Asia/Shanghai

# Enable or disable Django debug mode.

django_debug_mode=false

# Enable or disable database debug mode.

## database_logging=false

# Enable or disable backtrace for server error

http_500_debug_mode=false

# Enable or disable memory profiling.

## memory_profiler=false

# Server email for internal error messages

## django_server_email='hue@localhost.localdomain'

# Email backend

## django_email_backend=django.core.mail.backends.smtp.EmailBackend

# Webserver runs as this user

server_user=hue

server_group=hue

# This should be the Hue admin and proxy user

default_user=hue

# This should be the hadoop cluster admin

default_hdfs_superuser=hadoop

# If set to false, runcpserver will not actually start the web server.

# Used if Apache is being used as a WSGI container.

## enable_server=yes

# Number of threads used by the CherryPy web server

## cherrypy_server_threads=40

# Filename of SSL Certificate

## ssl_certificate=

# Filename of SSL RSA Private Key

## ssl_private_key=

# Filename of SSL Certificate Chain

## ssl_certificate_chain=

# SSL certificate password

## ssl_password=

# Execute this script to produce the SSL password. This will be used when `ssl_password` is not set.

## ssl_password_script=

# List of allowed and disallowed ciphers in cipher list format.

# See http://www.openssl.org/docs/apps/ciphers.html for more information on

# cipher list format. This list is from

# https://wiki.mozilla.org/Security/Server_Side_TLS v3.7 intermediate

# recommendation, which should be compatible with Firefox 1, Chrome 1, IE 7,

# Opera 5 and Safari 1.

## ssl_cipher_list=ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA

# Path to default Certificate Authority certificates.

## ssl_cacerts=/etc/hue/cacerts.pem

# Choose whether Hue should validate certificates received from the server.

## validate=true

# Default LDAP/PAM/.. username and password of the hue user used for authentications with other services.

# e.g. LDAP pass-through authentication for HiveServer2 or Impala. Apps can override them individually.

## auth_username=hue

## auth_password=

# Default encoding for site data

## default_site_encoding=utf-8

# Help improve Hue with anonymous usage analytics.

# Use Google Analytics to see how many times an application or specific section of an application is used, nothing more.

## collect_usage=true

# Enable X-Forwarded-Host header if the load balancer requires it.

## use_x_forwarded_host=false

# Support for HTTPS termination at the load-balancer level with SECURE_PROXY_SSL_HEADER.

## secure_proxy_ssl_header=false

# Comma-separated list of Django middleware classes to use.

# See https://docs.djangoproject.com/en/1.4/ref/middleware/ for more details on middlewares in Django.

## middleware=desktop.auth.backend.LdapSynchronizationBackend

# Comma-separated list of regular expressions, which match the redirect URL.

# For example, to restrict to your local domain and FQDN, the following value can be used:

# ^/.*$,^http://www.mydomain.com/.*$

## redirect_whitelist=^/.*$

# Comma separated list of apps to not load at server startup.

# e.g.: pig,zookeeper

## app_blacklist=

# The directory where to store the auditing logs. Auditing is disable if the value is empty.

# e.g. /var/log/hue/audit.log

## audit_event_log_dir=

# Size in KB/MB/GB for audit log to rollover.

## audit_log_max_file_size=100MB

# A json file containing a list of log redaction rules for cleaning sensitive data

# from log files. It is defined as:

#

# {

# "version": 1,

# "rules": [

# {

# "description": "This is the first rule",

# "trigger": "triggerstring 1",

# "search": "regex 1",

# "replace": "replace 1"

# },

# {

# "description": "This is the second rule",

# "trigger": "triggerstring 2",

# "search": "regex 2",

# "replace": "replace 2"

# }

# ]

# }

#

# Redaction works by searching a string for the [TRIGGER] string. If found,

# the [REGEX] is used to replace sensitive information with the

# [REDACTION_MASK]. If specified with `log_redaction_string`, the

# `log_redaction_string` rules will be executed after the

# `log_redaction_file` rules.

#

# For example, here is a file that would redact passwords and social security numbers:

# {

# "version": 1,

# "rules": [

# {

# "description": "Redact passwords",

# "trigger": "password",

# "search": "password=\".*\"",

# "replace": "password=\"???\""

# },

# {

# "description": "Redact social security numbers",

# "trigger": "",

# "search": "\d{3}-\d{2}-\d{4}",

# "replace": "XXX-XX-XXXX"

# }

# ]

# }

## log_redaction_file=
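The redaction comments above describe a two-step rule: look for the trigger string first, and only then apply the regular expression. The sketch below is one reading of that description in plain Python, using the two example rules from the comments. It is not Hue's own implementation, and `redact` is a hypothetical name.

```python
import re

# The two example rules from the comments, written as Python data.
RULES = [
    {"description": "Redact passwords",
     "trigger": "password",
     "search": r'password=".*"',
     "replace": 'password="???"'},
    {"description": "Redact social security numbers",
     "trigger": "",
     "search": r"\d{3}-\d{2}-\d{4}",
     "replace": "XXX-XX-XXXX"},
]


def redact(message, rules=RULES):
    """Apply each rule in order; the regex runs only if the trigger is present."""
    for rule in rules:
        # An empty trigger is contained in every string, so that rule always runs.
        if rule["trigger"] in message:
            message = re.sub(rule["search"], rule["replace"], message)
    return message


print(redact('login password="hunter2" ok'))
print(redact("ssn 123-45-6789"))
```

The trigger check is a cheap substring test, which is presumably why the format separates it from the more expensive regex.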

# Comma separated list of strings representing the host/domain names that the Hue server can serve.

# e.g.: localhost,domain1,*

## allowed_hosts=*

# Administrators

# ----------------

[[django_admins]]

## [[[admin1]]]

## name=john

## email=john@doe.com

# UI customizations

# -------------------

[[custom]]

# Top banner HTML code

# e.g. <H2>Test Lab A2 Hue Services</H2>

## banner_top_html=

# Configuration options for user authentication into the web application

# ------------------------------------------------------------------------

[[auth]]

# Authentication backend. Common settings are:

# - django.contrib.auth.backends.ModelBackend (entirely Django backend)

# - desktop.auth.backend.AllowAllBackend (allows everyone)

# - desktop.auth.backend.AllowFirstUserDjangoBackend

# (Default. Relies on Django and user manager, after the first login)

# - desktop.auth.backend.LdapBackend

# - desktop.auth.backend.PamBackend

# - desktop.auth.backend.SpnegoDjangoBackend

# - desktop.auth.backend.RemoteUserDjangoBackend

# - libsaml.backend.SAML2Backend

# - libopenid.backend.OpenIDBackend

# - liboauth.backend.OAuthBackend

# (New oauth, support Twitter, Facebook, Google+ and Linkedin)

## backend=desktop.auth.backend.AllowFirstUserDjangoBackend

# The service to use when querying PAM.

## pam_service=login

# When using the desktop.auth.backend.RemoteUserDjangoBackend, this sets

# the normalized name of the header that contains the remote user.

# The HTTP header in the request is converted to a key by converting

# all characters to uppercase, replacing any hyphens with underscores

# and adding an HTTP_ prefix to the name. So, for example, if the header

# is called Remote-User that would be configured as HTTP_REMOTE_USER

#

# Defaults to HTTP_REMOTE_USER

## remote_user_header=HTTP_REMOTE_USER
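The header-to-key conversion described above (uppercase, hyphens to underscores, `HTTP_` prefix) can be written out in a few lines. `header_to_key` is a hypothetical helper name used only for illustration.

```python
def header_to_key(header_name):
    """Convert an HTTP header name to its normalized key form."""
    # Uppercase everything, turn hyphens into underscores, add the HTTP_ prefix.
    return "HTTP_" + header_name.upper().replace("-", "_")


print(header_to_key("Remote-User"))  # HTTP_REMOTE_USER
```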

# Ignore the case of usernames when searching for existing users.

# Only supported in remoteUserDjangoBackend.

## ignore_username_case=true

# Ignore the case of usernames when searching for existing users to authenticate with.

# Only supported in remoteUserDjangoBackend.

## force_username_lowercase=true

# Users will expire after they have not logged in for 'n' amount of seconds.

# A negative number means that users will never expire.

## expires_after=-1

# Apply 'expires_after' to superusers.

## expire_superusers=true

# Force users to change password on first login with desktop.auth.backend.AllowFirstUserDjangoBackend

## change_default_password=false

# Number of login attempts allowed before a record is created for failed logins

## login_failure_limit=3

# After number of allowed login attempts are exceeded, do we lock out this IP and optionally user agent?

## login_lock_out_at_failure=false

# If set, defines period of inactivity in seconds after which failed logins will be forgotten

## login_cooloff_time=60

# If True, lock out based on IP and browser user agent

## login_lock_out_by_combination_browser_user_agent_and_ip=false

# If True, lock out based on IP and user

## login_lock_out_by_combination_user_and_ip=false

# Configuration options for connecting to LDAP and Active Directory

# -------------------------------------------------------------------

[[ldap]]

# The search base for finding users and groups

## base_dn="DC=mycompany,DC=com"

# URL of the LDAP server

## ldap_url=ldap://auth.mycompany.com

# A PEM-format file containing certificates for the CA's that

# Hue will trust for authentication over TLS.

# The certificate for the CA that signed the

# LDAP server certificate must be included among these certificates.

# See more here http://www.openldap.org/doc/admin24/tls.html.

## ldap_cert=

## use_start_tls=true

# Distinguished name of the user to bind as -- not necessary if the LDAP server

# supports anonymous searches

## bind_dn="CN=ServiceAccount,DC=mycompany,DC=com"

# Password of the bind user -- not necessary if the LDAP server supports

# anonymous searches

## bind_password=

# Execute this script to produce the bind user password. This will be used

# when `bind_password` is not set.

## bind_password_script=

# Pattern for searching for usernames -- Use <username> for the parameter

# For use when using LdapBackend for Hue authentication

## ldap_username_pattern="uid=<username>,ou=People,dc=mycompany,dc=com"

# Create users in Hue when they try to login with their LDAP credentials

# For use when using LdapBackend for Hue authentication

## create_users_on_login = true

# Synchronize a users groups when they login

## sync_groups_on_login=false

# Ignore the case of usernames when searching for existing users in Hue.

## ignore_username_case=true

# Force usernames to lowercase when creating new users from LDAP.

## force_username_lowercase=true

# Use search bind authentication.

## search_bind_authentication=true

# Choose which kind of subgrouping to use: nested or suboordinate (deprecated).

## subgroups=suboordinate

# Define the number of levels to search for nested members.

## nested_members_search_depth=10

# Whether or not to follow referrals

## follow_referrals=false

# Enable python-ldap debugging.

## debug=false

# Sets the debug level within the underlying LDAP C lib.

## debug_level=255

# Possible values for trace_level are 0 for no logging, 1 for only logging the method calls with arguments,

# 2 for logging the method calls with arguments and the complete results and 9 for also logging the traceback of method calls.

## trace_level=0

[[[users]]]

# Base filter for searching for users

## user_filter="objectclass=*"

# The username attribute in the LDAP schema

## user_name_attr=sAMAccountName

[[[groups]]]

# Base filter for searching for groups

## group_filter="objectclass=*"

# The group name attribute in the LDAP schema

## group_name_attr=cn

# The attribute of the group object which identifies the members of the group

## group_member_attr=members

[[[ldap_servers]]]

## [[[[mycompany]]]]

# The search base for finding users and groups

## base_dn="DC=mycompany,DC=com"

# URL of the LDAP server

## ldap_url=ldap://auth.mycompany.com

# A PEM-format file containing certificates for the CA's that

# Hue will trust for authentication over TLS.

# The certificate for the CA that signed the

# LDAP server certificate must be included among these certificates.

# See more here http://www.openldap.org/doc/admin24/tls.html.

## ldap_cert=

## use_start_tls=true

# Distinguished name of the user to bind as -- not necessary if the LDAP server

# supports anonymous searches

## bind_dn="CN=ServiceAccount,DC=mycompany,DC=com"

# Password of the bind user -- not necessary if the LDAP server supports

# anonymous searches

## bind_password=

# Execute this script to produce the bind user password. This will be used

# when `bind_password` is not set.

## bind_password_script=

# Pattern for searching for usernames -- Use <username> for the parameter

# For use when using LdapBackend for Hue authentication

## ldap_username_pattern="uid=<username>,ou=People,dc=mycompany,dc=com"

## Use search bind authentication.

## search_bind_authentication=true

# Whether or not to follow referrals

## follow_referrals=false

# Enable python-ldap debugging.

## debug=false

# Sets the debug level within the underlying LDAP C lib.

## debug_level=255

# Possible values for trace_level are 0 for no logging, 1 for only logging the method calls with arguments,

# 2 for logging the method calls with arguments and the complete results and 9 for also logging the traceback of method calls.

## trace_level=0

## [[[[[users]]]]]

# Base filter for searching for users

## user_filter="objectclass=Person"

# The username attribute in the LDAP schema

## user_name_attr=sAMAccountName

## [[[[[groups]]]]]

# Base filter for searching for groups

## group_filter="objectclass=groupOfNames"

# The username attribute in the LDAP schema

## group_name_attr=cn

# Configuration options for specifying the Desktop Database. For more info,

# see http://docs.djangoproject.com/en/1.4/ref/settings/#database-engine

# ------------------------------------------------------------------------

[[database]]

# Database engine is typically one of:

# postgresql_psycopg2, mysql, sqlite3 or oracle.

#

# Note that for sqlite3, 'name', below is a path to the filename. For other backends, it is the database name.

# Note for Oracle, options={"threaded":true} must be set in order to avoid crashes.

# Note for Oracle, you can use the Oracle Service Name by setting "port=0" and then "name=<host>:<port>/<service_name>".

# Note for MariaDB use the 'mysql' engine.

## engine=sqlite3

## host=

## port=

## user=

## password=

## name=desktop/desktop.db

## options={}

# Configuration options for specifying the Desktop session.

# For more info, see https://docs.djangoproject.com/en/1.4/topics/http/sessions/

# ------------------------------------------------------------------------

[[session]]

# The cookie containing the users' session ID will expire after this amount of time in seconds.

# Default is 2 weeks.

## ttl=1209600

# The cookie containing the users' session ID will be secure.

# Should only be enabled with HTTPS.

## secure=false

# The cookie containing the users' session ID will use the HTTP only flag.

## http_only=true

# Use session-length cookies. Logs out the user when she closes the browser window.

## expire_at_browser_close=false

# Configuration options for connecting to an external SMTP server

# ------------------------------------------------------------------------

[[smtp]]

# The SMTP server information for email notification delivery

host=localhost

port=25

user=

password=

# Whether to use a TLS (secure) connection when talking to the SMTP server

tls=no

# Default email address to use for various automated notification from Hue

## default_from_email=hue@localhost

# Configuration options for Kerberos integration for secured Hadoop clusters

# ------------------------------------------------------------------------

[[kerberos]]

# Path to Hue's Kerberos keytab file

## hue_keytab=

# Kerberos principal name for Hue

## hue_principal=hue/hostname.foo.com

# Path to kinit

## kinit_path=/path/to/kinit

# Configuration options for using OAuthBackend (Core) login

# ------------------------------------------------------------------------

[[oauth]]

# The Consumer key of the application

## consumer_key=XXXXXXXXXXXXXXXXXXXXX

# The Consumer secret of the application

## consumer_secret=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

# The Request token URL

## request_token_url=https://api.twitter.com/oauth/request_token

# The Access token URL

## access_token_url=https://api.twitter.com/oauth/access_token

# The Authorize URL

## authenticate_url=https://api.twitter.com/oauth/authorize

# Configuration options for Metrics

# ------------------------------------------------------------------------

[[metrics]]

# Enable the metrics URL "/desktop/metrics"

## enable_web_metrics=True

# If specified, Hue will write metrics to this file.

## location=/var/log/hue/metrics.json

# Time in milliseconds on how frequently to collect metrics

## collection_interval=30000

###########################################################################

# Settings to configure SAML

###########################################################################

[libsaml]

# Xmlsec1 binary path. This program should be executable by the user running Hue.

## xmlsec_binary=/usr/local/bin/xmlsec1

# Entity ID for Hue acting as service provider.

# Can also accept a pattern where '<base_url>' will be replaced with server URL base.

## entity_id="<base_url>/saml2/metadata/"

# Create users from SSO on login.

## create_users_on_login=true

# Required attributes to ask for from IdP.

# This requires a comma separated list.

## required_attributes=uid

# Optional attributes to ask for from IdP.

# This requires a comma separated list.

## optional_attributes=

# IdP metadata in the form of a file. This is generally an XML file containing metadata that the Identity Provider generates.

## metadata_file=

# Private key to encrypt metadata with.

## key_file=

# Signed certificate to send along with encrypted metadata.

## cert_file=

# A mapping from attributes in the response from the IdP to django user attributes.

## user_attribute_mapping={'uid':'username'}

# Have Hue initiated authn requests be signed and provide a certificate.

## authn_requests_signed=false

# Have Hue initiated logout requests be signed and provide a certificate.

## logout_requests_signed=false

# Username can be sourced from 'attributes' or 'nameid'.

## username_source=attributes

# Performs the logout or not.

## logout_enabled=true

###########################################################################

# Settings to configure OpenID

###########################################################################

[libopenid]

# (Required) OpenId SSO endpoint url.

## server_endpoint_url=https://www.google.com/accounts/o8/id

# OpenId 1.1 identity url prefix to be used instead of SSO endpoint url

# This is only supported if you are using an OpenId 1.1 endpoint

## identity_url_prefix=https://app.onelogin.com/openid/your_company.com/

# Create users from OPENID on login.

## create_users_on_login=true

# Use email for username

## use_email_for_username=true

###########################################################################

# Settings to configure OAuth

###########################################################################

[liboauth]

# NOTE:

# To work, each of the active (i.e. uncommented) service must have

# applications created on the social network.

# Then the "consumer key" and "consumer secret" must be provided here.

#

# The addresses where to do so are:

# Twitter: https://dev.twitter.com/apps

# Google+ : https://cloud.google.com/

# Facebook: https://developers.facebook.com/apps

# Linkedin: https://www.linkedin.com/secure/developer

#

# Additionnaly, the following must be set in the application settings:

# Twitter: Callback URL (aka Redirect URL) must be set to http://YOUR_HUE_IP_OR_DOMAIN_NAME/oauth/social_login/oauth_authenticated

# Google+ : CONSENT SCREEN must have email address

# Facebook: Sandbox Mode must be DISABLED

# Linkedin: "In OAuth User Agreement", r_emailaddress is REQUIRED

# The Consumer key of the application

## consumer_key_twitter=

## consumer_key_google=

## consumer_key_facebook=

## consumer_key_linkedin=

# The Consumer secret of the application

## consumer_secret_twitter=

## consumer_secret_google=

## consumer_secret_facebook=

## consumer_secret_linkedin=

# The Request token URL

## request_token_url_twitter=https://api.twitter.com/oauth/request_token

## request_token_url_google=https://accounts.google.com/o/oauth2/auth

## request_token_url_linkedin=https://www.linkedin.com/uas/oauth2/authorization

## request_token_url_facebook=https://graph.facebook.com/oauth/authorize

# The Access token URL

## access_token_url_twitter=https://api.twitter.com/oauth/access_token

## access_token_url_google=https://accounts.google.com/o/oauth2/token

## access_token_url_facebook=https://graph.facebook.com/oauth/access_token

## access_token_url_linkedin=https://api.linkedin.com/uas/oauth2/accessToken

# The Authenticate URL

## authenticate_url_twitter=https://api.twitter.com/oauth/authorize

## authenticate_url_google=https://www.googleapis.com/oauth2/v1/userinfo?access_token=

## authenticate_url_facebook=https://graph.facebook.com/me?access_token=

## authenticate_url_linkedin=https://api.linkedin.com/v1/people/~:(email-address)?format=json&oauth2_access_token=

# Username Map. Json Hash format.

# Replaces username parts in order to simplify usernames obtained

# Example: {"@sub1.domain.com":"_S1", "@sub2.domain.com":"_S2"}

# converts 'email@sub1.domain.com' to 'email_S1'

## username_map={}
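The `username_map` comment gives one example of the replacement. A small sketch of that behaviour, assuming a plain substring replacement applied in the order the map is written (the helper name `apply_username_map` is hypothetical, and Hue's own handling may differ):

```python
def apply_username_map(username, username_map):
    """Replace each mapped part of the username, in the order given."""
    for part, replacement in username_map.items():
        username = username.replace(part, replacement)
    return username


mapping = {"@sub1.domain.com": "_S1", "@sub2.domain.com": "_S2"}
print(apply_username_map("email@sub1.domain.com", mapping))  # email_S1
```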

# Whitelisted domains (only applies to Google OAuth). CSV format.

## whitelisted_domains_google=

###########################################################################

# Settings for the RDBMS application

###########################################################################

[librdbms]

# The RDBMS app can have any number of databases configured in the databases

# section. A database is known by its section name

# (IE sqlite, mysql, psql, and oracle in the list below).

[[databases]]

# sqlite configuration.

## [[[sqlite]]]

# Name to show in the UI.

## nice_name=SQLite

# For SQLite, name defines the path to the database.

## name=/tmp/sqlite.db

# Database backend to use.

## engine=sqlite

# Database options to send to the server when connecting.

# https://docs.djangoproject.com/en/1.4/ref/databases/

## options={}

# mysql, oracle, or postgresql configuration.

## [[[mysql]]]

# Name to show in the UI.

## nice_name="My SQL DB"

# For MySQL and PostgreSQL, name is the name of the database.

# For Oracle, Name is instance of the Oracle server. For express edition

# this is 'xe' by default.

## name=mysqldb

# Database backend to use. This can be:

# 1. mysql

# 2. postgresql

# 3. oracle

## engine=mysql

# IP or hostname of the database to connect to.

## host=localhost

# Port the database server is listening to. Defaults are:

# 1. MySQL: 3306

# 2. PostgreSQL: 5432

# 3. Oracle Express Edition: 1521

## port=3306

# Username to authenticate with when connecting to the database.

## user=example

# Password matching the username to authenticate with when

# connecting to the database.

## password=example

# Database options to send to the server when connecting.

# https://docs.djangoproject.com/en/1.4/ref/databases/

## options={}

###########################################################################

# Settings to configure your Hadoop cluster.

###########################################################################

[hadoop]

# Configuration for HDFS NameNode

# ------------------------------------------------------------------------

[[hdfs_clusters]]

# HA support by using HttpFs

[[[default]]]

# Enter the filesystem uri

fs_defaultfs=hdfs://bigdatamaster:9000

# NameNode logical name.

## logical_name=

# Use WebHdfs/HttpFs as the communication mechanism.

# Domain should be the NameNode or HttpFs host.

# Default port is 14000 for HttpFs.

webhdfs_url=http://bigdatamaster:50070/webhdfs/v1

# Change this if your HDFS cluster is Kerberos-secured

## security_enabled=false

# In secure mode (HTTPS), if SSL certificates from YARN Rest APIs

# have to be verified against certificate authority

## ssl_cert_ca_verify=True

# Directory of the Hadoop configuration

hadoop_conf_dir=/home/hadoop/app/hadoop/etc/hadoop/conf
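Before starting Hue it can be worth checking that the `webhdfs_url` above really answers. The sketch below only builds a WebHDFS `LISTSTATUS` request URL for the root directory. The helper name `webhdfs_liststatus_url` is hypothetical, and the default user `hadoop` is an assumption taken from the superuser setting earlier in this file.

```python
from urllib.parse import quote, urlencode


def webhdfs_liststatus_url(webhdfs_url, path="/", user="hadoop"):
    """Build a WebHDFS URL that lists the given HDFS directory."""
    query = urlencode({"op": "LISTSTATUS", "user.name": user})
    # rstrip avoids a double slash when the base URL already ends with "/".
    return f"{webhdfs_url.rstrip('/')}{quote(path)}?{query}"


url = webhdfs_liststatus_url("http://bigdatamaster:50070/webhdfs/v1")
print(url)
```

Opening the printed URL from a machine that can resolve `bigdatamaster` (in a browser, or with `urllib.request.urlopen`) should return a JSON document with a `FileStatuses` entry if WebHDFS is enabled on the NameNode.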

# Configuration for YARN (MR2)

# ------------------------------------------------------------------------

[[yarn_clusters]]

[[[default]]]

# Enter the host on which you are running the ResourceManager

resourcemanager_host=bigdatamaster

# The port where the ResourceManager IPC listens on

resourcemanager_port=8032

# Whether to submit jobs to this cluster

submit_to=True

# Resource Manager logical name (required for HA)

## logical_name=

# Change this if your YARN cluster is Kerberos-secured

## security_enabled=false

# URL of the ResourceManager API

resourcemanager_api_url=http://bigdatamaster:23188

# URL of the ProxyServer API

proxy_api_url=http://bigdatamaster:8888

# URL of the HistoryServer API

history_server_api_url=http://bigdatamaster:19888

# In secure mode (HTTPS), if SSL certificates from YARN Rest APIs

# have to be verified against certificate authority

## ssl_cert_ca_verify=True
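The ResourceManager and HistoryServer URLs above can be checked in a similar way through their REST info endpoints. This is a small sketch that only builds the two URLs; `yarn_info_urls` is a hypothetical helper, and the ports are simply the ones from my configuration.

```python
def yarn_info_urls(resourcemanager_api_url, history_server_api_url):
    """Return the REST info endpoints for the ResourceManager and HistoryServer."""
    return {
        "resourcemanager": resourcemanager_api_url.rstrip("/") + "/ws/v1/cluster/info",
        "history_server": history_server_api_url.rstrip("/") + "/ws/v1/history/info",
    }


urls = yarn_info_urls("http://bigdatamaster:23188", "http://bigdatamaster:19888")
for name, url in urls.items():
    print(name, url)
```

If either URL does not respond from the Hue machine, the matching port in `yarn-site.xml` or `mapred-site.xml` probably differs from the value written here.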

# HA support by specifying multiple clusters

# e.g.

# [[[ha]]]

# Resource Manager logical name (required for HA)

## logical_name=my-rm-name

# Configuration for MapReduce (MR1)

# ------------------------------------------------------------------------

[[mapred_clusters]]

[[[default]]]

# Enter the host on which you are running the Hadoop JobTracker

## jobtracker_host=localhost

# The port where the JobTracker IPC listens on

## jobtracker_port=8021

# JobTracker logical name for HA

## logical_name=

# Thrift plug-in port for the JobTracker

## thrift_port=9290

# Whether to submit jobs to this cluster

submit_to=False

# Change this if your MapReduce cluster is Kerberos-secured

## security_enabled=false

# HA support by specifying multiple clusters

# e.g.

# [[[ha]]]

# Enter the logical name of the JobTrackers

## logical_name=my-jt-name

###########################################################################

# Settings to configure the Filebrowser app

###########################################################################

[filebrowser]

# Location on local filesystem where the uploaded archives are temporary stored.

## archive_upload_tempdir=/tmp

# Show Download Button for HDFS file browser.

## show_download_button=false

# Show Upload Button for HDFS file browser.

## show_upload_button=false

###########################################################################

# Settings to configure liboozie

###########################################################################

[liboozie]

# The URL where the Oozie service runs on. This is required in order for

# users to submit jobs. Empty value disables the config check.

## oozie_url=http://localhost:11000/oozie

# Requires FQDN in oozie_url if enabled

## security_enabled=false

# Location on HDFS where the workflows/coordinator are deployed when submitted.

## remote_deployement_dir=/user/hue/oozie/deployments

###########################################################################

# Settings to configure the Oozie app

###########################################################################

[oozie]

# Location on local FS where the examples are stored.

## local_data_dir=..../examples

# Location on local FS where the data for the examples is stored.

## sample_data_dir=...thirdparty/sample_data

# Location on HDFS where the oozie examples and workflows are stored.

## remote_data_dir=/user/hue/oozie/workspaces

# Maximum of Oozie workflows or coodinators to retrieve in one API call.

## oozie_jobs_count=50

# Use Cron format for defining the frequency of a Coordinator instead of the old frequency number/unit.

## enable_cron_scheduling=true

###########################################################################

# Settings to configure Beeswax with Hive

###########################################################################

[beeswax]

# Host where HiveServer2 is running.

# If Kerberos security is enabled, use fully-qualified domain name (FQDN).

hive_server_host=bigdatamaster

# Port where HiveServer2 Thrift server runs on.

hive_server_port=10000

# Hive configuration directory, where hive-site.xml is located

hive_conf_dir=/home/hadoop/app/hive/conf
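Hue can only reach Hive if HiveServer2 is actually listening on the host and port given above. A quick TCP check is sketched below. `port_open` is a hypothetical helper, and the demo connects to a throwaway local listener so that it runs anywhere; on the cluster you would call `port_open("bigdatamaster", 10000)` instead.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Self-contained demo: listen on a free local port, then probe it.
with socket.socket() as listener:
    listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    listener.listen(1)
    print(port_open("127.0.0.1", listener.getsockname()[1]))
```

A successful connection only shows that something is listening on the port; it does not prove that the service behind it is HiveServer2.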

# Timeout in seconds for thrift calls to Hive service

## server_conn_timeout=120

# Choose whether to use the old GetLog() thrift call from before Hive 0.14 to retrieve the logs.

# If false, use the FetchResults() thrift call from Hive 1.0 or more instead.

## use_get_log_api=false

# Set a LIMIT clause when browsing a partitioned table.

# A positive value will be set as the LIMIT. If 0 or negative, do not set any limit.

## browse_partitioned_table_limit=250

# The maximum number of partitions that will be included in the SELECT * LIMIT sample query for partitioned tables.

## sample_table_max_partitions=10

# A limit to the number of rows that can be downloaded from a query.

# A value of -1 means there will be no limit.

# A maximum of 65,000 is applied to XLS downloads.

## download_row_limit=1000000

# Hue will try to close the Hive query when the user leaves the editor page.

# This will free all the query resources in HiveServer2, but also make its results inaccessible.

## close_queries=false

# Thrift version to use when communicating with HiveServer2.

# New column format is from version 7.

## thrift_version=7

[[ssl]]

# Path to Certificate Authority certificates.

## cacerts=/etc/hue/cacerts.pem

# Choose whether Hue should validate certificates received from the server.

## validate=true

# Override the default desktop username and password of the hue user used for authentications with other services.

# e.g. LDAP/PAM pass-through authentication.

## auth_username=hue

## auth_password=

###########################################################################

# Settings to configure Impala

###########################################################################

[impala]

# Host of the Impala Server (one of the Impalad)

## server_host=localhost

# Port of the Impala Server

## server_port=21050

# Kerberos principal

## impala_principal=impala/hostname.foo.com

# Turn on/off impersonation mechanism when talking to Impala

## impersonation_enabled=False

# Number of initial rows of a result set to ask Impala to cache in order

# to support re-fetching them for downloading them.

# Set to 0 for disabling the option and backward compatibility.

## querycache_rows=50000

# Timeout in seconds for thrift calls

## server_conn_timeout=120

# Hue will try to close the Impala query when the user leaves the editor page.

# This will free all the query resources in Impala, but also make its results inaccessible.

## close_queries=true

# If QUERY_TIMEOUT_S > 0, the query will be timed out (i.e. cancelled) if Impala does not do any work

# (compute or send back results) for that query within QUERY_TIMEOUT_S seconds.

## query_timeout_s=600

[[ssl]]

# SSL communication enabled for this server.

## enabled=false

# Path to Certificate Authority certificates.

## cacerts=/etc/hue/cacerts.pem

# Choose whether Hue should validate certificates received from the server.

## validate=true

# Override the desktop default username and password of the hue user used for authentications with other services.

# e.g. LDAP/PAM pass-through authentication.

## auth_username=hue

## auth_password=

###########################################################################

# Settings to configure Pig

###########################################################################

[pig]

# Location of piggybank.jar on local filesystem.

## local_sample_dir=/usr/share/hue/apps/pig/examples

# Location piggybank.jar will be copied to in HDFS.

## remote_data_dir=/user/hue/pig/examples

###########################################################################

# Settings to configure Sqoop2

###########################################################################

[sqoop]

# For autocompletion, fill out the librdbms section.

# Sqoop server URL

## server_url=http://localhost:12000/sqoop

# Path to configuration directory

## sqoop_conf_dir=/etc/sqoop2/conf

###########################################################################

# Settings to configure Proxy

###########################################################################

[proxy]

# Comma-separated list of regular expressions,

# which match 'host:port' of requested proxy target.

## whitelist=(localhost|127.0.0.1):(50030|50070|50060|50075)

# Comma-separated list of regular expressions,

# which match any prefix of 'host:port/path' of requested proxy target.

# This does not support matching GET parameters.

## blacklist=

###########################################################################

# Settings to configure HBase Browser

###########################################################################

[hbase]

# Comma-separated list of HBase Thrift servers for clusters in the format of '(name|host:port)'.

# Use full hostname with security.

# If using Kerberos we assume GSSAPI SASL, not PLAIN.

## hbase_clusters=(Cluster|localhost:9090)

# HBase configuration directory, where hbase-site.xml is located.

## hbase_conf_dir=/etc/hbase/conf

# Hard limit of rows or columns per row fetched before truncating.

## truncate_limit = 500

# 'buffered' is the default of the HBase Thrift Server and supports security.

# 'framed' can be used to chunk up responses,

# which is useful when used in conjunction with the nonblocking server in Thrift.

## thrift_transport=buffered

###########################################################################

# Settings to configure Solr Search

###########################################################################

[search]

# URL of the Solr Server

## solr_url=http://localhost:8983/solr/

# Requires FQDN in solr_url if enabled

## security_enabled=false

# Query sent when no term is entered

## empty_query=*:*

# Use latest Solr 5.2+ features.

## latest=false

###########################################################################

# Settings to configure Solr Indexer

###########################################################################

[indexer]

# Location of the solrctl binary.

## solrctl_path=/usr/bin/solrctl

###########################################################################

# Settings to configure Job Designer

###########################################################################

[jobsub]

# Location on local FS where examples and template are stored.

## local_data_dir=..../data

# Location on local FS where sample data is stored

## sample_data_dir=...thirdparty/sample_data

###########################################################################

# Settings to configure Job Browser.

###########################################################################

[jobbrowser]

# Share submitted jobs information with all users. If set to false,

# submitted jobs are visible only to the owner and administrators.

## share_jobs=true

# Whether to disable the job kill button for all users in the job browser.

## disable_killing_jobs=false

###########################################################################

# Settings to configure the Zookeeper application.

###########################################################################

[zookeeper]

[[clusters]]

[[[default]]]

# Zookeeper ensemble. Comma separated list of Host/Port.

# e.g. localhost:2181,localhost:2182,localhost:2183

host_ports=bigdatamaster:2181,bigdataslave1:2181,bigdataslave2:2181

# The URL of the REST contrib service (required for znode browsing).

## rest_url=http://localhost:9998

# Name of Kerberos principal when using security.

## principal_name=zookeeper

###########################################################################

# Settings to configure the Spark application.

###########################################################################

[spark]

# Host address of the Livy Server.

## livy_server_host=localhost

# Port of the Livy Server.

## livy_server_port=8998

# Configure livy to start with 'process', 'thread', or 'yarn' workers.

## livy_server_session_kind=process

# If livy should use proxy users when submitting a job.

## livy_impersonation_enabled=true

# List of available types of snippets

## languages='[{"name": "Scala Shell", "type": "spark"},{"name": "PySpark Shell", "type": "pyspark"},{"name": "R Shell", "type": "r"},{"name": "Jar", "type": "Jar"},{"name": "Python", "type": "py"},{"name": "Impala SQL", "type": "impala"},{"name": "Hive SQL", "type": "hive"},{"name": "Text", "type": "text"}]'

###########################################################################

# Settings for the User Admin application

###########################################################################

[useradmin]

# The name of the default user group that users will be a member of

## default_user_group=default

[[password_policy]]

# Set password policy to all users. The default policy requires password to be at least 8 characters long,

# and contain both uppercase and lowercase letters, numbers, and special characters.

## is_enabled=false

## pwd_regex="^(?=.*?[A-Z])(?=(.*[a-z]){1,})(?=(.*[\d]){1,})(?=(.*[\W_]){1,}).{8,}$"

## pwd_hint="The password must be at least 8 characters long, and must contain both uppercase and lowercase letters, at least one number, and at least one special character."

## pwd_error_message="The password must be at least 8 characters long, and must contain both uppercase and lowercase letters, at least one number, and at least one special character."

###########################################################################

# Settings for the Sentry lib

###########################################################################

[libsentry]

# Hostname or IP of server.

## hostname=localhost

# Port the sentry service is running on.

## port=8038

# Sentry configuration directory, where sentry-site.xml is located.

## sentry_conf_dir=/etc/sentry/conf

###########################################################################

# Settings to configure the ZooKeeper Lib

###########################################################################

[libzookeeper]

# ZooKeeper ensemble. Comma separated list of Host/Port.

# e.g. localhost:2181,localhost:2182,localhost:2183

## ensemble=localhost:2181

# Name of Kerberos principal when using security.

## principal_name=zookeeper
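In the part of the configuration above, only a few values differ from the defaults: `hive_server_host`, `hive_server_port`, `hive_conf_dir`, and the ZooKeeper `host_ports`. Before starting Hue, it can help to confirm that those host/port pairs actually accept connections from the Hue machine. The following is a minimal Python sketch under stated assumptions: the helper names `parse_host_ports`, `is_reachable`, and `check_targets` are hypothetical and are not part of Hue, and the check only confirms that a TCP connection opens. It does not verify that HiveServer2 or ZooKeeper respond correctly.

```python
import socket


def parse_host_ports(value):
    """Split a 'host:port,host:port' string (the host_ports format) into tuples."""
    pairs = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate stray commas or spaces
        host, _, port = item.rpartition(":")
        pairs.append((host, int(port)))
    return pairs


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable host names.
        return False


def check_targets(targets, timeout=3.0):
    """Map each (host, port) pair to whether it is reachable."""
    return {(host, port): is_reachable(host, port, timeout) for host, port in targets}
```

For the cluster in this post, one possible call would be `check_targets([("bigdatamaster", 10000)] + parse_host_ports("bigdatamaster:2181,bigdataslave1:2181,bigdataslave2:2181"))`. Any pair that maps to `False` probably points to a service that is not running, a wrong host name in `/etc/hosts`, or a firewall rule.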

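You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/, http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html

Life is short, and I am happy to share. This public account holds to the open-source spirit of lifelong learning, exchange, and sharing, and gathers the most useful material from the internet and from my own study and work. Everything comes from the internet and is given back to the internet.

Current research areas: big data, machine learning, deep learning, artificial intelligence, data mining, and data analysis. Languages and tools covered: Java, Scala, Python, Shell, Linux, and others. It also covers everyday tips, problems, and useful software for phones, computers, and the internet. As long as you keep following and stay in the group, you will learn something every day.

QQ group for discussion and Q&A on this platform: 大数据和人工智能躺过的坑 (main group) (161156071)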