1. Download the Flink binary package

Official downloads page:

https://flink.apache.org/downloads.html#apache-flink-1112

Download link (an Apache mirror selection page):

https://www.apache.org/dyn/closer.lua/flink/flink-1.11.2/flink-1.11.2-bin-scala_2.11.tgz
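The closer.lua link opens a mirror selection page rather than the archive itself. For scripted installs, a minimal sketch that builds a direct download URL, assuming the release is kept under the Apache archive layout (https://archive.apache.org/dist/flink/...); the function name flink_archive_url is hypothetical:

```shell
# Sketch: build the direct archive URL for a Flink binary release.
# Assumption: releases are kept under https://archive.apache.org/dist/flink/
flink_archive_url() {
  local flink_version="$1"   # e.g. 1.11.2
  local scala_version="$2"   # e.g. 2.11
  printf 'https://archive.apache.org/dist/flink/flink-%s/flink-%s-bin-scala_%s.tgz\n' \
    "$flink_version" "$flink_version" "$scala_version"
}

flink_archive_url 1.11.2 2.11
# To download (requires network access):
#   curl -fLO "$(flink_archive_url 1.11.2 2.11)"
```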

2. Extract the package

tar -xvf flink-1.11.2-bin-scala_2.11.tgz

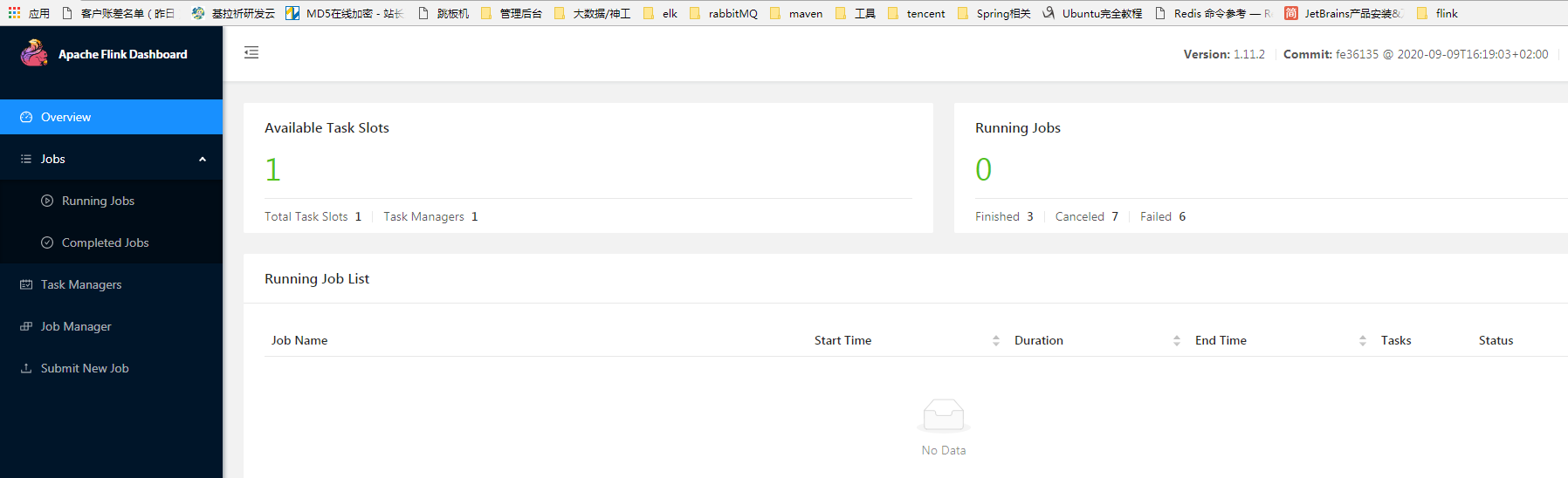

3. Start the Flink cluster

sudo ./bin/start-cluster.sh

(To check that the cluster started, open http://192.168.**.**:8081/#/overview in a browser.)
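The #/overview part of that URL is a web UI route; the same port also serves Flink's REST API, where GET /overview returns a JSON summary that includes a "taskmanagers" count. A minimal sketch for checking this from a terminal, assuming the default port 8081; the helper name taskmanager_count and the <host> placeholder are hypothetical:

```shell
# Sketch: extract the "taskmanagers" count from the JSON returned by
# Flink's REST endpoint /overview (read from stdin).
taskmanager_count() {
  # Print only the digits that follow "taskmanagers":
  sed -n 's/.*"taskmanagers":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage against a running cluster (replace <host> with the JobManager address):
#   curl -s http://<host>:8081/overview | taskmanager_count
# A result of 1 or more means at least one TaskManager has registered.
```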

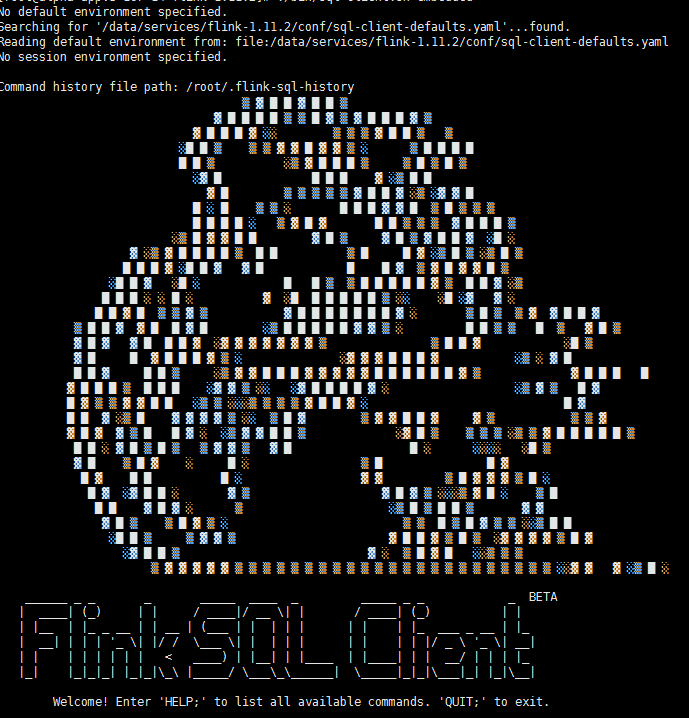

4. Start the SQL client

sudo ./bin/sql-client.sh embedded

5. Once the client has started, you will see the flink sql> prompt (enter quit; to exit).

6.1 Basic usage

SELECT name, COUNT(*) AS cnt FROM (VALUES ('Bob'), ('Alice'), ('Greg'), ('Bob')) AS nameTable(name) GROUP BY name;
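The query counts how many times each name appears in the inline VALUES table, so the final counts are Alice 1, Bob 2 and Greg 1 (while the streaming job runs, Bob's count may first show as 1 and then update to 2). As a worked illustration only, the same grouping can be reproduced in plain shell; count_by_name is a hypothetical helper and has nothing to do with how Flink executes the query:

```shell
# Emulate: SELECT name, COUNT(*) AS cnt FROM (VALUES ...) GROUP BY name
count_by_name() {
  # awk keeps one counter per distinct name (the GROUP BY + COUNT(*));
  # sort only fixes the output order, which SQL leaves unspecified.
  awk '{ cnt[$1]++ } END { for (name in cnt) print name, cnt[name] }' | sort
}

printf '%s\n' Bob Alice Greg Bob | count_by_name
```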

6.2 Connecting to Kafka

6.2.1 Add the extension JARs (out of the box, only filesystem sources such as CSV files are supported)

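Create a new folder named kafka and put flink-json-1.11.2.jar and flink-sql-connector-kafka_2.11-1.11.2.jar in it (you can download them to your local repository with Maven and then copy them into the kafka folder). The Scala suffix of the connector JAR must match the Scala version of the Flink distribution, which is scala_2.11 for the package downloaded above.

<dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-sql-connector-kafka_2.11</artifactId> <version>1.11.2</version> </dependency> <dependency> <groupId>org.apache.flink</groupId> <artifactId>flink-json</artifactId> <version>1.11.2</version> </dependency>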
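Instead of going through a Maven project, the two JARs can also be fetched directly from Maven Central, which uses the standard groupId/artifactId/version path layout. A minimal sketch that derives both URLs from the Flink and Scala versions; the helper name kafka_sql_jars is hypothetical, and note that flink-json has no Scala suffix while the Kafka SQL connector does:

```shell
# Sketch: print the Maven Central URLs of the Kafka SQL connector and the
# JSON format JARs for a given Flink version and Scala version.
MAVEN_BASE="https://repo1.maven.org/maven2/org/apache/flink"

kafka_sql_jars() {
  local flink_version="$1"   # e.g. 1.11.2
  local scala_version="$2"   # must match the distribution, e.g. 2.11
  local kafka="flink-sql-connector-kafka_${scala_version}"
  echo "${MAVEN_BASE}/${kafka}/${flink_version}/${kafka}-${flink_version}.jar"
  echo "${MAVEN_BASE}/flink-json/${flink_version}/flink-json-${flink_version}.jar"
}

kafka_sql_jars 1.11.2 2.11
# To fetch them into the kafka/ folder (requires network access):
#   mkdir -p kafka && kafka_sql_jars 1.11.2 2.11 | (cd kafka && xargs -n1 curl -fLO)
```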

6.2.2 启动sql-client

sudo ./bin/sql-client.sh embedded -l kafka/

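-l,--library <JAR directory> adds every JAR in the given directory to each new session (for example connectors, formats, or user-defined functions). For details, see https://ci.apache.org/projects/flink/flink-docs-release-1.11/zh/dev/table/sqlClient.html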
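A missing or misnamed JAR in that directory tends to surface only later, when the Kafka table is created or queried. A minimal pre-flight check, assuming the two JAR names used in this guide (the _2.11 connector matching the scala_2.11 distribution); check_kafka_lib is a hypothetical helper:

```shell
# Sketch: verify that the -l directory contains the Kafka connector and
# JSON format JARs. Prints each missing file to stderr and returns 1.
check_kafka_lib() {
  local dir="$1" flink_version="$2" scala_version="$3"
  local missing=0 jar
  for jar in "flink-sql-connector-kafka_${scala_version}-${flink_version}.jar" \
             "flink-json-${flink_version}.jar"; do
    if [ ! -f "${dir}/${jar}" ]; then
      echo "missing: ${dir}/${jar}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_kafka_lib kafka 1.11.2 2.11 && sudo ./bin/sql-client.sh embedded -l kafka/
```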

6.2.3 Create a Kafka table

CREATE TABLE CustomerStatusChangedEvent (customerId int, oStatus int, nStatus int) WITH ('connector.type' = 'kafka', 'connector.version' = 'universal', 'connector.properties.group.id' = 'g2.group1', 'connector.properties.bootstrap.servers' = '192.168.1.85:9092,192.168.1.86:9092', 'connector.properties.zookeeper.connect' = '192.168.1.85:2181', 'connector.topic' = 'customer_statusChangedEvent', 'connector.startup-mode' = 'earliest-offset', 'format.type' = 'json');
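The statement above uses the legacy 'connector.type' option keys. Flink 1.11 also introduced a new set of keys for the Kafka SQL connector ('connector' = 'kafka', 'topic', 'properties.bootstrap.servers', 'properties.group.id', 'scan.startup.mode', 'format'). A minimal sketch that prints an equivalent DDL in the new style, to be pasted at the flink sql> prompt; the helper name kafka_table_ddl is hypothetical, the three columns are hard-coded from this example, and the keys are worth double-checking against the 1.11 Kafka connector documentation:

```shell
# Sketch: print a CREATE TABLE statement for the same topic using the
# option keys introduced in Flink 1.11. Columns are hard-coded.
kafka_table_ddl() {
  local table="$1" topic="$2" servers="$3" group="$4"
  cat <<EOF
CREATE TABLE ${table} (
  customerId INT,
  oStatus INT,
  nStatus INT
) WITH (
  'connector' = 'kafka',
  'topic' = '${topic}',
  'properties.bootstrap.servers' = '${servers}',
  'properties.group.id' = '${group}',
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'json'
);
EOF
}

kafka_table_ddl CustomerStatusChangedEvent customer_statusChangedEvent \
  192.168.1.85:9092,192.168.1.86:9092 g2.group1
```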

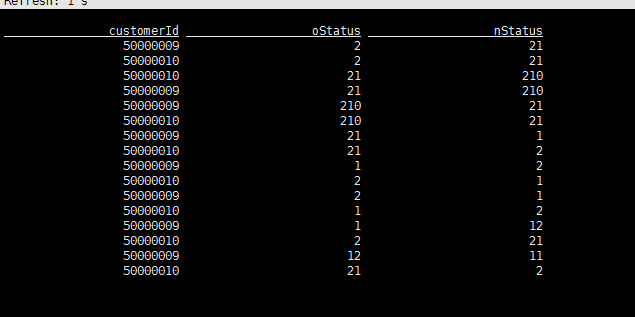

6.2.4 Run a query

select * from CustomerStatusChangedEvent ;
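The query only shows rows once the topic contains records, and with the JSON format each Kafka message is mapped to columns by field name. A minimal sketch that formats a test record matching this table's schema; status_event_json is a hypothetical helper, and the producer command in the comment assumes Kafka's bundled console producer (older Kafka versions use --broker-list, newer ones use --bootstrap-server):

```shell
# Sketch: format one test record as a JSON line whose field names match
# the table's columns (customerId, oStatus, nStatus).
status_event_json() {
  printf '{"customerId":%d,"oStatus":%d,"nStatus":%d}\n' "$1" "$2" "$3"
}

status_event_json 1001 1 2
# Send it to the topic (requires a reachable Kafka cluster):
#   status_event_json 1001 1 2 | kafka-console-producer.sh \
#     --broker-list 192.168.1.85:9092 --topic customer_statusChangedEvent
```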

6.2.5 Query output

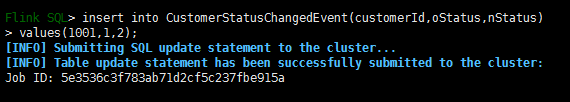

6.2.6 Insert data

insert into CustomerStatusChangedEvent(customerId,oStatus,nStatus) values(1001,1,2);

insert into CustomerStatusChangedEvent(customerId,oStatus,nStatus) values(1001,1,2),(1002,10,2),(1003,1,20);