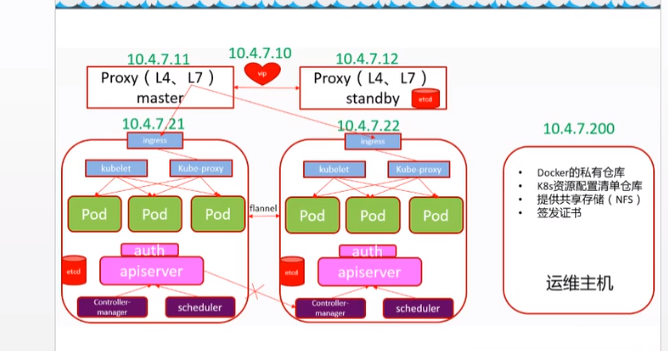

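This is a binary (non-kubeadm) deployment of K8S on 5 machines. Almost every component gets its own certificate, five sets in total, all signed by a private CA server that has to be set up first. Signing this many certificates is tedious.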

Components that need certificates:

etcd cluster -- 3 machines

apiserver -- 2 machines

Node components:

kubelet

kube-proxy; both client and server certificates have to be issued

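1. Basic environment preparation

All 5 machines run CentOS 7.4. First disable iptables and SELinux, switch the yum repositories to the Aliyun mirror, and install some common tools.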

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all

yum makecache

yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

1.1 Set up a private DNS server

Set up bind on 10.4.7.11: run yum install bind, then edit the configuration file with vi /etc/named.conf

listen-on port 53 { 10.4.7.11; };

allow-query { any; };

forwarders { 10.4.7.254; };

recursion yes;

dnssec-enable no;

dnssec-validation no;

##########

named-checkconf

vi /etc/named.rfc1912.zones

zone "host.com" IN {

type master;

# host.com is a custom domain used only on the internal network

file "host.com.zone";

allow-update { 10.4.7.11; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 10.4.7.11; };

};

##########

# named.conf sets directory "/var/named"; so the zone files live there, and their names must match the file entries declared above (host.com.zone and od.com.zone).

vi /var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2020032001 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

@ IN NS dns.host.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

HDSS7-11 A 10.4.7.11

HDSS7-12 A 10.4.7.12

HDSS7-21 A 10.4.7.21

HDSS7-22 A 10.4.7.22

HDSS7-200 A 10.4.7.200

##########

vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020032001 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

@ IN NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

# Start the DNS service

named-checkconf

systemctl start named

netstat -lntup|grep 53

Try resolving a few hosts:

dig -t A hdss7-11.host.com @10.4.7.11 +short

Then change the DNS server on the other hosts to 10.4.7.11:

vi /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=10.4.7.11

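1.2 Set up a private CA certificate server

Set up the private certificate service on 10.4.7.200. It signs the certificates for the k8s components. From here on the components authenticate each other with these certificates, so they matter a lot, and the validity period should be set generously.

Install cfssl

openssl can also issue certificates; cfssl is used here.

# Install the cfssl commands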

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo
chmod +x /usr/bin/cfssl*

Then create the CSR configuration file used to generate the CA certificate:

mkdir /opt/certs

vi /opt/certs/ca-csr.json

{

"CN": "OldboyEdu",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

# The CA certificate is valid for 20 years (175200h).
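The expiry field is given in hours, so 20 years works out to 20 x 365 x 24 = 175200h. A minimal sketch of that conversion, in case a different validity period is wanted; years_to_hours is a helper name made up for this note (it is not part of cfssl) and it assumes 365-day years, which is the assumption behind 175200h.

```shell
#!/bin/sh
# years_to_hours is a hypothetical helper, not part of cfssl.
# It converts whole years into the "<n>h" form used by the "expiry" field,
# assuming 365-day years (leap days are ignored).
years_to_hours() {
    echo "$(( $1 * 365 * 24 ))h"
}

years_to_hours 20
```

The printed value can be pasted into the "expiry" fields of ca-csr.json and ca-config.json.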

Then generate the CA certificate from this configuration file:

cd /opt/certs

cfssl gencert -initca ca-csr.json | cfssl-json -bare ca

This generates ca.csr, ca-key.pem and ca.pem next to the existing ca-csr.json.

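2. Deploy docker and docker harbor

Deploy on the three machines 10.4.7.21, 10.4.7.22 and 10.4.7.200. Use the Aliyun mirror for docker first, because the official docker repository is too slow.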

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun # run on all three machines

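mkdir /etc/docker
vi /etc/docker/daemon.json
{
  "graph": "/data/docker",
  "storage-driver": "overlay2",
  "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],
  "registry-mirrors": ["https://st0d9feo.mirror.aliyuncs.com"],
  "bip": "172.7.21.1/24",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}

Notes on this file (JSON does not allow comments, so keep them out of daemon.json itself):

graph: docker's data directory. Newer Docker releases call this key data-root.

registry-mirrors: this is my own Aliyun docker mirror address; register with Aliyun to get your own.

bip: on 7.22 change it to 172.7.22.1/24, and on 7.200 to 172.7.200.1/24. bip sets the IP address and subnet of the docker0 bridge, so the containers on each host get addresses from a host-specific subnet and the host a container runs on can be read from its IP.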

Start docker

mkdir -p /data/docker
systemctl start docker
systemctl enable docker
docker --version
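The bip values in daemon.json follow a pattern tied to the host IP: 10.4.7.21 gets 172.7.21.1/24, 10.4.7.22 gets 172.7.22.1/24, and so on. A small sketch of that mapping, so the value does not have to be worked out by hand on each machine; bip_for_host is a made-up helper name, and the 172.x.y.1/24 layout is simply the convention used in these notes.

```shell
#!/bin/sh
# bip_for_host is a hypothetical helper. It maps a host IP such as 10.4.7.21
# to the docker bridge address 172.7.21.1/24 used in these notes:
# the host's third octet becomes the second octet of bip, and the host's
# last octet becomes the third octet of bip.
bip_for_host() {
    ip=$1
    rest=${ip#*.*.}      # drop the first two octets: 10.4.7.21 -> 7.21
    third=${rest%%.*}    # 7
    fourth=${rest#*.}    # 21
    echo "172.${third}.${fourth}.1/24"
}

for host in 10.4.7.21 10.4.7.22 10.4.7.200; do
    echo "$host -> $(bip_for_host "$host")"
done
```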

2.2 Deploy harbor, the private docker image registry

Deploy docker harbor on 10.4.7.200

harbor's official GitHub address:

https://github.com/goharbor/harbor

[root@hdss7-200 src]# tar xf harbor-offline-installer-v1.8.3.tgz -C /opt/

[root@hdss7-200 opt]# mv harbor/ harbor-v1.8.3

[root@hdss7-200 opt]# ln -s /opt/harbor-v1.8.3/ /opt/harbor

yum install docker-compose -y

[root@hdss7-200 harbor]# ./install.sh

# Check that harbor started

# docker-compose ps

# docker ps -a

Expose harbor through an nginx reverse proxy. The proxy target below assumes harbor's HTTP port was set to 180 in harbor's configuration before install.sh was run; that step is not shown in these notes.

[root@hdss7-200 harbor]# yum install nginx -y
[root@hdss7-200 harbor]# vi /etc/nginx/conf.d/harbor.od.com.conf
server {
    listen 80;
    server_name harbor.od.com;
    client_max_body_size 1000m;
    location / {
        # 127.0.0.1:180 is harbor's IP and port
        proxy_pass http://127.0.0.1:180;
    }
}
[root@hdss7-200 harbor]# nginx -t
[root@hdss7-200 harbor]# systemctl start nginx
[root@hdss7-200 harbor]# systemctl enable nginx

Then try pushing an image to harbor

[root@hdss7-200 harbor]# docker login harbor.od.com    # enter the harbor user name and password
[root@hdss7-200 harbor]# docker push harbor.od.com/public/nginx:v1.7.9    # the public project must be created in harbor first, otherwise the push fails

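3. Deploy the master nodes

3.1 Deploy the etcd cluster

Three machines are used here: 10.4.7.12, 10.4.7.21 and 10.4.7.22. Deploy the etcd cluster first. etcd elects its leader by itself, so the roles are not configured by hand; in the member list shown later 10.4.7.12 is the leader and 10.4.7.21 and 10.4.7.22 are followers.

Start on 7.200 by writing the certificate signing configuration used for etcd cluster communication.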

vi /opt/certs/ca-config.json
{
    "signing": {
        "default": {
            "expiry": "175200h"
        },
        "profiles": {
            "server": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "server auth"
                ]
            },
            "client": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "client auth"
                ]
            },
            "peer": {
                "expiry": "175200h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "server auth",
                    "client auth"
                ]
            }
        }
    }
}

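# This defines three profiles: server, client and peer, which are used when generating the certificates below. A single profile would also work: define one custom profile, for example k8s, and use it for every certificate that gets issued:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=k8s <component>-csr.json | cfssl-json -bare <component-name>    (the component name is only there to tell the output files apart)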

vi /opt/certs/etcd-peer-csr.json
{
    "CN": "k8s-etcd",
    "hosts": [
        "10.4.7.11",
        "10.4.7.12",
        "10.4.7.21",
        "10.4.7.22"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}

cd /opt/certs/
[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

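cfssl parameters:

- gencert: generate a new key and a signed certificate
- -ca: the CA certificate
- -ca-key: the CA private key file
- -config: the signing configuration file (ca-config.json here), which holds the profiles
- -profile: must name a profile defined in the -config file, otherwise the certificate cannot be created; the certificate's expiry and usages are taken from that profile section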
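Every certificate in these notes is signed with the same command shape, with only the profile and the component name changing. The sketch below prints that command line for a given profile and component, and rejects a profile that is not defined in ca-config.json. gencert_cmd is a made-up helper name, and it assumes the CSR file is called <name>-csr.json, which is true for most but not all of the certificates here (kube-proxy uses a different output name).

```shell
#!/bin/sh
# gencert_cmd is a hypothetical helper: it only prints the cfssl command line
# for one component, it does not run cfssl.
#   $1 = profile (one of the profiles in ca-config.json: server, client, peer)
#   $2 = component name; the CSR file is assumed to be <name>-csr.json
gencert_cmd() {
    profile=$1
    name=$2
    case "$profile" in
        server|client|peer) ;;
        *) echo "unknown profile: $profile" >&2; return 1 ;;
    esac
    echo "cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=$profile $name-csr.json | cfssl-json -bare $name"
}

gencert_cmd peer etcd-peer
```

Printing instead of executing keeps the sketch safe to try on a machine without cfssl; the printed line can be run in /opt/certs on 7.200.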

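Deploy etcd on 10.4.7.12

wget https://github.com/etcd-io/etcd/releases/download/v3.1.20/etcd-v3.1.20-linux-amd64.tar.gz
tar xf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/
mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

The later steps refer to /opt/etcd-v3.1.20/ and /opt/etcd and run etcd as the etcd user, so they assume the extracted directory was renamed and symlinked to /opt/etcd (the same way as for harbor) and that an etcd user exists; those commands are not shown in these notes.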

# Then scp the etcd certificates just generated on 7.200 over to this machine

[root@hdss7-12 certs]# scp 10.4.7.200:/opt/certs/ca.pem .

[root@hdss7-12 certs]# scp 10.4.7.200:/opt/certs/etcd-peer.pem .

[root@hdss7-12 certs]# scp 10.4.7.200:/opt/certs/etcd-peer-key.pem .

Create the etcd startup script. On 7.21 and 7.22, change --name and every 10.4.7.12 in the --listen-peer-urls, --listen-client-urls, --initial-advertise-peer-urls and --advertise-client-urls values to the local IP; --advertise-client-urls is the address announced to clients.

vim /opt/etcd/etcd-server-startup.sh
#!/bin/sh
./etcd --name etcd-server-7-12 \
       --data-dir /data/etcd/etcd-server \
       --listen-peer-urls https://10.4.7.12:2380 \
       --listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
       --quota-backend-bytes 8000000000 \
       --initial-advertise-peer-urls https://10.4.7.12:2380 \
       --advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
       --initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
       --ca-file ./certs/ca.pem \
       --cert-file ./certs/etcd-peer.pem \
       --key-file ./certs/etcd-peer-key.pem \
       --client-cert-auth \
       --trusted-ca-file ./certs/ca.pem \
       --peer-ca-file ./certs/ca.pem \
       --peer-cert-file ./certs/etcd-peer.pem \
       --peer-key-file ./certs/etcd-peer-key.pem \
       --peer-client-cert-auth \
       --peer-trusted-ca-file ./certs/ca.pem \
       --log-output stdout

chmod +x /opt/etcd/etcd-server-startup.sh
chown -R etcd.etcd /opt/etcd-v3.1.20/ /data/etcd/ /data/logs/etcd-server/
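Since the startup script differs between the three nodes only in the member name and the local IP, those values can be derived from the IP instead of being edited by hand. A rough sketch, assuming the naming used here (10.4.7.12 becomes etcd-server-7-12); etcd_node_name and etcd_initial_cluster are made-up helper names, not etcd commands.

```shell
#!/bin/sh
# Hypothetical helpers that derive the per-node etcd values from the node IP,
# following the naming used in these notes (10.4.7.12 -> etcd-server-7-12).
etcd_node_name() {
    ip=$1
    rest=${ip#*.*.}                       # 10.4.7.12 -> 7.12
    echo "etcd-server-${rest%%.*}-${rest#*.}"
}

# Build the --initial-cluster value from a list of node IPs.
etcd_initial_cluster() {
    cluster=""
    for ip in "$@"; do
        member="$(etcd_node_name "$ip")=https://${ip}:2380"
        cluster="${cluster:+$cluster,}$member"   # comma-join the members
    done
    echo "$cluster"
}

etcd_node_name 10.4.7.21
etcd_initial_cluster 10.4.7.12 10.4.7.21 10.4.7.22
```

The second call prints the same string that is passed to --initial-cluster in the startup script.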

Start etcd through supervisor, so that it is started again automatically after a crash or a reboot.

yum install supervisor -y
[root@hdss7-12 ~]# systemctl start supervisord
[root@hdss7-12 ~]# systemctl enable supervisord
[root@hdss7-12 ~]# vi /etc/supervisord.d/etcd-server.ini
[program:etcd-server]
command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/etcd ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=etcd ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update
[root@hdss7-12 ~]# supervisorctl status
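The same supervisor section comes back for every component in these notes, with only the program name, startup script, working directory, user and log file changing. A small sketch that prints such a section from those five values; supervisor_ini is a made-up helper name and the fixed settings are just the ones used in this deployment.

```shell
#!/bin/sh
# supervisor_ini is a hypothetical helper that prints a supervisor program
# section with the settings used in these notes.
#   $1 = program name, $2 = startup script, $3 = working directory,
#   $4 = user, $5 = stdout log file
supervisor_ini() {
    cat <<EOF
[program:$1]
command=$2
numprocs=1
directory=$3
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=$4
redirect_stderr=true
stdout_logfile=$5
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}

supervisor_ini etcd-server /opt/etcd/etcd-server-startup.sh /opt/etcd etcd \
    /data/logs/etcd-server/etcd.stdout.log
```

Redirecting the output to a file under /etc/supervisord.d/ and running supervisorctl update would register the program.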

Once 7.12 starts without problems, repeat the same steps on 7.21 and 7.22, remembering to change the IPs in the etcd startup script, then verify the etcd status.

[root@hdss7-21 etcd]# ./etcdctl cluster-health
member 988139385f78284 is healthy: got healthy result from http://127.0.0.1:2379
member 5a0ef2a004fc4349 is healthy: got healthy result from http://127.0.0.1:2379
member f4a0cb0a765574a8 is healthy: got healthy result from http://127.0.0.1:2379
cluster is healthy

[root@hdss7-21 etcd]# ./etcdctl member list
988139385f78284: name=etcd-server-7-22 peerURLs=https://10.4.7.22:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.22:2379 isLeader=false
5a0ef2a004fc4349: name=etcd-server-7-21 peerURLs=https://10.4.7.21:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.21:2379 isLeader=false
f4a0cb0a765574a8: name=etcd-server-7-12 peerURLs=https://10.4.7.12:2380 clientURLs=http://127.0.0.1:2379,https://10.4.7.12:2379 isLeader=true

# The output above is what a healthy cluster looks like

3.2 Deploy the kube-apiserver cluster

Deploy on 10.4.7.21 and 10.4.7.22. First download the server package:

https://github.com/kubernetes/kubernetes/releases/tag/v1.15.2
CHANGELOG-1.15.md --> server binaries --> kubernetes-server-linux-amd64.tar.gz

# tar xf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt

cd /opt/kubernetes/server/bin    # bin contains a pile of kube binaries

[root@hdss7-22 bin]# ll

total 1548820

-rwxr-xr-x 1 root root 43534816 Aug 5 2019 apiextensions-apiserver

drwxr-xr-x 2 root root 228 Apr 21 16:29 cert

-rwxr-xr-x 1 root root 100548640 Aug 5 2019 cloud-controller-manager

-rw-r--r-- 1 root root 8 Aug 5 2019 cloud-controller-manager.docker_tag

-rw-r--r-- 1 root root 144437760 Aug 5 2019 cloud-controller-manager.tar

drwxr-xr-x 2 root root 79 Apr 21 16:34 conf

-rwxr-xr-x 1 root root 200648416 Aug 5 2019 hyperkube

-rwxr-xr-x 1 root root 164501920 Aug 5 2019 kube-apiserver

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-apiserver.docker_tag

-rw-r--r-- 1 root root 208390656 Aug 5 2019 kube-apiserver.tar

-rwxr-xr-x 1 root root 116397088 Aug 5 2019 kube-controller-manager

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-controller-manager.docker_tag

-rw-r--r-- 1 root root 160286208 Aug 5 2019 kube-controller-manager.tar

-rwxr-xr-x 1 root root 36987488 Aug 5 2019 kube-proxy

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-proxy.docker_tag

-rwxr-xr-x 1 root root 189 Apr 21 16:48 kube-proxy.sh

-rw-r--r-- 1 root root 84282368 Aug 5 2019 kube-proxy.tar

-rwxr-xr-x 1 root root 38786144 Aug 5 2019 kube-scheduler

-rw-r--r-- 1 root root 8 Aug 5 2019 kube-scheduler.docker_tag

-rw-r--r-- 1 root root 82675200 Aug 5 2019 kube-scheduler.tar

-rwxr-xr-x 1 root root 40182208 Aug 5 2019 kubeadm

-rwxr-xr-x 1 root root 42985504 Aug 5 2019 kubectl

-rwxr-xr-x 1 root root 119616640 Aug 5 2019 kubelet

-rwxr-xr-x 1 root root 1648224 Aug 5 2019 mounter

More certificates are needed now, two sets: an API client certificate and an apiserver certificate.

Sign the client certificate on hdss7-200.host.com:

vim /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

Create the JSON configuration file for the apiserver certificate:

vi /opt/certs/apiserver-csr.json

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver
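One entry in the hosts list is worth a remark: 192.168.0.1 is there because, inside the cluster, the apiserver is reached through the kubernetes service, and that service takes the first address of the service range (192.168.0.0/16 in this deployment, as the kubectl get svc output at the end shows). A minimal sketch of that arithmetic for a different range; first_service_ip is a made-up helper name and it assumes the CIDR is written with its network address.

```shell
#!/bin/sh
# first_service_ip is a hypothetical helper. Given a service CIDR written
# with its network address (for example 192.168.0.0/16), it prints the first
# usable address, which is the one the "kubernetes" service receives.
# It assumes the last octet of the network address is below 255.
first_service_ip() {
    net=${1%/*}            # strip the prefix length: 192.168.0.0
    head=${net%.*}         # 192.168.0
    last=${net##*.}        # 0
    echo "${head}.$(( last + 1 ))"
}

first_service_ip 192.168.0.0/16
```

If the service range is changed, the printed address is the one that has to appear in the apiserver certificate's hosts list.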

# scp the certificates generated on 7.200 to /opt/kubernetes/server/bin/cert on 7.21 and 7.22

[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/ca.pem .
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/ca-key.pem .
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client.pem .
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/client-key.pem .
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/apiserver.pem .
[root@hdss7-21 cert]# scp hdss7-200:/opt/certs/apiserver-key.pem .

Write the apiserver startup script and supervisor configuration on 7.21

vim /opt/kubernetes/server/bin/kube-apiserver.sh
#!/bin/bash
./kube-apiserver \
  --apiserver-count 2 \
  --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
  --audit-policy-file ./conf/audit.yaml \
  --authorization-mode RBAC \
  --client-ca-file ./cert/ca.pem \
  --requestheader-client-ca-file ./cert/ca.pem \
  --enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \
  --etcd-cafile ./cert/ca.pem \
  --etcd-certfile ./cert/client.pem \
  --etcd-keyfile ./cert/client-key.pem \
  --etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
  --service-account-key-file ./cert/ca-key.pem \
  --service-cluster-ip-range 192.168.0.0/16 \
  --service-node-port-range 3000-29999 \
  --target-ram-mb=1024 \
  --kubelet-client-certificate ./cert/client.pem \
  --kubelet-client-key ./cert/client-key.pem \
  --log-dir /data/logs/kubernetes/kube-apiserver \
  --tls-cert-file ./cert/apiserver.pem \
  --tls-private-key-file ./cert/apiserver-key.pem \
  --v 2

# chmod +x kube-apiserver.sh
# mkdir -p /data/logs/kubernetes/kube-apiserver

vi /etc/supervisord.d/kube-apiserver.ini
[program:kube-apiserver]
command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

# supervisorctl update

Create the audit.yaml file on 7.21 and 7.22. I have not fully worked out the contents of this file; it is the audit policy that tells the apiserver which requests to log and at what level.

[root@hdss7-21 conf]# pwd
/opt/kubernetes/server/bin/conf
[root@hdss7-21 conf]# cat audit.yaml
apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

3.3 Deploy the layer-4 reverse proxy

Deploy on 7.11 and 7.12, using 10.4.7.10 as the VIP

yum install nginx keepalived -y
vi /etc/nginx/nginx.conf
# append at the end of the file, at the top level (outside the http block)
stream {
    upstream kube-apiserver {
        server 10.4.7.21:6443 max_fails=3 fail_timeout=30s;
        server 10.4.7.22:6443 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 7443;
        proxy_connect_timeout 2s;
        proxy_timeout 900s;
        proxy_pass kube-apiserver;
    }
}
[root@hdss7-11 etcd]# nginx -t

Check script

[root@hdss7-11 ~]# vi /etc/keepalived/check_port.sh
#!/bin/bash
# keepalived port monitoring script
# Usage, in the keepalived configuration file:
# vrrp_script check_port {                          # define a vrrp_script check
#     script "/etc/keepalived/check_port.sh 6379"   # the port to check
#     interval 2                                    # how often to run the check, in seconds
# }
CHK_PORT=$1
if [ -n "$CHK_PORT" ];then
        PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`
        if [ $PORT_PROCESS -eq 0 ];then
                echo "Port $CHK_PORT Is Not Used,End."
                exit 1
        fi
else
        echo "Check Port Cant Be Empty!"
fi
[root@hdss7-11 ~]# chmod +x /etc/keepalived/check_port.sh

##########

Configuration files

keepalived master:

[root@hdss7-11 conf.d]# vi /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   router_id 10.4.7.11
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_port.sh 7443"
    interval 2
    weight -20
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 251
    priority 100
    advert_int 1
    mcast_src_ip 10.4.7.11
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 11111111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        10.4.7.10
    }
}

keepalived backup:

[root@hdss7-12 conf.d]# vi /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   router_id 10.4.7.12
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_port.sh 7443"
    interval 2
    weight -20
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 251
    mcast_src_ip 10.4.7.12
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 11111111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        10.4.7.10
    }
}

nopreempt: non-preemptive mode, meaning a recovered higher-priority node does not take the VIP back automatically. Note that the keepalived documentation says nopreempt only takes effect when the instance's initial state is BACKUP, so with state MASTER as above it may be ignored.
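One weakness of the check in check_port.sh is that grep matches the port number anywhere in the line, so a check for 443 would also succeed when only 7443 is listening. A stricter variant is sketched below; port_listening is a made-up function name, it reads ss -lnt style output from stdin so it can be tried without a real listener, and the sample data is invented for the demo.

```shell
#!/bin/sh
# port_listening is a hypothetical, stricter variant of the check in
# check_port.sh. It reads `ss -lnt` style output on stdin and succeeds only
# if some local address (4th column) ends in exactly ":<port>".
port_listening() {
    awk -v port="$1" '
        NR > 1 && $4 ~ (":" port "$") { found = 1 }   # skip the header line
        END { exit !found }
    '
}

# Sample input in the column layout printed by `ss -lnt` (made-up data).
sample='State  Recv-Q Send-Q Local Address:Port  Peer Address:Port
LISTEN 0      128    *:7443              *:*
LISTEN 0      128    127.0.0.1:2379      *:*'

if echo "$sample" | port_listening 7443; then echo "7443 is listening"; fi
if ! echo "$sample" | port_listening 443; then echo "443 is not listening"; fi
```

On a real host the function would be fed with ss -lnt | port_listening 7443, and its exit status used the same way as in check_port.sh.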

Then start nginx and keepalived on both machines and check which one holds the VIP.

3.4 Deploy controller-manager

Deploy on 7.21 and 7.22, using the binary from the server package extracted earlier.

vi /opt/kubernetes/server/bin/kube-controller-manager.sh
#!/bin/sh
./kube-controller-manager \
  --cluster-cidr 172.7.0.0/16 \
  --leader-elect true \
  --log-dir /data/logs/kubernetes/kube-controller-manager \
  --master http://127.0.0.1:8080 \
  --service-account-private-key-file ./cert/ca-key.pem \
  --service-cluster-ip-range 192.168.0.0/16 \
  --root-ca-file ./cert/ca.pem \
  --v 2

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh
mkdir -p /data/logs/kubernetes/kube-controller-manager

vi /etc/supervisord.d/kube-controller-manager.ini
[program:kube-controller-manager]
command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update

3.5 Deploy kube-scheduler

vi /opt/kubernetes/server/bin/kube-scheduler.sh
#!/bin/sh
./kube-scheduler \
  --leader-elect \
  --log-dir /data/logs/kubernetes/kube-scheduler \
  --master http://127.0.0.1:8080 \
  --v 2

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh
mkdir -p /data/logs/kubernetes/kube-scheduler

vi /etc/supervisord.d/kube-scheduler.ini
[program:kube-scheduler]
command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update
# supervisorctl status

ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

[root@hdss7-22 bin]# kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-2 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

scheduler Healthy ok

controller-manager Healthy ok

4. Deploy the node components

4.1 Deploy kubelet

Deploy kubelet on 7.21 and 7.22. First generate the kubelet certificate on 7.200:

[root@hdss7-200 certs]# vi kubelet-csr.json
{
    "CN": "k8s-kubelet",
    "hosts": [
        "127.0.0.1",
        "10.4.7.10",
        "10.4.7.21",
        "10.4.7.22",
        "10.4.7.23",
        "10.4.7.24",
        "10.4.7.25",
        "10.4.7.26",
        "10.4.7.27",
        "10.4.7.28"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

Then scp the certificates just generated to 7.21 and 7.22.

On 7.21:

cd /opt/kubernetes/server/bin/conf

# Set the cluster parameters
[root@hdss7-21 conf]# kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kubelet.kubeconfig

# Set the client credentials
kubectl config set-credentials k8s-node \
  --client-certificate=/opt/kubernetes/server/bin/cert/client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/client-key.pem \
  --embed-certs=true \
  --kubeconfig=kubelet.kubeconfig

# Set the context
kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=kubelet.kubeconfig

# Set the default context
kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

This produces the kubelet.kubeconfig file; scp it to 7.22 as well.

Create the yaml file on 7.21

[root@hdss7-21 conf]# vi k8s-node.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node    # must match the name created above
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

kubectl create -f k8s-node.yaml

# Check
kubectl get clusterrolebinding k8s-node
NAME       AGE
k8s-node   38d

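[root@hdss7-200 ~]# docker pull kubernetes/pause
# Push the pause image to the private harbor
docker tag f9d5de079539 harbor.od.com/public/pause:latest
docker push harbor.od.com/public/pause:latest

# The pause image lets the different containers of one pod share Linux namespaces and cgroups. It acts as an intermediate container that exists in every pod, also called the infra container. The lifetime of the whole pod equals the lifetime of its infra container, so without this image no pod can start.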

On 7.21 (on 7.22 change the host name passed to --hostname-override):

vi /opt/kubernetes/server/bin/kubelet.sh
#!/bin/sh
./kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 192.168.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file ./cert/ca.pem \
  --tls-cert-file ./cert/kubelet.pem \
  --tls-private-key-file ./cert/kubelet-key.pem \
  --hostname-override hdss7-21.host.com \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image harbor.od.com/public/pause:latest \
  --root-dir /data/kubelet

chmod +x /opt/kubernetes/server/bin/kubelet.sh
[root@hdss7-21 conf]# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

vi /etc/supervisord.d/kube-kubelet.ini
[program:kube-kubelet]
command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update
supervisorctl status

Then go through the same steps on 7.22 and verify:

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

hdss7-21.host.com Ready master,node 38d v1.15.2 10.4.7.21 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

hdss7-22.host.com Ready <none> 38d v1.15.2 10.4.7.22 <none> CentOS Linux 7 (Core) 3.10.0-957.21.3.el7.x86_64 docker://19.3.8

4.2 Deploy kube-proxy

Deploy kube-proxy on 7.21 and 7.22. First issue the kube-proxy certificate on 7.200:

[root@hdss7-200 certs]# vi kube-proxy-csr.json
{
    "CN": "system:kube-proxy",
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

Then scp the kube-proxy files just generated to 7.21 and 7.22.

Generate the kube-proxy.kubeconfig file

On 7.21:

cd /opt/kubernetes/server/bin/conf

kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

This produces the kube-proxy.kubeconfig file; scp it to 7.22.
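The kubeconfig for kube-proxy is built with the same four kubectl config steps as the one for kubelet; only the user name, the client certificate and the output file differ. The sketch below prints those four commands for a given component. kubeconfig_cmds is a made-up helper name, and the cluster name, VIP address and certificate directory are simply the values used in these notes.

```shell
#!/bin/sh
# kubeconfig_cmds is a hypothetical helper that prints (does not run) the four
# kubectl config commands for one component.
#   $1 = user name, $2 = client certificate base name, $3 = kubeconfig file
kubeconfig_cmds() {
    user=$1
    cert=/opt/kubernetes/server/bin/cert/$2
    kubeconfig=$3
    cat <<EOF
kubectl config set-cluster myk8s --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem --embed-certs=true --server=https://10.4.7.10:7443 --kubeconfig=$kubeconfig
kubectl config set-credentials $user --client-certificate=$cert.pem --client-key=$cert-key.pem --embed-certs=true --kubeconfig=$kubeconfig
kubectl config set-context myk8s-context --cluster=myk8s --user=$user --kubeconfig=$kubeconfig
kubectl config use-context myk8s-context --kubeconfig=$kubeconfig
EOF
}

kubeconfig_cmds kube-proxy kube-proxy-client kube-proxy.kubeconfig
```

Calling it with k8s-node, client and kubelet.kubeconfig prints the commands used for kubelet earlier.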

# Create the kube-proxy startup script (on 7.22 change the host name passed to --hostname-override)

vi /opt/kubernetes/server/bin/kube-proxy.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override hdss7-21.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig

ls -l /opt/kubernetes/server/bin/conf/|grep kube-proxy
chmod +x /opt/kubernetes/server/bin/kube-proxy.sh
mkdir -p /data/logs/kubernetes/kube-proxy

vi /etc/supervisord.d/kube-proxy.ini
[program:kube-proxy]
command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update
[root@hdss7-21 bin]# supervisorctl status

Repeat this configuration on 7.22.

Load the ipvs modules. The k8s cluster uses ipvs for scheduling, which is more efficient than iptables.

lsmod |grep ip_vs
[root@hdss7-21 bin]# vi /root/ipvs.sh
#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done
[root@hdss7-21 bin]# chmod +x /root/ipvs.sh
[root@hdss7-21 bin]# sh /root/ipvs.sh
[root@hdss7-21 bin]# lsmod |grep ip_vs
yum install ipvsadm -y
[root@hdss7-21 bin]# ipvsadm -Ln    # show the ipvs forwarding rules
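The part of ipvs.sh that is easiest to misread is ls ... | grep -o "^[^.]*": it turns each file name in the module directory into a module name by cutting it at the first dot. A small demonstration on a scratch directory rather than the real kernel module directory; module_names is a made-up helper name and the file names are invented for the demo.

```shell
#!/bin/sh
# module_names is a hypothetical helper showing what the
# `ls ... | grep -o "^[^.]*"` step in ipvs.sh produces: each file name in the
# directory cut at its first dot (ip_vs_rr.ko.xz -> ip_vs_rr).
module_names() {
    ls "$1" | grep -o '^[^.]*'
}

# Demo on a scratch directory with made-up file names instead of the real
# kernel module directory.
demo_dir=$(mktemp -d)
touch "$demo_dir/ip_vs_rr.ko.xz" "$demo_dir/ip_vs_nq.ko.xz" "$demo_dir/ip_vs_wrr.ko.xz"
module_names "$demo_dir"
rm -rf "$demo_dir"
```

In ipvs.sh each of these names is then checked with modinfo and loaded with modprobe.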

[root@hdss7-21 bin]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 38d