Securing a Hadoop Cluster with Kerberos

Author: 尹正杰

Copyright notice: this is an original work. Reproduction is not permitted, and violations will be pursued legally.

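In the previous posts we configured Kerberos and got it ready to authenticate Hadoop users. To secure the cluster with Kerberos, the Kerberos information has to be added to the relevant Hadoop configuration files, which is what connects Kerberos to Hadoop.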

I. Mapping service principals

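Hadoop uses the rules listed in the "hadoop.security.auth_to_local" parameter of core-site.xml to map Kerberos principals to local operating system usernames. The default rule (named "DEFAULT") translates a principal name into its first component.

Remember that a principal name consists of either two parts, such as the user principal name (UPN) "jason@YINZHENGJIE.COM", or three parts, such as the service principal name (SPN) "hdfs/hadoop101.yinzhengjie.com@YINZHENGJIE.COM". Hadoop maps each Kerberos principal name to a local username.

If the first component of the service principal name (hdfs in this example) is the same as the operating system username, no additional rules are needed, because the DEFAULT rule is sufficient. Note that principals can also be mapped to usernames in krb5.conf by configuring its "auth_to_local" parameter.

If the service principal name differs from the operating system username, "hadoop.security.auth_to_local" must be configured with rules that translate the principal name into the OS username. As mentioned above, the default value of this parameter is DEFAULT.

A rule describes how to translate a principal name and consists of the following three parts:

Base:
The base states how many components the principal name has (not counting the realm), followed by a colon and the pattern used to build the username from the principal name. In the pattern, "$0" stands for the realm, "$1" for the first component of the principal name, and "$2" for the second component.
The format is "[<number>:<string>]". Applying it to a principal name yields the translated name, also called the initial local name. A few examples:
    With the base [1:$1.$0], the UPN "jason@YINZHENGJIE.COM" has the initial local name "jason.YINZHENGJIE.COM".
    With the base [2:$1@$0], the SPN "hdfs/hadoop101.yinzhengjie.com@YINZHENGJIE.COM" has the initial local name "hdfs@YINZHENGJIE.COM".

Filter:
The filter (or acceptance filter) is a regular expression in parentheses. The rule is applied only if the initial local name matches it. For example, the filter (.*YINZHENGJIE.COM) matches every string that ends in "YINZHENGJIE.COM", such as "jason.YINZHENGJIE.COM" and "hdfs.YINZHENGJIE.COM".

Substitution:
This is a sed-like command that replaces the text matched by a regular expression with a fixed string. The complete form of a rule is "[<number>:<string>](<regular expression>)s/<pattern>/<replacement>/".
Part of the regular expression can be enclosed in parentheses and referenced by number in the replacement string (for example "$1", because Hadoop evaluates the rule with Java regular expressions). The command "s/<pattern>/<replacement>/g" works like the ordinary Linux substitution command, with g requesting a global replacement.
Here are some examples of substitution commands applied to "jason@YINZHENGJIE.COM" (the unescaped "." also matches the "@"):
    "s/(.*).YINZHENGJIE.COM/$1/" yields "jason";
    "s/.YINZHENGJIE.COM//" yields "jason";

Several rules can be supplied. As soon as a principal matches one rule, the remaining rules are skipped. A DEFAULT rule can be placed at the end so that it runs when none of the earlier rules has matched.

Users are mapped to groups through the "hadoop.security.group.mapping" parameter. The default mapping implementation looks up users and groups with local shell commands.
Because this default implementation relies on the Linux interfaces to establish group membership, the groups must be configured on every server that runs an authenticating service, such as the NameNode, ResourceManager and DataNodes. Group information must be consistent across the cluster.
If the groups are defined on an LDAP server (such as Active Directory) rather than on the Hadoop cluster itself, the "LdapGroupsMapping" implementation can be used instead.

Recommended reading:
    https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/core-default.xml
    https://web.mit.edu/kerberos/krb5-latest/doc/admin/conf_files/krb5_conf.html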
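To make the base, filter and substitution steps concrete, here is a minimal Python sketch that emulates how a rule is evaluated. It is a simplified illustration, not Hadoop's implementation: the helper names `apply_rule` and `translate` are hypothetical, filters with nested parentheses are not handled, and the DEFAULT rule is reduced to "take the first component if the realm is the default realm".

```python
import re

RULE_RE = re.compile(
    r"RULE:\[(\d+):([^\]]*)\]"      # base: [<number>:<string>]
    r"(?:\(([^)]*)\))?"             # optional filter: (<regex>)
    r"(?:s/([^/]*)/([^/]*)/(g)?)?"  # optional substitution: s/<pattern>/<replacement>/[g]
)


def apply_rule(rule, principal):
    """Return the short name produced by one rule, or None if it does not apply."""
    parsed = RULE_RE.fullmatch(rule)
    if parsed is None:
        raise ValueError(f"unsupported rule syntax: {rule}")
    count, fmt, flt, pattern, replacement, global_flag = parsed.groups()

    name, _, realm = principal.partition("@")
    components = name.split("/")
    if len(components) != int(count):
        return None

    # $0 is the realm, $1 and $2 are the name components.
    values = [realm] + components
    base = re.sub(r"\$(\d)", lambda m: values[int(m.group(1))], fmt)

    # The filter is tested against the formatted base, not the raw principal.
    if flt is not None and re.fullmatch(flt, base) is None:
        return None
    if pattern is None:
        return base

    # Java writes a group reference in the replacement as $1;
    # Python spells the same thing \g<1>.
    py_replacement = re.sub(r"\$(\d)", r"\\g<\1>", replacement)
    return re.sub(pattern, py_replacement, base, count=0 if global_flag else 1)


def translate(rules, principal, default_realm):
    """Try the rules in order; the first one that applies wins."""
    for rule in rules:
        if rule == "DEFAULT":
            name, _, realm = principal.partition("@")
            if realm == default_realm:
                return name.split("/")[0]
            continue
        short_name = apply_rule(rule, principal)
        if short_name is not None:
            return short_name
    return None


RULES = [
    "RULE:[2:$1/$2@$0](nn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/",
    "RULE:[2:$1/$2@$0](dn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/",
    "DEFAULT",
]
print(translate(RULES, "dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM", "YINZHENGJIE.COM"))
print(translate(RULES, "jason@YINZHENGJIE.COM", "YINZHENGJIE.COM"))
```

Because the filter is matched against the formatted base, a filter that contains "nn/" only matches when the base keeps the slash (for example `[2:$1/$2@$0]`); with `[2:$1@$0]` the base becomes "nn@YINZHENGJIE.COM" and the rule is skipped. On a real cluster, `hadoop kerbname <principal>` prints the mapping Hadoop itself computes, which is the authoritative check.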

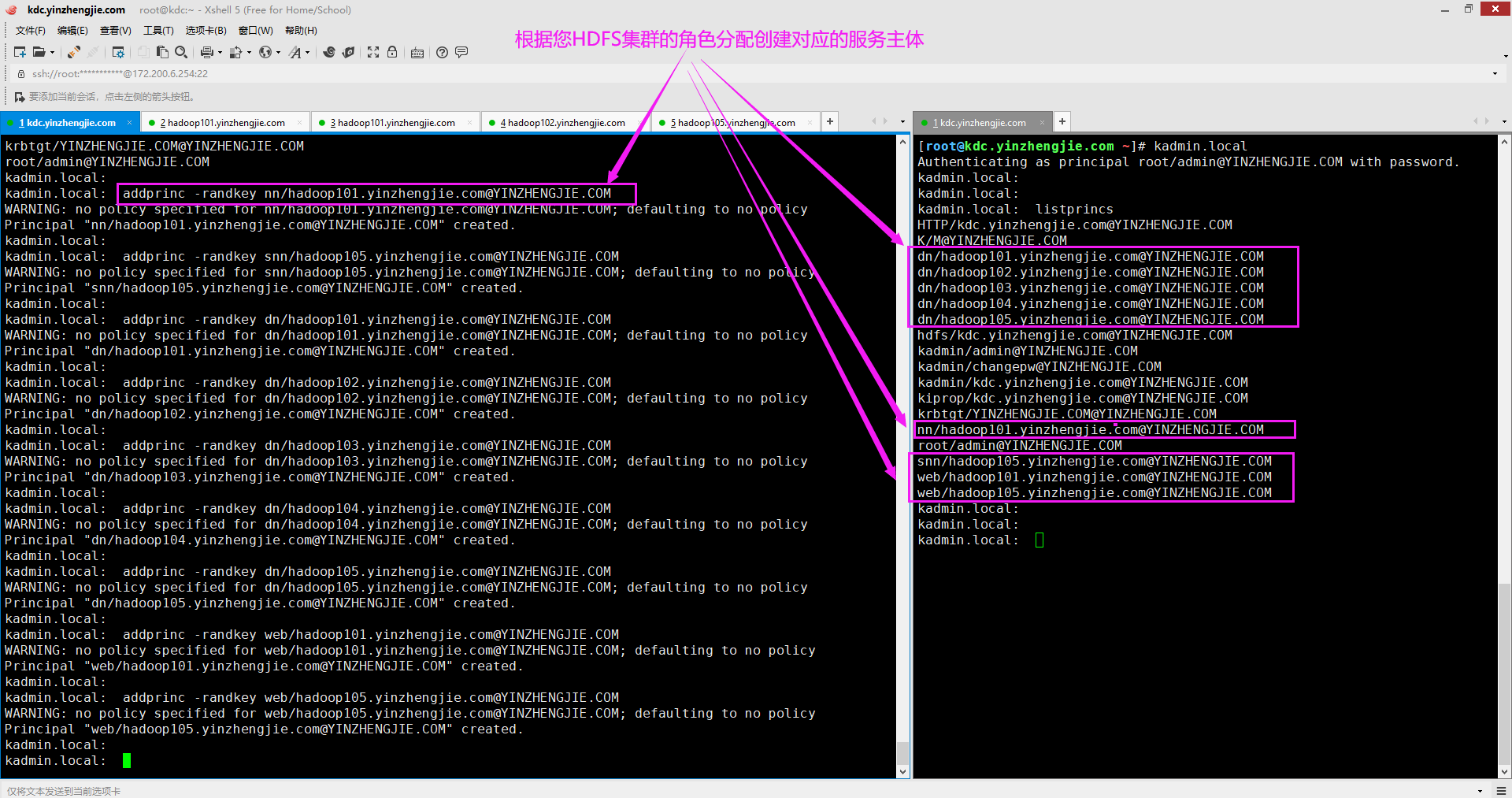

1>. Create the service principals

    [root@kdc.yinzhengjie.com ~]# kadmin.local
    Authenticating as principal root/admin@YINZHENGJIE.COM with password.
    kadmin.local:
    kadmin.local: listprincs
    HTTP/kdc.yinzhengjie.com@YINZHENGJIE.COM
    K/M@YINZHENGJIE.COM
    hdfs/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kadmin/admin@YINZHENGJIE.COM
    kadmin/changepw@YINZHENGJIE.COM
    kadmin/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kiprop/kdc.yinzhengjie.com@YINZHENGJIE.COM
    krbtgt/YINZHENGJIE.COM@YINZHENGJIE.COM
    root/admin@YINZHENGJIE.COM
    kadmin.local:
    kadmin.local: addprinc -randkey nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: addprinc -randkey web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    WARNING: no policy specified for web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM; defaulting to no policy
    Principal "web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM" created.
    kadmin.local:
    kadmin.local: listprincs
    HTTP/kdc.yinzhengjie.com@YINZHENGJIE.COM
    K/M@YINZHENGJIE.COM
    dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    hdfs/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kadmin/admin@YINZHENGJIE.COM
    kadmin/changepw@YINZHENGJIE.COM
    kadmin/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kiprop/kdc.yinzhengjie.com@YINZHENGJIE.COM
    krbtgt/YINZHENGJIE.COM@YINZHENGJIE.COM
    nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    root/admin@YINZHENGJIE.COM
    snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    kadmin.local:
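Typing one addprinc command per daemon and host gets tedious as the cluster grows. As a rough sketch, the commands can be generated from a description of which service runs where. The `layout` dictionary and the helper name `addprinc_commands` are assumptions made for illustration; the generated lines use the same `addprinc -randkey` form as the session above, wrapped in `kadmin.local -q` so they can be run non-interactively on the KDC.

```python
REALM = "YINZHENGJIE.COM"


def addprinc_commands(services, realm=REALM):
    """Build one 'addprinc -randkey' command per (service, host) pair.

    services maps a service name (first principal component) to a list of FQDNs.
    """
    commands = []
    for service, hosts in services.items():
        for host in hosts:
            commands.append(f"addprinc -randkey {service}/{host}@{realm}")
    return commands


# Hypothetical layout mirroring the principals created in this walkthrough.
layout = {
    "nn": ["hadoop101.yinzhengjie.com"],
    "snn": ["hadoop105.yinzhengjie.com"],
    "dn": [f"hadoop10{i}.yinzhengjie.com" for i in range(1, 6)],
    "web": ["hadoop101.yinzhengjie.com", "hadoop105.yinzhengjie.com"],
}

for command in addprinc_commands(layout):
    # Print instead of executing, so the output can be reviewed first.
    print(f'kadmin.local -q "{command}"')
```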

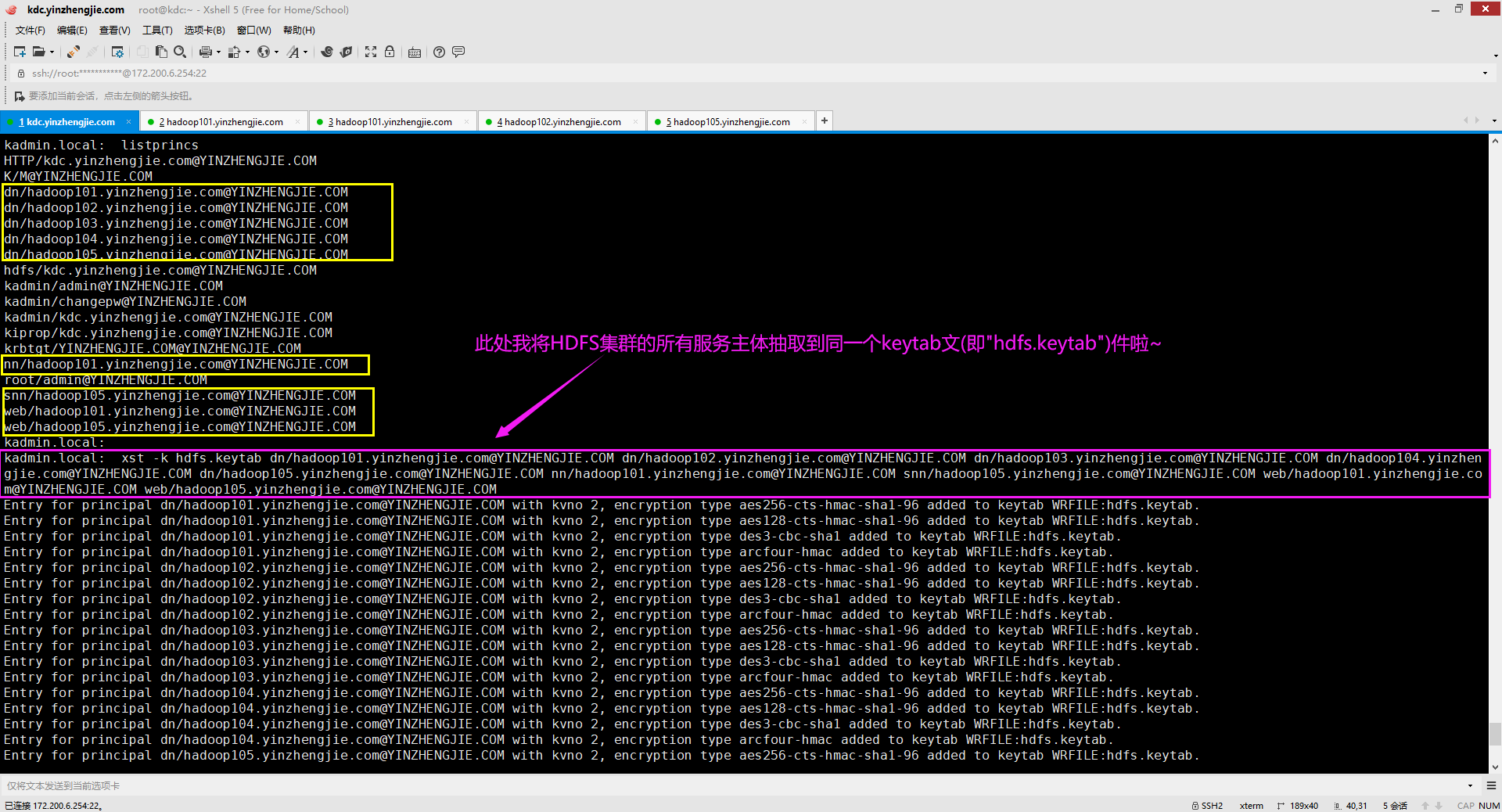

2>. Create the keytab file (I took a shortcut here and packed every service principal into a single keytab; in production, split them into several keytab files)

    [root@kdc.yinzhengjie.com ~]# kadmin.local
    Authenticating as principal root/admin@YINZHENGJIE.COM with password.
    kadmin.local:
    kadmin.local: listprincs
    HTTP/kdc.yinzhengjie.com@YINZHENGJIE.COM
    K/M@YINZHENGJIE.COM
    dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM
    dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    hdfs/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kadmin/admin@YINZHENGJIE.COM
    kadmin/changepw@YINZHENGJIE.COM
    kadmin/kdc.yinzhengjie.com@YINZHENGJIE.COM
    kiprop/kdc.yinzhengjie.com@YINZHENGJIE.COM
    krbtgt/YINZHENGJIE.COM@YINZHENGJIE.COM
    nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    root/admin@YINZHENGJIE.COM
    snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM
    web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    kadmin.local:
    kadmin.local: xst -k hdfs.keytab dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM
    Entry for principal dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type des3-cbc-sha1 added to keytab WRFILE:hdfs.keytab.
    Entry for principal web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM with kvno 2, encryption type arcfour-hmac added to keytab WRFILE:hdfs.keytab.
    kadmin.local:
    kadmin.local: quit
    [root@kdc.yinzhengjie.com ~]#
    [root@kdc.yinzhengjie.com ~]# ll
    total 4
    -rw------- 1 root root 3362 Oct 6 12:49 hdfs.keytab
    [root@kdc.yinzhengjie.com ~]#
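For the production advice of splitting the keytab, one possible approach is a keytab per host, so that each node only holds the keys of its own daemons. The sketch below only generates the `xst` commands; the helper name `xst_per_host` and the `<host>.keytab` file naming are assumptions for illustration. Note that `xst` (ktadd) generates new random keys by default, which is why the kvno in the output above is 2, so each principal should be exported exactly once (or with `-norandkey` in kadmin.local); exporting it a second time would invalidate the earlier keytab.

```python
from collections import defaultdict

PRINCIPALS = [
    "dn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM",
    "dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM",
    "dn/hadoop103.yinzhengjie.com@YINZHENGJIE.COM",
    "dn/hadoop104.yinzhengjie.com@YINZHENGJIE.COM",
    "dn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM",
    "nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM",
    "snn/hadoop105.yinzhengjie.com@YINZHENGJIE.COM",
    "web/hadoop101.yinzhengjie.com@YINZHENGJIE.COM",
    "web/hadoop105.yinzhengjie.com@YINZHENGJIE.COM",
]


def xst_per_host(principals):
    """Group service principals by host and build one xst command per host."""
    by_host = defaultdict(list)
    for principal in principals:
        # service/host@REALM -> host
        host = principal.split("/", 1)[1].split("@", 1)[0]
        by_host[host].append(principal)
    return {
        host: f"xst -k {host}.keytab " + " ".join(names)
        for host, names in sorted(by_host.items())
    }


for host, command in xst_per_host(PRINCIPALS).items():
    print(command)
```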

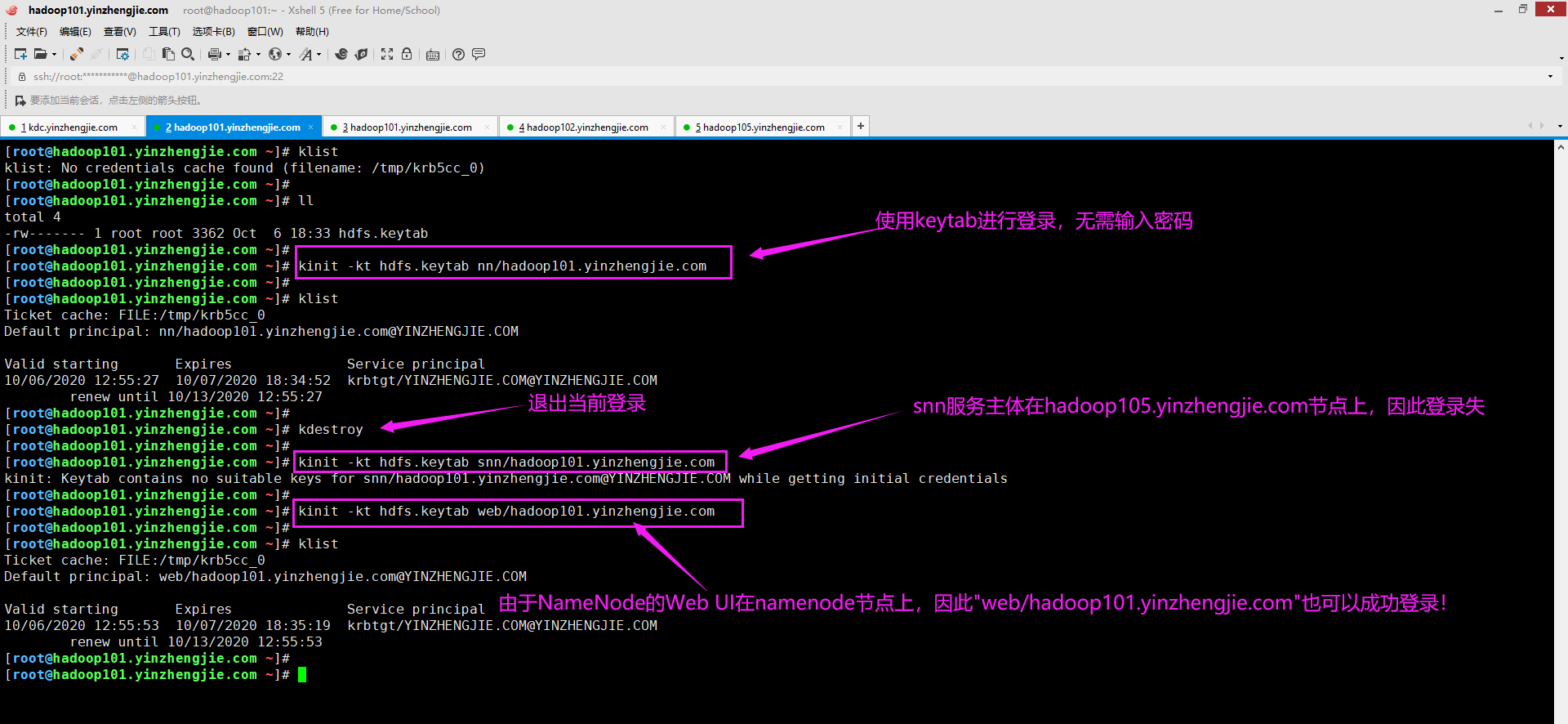

3>. Verify that the keytab file is usable and distribute it to the Hadoop cluster

    [root@kdc.yinzhengjie.com ~]# ll
    total 4
    -rw------- 1 root root 3362 Oct 6 12:49 hdfs.keytab
    [root@kdc.yinzhengjie.com ~]#
    [root@kdc.yinzhengjie.com ~]# scp hdfs.keytab hadoop101.yinzhengjie.com:~
    root@hadoop101.yinzhengjie.com's password:
    hdfs.keytab 100% 3362 2.5MB/s 00:00
    [root@kdc.yinzhengjie.com ~]#
    [root@kdc.yinzhengjie.com ~]#

    [root@hadoop101.yinzhengjie.com ~]# ll
    total 4
    -rw------- 1 root root 3362 Oct 6 18:33 hdfs.keytab
    [root@hadoop101.yinzhengjie.com ~]#
    [root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a 'src=~/hdfs.keytab dest=/yinzhengjie/softwares/hadoop/etc/hadoop/conf'
    hadoop105.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "84e8689784161efa5c1e59c60efbd826be8e482c",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab",
        "gid": 0,
        "group": "root",
        "md5sum": "a84537a38eedd6db31d4359444d05a5a",
        "mode": "0644",
        "owner": "root",
        "size": 3362,
        "src": "/root/.ansible/tmp/ansible-tmp-1601980761.27-8932-29340116574563/source",
        "state": "file",
        "uid": 0
    }
    hadoop101.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "84e8689784161efa5c1e59c60efbd826be8e482c",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab",
        "gid": 0,
        "group": "root",
        "md5sum": "a84537a38eedd6db31d4359444d05a5a",
        "mode": "0644",
        "owner": "root",
        "size": 3362,
        "src": "/root/.ansible/tmp/ansible-tmp-1601980761.28-8930-183396353685288/source",
        "state": "file",
        "uid": 0
    }
    hadoop103.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "84e8689784161efa5c1e59c60efbd826be8e482c",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab",
        "gid": 0,
        "group": "root",
        "md5sum": "a84537a38eedd6db31d4359444d05a5a",
        "mode": "0644",
        "owner": "root",
        "size": 3362,
        "src": "/root/.ansible/tmp/ansible-tmp-1601980761.3-8928-257802276412188/source",
        "state": "file",
        "uid": 0
    }
    hadoop102.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "84e8689784161efa5c1e59c60efbd826be8e482c",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab",
        "gid": 0,
        "group": "root",
        "md5sum": "a84537a38eedd6db31d4359444d05a5a",
        "mode": "0644",
        "owner": "root",
        "size": 3362,
        "src": "/root/.ansible/tmp/ansible-tmp-1601980761.24-8926-157496120508863/source",
        "state": "file",
        "uid": 0
    }
    hadoop104.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "84e8689784161efa5c1e59c60efbd826be8e482c",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab",
        "gid": 0,
        "group": "root",
        "md5sum": "a84537a38eedd6db31d4359444d05a5a",
        "mode": "0644",
        "owner": "root",
        "size": 3362,
        "src": "/root/.ansible/tmp/ansible-tmp-1601980761.3-8929-242677670963812/source",
        "state": "file",
        "uid": 0
    }
    [root@hadoop101.yinzhengjie.com ~]#

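The copy above only distributes the file, so a quick check on each node helps confirm the keytab is actually usable. The sketch below is one way to do that, under stated assumptions: the helper names are hypothetical, and it relies only on `klist -kt <keytab>`, the standard MIT Kerberos command for listing keytab entries. It also checks the file mode, because the Ansible output reports mode 0644, and a keytab is normally kept readable only by the account that runs the daemon.

```python
import os
import shutil
import stat
import subprocess

KEYTAB = "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab"


def keytab_is_private(path):
    """Return True if neither group nor others have any permission on the file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


def list_keytab(path):
    """Return the output of 'klist -kt <path>', or None if klist is not installed."""
    if shutil.which("klist") is None:
        return None
    result = subprocess.run(
        ["klist", "-kt", path], capture_output=True, text=True, check=False
    )
    return result.stdout


# Only touch the real keytab if it exists on this machine.
if os.path.exists(KEYTAB):
    if not keytab_is_private(KEYTAB):
        print(f"warning: {KEYTAB} is readable by group or others")
    print(list_keytab(KEYTAB))
```

If the mode turns out to be too open, the Ansible copy module accepts `mode=` (and `owner=`/`group=`) arguments that can tighten it during distribution.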
II. Adding the Kerberos settings to the Hadoop configuration files

To enable Kerberos authentication in a Hadoop cluster, Kerberos-related information must be added to the following configuration files:

core-site.xml

hdfs-site.xml

yarn-site.xml

These files configure HDFS and YARN to work with Kerberos. This post covers Kerberos authentication for the HDFS cluster only; the procedure for a YARN cluster is similar.

1>. Edit the Hadoop core configuration file (core-site.xml) and distribute it to the cluster nodes

    [root@hadoop101.yinzhengjie.com ~]# vim ${HADOOP_HOME}/etc/hadoop/core-site.xml
    ......
        <!-- The following parameters configure Kerberos -->
        <property>
            <name>hadoop.security.authentication</name>
            <value>kerberos</value>
            <description>Sets the authentication type of the cluster. The default is "simple"; set it to "kerberos" to authenticate with Kerberos.</description>
        </property>

        <property>
            <name>hadoop.security.authorization</name>
            <value>true</value>
            <description>Enables service-level authorization. The default is "false"; it is enabled here so that access to the Hadoop services is checked after authentication.</description>
        </property>

        <property>
            <name>hadoop.security.auth_to_local</name>
            <value>
            RULE:[2:$1/$2@$0](nn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/
            RULE:[2:$1/$2@$0](jn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/
            RULE:[2:$1/$2@$0](dn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/
            RULE:[2:$1/$2@$0](nm/.*@.*YINZHENGJIE.COM)s/.*/yarn/
            RULE:[2:$1/$2@$0](rm/.*@.*YINZHENGJIE.COM)s/.*/yarn/
            RULE:[2:$1/$2@$0](jhs/.*@.*YINZHENGJIE.COM)s/.*/mapred/
            DEFAULT
            </value>
            <description>Specifies the mapping rules used to translate Kerberos principal names into OS usernames. The base keeps the "/" ($1/$2@$0) so that filters such as "nn/.*" can match it.</description>
        </property>

        <property>
            <name>hadoop.rpc.protection</name>
            <value>privacy</value>
            <description>Sets the RPC protection level. There are three options: authentication (the default; client and server only authenticate each other), integrity (authentication plus a guarantee of data integrity), and privacy (authentication, integrity, and encryption of the data exchanged between client and server).</description>
        </property>
    ......
    </configuration>
    [root@hadoop101.yinzhengjie.com ~]#
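Before distributing core-site.xml it can be worth sanity-checking the security settings programmatically. The following is a small sketch using only Python's standard library; the function names and the embedded sample document are illustrative assumptions, and the checks cover just the properties discussed here (authentication type, RPC protection values, and the shape of the auth_to_local entries).

```python
import xml.etree.ElementTree as ET

SAMPLE = """
<configuration>
  <property>
    <name>hadoop.security.authentication</name>
    <value>kerberos</value>
  </property>
  <property>
    <name>hadoop.security.auth_to_local</name>
    <value>
      RULE:[2:$1/$2@$0](nn/.*@.*YINZHENGJIE.COM)s/.*/hdfs/
      DEFAULT
    </value>
  </property>
  <property>
    <name>hadoop.rpc.protection</name>
    <value>privacy</value>
  </property>
</configuration>
"""

RPC_LEVELS = {"authentication", "integrity", "privacy"}


def load_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dictionary."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): (prop.findtext("value") or "").strip()
        for prop in root.iter("property")
    }


def security_problems(props):
    """Return human-readable problems found in the Kerberos-related settings."""
    problems = []
    auth = props.get("hadoop.security.authentication", "simple")
    if auth != "kerberos":
        problems.append(f"hadoop.security.authentication is '{auth}', expected 'kerberos'")
    # hadoop.rpc.protection may hold a comma-separated list of levels.
    levels = props.get("hadoop.rpc.protection", "authentication").split(",")
    for level in levels:
        if level.strip() not in RPC_LEVELS:
            problems.append(f"unknown hadoop.rpc.protection value '{level.strip()}'")
    for entry in props.get("hadoop.security.auth_to_local", "DEFAULT").split():
        if entry != "DEFAULT" and not entry.startswith("RULE:"):
            problems.append(f"unexpected auth_to_local entry '{entry}'")
    return problems


print(security_problems(load_properties(SAMPLE)))
```

To check a real file, pass its contents to `load_properties`, for example `load_properties(open(path).read())` with `path` pointing at the cluster's core-site.xml.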

    [root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=${HADOOP_HOME}/etc/hadoop/core-site.xml dest=${HADOOP_HOME}/etc/hadoop/"
    hadoop105.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "61a71ecb08f9abcc8470b5b19eab1e738282b950",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "gid": 0,
        "group": "root",
        "mode": "0644",
        "owner": "root",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "size": 5765,
        "state": "file",
        "uid": 0
    }
    hadoop101.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "61a71ecb08f9abcc8470b5b19eab1e738282b950",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "gid": 190,
        "group": "systemd-journal",
        "mode": "0644",
        "owner": "12334",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "size": 5765,
        "state": "file",
        "uid": 12334
    }
    hadoop104.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "61a71ecb08f9abcc8470b5b19eab1e738282b950",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "gid": 0,
        "group": "root",
        "mode": "0644",
        "owner": "root",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "size": 5765,
        "state": "file",
        "uid": 0
    }
    hadoop103.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "61a71ecb08f9abcc8470b5b19eab1e738282b950",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "gid": 0,
        "group": "root",
        "mode": "0644",
        "owner": "root",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "size": 5765,
        "state": "file",
        "uid": 0
    }
    hadoop102.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "61a71ecb08f9abcc8470b5b19eab1e738282b950",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "gid": 0,
        "group": "root",
        "mode": "0644",
        "owner": "root",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/core-site.xml",
        "size": 5765,
        "state": "file",
        "uid": 0
    }
    [root@hadoop101.yinzhengjie.com ~]#

2>. Edit the HDFS configuration file (hdfs-site.xml) and distribute it to the cluster nodes

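hdfs-site.xml must specify the keytab file location and the principal name of each daemon. There is no need to configure a large number of DataNodes by hand: Hadoop provides a variable named "_HOST" that is filled in dynamically, so the HDFS daemons do not have to be configured separately on every node of the cluster.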

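When a user or service connects to the cluster, the "_HOST" variable is resolved to the FQDN of the server. Keep in mind that "_HOST" is a Hadoop convention and not every component expands it; ZooKeeper's JAAS configuration, for example, needs the literal hostname, so check the documentation of each component.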
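The "_HOST" substitution can be pictured with a few lines of Python. This is an illustrative sketch rather than Hadoop's code: the function name `expand_host` is hypothetical, and it assumes the behaviour described above, namely that the second component is replaced by the server's fully qualified hostname (lowercased here, which is also what Hadoop does with the hostname it substitutes).

```python
import socket


def expand_host(principal, fqdn=None):
    """Replace the _HOST placeholder in service/_HOST@REALM with a hostname.

    If fqdn is omitted, the local machine's fully qualified name is used.
    Principals without the placeholder are returned unchanged.
    """
    service, _, rest = principal.partition("/")
    host, _, realm = rest.partition("@")
    if host != "_HOST":
        return principal
    if fqdn is None:
        fqdn = socket.getfqdn()
    return f"{service}/{fqdn.lower()}@{realm}"


# The same configured value yields a different principal on every node.
for node in ("hadoop101.yinzhengjie.com", "hadoop102.yinzhengjie.com"):
    print(expand_host("dn/_HOST@YINZHENGJIE.COM", node))
```

This is why every expanded name (for example dn/hadoop102.yinzhengjie.com@YINZHENGJIE.COM) must exist both in the KDC and in the keytab on that node.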

    [root@hadoop101.yinzhengjie.com ~]# vim ${HADOOP_HOME}/etc/hadoop/hdfs-site.xml
    ......
        <!-- The following parameters configure the Kerberos service principals -->
        <property>
            <name>dfs.namenode.kerberos.principal</name>
            <value>nn/_HOST@YINZHENGJIE.COM</value>
            <description>The Kerberos service principal name of the NameNode. It is usually set to nn/_HOST@REALM.TLD. At startup each NameNode replaces _HOST with its own fully qualified hostname. The _HOST placeholder allows the same setting to be used on both NameNodes of an HA setup.</description>
        </property>

        <property>
            <name>dfs.secondary.namenode.kerberos.principal</name>
            <value>snn/_HOST@YINZHENGJIE.COM</value>
            <description>The Kerberos principal name of the Secondary NameNode.</description>
        </property>

        <property>
            <name>dfs.web.authentication.kerberos.principal</name>
            <value>web/_HOST@YINZHENGJIE.COM</value>
            <description>The server principal the NameNode uses for WebHDFS SPNEGO authentication. Required when WebHDFS and security are both enabled.</description>
        </property>

        <property>
            <name>dfs.namenode.kerberos.internal.spnego.principal</name>
            <value>web/_HOST@YINZHENGJIE.COM</value>
            <description>The server principal the NameNode uses for Web UI SPNEGO authentication when Kerberos security is enabled. If unset, it defaults to "${dfs.web.authentication.kerberos.principal}".</description>
        </property>

        <property>
            <name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>
            <value>web/_HOST@YINZHENGJIE.COM</value>
            <description>The server principal the Secondary NameNode uses for Web UI SPNEGO authentication when Kerberos security is enabled. Like all other Secondary NameNode settings, it is ignored in an HA setup. The default is "${dfs.web.authentication.kerberos.principal}".</description>
        </property>

        <property>
            <name>dfs.datanode.kerberos.principal</name>
            <value>dn/_HOST@YINZHENGJIE.COM</value>
            <description>The DataNode service principal. It is usually set to dn/_HOST@REALM.TLD. At startup each DataNode replaces _HOST with its own fully qualified hostname. The _HOST placeholder allows the same setting to be used on all DataNodes.</description>
        </property>

        <property>
            <name>dfs.block.access.token.enable</name>
            <value>true</value>
            <description>If "true", access tokens are used as capabilities for accessing DataNodes. If "false", access tokens are not checked when DataNodes are accessed. The default is "false".</description>
        </property>

        <!-- The following parameters specify the keytab files -->
        <property>
            <name>dfs.web.authentication.kerberos.keytab</name>
            <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab</value>
            <description>Location of the keytab file of the HTTP service principal, that is, the keytab for the principal named in "dfs.web.authentication.kerberos.principal".</description>
        </property>

        <property>
            <name>dfs.namenode.keytab.file</name>
            <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab</value>
            <description>The keytab file each NameNode daemon uses to log in as its service principal. The principal name is configured with "dfs.namenode.kerberos.principal".</description>
        </property>

        <property>
            <name>dfs.datanode.keytab.file</name>
            <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab</value>
            <description>The keytab file each DataNode daemon uses to log in as its service principal. The principal name is configured with "dfs.datanode.kerberos.principal".</description>
        </property>

        <property>
            <name>dfs.secondary.namenode.keytab.file</name>
            <value>/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab</value>
            <description>The keytab file each Secondary NameNode daemon uses to log in as its service principal. The principal name is configured with "dfs.secondary.namenode.kerberos.principal".</description>
        </property>

        <!-- DataNode SASL settings; the DataNode may fail to start if they are missing -->
        <property>
            <name>dfs.data.transfer.protection</name>
            <value>integrity</value>
            <description>A comma-separated list of SASL protection values used for secured connections to the DataNode when reading or writing block data. Possible values are "authentication" (authentication only, no integrity or privacy), "integrity" (authentication and integrity are enabled) and "privacy" (authentication, integrity and privacy are all enabled). If dfs.encrypt.data.transfer is set to true, it supersedes dfs.data.transfer.protection and forces all connections to use a specialized encrypted SASL handshake. This property is ignored for connections to a DataNode listening on a privileged port, in which case the use of a privileged port is assumed to establish sufficient trust.</description>
        </property>

        <property>
            <name>dfs.http.policy</name>
            <value>HTTP_AND_HTTPS</value>
            <description>Decides whether HDFS supports HTTPS (SSL). The default is "HTTP_ONLY" (service is provided over http only). The other values are "HTTPS_ONLY" (service is provided over https only; set this on DataNodes) and "HTTP_AND_HTTPS" (service is provided over both http and https; set this on the NameNode and Secondary NameNode).</description>
        </property>
    ......
    </configuration>
    [root@hadoop101.yinzhengjie.com ~]#
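With this many related properties it is easy to set a principal and forget its keytab. The sketch below is a hypothetical consistency check over a dictionary of hdfs-site.xml properties; the function name `check_hdfs_site` and the `PAIRS` table are assumptions for illustration. It also flags SPNEGO principals that do not start with "HTTP/": the Hadoop secure-mode documentation states that the HTTP principal must use that prefix, so the web/ principals used in this walkthrough may not work with browser-based SPNEGO and are worth testing in your own environment.

```python
# Each principal property and the keytab property that should accompany it.
PAIRS = {
    "dfs.namenode.kerberos.principal": "dfs.namenode.keytab.file",
    "dfs.secondary.namenode.kerberos.principal": "dfs.secondary.namenode.keytab.file",
    "dfs.datanode.kerberos.principal": "dfs.datanode.keytab.file",
    "dfs.web.authentication.kerberos.principal": "dfs.web.authentication.kerberos.keytab",
}

SPNEGO_KEYS = (
    "dfs.web.authentication.kerberos.principal",
    "dfs.namenode.kerberos.internal.spnego.principal",
    "dfs.secondary.namenode.kerberos.internal.spnego.principal",
)


def check_hdfs_site(props):
    """Return warnings about missing keytabs and unusual SPNEGO principals."""
    warnings = []
    for principal_key, keytab_key in PAIRS.items():
        if principal_key in props and keytab_key not in props:
            warnings.append(f"{principal_key} is set but {keytab_key} is missing")
    for key in SPNEGO_KEYS:
        value = props.get(key, "")
        if value and not value.startswith("HTTP/"):
            warnings.append(f"{key} is '{value}', SPNEGO principals normally start with HTTP/")
    return warnings


KEYTAB = "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab"
props = {
    "dfs.namenode.kerberos.principal": "nn/_HOST@YINZHENGJIE.COM",
    "dfs.namenode.keytab.file": KEYTAB,
    "dfs.datanode.kerberos.principal": "dn/_HOST@YINZHENGJIE.COM",
    "dfs.datanode.keytab.file": KEYTAB,
    "dfs.web.authentication.kerberos.principal": "web/_HOST@YINZHENGJIE.COM",
    "dfs.web.authentication.kerberos.keytab": KEYTAB,
}
for warning in check_hdfs_site(props):
    print(warning)
```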

    [root@hadoop101.yinzhengjie.com ~]# ansible all -m copy -a "src=${HADOOP_HOME}/etc/hadoop/hdfs-site.xml dest=${HADOOP_HOME}/etc/hadoop/"
    hadoop102.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "b342d14e02a6897590ce45681db0ca2ac692beb3",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "gid": 0,
        "group": "root",
        "md5sum": "64f6435d1a3370e743be63165a1f8428",
        "mode": "0644",
        "owner": "root",
        "size": 11508,
        "src": "/root/.ansible/tmp/ansible-tmp-1601984867.8-10949-176752949394383/source",
        "state": "file",
        "uid": 0
    }
    hadoop101.yinzhengjie.com | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "checksum": "b342d14e02a6897590ce45681db0ca2ac692beb3",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "gid": 190,
        "group": "systemd-journal",
        "mode": "0644",
        "owner": "12334",
        "path": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "size": 11508,
        "state": "file",
        "uid": 12334
    }
    hadoop103.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "b342d14e02a6897590ce45681db0ca2ac692beb3",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "gid": 0,
        "group": "root",
        "md5sum": "64f6435d1a3370e743be63165a1f8428",
        "mode": "0644",
        "owner": "root",
        "size": 11508,
        "src": "/root/.ansible/tmp/ansible-tmp-1601984867.84-10951-232652152197493/source",
        "state": "file",
        "uid": 0
    }
    hadoop105.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "b342d14e02a6897590ce45681db0ca2ac692beb3",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "gid": 0,
        "group": "root",
        "md5sum": "64f6435d1a3370e743be63165a1f8428",
        "mode": "0644",
        "owner": "root",
        "size": 11508,
        "src": "/root/.ansible/tmp/ansible-tmp-1601984867.86-10955-87808746957801/source",
        "state": "file",
        "uid": 0
    }
    hadoop104.yinzhengjie.com | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "b342d14e02a6897590ce45681db0ca2ac692beb3",
        "dest": "/yinzhengjie/softwares/hadoop/etc/hadoop/hdfs-site.xml",
        "gid": 0,
        "group": "root",
        "md5sum": "64f6435d1a3370e743be63165a1f8428",
        "mode": "0644",
        "owner": "root",
        "size": 11508,
        "src": "/root/.ansible/tmp/ansible-tmp-1601984867.82-10952-228427124500370/source",
        "state": "file",
        "uid": 0
    }
    [root@hadoop101.yinzhengjie.com ~]#

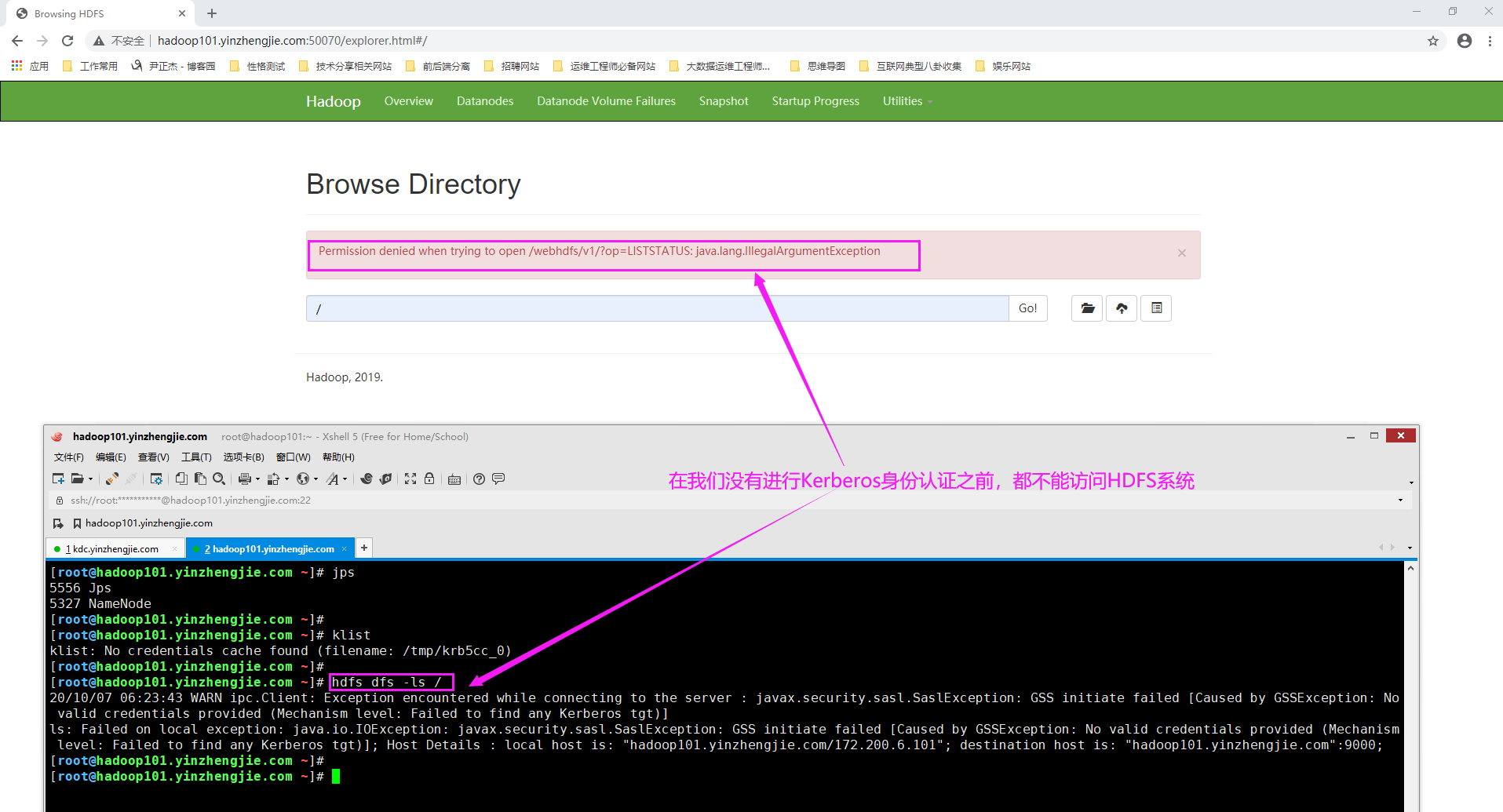

III. Verifying that Kerberos is configured correctly

1>. Once Kerberos is enabled, neither the command line nor the NameNode Web UI can access the HDFS cluster without authenticating

As the figure below shows, an HDFS client that has not authenticated with Kerberos cannot access the HDFS cluster.

2>. After authenticating on the command line, the HDFS cluster is accessible
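The authentication step can be sketched as a short command sequence: obtain a ticket from the keytab with `kinit -kt`, inspect it with `klist`, then list the HDFS root. The Python wrapper below only assembles and optionally runs those commands. The helper names are hypothetical, and the choice of keytab path and of the nn/hadoop101 principal is an assumption based on the files created earlier; any principal contained in the keytab could be used.

```python
import shutil
import subprocess

KEYTAB = "/yinzhengjie/softwares/hadoop/etc/hadoop/conf/hdfs.keytab"
PRINCIPAL = "nn/hadoop101.yinzhengjie.com@YINZHENGJIE.COM"


def access_commands(keytab, principal):
    """Commands to authenticate from a keytab and then list the HDFS root."""
    return [
        ["kinit", "-kt", keytab, principal],  # obtain a ticket-granting ticket
        ["klist"],                            # show the cached ticket
        ["hdfs", "dfs", "-ls", "/"],          # should now succeed
    ]


def run_all(commands):
    """Run each command in order, skipping tools that are not installed."""
    for command in commands:
        if shutil.which(command[0]) is None:
            print("skipping (not installed):", " ".join(command))
            continue
        subprocess.run(command, check=False)


for command in access_commands(KEYTAB, PRINCIPAL):
    print(" ".join(command))
```

Calling `run_all(access_commands(KEYTAB, PRINCIPAL))` on a cluster node executes the sequence; running `kdestroy` afterwards discards the ticket, which should bring back the access failure described in step 1.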

3>.