Exercise overview

Requirements:

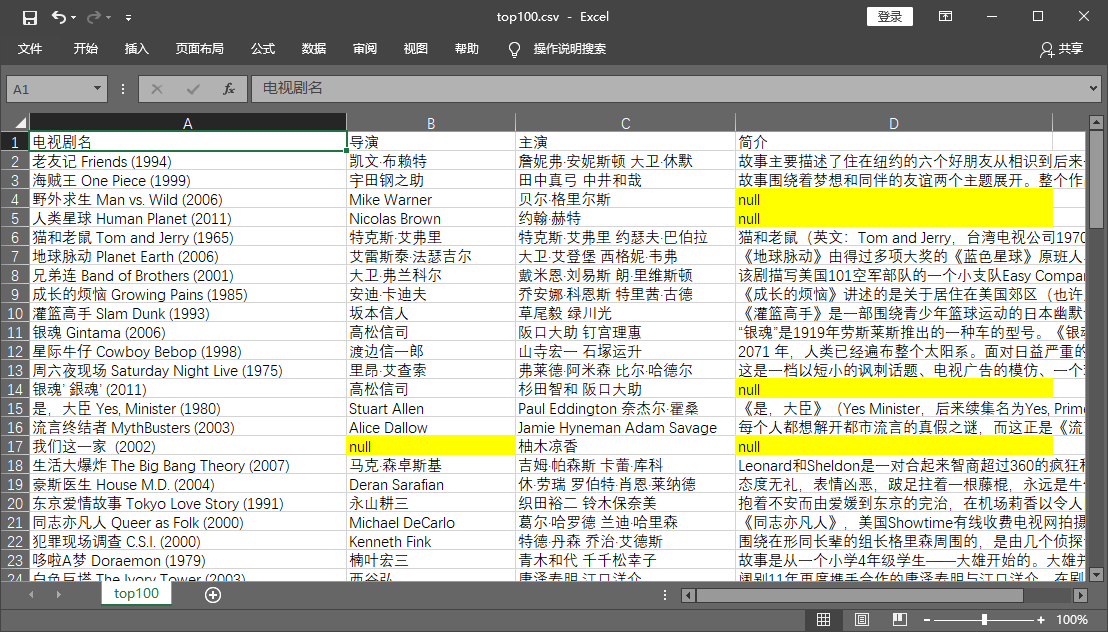

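Use multiple coroutines and a queue to crawl the Mtime TV TOP100 list (title, director, lead actors, and synopsis for each show), and save the data with the csv module.

Mtime TOP100 link: http://www.mtime.com/top/tv/top100/

Goals:

1. Practice using gevent

2. Practice using queue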
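Both goals can be tried out without touching the network. The following is a minimal sketch, not part of the exercise itself: `run_workers` and `handler` are hypothetical names, and `gevent.sleep(0)` stands in for the HTTP request a real crawler would make. It catches `Empty` instead of checking `work.empty()` first, which is one way to avoid a race between the check and the `get_nowait()` call.

```python
import gevent
from gevent.queue import Queue, Empty


def run_workers(jobs, handler, n_workers=3):
    """Drain `jobs` with `n_workers` greenlets and collect the handler results."""
    work = Queue()
    for job in jobs:
        work.put_nowait(job)
    results = []

    def worker():
        while True:
            try:
                job = work.get_nowait()
            except Empty:
                return  # queue drained, this greenlet is done
            gevent.sleep(0)  # stand-in for a network call: yields to other greenlets
            results.append(handler(job))

    greenlets = [gevent.spawn(worker) for _ in range(n_workers)]
    gevent.joinall(greenlets)  # block until every worker has returned
    return results


if __name__ == "__main__":
    # Completion order is not guaranteed, so sort before printing.
    print(sorted(run_workers(range(5), lambda n: n * n)))
```

In the crawler, the jobs would be page URLs and the handler would download and parse one page.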

```python
from gevent import monkey
monkey.patch_all()  # patch blocking I/O before requests is imported

import csv

import gevent
import requests
from bs4 import BeautifulSoup
from gevent.queue import Queue

# Page 1 is the bare URL; pages 2-10 follow the index-N.html pattern.
url_list = ['http://www.mtime.com/top/tv/top100/']
for i in range(2, 11):
    url_list.append('http://www.mtime.com/top/tv/top100/index-{}.html'.format(i))

work = Queue()
for url in url_list:
    work.put_nowait(url)


def pachong():
    # Each greenlet keeps pulling URLs until the queue is empty.
    while not work.empty():
        url = work.get_nowait()
        res = requests.get(url)
        items = BeautifulSoup(res.text, 'html.parser').find_all('div', class_='mov_con')
        for item in items:
            title = item.find('h2').text.strip()
            # Default to 'null' when a field is missing from the page.
            director = 'null'
            actor = 'null'
            remarks = 'null'
            for tag_p in item.find_all('p'):
                if tag_p.text[:2] == '导演':
                    director = tag_p.text[3:].strip()
                elif tag_p.text[:2] == '主演':
                    actor = tag_p.text[3:].strip().replace(' ', '')
                elif tag_p.get('class'):
                    # .get() avoids a KeyError on <p> tags without a class attribute.
                    remarks = tag_p.text.strip()
            with open('top100.csv', 'a', newline='', encoding='utf-8-sig') as csv_file:
                writer = csv.writer(csv_file)
                writer.writerow([title, director, actor, remarks])


# Write the header row before any greenlet starts appending data rows.
with open('top100.csv', 'w', newline='', encoding='utf-8-sig') as csv_file:
    writer = csv.writer(csv_file)
    writer.writerow(['电视剧名', '导演', '主演', '简介'])

task_list = []
for x in range(3):
    task = gevent.spawn(pachong)
    task_list.append(task)

gevent.joinall(task_list)
```
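The solution above picks out the director and cast by slicing each paragraph at fixed positions (`text[:2]` and `text[3:]`), which assumes the label is always followed by exactly one separator character. The sketch below shows one way to make that step less dependent on the exact layout. `parse_fields` and `FIELD_RE` are hypothetical names, the sample strings in the usage line are made up, and accepting either a full-width or an ASCII colon is an assumption about the page rather than something the exercise states.

```python
import re

# Matches "导演" (director) or "主演" (cast), a colon of either width, then the value.
FIELD_RE = re.compile(r'^(导演|主演)\s*[:：]\s*(.*)$', re.S)


def parse_fields(paragraphs):
    """Turn the text of each <p> tag into a dict of director, cast, and synopsis."""
    fields = {'导演': 'null', '主演': 'null', '简介': 'null'}
    for text in paragraphs:
        text = text.strip()
        if not text:
            continue
        match = FIELD_RE.match(text)
        if match:
            label, value = match.groups()
            # Collapse runs of whitespace (including non-breaking spaces).
            fields[label] = ' '.join(value.split())
        else:
            # Anything without a known label is treated as the synopsis.
            fields['简介'] = text
    return fields


if __name__ == "__main__":
    print(parse_fields(['导演：A', '主演: B   C', 'A short synopsis.']))
```

With BeautifulSoup, the input would be something like `[p.text for p in item.find_all('p')]`.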

The teacher's code

```python
from gevent import monkey
monkey.patch_all()  # patch blocking I/O before requests is imported

import csv

import bs4
import gevent
import requests
from gevent.queue import Queue

work = Queue()

# Page 1 is the bare URL.
url_1 = 'http://www.mtime.com/top/tv/top100/'
work.put_nowait(url_1)

# Pages 2-10 use the index-N.html pattern (same range as the first solution),
# so the loop starts at 2 to avoid queueing page 1 a second time.
url_2 = 'http://www.mtime.com/top/tv/top100/index-{page}.html'
for x in range(2, 11):
    real_url = url_2.format(page=x)
    work.put_nowait(real_url)


def crawler():
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36'}
    while not work.empty():
        url = work.get_nowait()
        res = requests.get(url, headers=headers)
        bs_res = bs4.BeautifulSoup(res.text, 'html.parser')
        datas = bs_res.find_all('div', class_="mov_con")
        for data in datas:
            TV_title = data.find('a').text
            # Join the text of every <p> tag into a single details field.
            paragraphs = data.find_all('p')
            TV_data = ''.join(p.text for p in paragraphs)
            writer.writerow([TV_title, TV_data])
            print([TV_title, TV_data])


csv_file = open('timetop.csv', 'w', newline='', encoding='utf-8-sig')
writer = csv.writer(csv_file)

task_list = []
for x in range(3):
    task = gevent.spawn(crawler)
    task_list.append(task)
gevent.joinall(task_list)

csv_file.close()  # flush and release the file once all greenlets have finished
```
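The teacher's version shares one `csv.writer` across all greenlets and writes no header row. An alternative design, shown here only as a sketch, is to have the crawlers collect rows in a list and write everything in one place after `gevent.joinall` returns. `write_rows` is a hypothetical helper, and the header and row in the usage lines are made-up sample data.

```python
import csv
import io


def write_rows(stream, header, rows):
    """Write a header row followed by all data rows to an open text stream."""
    writer = csv.writer(stream)
    writer.writerow(header)
    writer.writerows(rows)


if __name__ == "__main__":
    # An in-memory buffer keeps the demo free of side effects.
    buffer = io.StringIO(newline='')
    write_rows(buffer, ['title', 'details'], [['Show A', 'cast, crew']])
    print(buffer.getvalue())
```

For a real file, the same call would sit inside `with open('timetop.csv', 'w', newline='', encoding='utf-8-sig') as csv_file:`, which also closes the file automatically.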