This article describes how to use fluentd to collect logs in a Kubernetes (k8s) cluster.

Kubernetes log collection options

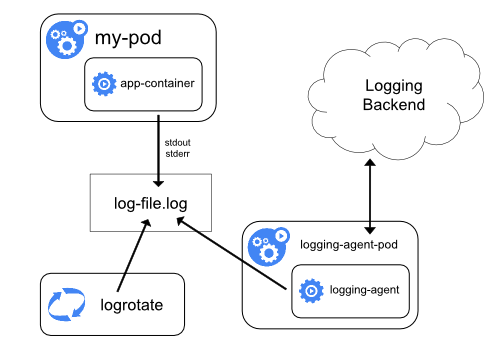

- Use a node-level logging agent that runs on every node.

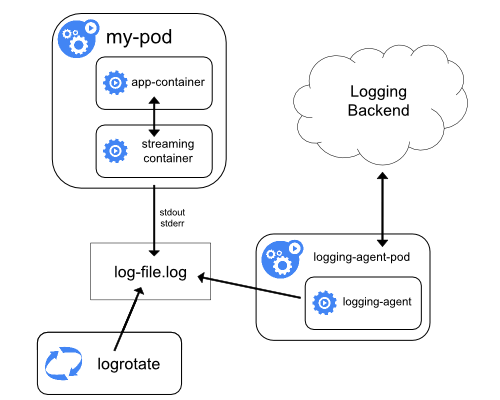

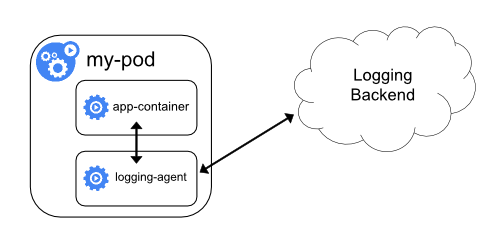

- Include a dedicated sidecar container for logging in an application pod.

  - Streaming sidecar container

  - Sidecar container with a logging agent

- Push logs directly to a backend from within an application.
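
To make the streaming sidecar option concrete, here is a minimal local simulation, assuming the application writes to a file on a volume shared with the sidecar. The temporary files and log messages are hypothetical stand-ins; in a real pod the sidecar would simply run `tail -n +1 -F` on the shared file so that its stdout can be read by `kubectl logs` and by a node-level agent.

```shell
#!/bin/bash
# Local simulation of a streaming sidecar: the "application" appends to a log
# file and the "sidecar" follows that file and re-emits it on its own stdout.
log_file="$(mktemp)"   # stands in for a file on a volume shared by both containers
out_file="$(mktemp)"   # stands in for the sidecar container's stdout

# Sidecar: follow the file from its first line and stream every new line.
tail -n +1 -F "$log_file" > "$out_file" 2>/dev/null &
sidecar_pid=$!

# Application: write log lines to the shared file instead of stdout.
echo "app: request handled" >> "$log_file"
echo "app: request failed" >> "$log_file"

# Give the sidecar a moment to pick up the lines, then stop it.
sleep 2
kill "$sidecar_pid"
wait "$sidecar_pid" 2>/dev/null || true

streamed="$(cat "$out_file")"
echo "$streamed"
rm -f "$log_file" "$out_file"
```

The trade-off is that every log line is written twice (once to the file, once to the sidecar's stdout), which is part of why this option sits in the middle on resource consumption.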


| | node-level logging agent | Streaming sidecar container | Sidecar container with a logging agent |
|---|---|---|---|
| kubectl logs | Supported | Supported | Not supported |
| Flexibility | Low | Medium | High |
| Invasiveness | Low | Medium | High |
| Resource consumption | Low | Medium | High |

node-level logging agent: fluentd

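A Kubernetes DaemonSet makes it easy to run a fluentd agent on every node. The Fluentd project provides a sample repository: fluentd-kubernetes-daemonset

Use the following manifest as a reference when creating the DaemonSet that deploys fluentd: https://github.com/fluent/fluentd-kubernetes-daemonset/blob/master/fluentd-daemonset-elasticsearch-rbac.yaml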

    #@ load("@ytt:data", "data")
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd-es
      namespace: logging
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd-es
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups:
      - ""
      resources:
      - "namespaces"
      - "pods"
      verbs:
      - "get"
      - "watch"
      - "list"
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd-es
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    subjects:
    - kind: ServiceAccount
      name: fluentd-es
      namespace: logging
      apiGroup: ""
    roleRef:
      kind: ClusterRole
      name: fluentd-es
      apiGroup: ""
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-es
      namespace: logging
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          k8s-app: fluentd-es
      template:
        metadata:
          labels:
            k8s-app: fluentd-es
            kubernetes.io/cluster-service: "true"
          #! This annotation ensures that fluentd does not get evicted if the node
          #! supports the critical-pod annotation based priority scheme.
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          serviceAccountName: fluentd-es
          containers:
          - name: fluentd-es
            image: #@ data.values.image
            env:
            - name: FLUENTD_ARGS
              value: --no-supervisor -q
            resources:
              limits:
                memory: 240Mi
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config-volume
              mountPath: /fluentd/etc/
          nodeSelector:
            node-role.kubernetes.io/worker: "true"
          tolerations:
          - operator: Exists
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
          - name: config-volume
            configMap:
              name: fluentd-config
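
The manifests above assume two things about the cluster: the `logging` namespace exists, and the target nodes carry the label `node-role.kubernetes.io/worker` with the value `"true"` (the `nodeSelector` matches nothing else). The sketch below lists the commands that would prepare and check this. It only prints them by default; the `run` helper, the `DRY_RUN` switch, and the node name `worker-1` are illustrative assumptions, not part of the original setup.

```shell
#!/bin/bash
# Sketch: prepare the cluster for the fluentd manifests and check the rollout.
# Prints the commands by default; run with DRY_RUN=0 to execute them against
# the current kubectl context.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

node="${NODE:-worker-1}"   # hypothetical node name

# The ServiceAccount and DaemonSet live in the "logging" namespace.
run kubectl create namespace logging

# The nodeSelector only matches nodes whose worker label has the value "true".
run kubectl label node "$node" node-role.kubernetes.io/worker=true --overwrite

# After the DaemonSet has been applied, wait for it and list one pod per node.
run kubectl -n logging rollout status daemonset/fluentd-es
run kubectl -n logging get pods -l k8s-app=fluentd-es -o wide
```

If the pod list stays empty after the rollout, a node label mismatch is a likely first thing to check.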

Create a ConfigMap and mount it into fluentd as its configuration files:

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: fluentd-config
      namespace: logging
    data:
      system.conf: |-
        <system>
          root_dir /tmp/fluentd-buffers/
        </system>
      kubernetes.conf: |-
      fluent.conf: |-
        <source>
          @id fluentd-containers.log
          # Built-in input plugin that keeps reading new lines appended to the source files
          @type tail
          # Docker container log files on the node, mounted into the pod
          path /var/log/containers/*.log
          pos_file /var/log/es-containers.log.pos
          # Tag assigned to the log events
          tag kubernetes.*
          read_from_head true
          # Parse each log line into a JSON record
          <parse>
            # Use the multi-format-parser plugin
            @type multi_format
            <pattern>
              # JSON parser
              format json
              # Field that holds the event time
              time_key time
              # Time format
              time_format %Y-%m-%dT%H:%M:%S.%NZ
            </pattern>
          </parse>
        </source>

        # Add Kubernetes metadata
        <filter kubernetes.**>
          @id filter_kubernetes_metadata
          @type kubernetes_metadata
        </filter>

        # Keep only logs from Pods that carry the label logging=1
        <filter kubernetes.**>
          @id filter_log
          @type grep
          <regexp>
            key $.kubernetes.labels.logging
            pattern ^1$
          </regexp>
        </filter>

        # Remove some redundant attributes
        <filter kubernetes.**>
          @type record_transformer
          remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
        </filter>

        <match kubernetes.**>
          @id elasticsearch
          @type elasticsearch
          @log_level debug
          include_tag_key true
          hosts [hosts]
          user [user]
          password [password]
          logstash_format true
          # Index prefix, for example k8s
          logstash_prefix [prefix]
          type_name doc
          request_timeout 30s
          <buffer>
            @type file
            path /var/log/fluentd-buffers/kubernetes.system.buffer
            flush_mode interval
            retry_type exponential_backoff
            flush_thread_count 2
            flush_interval 5s
            retry_forever
            retry_max_interval 30
            chunk_limit_size 2M
            queue_limit_length 8
            overflow_action block
          </buffer>
        </match>
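
Two details of this configuration are easy to misread, so here is a small sketch of both. The first part shows which values of the `logging` label pass the `^1$` pattern (Pods without the label, or with any other value, are dropped). The second part works through the buffer settings, assuming fluentd's default `retry_wait` of 1s and backoff base of 2, ignoring the random jitter fluentd adds by default, and assuming `queue_limit_length` caps the queue at that many chunks. The function name and sample values are illustrative only.

```shell
#!/bin/bash
# Part 1: which "logging" label values pass the grep filter (pattern ^1$).
passes_filter() {
  printf '%s\n' "$1" | grep -Eq '^1$'
}

for value in "1" "11" "true" ""; do
  if passes_filter "$value"; then
    echo "logging='$value' -> kept"
  else
    echo "logging='$value' -> dropped"
  fi
done

# Part 2: retry waits under exponential backoff, capped by retry_max_interval.
retry_wait=1            # assumed fluentd default (seconds)
backoff_base=2          # assumed fluentd default
retry_max_interval=30   # from the <buffer> section

wait_s=$retry_wait
intervals=""
for attempt in 1 2 3 4 5 6 7; do
  intervals="$intervals $wait_s"
  wait_s=$((wait_s * backoff_base))
  if [ "$wait_s" -gt "$retry_max_interval" ]; then
    wait_s=$retry_max_interval
  fi
done
echo "retry waits (s):$intervals"

# Upper bound on queued data per node: chunk_limit_size * queue_limit_length.
chunk_limit_mib=2
queue_limit_length=8
max_queued_mib=$((chunk_limit_mib * queue_limit_length))
echo "max queued data: ${max_queued_mib} MiB"
```

To opt a workload in, add `logging: "1"` to the Pod template labels (the quotes matter, because label values are strings). Because `overflow_action` is `block` and `retry_forever` is set, a long Elasticsearch outage would stall log reading once the queue is full rather than discard data.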

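When the ConfigMap is updated later, the files mounted into the container are updated automatically; see mounted-configmaps-are-updated-automatically

Build a custom image that installs the required plugins: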
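
The mounted files change on their own, but a running fluentd process generally reads its configuration at startup, so it is safest to assume a restart is needed before new settings take effect. The sketch below prints the commands for that; the `run` helper, the `DRY_RUN` switch, and the ConfigMap file name are illustrative assumptions.

```shell
#!/bin/bash
# Sketch: roll out a changed fluentd configuration.
# Prints the commands by default; run with DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

configmap_file="${CONFIGMAP_FILE:-k8s/fluentd-configmap-stage.yaml}"   # hypothetical path

# Apply the edited ConfigMap; the kubelet then syncs the mounted files.
run kubectl apply -f "$configmap_file"

# Restart the DaemonSet pods so every fluentd process starts with the new files.
run kubectl -n logging rollout restart daemonset/fluentd-es
run kubectl -n logging rollout status daemonset/fluentd-es
```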

    FROM fluent/fluentd-kubernetes-daemonset:v1.10.4-debian-elasticsearch7-1.0

    # Plugin that adds Kubernetes metadata to each log record
    RUN gem install fluent-plugin-kubernetes_metadata_filter

.dockerignore:

    Dockerfile
    .dockerignore
    README.md
    *.yaml
    *.sh
    *.VERSION*
    k8s/

Deployment scripts

Create the deployment script deploy.sh:

    #!/bin/bash
    set -eo pipefail

    # Target environment: "stage" (default) or "production"
    env="${1:-stage}"

    # Image registry for each environment
    registryStage=""
    registryProduction=""
    versionFile=".VERSION"

    if [ "$env" = "production" ]; then
      registry="$registryProduction"
    else
      registry="$registryStage"
    fi

    # generate version
    bash version.sh "$versionFile"
    version=$(cat "$versionFile")
    img="$registry/fluentd:$version"

    echo "deploy: $env, version: $version, img: $img"

    docker build . -t "$img"
    docker push "$img"

    echo "deploy configmap"
    kubectl apply -f "k8s/fluentd-configmap-$env.yaml"

    echo "deploy daemon set"
    ytt -f k8s/fluentd-daemonset.yaml -f k8s/values.yaml --data-value image="$img" | kubectl apply -f-
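
The script passes `k8s/values.yaml` to ytt, but that file is not shown here. As a minimal sketch of what it might contain, assuming it only needs to declare the `image` key that `--data-value image=...` overrides, the following creates such a file. The `VALUES_DIR` variable and the empty default value are assumptions.

```shell
#!/bin/bash
# Sketch: create a minimal ytt data values file that declares the "image" key.
# The directory defaults to k8s/, matching the path used by deploy.sh.
values_dir="${VALUES_DIR:-k8s}"
mkdir -p "$values_dir"

cat > "$values_dir/values.yaml" <<'EOF'
#@data/values
---
#! Placeholder; deploy.sh overrides it via --data-value image=...
image: ""
EOF

cat "$values_dir/values.yaml"
```

With the file in place, `bash deploy.sh` deploys to stage and `bash deploy.sh production` deploys to production.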

Version management script version.sh:

    #!/bin/bash
    set -e

    versionFile="$1"

    # if versionFile not given, set default file name
    if [ -z "$versionFile" ]; then
      versionFile=".VERSION"
    fi

    # if file not exists, set default version
    if [ ! -f "$versionFile" ]; then
      echo "0.0.0" > "$versionFile"
    fi

    echo "bump version"

    # using treeder/bump to bump version
    docker run --rm -v "$PWD":/app treeder/bump --filename "$versionFile"
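
To illustrate what the bump step does to the version file, here is a pure-bash sketch of a patch-level bump, assuming the tool increments the last component when no other option is given. `bump_patch` is a hypothetical helper for illustration, not the implementation used by treeder/bump.

```shell
#!/bin/bash
# Sketch: increment the patch component of a MAJOR.MINOR.PATCH version string.
bump_patch() {
  local major minor patch
  IFS=. read -r major minor patch <<< "$1"
  echo "$major.$minor.$((patch + 1))"
}

bump_patch "0.0.0"    # first deployment after .VERSION is created
bump_patch "1.2.9"    # components are numbers, so 9 becomes 10
```

Each run of deploy.sh therefore produces a new image tag, which also forces the DaemonSet to roll out new pods.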