Error:

Exception in thread "main" java.lang.RuntimeException: java.net.SocketException: Call From bigdata/192.168.0.108 to bigdata:9000 failed on socket exception: java.net.SocketException: Network is unreachable; For more details see: http://wiki.apache.org/hadoop/SocketException
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:610)
    at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:553)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:750)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:244)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:158)
Caused by: java.net.SocketException: Call From bigdata/192.168.0.108 to bigdata:9000 failed on socket exception: java.net.SocketException: Network is unreachable; For more details see: http://wiki.apache.org/hadoop/SocketException
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
    at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:824)
    at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:797)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1544)
    at org.apache.hadoop.ipc.Client.call(Client.java:1486)
    at org.apache.hadoop.ipc.Client.call(Client.java:1385)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)
    at com.sun.proxy.$Proxy29.getFileInfo(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:800)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy30.getFileInfo(Unknown Source)
    at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1652)
    at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1523)
    at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1520)
    at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
    at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1520)
    at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1627)
    at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:708)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:654)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:586)
    ... 9 more
Caused by: java.net.SocketException: Network is unreachable
    at sun.nio.ch.Net.connect0(Native Method)
    at sun.nio.ch.Net.connect(Net.java:454)
    at sun.nio.ch.Net.connect(Net.java:446)
    at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:648)
    at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:192)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)
    at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:701)
    at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:805)
    at org.apache.hadoop.ipc.Client$Connection.access$3700(Client.java:423)
    at org.apache.hadoop.ipc.Client.getConnection(Client.java:1601)
    at org.apache.hadoop.ipc.Client.call(Client.java:1432)
    ... 33 more

Solution: restart NetworkManager, then ping an external host to confirm that the network is reachable again.

[root@bigdata tmp]# systemctl restart NetworkManager

[root@bigdata tmp]# ping www.baidu.com

PING www.a.shifen.com (180.101.49.12) 56(84) bytes of data.

64 bytes from 180.101.49.12 (180.101.49.12): icmp_seq=1 ttl=52 time=11.1 ms
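The stack trace shows Hive failing to reach the NameNode at bigdata:9000, so it may be worth checking that address directly before starting hive again. The following is a minimal sketch, not part of the original fix: `port_open` is a hypothetical helper name, and it assumes `bash` and the coreutils `timeout` command are installed.

```shell
# Hypothetical helper: return 0 if a TCP connection to host:port
# succeeds within 3 seconds, non-zero otherwise.
# Assumes bash (for /dev/tcp) and coreutils `timeout` are available.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example (host and port taken from the stack trace above):
#   if port_open bigdata 9000; then
#       echo "NameNode reachable"
#   else
#       echo "NameNode NOT reachable; check the network and HDFS first"
#   fi
```

A successful ping to an external site only shows that the default route works; the port check targets the exact host and port that Hive needs.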

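Other notes:

Stopping and starting Hive:

1. Stopping

Use ps -ef|grep hive to find the process IDs (PIDs) of the running Hive processes, then kill those processes, replacing xxx below with each PID.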

kill -9 xxx
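As a gentler alternative to going straight to `kill -9`, the sketch below sends the default SIGTERM first and only falls back to SIGKILL if the process is still alive after a few seconds. `stop_pid` is a hypothetical helper name, and the `pgrep -f` patterns in the usage comment are assumptions about how the Hive services appear in the process list on your machine.

```shell
# Hypothetical helper: stop a process by PID, trying SIGTERM before SIGKILL.
stop_pid() {
    pid="$1"
    # Send SIGTERM; if that fails the process is already gone.
    kill "$pid" 2>/dev/null || return 0
    # Wait up to 5 seconds for the process to exit.
    i=0
    while [ "$i" -lt 5 ] && kill -0 "$pid" 2>/dev/null; do
        sleep 1
        i=$((i + 1))
    done
    # Still alive: force it, as the original kill -9 does.
    if kill -0 "$pid" 2>/dev/null; then
        kill -9 "$pid" 2>/dev/null || true
    fi
    return 0
}

# Example (patterns are assumptions; check them against ps -ef|grep hive):
#   for pid in $(pgrep -f HiveMetaStore); do stop_pid "$pid"; done
#   for pid in $(pgrep -f HiveServer2);   do stop_pid "$pid"; done
```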

2. Starting

nohup hive --service metastore 2>&1 &

This starts the metastore.

nohup hive --service hiveserver2 2>&1 &

This starts hiveserver2.

Check the logs to confirm that both services started correctly.

Note: if hiveserver2 is not running, JDBC clients cannot connect.
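When run from a terminal, the `nohup ... 2>&1 &` form above leaves the output of both services in a shared nohup.out in the current directory. The sketch below gives each service its own log file, which makes the "check the logs" step easier. `start_bg` and `LOG_DIR` are hypothetical names introduced for illustration, and the log directory is an assumption.

```shell
# Hypothetical log location; override by setting LOG_DIR before calling.
LOG_DIR="${LOG_DIR:-/tmp/hive-logs}"

# Hypothetical helper: start a command in the background with nohup,
# writing stdout and stderr to $LOG_DIR/<name>.log and the PID to
# $LOG_DIR/<name>.pid.
start_bg() {
    name="$1"
    shift
    mkdir -p "$LOG_DIR"
    nohup "$@" > "$LOG_DIR/$name.log" 2>&1 &
    echo "$!" > "$LOG_DIR/$name.pid"
}

# Example:
#   start_bg metastore   hive --service metastore
#   start_bg hiveserver2 hive --service hiveserver2
#   tail -f "$LOG_DIR/hiveserver2.log"
```

Keeping the PID in a file also means a later shutdown does not need to search the process list.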

ref:https://www.jianshu.com/p/7b1b21bf05c2

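On CentOS, use the ss command instead of netstat to find the process listening on a port (9083 is the default metastore port, 10000 the default hiveserver2 port):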

[hadoop ~]# ss -lnp|grep 9083

[hadoop ~]# ss -lnp|grep 10000
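Note that `grep 9083` matches any line containing those digits, including a PID. As a rough sketch, the port can instead be given to ss as a filter and the PID pulled out of the `users:(...)` column. `pids_from_ss` is a hypothetical helper, and it assumes the `pid=NNN` output format used by recent iproute2 versions; older releases print that column differently.

```shell
# Hypothetical helper: read `ss -p` output on stdin and print the
# unique PIDs it mentions. Assumes the users:(("java",pid=1234,fd=5))
# format of recent iproute2 versions.
pids_from_ss() {
    grep -o 'pid=[0-9]*' | cut -d= -f2 | sort -u
}

# Example (run as root so that ss can show process information):
#   ss -lntp 'sport = :9083'  | pids_from_ss   # metastore
#   ss -lntp 'sport = :10000' | pids_from_ss   # hiveserver2
```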

---------------------

After changing Hadoop configuration parameters, restart Hadoop (here, HDFS) for them to take effect:

$HADOOP_HOME/sbin/stop-dfs.sh

$HADOOP_HOME/sbin/start-dfs.sh
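The two commands can be wrapped in a small function that also reports the NameNode safe mode state once HDFS is back up. This is only a sketch: `restart_hdfs` is a hypothetical name, and the safe mode query via `hdfs dfsadmin -safemode get` is an addition that the original notes do not include.

```shell
# Hypothetical helper: restart HDFS, then print the safe mode status.
restart_hdfs() {
    if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/sbin/stop-dfs.sh" ]; then
        echo "HADOOP_HOME is not set or has no sbin/stop-dfs.sh" >&2
        return 1
    fi
    "$HADOOP_HOME/sbin/stop-dfs.sh" || return 1
    "$HADOOP_HOME/sbin/start-dfs.sh" || return 1
    # Addition not in the original notes: query the safe mode state.
    "$HADOOP_HOME/bin/hdfs" dfsadmin -safemode get
}

# Example:
#   restart_hdfs
```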

Start hiveserver2:

$HIVE_HOME/bin/hive --service hiveserver2

OR

>hiveserver2

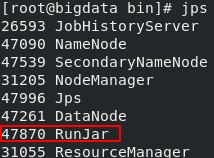

Once hiveserver2 is running, jps lists a RunJar process.

Test the JDBC connection with the beeline tool:

$HIVE_HOME/bin/beeline -u jdbc:hive2://localhost:10000

or

>beeline

>!connect jdbc:hive2://localhost:10000

The default hiveserver2 port is 10000.

Once beeline is connected over JDBC, you can work just as you would in the Hive CLI client.
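For a quick non-interactive check, beeline can also run a single statement with its `-e` option and then exit. The sketch below builds the JDBC URL from a host and port and passes one statement to beeline. `hive_jdbc_url` and `run_hive_sql` are hypothetical helper names, and the example statement is only an illustration.

```shell
# Hypothetical helper: build a HiveServer2 JDBC URL.
# Host and port default to the values used in these notes.
hive_jdbc_url() {
    host="${1:-localhost}"
    port="${2:-10000}"
    echo "jdbc:hive2://${host}:${port}"
}

# Hypothetical helper: run one statement through beeline and exit.
# Requires HIVE_HOME to be set and hiveserver2 to be running.
run_hive_sql() {
    sql="$1"
    "$HIVE_HOME/bin/beeline" -u "$(hive_jdbc_url "${2:-}" "${3:-}")" -e "$sql"
}

# Example:
#   run_hive_sql 'show databases;'
```

If this fails with a connection error, check first that hiveserver2 is actually listening on the port.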

————————————————

ref:https://blog.csdn.net/lblblblblzdx/article/details/79760959