I. Preparing the base environment:

1.1 Installing Docker:

The official Docker website is https://www.docker.com/

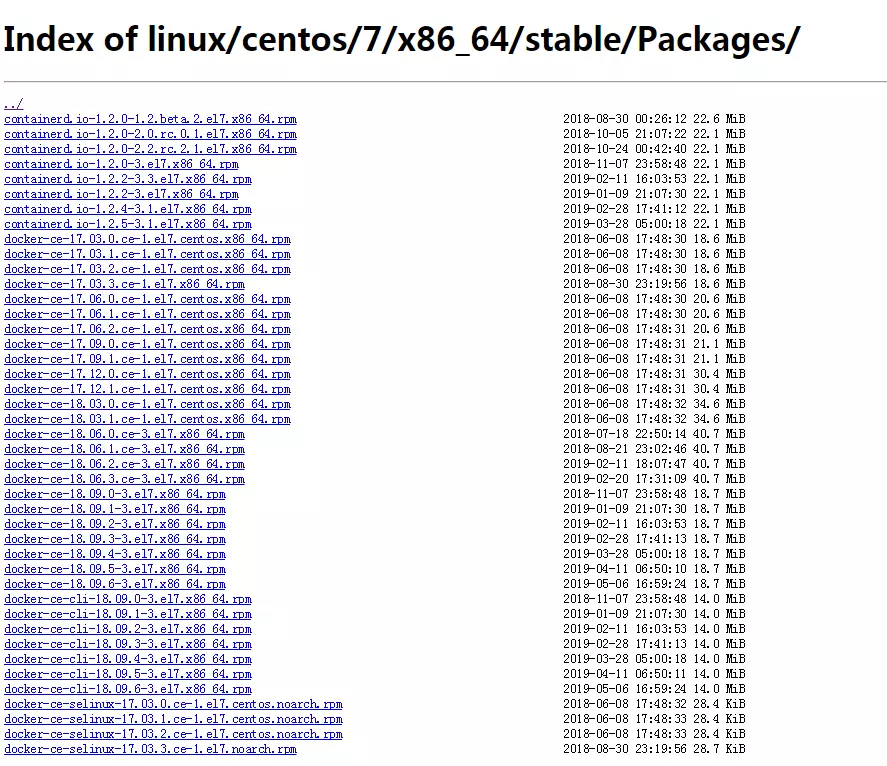

1.1.1 Installing from an RPM package:

Official download location: https://download.docker.com/linux/centos/7/x86_64/stable/Packages/

Version 18.03 is used as the example here:

1.1.2 Running the installation:

[root@k8s-master1 k8s]# yum install -y docker-ce-18.03.1.ce-1.el7.centos.x86_64.rpm

1.1.3 Verifying the Docker version:

    [root@k8s-master1 ~]# docker -v
    Docker version 18.03.1-ce, build 9ee9f40

1.1.4 Starting the Docker service:

Start Docker: systemctl start docker

Enable start on boot: systemctl enable docker

Check the running state of Docker: systemctl status docker

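II. Deploying the etcd cluster:

etcd, developed by CoreOS, is a distributed key-value store built on the Raft algorithm. It is commonly used to hold the critical data of a distributed system, providing consistency and high availability. Kubernetes stores all of its runtime data in etcd, such as cluster IP allocation, master and node state, pod state, and service discovery data.

etcd has four core characteristics. Simple: an HTTP+JSON API that can be used with nothing more than curl. Secure: optional SSL client certificate authentication. Fast: on the order of a thousand or more writes per second per instance. Reliable: properly distributed through the Raft algorithm.

This deployment uses three nodes. In testing, a three-server cluster tolerates the failure of at most one server; if two go down, the remaining member cannot form a majority and the whole etcd cluster becomes unavailable.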
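The one-failure limit follows from Raft's majority rule: a cluster of n members needs floor(n/2) + 1 of them to agree. A minimal sketch of that arithmetic is shown below; the function names are illustrative and not part of etcd.

```shell
#!/usr/bin/env bash
# Quorum size for an etcd (Raft) cluster of N members: floor(N/2) + 1.
etcd_quorum() {
    local members=$1
    echo $(( members / 2 + 1 ))
}

# Number of members that can fail while the cluster still has a quorum.
etcd_fault_tolerance() {
    local members=$1
    echo $(( members - (members / 2 + 1) ))
}

for n in 1 3 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

For three members the quorum is two, so one failure is tolerated. A fourth member would raise the quorum to three without increasing the number of tolerated failures, which is why odd cluster sizes are the usual choice.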

2.1 Installing and deploying the etcd service on each node:

Download location: https://github.com/etcd-io/etcd/releases

2.1.1 Node deployment:

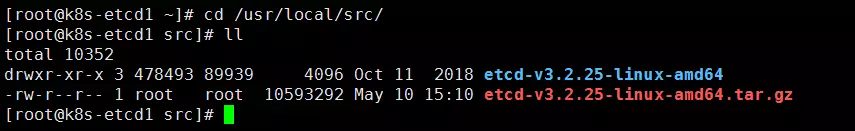

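Upload the release archive to /usr/local/src on the server and extract the binary package.

2.1.2 Copying the executables and linking them into /usr/bin/:

etcd is the command that starts the server; etcdctl is the command-line client.

Create the Kubernetes directories, copy the binaries into place, and link them into /usr/bin/:

    [root@k8s-etcd1 ~]# mkdir -p /opt/kubernetes/{cfg,bin,ssl,log}
    [root@k8s-etcd1 ~]# cp etcdctl etcd /opt/kubernetes/bin/
    [root@k8s-etcd1 ~]# ln -sv /opt/kubernetes/bin/* /usr/bin/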

2.1.3 Creating the etcd systemd unit file:

    [root@k8s-etcd1 ~]# vim /etc/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target

    [Service]
    Type=notify
    # Data directory
    WorkingDirectory=/var/lib/etcd
    # Path of the configuration file
    EnvironmentFile=-/opt/kubernetes/cfg/etcd.conf
    # Set GOMAXPROCS to the number of processors; the path is the etcd server binary
    ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /opt/kubernetes/bin/etcd"

    [Install]
    WantedBy=multi-user.target

2.1.4 Creating the etcd user and setting directory ownership:

    [root@k8s-etcd1 ~]# mkdir /var/lib/etcd
    [root@k8s-etcd1 ~]# useradd etcd -s /sbin/nologin
    [root@k8s-etcd1 ~]# chown etcd:etcd /var/lib/etcd/
    [root@k8s-etcd1 ~]# mkdir /etc/etcd

2.1.5 Editing the main configuration file etcd.conf:

The cluster uses HTTPS connections.

    [root@k8s-etcd1 ~]# cat /opt/kubernetes/cfg/etcd.conf
    #[member]
    ETCD_NAME="etcd-node1"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    #ETCD_SNAPSHOT_COUNTER="10000"
    #ETCD_HEARTBEAT_INTERVAL="100"
    #ETCD_ELECTION_TIMEOUT="1000"
    ETCD_LISTEN_PEER_URLS="https://10.172.160.250:2380"
    ETCD_LISTEN_CLIENT_URLS="https://10.172.160.250:2379,https://127.0.0.1:2379"
    #ETCD_MAX_SNAPSHOTS="5"
    #ETCD_MAX_WALS="5"
    #ETCD_CORS=""
    #[cluster]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.172.160.250:2380"
    # if you use different ETCD_NAME (e.g. test),
    # set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
    ETCD_INITIAL_CLUSTER="etcd-node1=https://10.172.160.250:2380,etcd-node2=https://10.51.50.234:2380,etcd-node3=https://10.170.185.97:2380"
    ETCD_INITIAL_CLUSTER_STATE="new"
    ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
    ETCD_ADVERTISE_CLIENT_URLS="https://10.172.160.250:2379"
    #[security]
    ETCD_CLIENT_CERT_AUTH="true"
    ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_PEER_CLIENT_CERT_AUTH="true"
    ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    [root@k8s-etcd1 ~]#

Notes:

    ETCD_DATA_DIR                     # local data directory of this node
    ETCD_LISTEN_PEER_URLS             # address and port for traffic between cluster members; use this node's own IP
    ETCD_LISTEN_CLIENT_URLS           # address for client access; use this node's own IP
    ETCD_NAME                         # name of this node; must be unique within a cluster
    ETCD_INITIAL_ADVERTISE_PEER_URLS  # peer URL advertised to the other members (static and dynamic discovery); use this node's own IP
    ETCD_ADVERTISE_CLIENT_URLS        # client URL advertised by this node; use this node's own IP
    ETCD_INITIAL_CLUSTER              # "node1-name=http://IP:port,node2-name=http://IP:port,node3-name=http://IP:port"; lists every node in the cluster
    ETCD_INITIAL_CLUSTER_TOKEN        # token used when creating the cluster; identical on all nodes of one cluster
    ETCD_INITIAL_CLUSTER_STATE        # "new" when creating a cluster, "existing" for a cluster that already exists

If ETCD_DATA_DIR is not set in the configuration file, etcd creates a *.etcd directory under its working directory (here /var/lib/etcd) by default.

Once the etcd cluster has been created successfully, the initial cluster state can be changed to existing.
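Only ETCD_NAME and the node's own IP differ between the three configuration files, while ETCD_INITIAL_CLUSTER must be identical on every node. A small sketch for building that value from name/IP pairs is shown below. The function name is hypothetical, and peer port 2380 is assumed, as in the configuration above.

```shell
#!/usr/bin/env bash
# Build the ETCD_INITIAL_CLUSTER value from "name=ip" pairs.
# $1 is the URL scheme (http or https); the remaining arguments are the pairs.
# Peer port 2380 is assumed.
build_initial_cluster() {
    local scheme=$1; shift
    local result="" pair name ip
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        result+="${result:+,}${name}=${scheme}://${ip}:2380"
    done
    echo "$result"
}

build_initial_cluster https \
    etcd-node1=10.172.160.250 \
    etcd-node2=10.51.50.234 \
    etcd-node3=10.170.185.97
```

Generating the string once and pasting it into all three files avoids mismatches between nodes, which would otherwise keep members from joining the same cluster.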

2.1.6 Starting etcd and verifying the cluster

Start etcd, enable it at boot, and check its status:

systemctl start etcd && systemctl enable etcd && systemctl status etcd

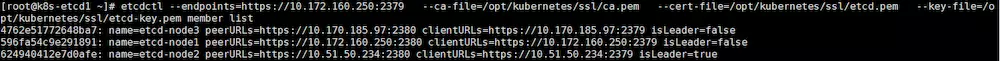

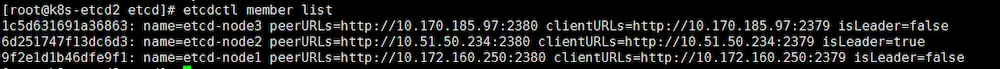

List all etcd members:

[root@k8s-etcd1 ~]# etcdctl --endpoints=https://10.172.160.250:2379 --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/etcd.pem --key-file=/opt/kubernetes/ssl/etcd-key.pem member list

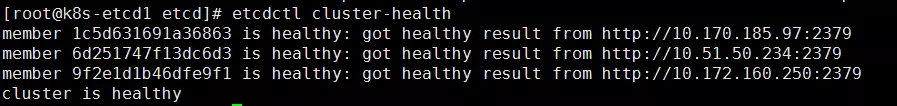

Check the cluster health:

[root@k8s-etcd1 ~]# etcdctl --endpoints=https://10.172.160.250:2379 --ca-file=/opt/kubernetes/ssl/ca.pem --cert-file=/opt/kubernetes/ssl/etcd.pem --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health

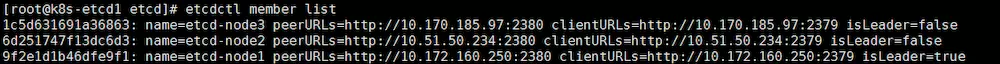

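2.1.7 Switching to HTTP mode (required in my environment for special reasons):

Edit the configuration file: change every https setting to http and remove the certificate settings. Then delete the files under /var/lib/etcd/ (this discards the existing cluster data) and restart the service.

To test failover, stop the service on the .250 node. If requests then move over to the .234 node, the cluster is working correctly.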
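The configuration change can be scripted rather than edited by hand. The following is a sketch only: the function name is hypothetical, and it assumes the certificate settings use the variable names shown in etcd.conf above, with or without the ETCD_ prefix.

```shell
#!/usr/bin/env bash
# Print an etcd.conf converted from https to http with the TLS settings removed.
# $1 is the path of the existing file; the file itself is not modified.
etcd_conf_to_http() {
    local conf=$1
    sed -E \
        -e '/^(ETCD_)?(PEER_)?(CLIENT_CERT_AUTH|TRUSTED_CA_FILE|CA_FILE|CERT_FILE|KEY_FILE)=/d' \
        -e 's#https://#http://#g' \
        "$conf"
}

# Example: write the converted settings to a new file for review.
# etcd_conf_to_http /opt/kubernetes/cfg/etcd.conf > /tmp/etcd.conf.http
```

Writing to a separate file first makes it possible to diff the result against the original before replacing it on each node.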

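III. Kubernetes cluster deployment: the master nodes:

3.1 Deploying the Kubernetes apiserver:

kube-apiserver exposes an HTTP REST interface and is the single entry point for creating, reading, updating, and deleting every resource in Kubernetes. kube-apiserver is stateless: three kube-apiserver instances need no active/standby election, because the data they produce is stored directly in etcd. The API can therefore be called straight through a load balancer.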

3.2 Downloading the Kubernetes packages:

The packages are placed under /usr/local/src/

    [root@k8s-master1 src]# pwd
    /usr/local/src
    [root@k8s-master1 src]# tar xvf kubernetes-1.11.0-client-linux-amd64.tar.gz
    [root@k8s-master1 src]# tar xvf kubernetes-1.11.0-node-linux-amd64.tar.gz
    [root@k8s-master1 src]# tar xvf kubernetes-1.11.0-server-linux-amd64.tar.gz
    [root@k8s-master1 src]# tar xvf kubernetes-1.11.0.tar.gz

Copy the binaries into the corresponding directories:

    [root@k8s-master1 src]# cp kubernetes/server/bin/kube-apiserver /opt/kubernetes/bin/
    [root@k8s-master1 src]# cp kubernetes/server/bin/kube-scheduler /usr/bin/
    [root@k8s-master1 src]# cp kubernetes/server/bin/kube-controller-manager /usr/bin/

3.3 Preparation

3.3.1 Creating the JSON file used to generate the CSR:

    [root@k8s-master1 src]# vim kubernetes-csr.json
    {
      "CN": "kubernetes",
      "hosts": [
        "127.0.0.1",
        "10.1.0.1",
        "192.168.100.101",
        "192.168.100.102",
        "192.168.100.112",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
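The "hosts" list decides which addresses the apiserver certificate is valid for. Reading the rest of this guide, 10.1.0.1 is the first address of the service range 10.1.0.0/16, and the 192.168.100.x entries appear to be the two masters and the address that kubectl connects to. That reading is an inference, not something the source states. As a sketch, the file can be rendered from a list of IPs so an address is harder to forget; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print a kubernetes-csr.json whose leading "hosts" entries are the given IPs.
# The DNS names and the "names" block are copied from the file above.
render_apiserver_csr() {
    local hosts="" ip
    for ip in "$@"; do
        hosts+="    \"${ip}\","$'\n'
    done
    cat <<EOF
{
  "CN": "kubernetes",
  "hosts": [
${hosts}    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
}

render_apiserver_csr 127.0.0.1 10.1.0.1 192.168.100.101 192.168.100.102 192.168.100.112
```

Redirecting the output to kubernetes-csr.json gives a file equivalent to the hand-written one. An address added later, such as a new master, requires regenerating the certificate.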

Generate the corresponding certificate files:

[root@k8s-master1 src]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=/opt/kubernetes/ssl/ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

Copy the certificates to each server:

    [root@k8s-master1:/usr/local/src/ssl/master]# cp kubernetes*.pem /opt/kubernetes/ssl/
    [root@k8s-master1 src]# bash /root/ssh.sh

Note: ssh.sh is a synchronization script that copies files between the servers.

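3.3.2 Creating the client token file used by the apiserver:

Generate a random token, then create the token file on every master server. Each line of the file has the form token,user,uid,"group"; use the token generated on your own system as the first field.

    [root@k8s-master1:/usr/local/src/ssl/master]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
    9077bdc74eaffb83f672fe4c530af0d6
    [root@k8s-master1 ~]# vim /opt/kubernetes/ssl/bootstrap-token.csv
    7b7918630245ac1b5221b26be11e6b85,kubelet-bootstrap,10001,"system:kubelet-bootstrap"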
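Generating the token and writing the CSV line in one step avoids copying the wrong value by hand. A minimal sketch with hypothetical function names follows. It uses od -t x1 (one byte per group) rather than the -t x shown above, so the hex string does not depend on the machine's byte order.

```shell
#!/usr/bin/env bash
# Generate a random 128-bit token as 32 lowercase hex characters.
generate_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print one token file line in the form token,user,uid,"group".
bootstrap_token_line() {
    local token=$1
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

token=$(generate_bootstrap_token)
bootstrap_token_line "$token"
```

The printed line can be redirected to /opt/kubernetes/ssl/bootstrap-token.csv and then copied unchanged to the other masters, so that every apiserver accepts the same token.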

3.3.3 Configuring basic-auth users and passwords:

Each line of the file has the form password,user,uid.

    vim /opt/kubernetes/ssl/basic-auth.csv
    admin,admin,1
    readonly,readonly,2

3.4 Deployment:

3.4.1 Creating the api-server systemd unit file:

    [root@k8s-master1 src]# cat /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target

    [Service]
    ExecStart=/opt/kubernetes/bin/kube-apiserver \
      --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \
      --bind-address=192.168.100.101 \
      --insecure-bind-address=127.0.0.1 \
      --authorization-mode=Node,RBAC \
      --runtime-config=rbac.authorization.k8s.io/v1 \
      --kubelet-https=true \
      --anonymous-auth=false \
      --basic-auth-file=/opt/kubernetes/ssl/basic-auth.csv \
      --enable-bootstrap-token-auth \
      --token-auth-file=/opt/kubernetes/ssl/bootstrap-token.csv \
      --service-cluster-ip-range=10.1.0.0/16 \
      --service-node-port-range=20000-40000 \
      --tls-cert-file=/opt/kubernetes/ssl/kubernetes.pem \
      --tls-private-key-file=/opt/kubernetes/ssl/kubernetes-key.pem \
      --client-ca-file=/opt/kubernetes/ssl/ca.pem \
      --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --etcd-cafile=/opt/kubernetes/ssl/ca.pem \
      --etcd-certfile=/opt/kubernetes/ssl/kubernetes.pem \
      --etcd-keyfile=/opt/kubernetes/ssl/kubernetes-key.pem \
      --etcd-servers=https://192.168.100.105:2379,https://192.168.100.106:2379,https://192.168.100.107:2379 \
      --enable-swagger-ui=true \
      --allow-privileged=true \
      --audit-log-maxage=30 \
      --audit-log-maxbackup=3 \
      --audit-log-maxsize=100 \
      --audit-log-path=/opt/kubernetes/log/api-audit.log \
      --event-ttl=1h \
      --v=2 \
      --logtostderr=false \
      --log-dir=/opt/kubernetes/log
    Restart=on-failure
    RestartSec=5
    Type=notify
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

3.4.2 Starting and verifying the api-server:

    [root@k8s-master1 src]# systemctl daemon-reload && systemctl enable kube-apiserver && systemctl start kube-apiserver && systemctl status kube-apiserver
    ● kube-apiserver.service - Kubernetes API Server
       Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2019-05-29 11:05:15 CST; 19s ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
     Main PID: 1053 (kube-apiserver)
        Tasks: 14
       Memory: 258.0M
       CGroup: /system.slice/kube-apiserver.service
               └─1053 /opt/kubernetes/bin/kube-apiserver --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestr...

    May 29 11:05:03 k8s-master1.example.com systemd[1]: Starting Kubernetes API Server...
    May 29 11:05:03 k8s-master1.example.com kube-apiserver[1053]: Flag --admission-control has been deprecated, Use --enable-admission-plugins or --disable-ad...version.
    May 29 11:05:03 k8s-master1.example.com kube-apiserver[1053]: Flag --insecure-bind-address has been deprecated, This flag will be removed in a future version.

Copy the unit file to server 2, change --bind-address to the IP address of server 2, then restart the api-server on server 2 and verify it.
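The only per-master difference in the unit file is the --bind-address value, so the copy for server 2 can be produced with a single substitution. A sketch with a hypothetical function name is shown below. The pattern requires two leading hyphens, so --insecure-bind-address is left untouched.

```shell
#!/usr/bin/env bash
# Print a copy of a kube-apiserver unit file with --bind-address replaced.
# $1: path of the unit file, $2: IP address of the target master.
set_bind_address() {
    local unit=$1 new_ip=$2
    sed -E "s#--bind-address=[0-9.]+#--bind-address=${new_ip}#" "$unit"
}

# Example: prepare the unit file for the second master (192.168.100.102).
# set_bind_address /usr/lib/systemd/system/kube-apiserver.service 192.168.100.102 > /tmp/kube-apiserver.service
```

After copying the generated file into place on the second master, run systemctl daemon-reload there before restarting the service so that systemd picks up the new unit.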

3.4.3 Configuring the Controller Manager service:

    [root@k8s-master1 src]# cat /usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \
      --address=127.0.0.1 \
      --master=http://127.0.0.1:8080 \
      --allocate-node-cidrs=true \
      --service-cluster-ip-range=10.1.0.0/16 \
      --cluster-cidr=10.2.0.0/16 \
      --cluster-name=kubernetes \
      --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
      --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
      --root-ca-file=/opt/kubernetes/ssl/ca.pem \
      --leader-elect=true \
      --v=2 \
      --logtostderr=false \
      --log-dir=/opt/kubernetes/log
    Restart=on-failure
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

Copy the binary into place:

[root@k8s-master1 src]# cp kubernetes/server/bin/kube-controller-manager /opt/kubernetes/bin/

Copy the binary and the unit file to master2 with scp, then start and verify the service there.

3.4.4 Starting and verifying kube-controller-manager:

    [root@k8s-master1 src]# systemctl restart kube-controller-manager && systemctl status kube-controller-manager
    ● kube-controller-manager.service - Kubernetes Controller Manager
       Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; disabled; vendor preset: disabled)
       Active: active (running) since Wed 2019-05-29 11:14:24 CST; 7s ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
     Main PID: 1790 (kube-controller)
        Tasks: 8
       Memory: 11.0M
       CGroup: /system.slice/kube-controller-manager.service
               └─1790 /opt/kubernetes/bin/kube-controller-manager --address=127.0.0.1 --master=http://127.0.0.1:8080 --allocate-node-cidrs=true --service-cluster-ip-r...

    May 29 11:14:24 k8s-master1.example.com systemd[1]: Started Kubernetes Controller Manager.

3.4.5 Deploying the Kubernetes Scheduler:

    [root@k8s-master1 src]# vim /usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    ExecStart=/opt/kubernetes/bin/kube-scheduler \
      --address=127.0.0.1 \
      --master=http://127.0.0.1:8080 \
      --leader-elect=true \
      --v=2 \
      --logtostderr=false \
      --log-dir=/opt/kubernetes/log
    Restart=on-failure
    RestartSec=5

    [Install]
    WantedBy=multi-user.target

3.4.6 Preparing the binary:

    [root@k8s-master1 src]# cp kubernetes/server/bin/kube-scheduler /opt/kubernetes/bin/
    [root@k8s-master1 src]# scp /opt/kubernetes/bin/kube-scheduler 192.168.100.102:/opt/kubernetes/bin/

3.4.7 Starting and verifying the service:

    [root@k8s-master1 kube-master]# systemctl daemon-reload && systemctl enable kube-scheduler && systemctl start kube-scheduler && systemctl status kube-scheduler
    [root@k8s-master1 kube-master]# systemctl status kube-scheduler
    ● kube-scheduler.service - Kubernetes Scheduler
       Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2019-05-29 11:14:05 CST; 8min ago
         Docs: https://github.com/GoogleCloudPlatform/kubernetes
     Main PID: 1732 (kube-scheduler)
        Tasks: 13
       Memory: 8.5M
       CGroup: /system.slice/kube-scheduler.service
               └─1732 /opt/kubernetes/bin/kube-scheduler --address=127.0.0.1 --master=http://127.0.0.1:8080 --leader-elect=true --v=2 --logtostderr=false --log-dir=/o...

3.5 Deploying the kubectl command-line tool:

    [root@k8s-master1 src]# cp kubernetes/client/bin/kubectl /opt/kubernetes/bin/
    [root@k8s-master1 src]# scp /opt/kubernetes/bin/kubectl 192.168.100.102:/opt/kubernetes/bin/
    [root@k8s-master1 src]# ln -sv /opt/kubernetes/bin/kubectl /usr/bin/

3.5.1 Creating the admin certificate signing request:

    vim admin-csr.json
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }

3.5.2 Generating the admin certificate and private key:

    [root@k8s-master1 src]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=/opt/kubernetes/ssl/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
    [root@k8s-master1 src]# ll admin*
    -rw-r--r-- 1 root root 1009 Jul 11 22:51 admin.csr
    -rw-r--r-- 1 root root  229 Jul 11 22:50 admin-csr.json
    -rw------- 1 root root 1679 Jul 11 22:51 admin-key.pem
    -rw-r--r-- 1 root root 1399 Jul 11 22:51 admin.pem
    [root@k8s-master1 src]# cp admin*.pem /opt/kubernetes/ssl/

3.5.3 Setting the cluster parameters:

    master1:
    kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.100.112:6443
    master2:
    kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.100.112:6443

3.5.4 Setting the client authentication parameters:

    [root@k8s-master1 src]# kubectl config set-credentials admin --client-certificate=/opt/kubernetes/ssl/admin.pem --embed-certs=true --client-key=/opt/kubernetes/ssl/admin-key.pem
    User "admin" set.
    [root@k8s-master2 src]# kubectl config set-credentials admin --client-certificate=/opt/kubernetes/ssl/admin.pem --embed-certs=true --client-key=/opt/kubernetes/ssl/admin-key.pem
    User "admin" set.

3.5.5 Setting the context parameters:

    [root@k8s-master1 src]# kubectl config set-context kubernetes --cluster=kubernetes --user=admin
    Context "kubernetes" created.
    [root@k8s-master2 src]# kubectl config set-context kubernetes --cluster=kubernetes --user=admin
    Context "kubernetes" created.

3.5.6 Setting the default context:

    [root@k8s-master2 src]# kubectl config use-context kubernetes
    Switched to context "kubernetes".
    [root@k8s-master1 src]# kubectl config use-context kubernetes
    Switched to context "kubernetes".

The master deployment is now complete. Run the following commands to test it:

    [root@k8s-master1 ~]# kubectl get pods
    No resources found.
    [root@k8s-master1 ~]# kubectl get nodes
    No resources found.