Setup Steps

Background concepts: https://www.cnblogs.com/sxdcgaq8080/p/10640879.html

=======================================Environment Preparation=========================================

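1. Create three CentOS virtual machines

Reference: https://www.cnblogs.com/sxdcgaq8080/p/7466529.html

master: 4 GB memory, 50 GB disk

Node1: 2 GB memory, 40 GB disk

Node2: 2 GB memory, 40 GB disk

[Note: Docker should preferably not already be installed on any of the three servers; otherwise the etcd installation can fail because of version conflicts.]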

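2. Set the root account password on each machine

3. Bring up the network interface on each machine and obtain an IP address, so that Xshell on the local machine can connect to the servers

Reference: https://www.cnblogs.com/sxdcgaq8080/p/9178926.html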

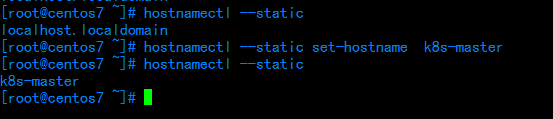

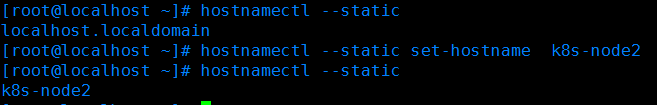

4. Set the static hostname on each machine as listed below

Reference: https://www.cnblogs.com/sxdcgaq8080/p/10616441.html

192.168.92.131 k8s-master

192.168.92.132 k8s-node1

192.168.92.133 k8s-node2

Run the matching command on each of the three hosts.

On 192.168.92.131:

hostnamectl --static set-hostname k8s-master

On 192.168.92.132:

hostnamectl --static set-hostname k8s-node1

On 192.168.92.133:

hostnamectl --static set-hostname k8s-node2
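The three commands above differ only in the hostname, so the IP-to-hostname table can be written down once and reused. The following is a minimal sketch, not part of the original steps: the function name hostname_for_ip is hypothetical, and the IP addresses are the ones used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: map one of this guide's IPs to its static hostname.
hostname_for_ip() {
    case "$1" in
        192.168.92.131) echo "k8s-master" ;;
        192.168.92.132) echo "k8s-node1" ;;
        192.168.92.133) echo "k8s-node2" ;;
        *) echo "unknown IP: $1" >&2; return 1 ;;
    esac
}

# Example (left commented out because it changes the machine's hostname):
# hostnamectl --static set-hostname "$(hostname_for_ip 192.168.92.132)"
```

Keeping the mapping in one function means an unknown address fails loudly instead of silently setting a wrong hostname.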

5. Add the following entries to /etc/hosts on all three servers

192.168.92.131 k8s-master

192.168.92.131 etcd

192.168.92.131 registry

192.168.92.132 k8s-node1

192.168.92.133 k8s-node2

Edit the file with:

vi /etc/hosts

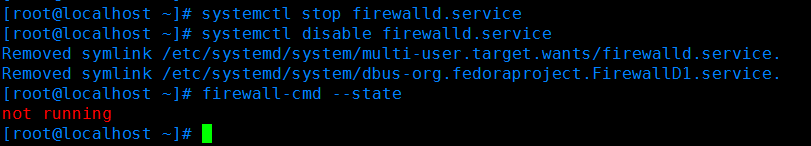
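Editing the file by hand on three machines is easy to get wrong, so the same entries could also be appended by a small script. This is only a sketch under assumptions: the function name add_cluster_hosts is hypothetical, and it takes the target file as an argument so it can be tried on a scratch file before touching /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append this guide's host entries to a hosts file,
# skipping any entry that is already present as a whole line.
add_cluster_hosts() {
    hosts_file="$1"
    while IFS= read -r entry; do
        # -x matches whole lines only, -F treats the entry as a fixed string.
        grep -qxF "$entry" "$hosts_file" 2>/dev/null \
            || printf '%s\n' "$entry" >> "$hosts_file"
    done <<'EOF'
192.168.92.131 k8s-master
192.168.92.131 etcd
192.168.92.131 registry
192.168.92.132 k8s-node1
192.168.92.133 k8s-node2
EOF
}

# Example (commented out because it edits a system file):
# add_cluster_hosts /etc/hosts
```

Because existing lines are skipped, running the function twice leaves the file unchanged the second time.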

6. Stop and disable the firewall on all three servers

Reference: https://www.cnblogs.com/sxdcgaq8080/p/10032829.html

Use the following commands (stop first, then disable):

systemctl stop firewalld.service

systemctl disable firewalld.service

Then check the state with the command below; if it prints "not running", the firewall has been stopped successfully

firewall-cmd --state

====================================Main Installation Steps=====================================

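I. Master node installation

The master node needs the following installed:

etcd: the data store

flannel: a network solution for k8s

kubernetes [includes kube-apiserver, kube-controller-manager, kube-scheduler]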

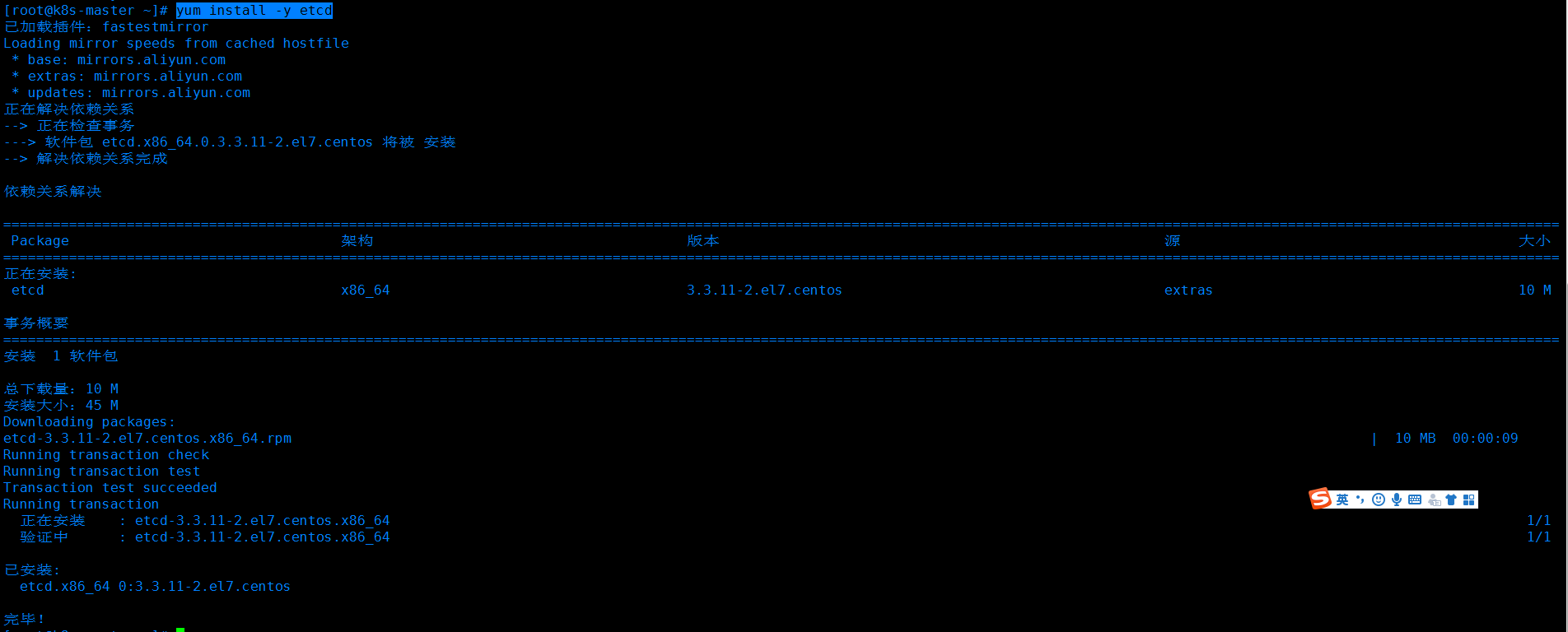

1. Installing etcd

1.1 Command

yum install -y etcd

1.2 Edit the configuration file

Command

vi /etc/etcd/etcd.conf

Change the contents to:

    # [member]
    ETCD_NAME=master
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    #ETCD_WAL_DIR=""
    #ETCD_SNAPSHOT_COUNT="10000"
    #ETCD_HEARTBEAT_INTERVAL="100"
    #ETCD_ELECTION_TIMEOUT="1000"
    #ETCD_LISTEN_PEER_URLS="http://localhost:2380"
    ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379,http://0.0.0.0:4001"
    #ETCD_MAX_SNAPSHOTS="5"
    #ETCD_MAX_WALS="5"
    #ETCD_CORS=""
    #
    #[cluster]
    #ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"
    # if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
    #ETCD_INITIAL_CLUSTER="default=http://localhost:2380"
    #ETCD_INITIAL_CLUSTER_STATE="new"
    #ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_ADVERTISE_CLIENT_URLS="http://etcd:2379,http://etcd:4001"
    #ETCD_DISCOVERY=""
    #ETCD_DISCOVERY_SRV=""
    #ETCD_DISCOVERY_FALLBACK="proxy"
    #ETCD_DISCOVERY_PROXY=""
    #ETCD_STRICT_RECONFIG_CHECK="false"
    #ETCD_AUTO_COMPACTION_RETENTION="0"
    #ETCD_ENABLE_V2="true"
    #
    #[proxy]
    #ETCD_PROXY="off"
    #ETCD_PROXY_FAILURE_WAIT="5000"
    #ETCD_PROXY_REFRESH_INTERVAL="30000"
    #ETCD_PROXY_DIAL_TIMEOUT="1000"
    #ETCD_PROXY_WRITE_TIMEOUT="5000"
    #ETCD_PROXY_READ_TIMEOUT="0"
    #
    #[security]
    #ETCD_CERT_FILE=""
    #ETCD_KEY_FILE=""
    #ETCD_CLIENT_CERT_AUTH="false"
    #ETCD_TRUSTED_CA_FILE=""
    #ETCD_AUTO_TLS="false"
    #ETCD_PEER_CERT_FILE=""
    #ETCD_PEER_KEY_FILE=""
    #ETCD_PEER_CLIENT_CERT_AUTH="false"
    #ETCD_PEER_TRUSTED_CA_FILE=""
    #ETCD_PEER_AUTO_TLS="false"
    #
    #[logging]
    #ETCD_DEBUG="false"
    # examples for -log-package-levels etcdserver=WARNING,security=DEBUG
    #ETCD_LOG_PACKAGE_LEVELS=""
    #
    #[profiling]
    #ETCD_ENABLE_PPROF="false"
    #ETCD_METRICS="basic"
    #
    #[auth]
    #ETCD_AUTH_TOKEN="simple"

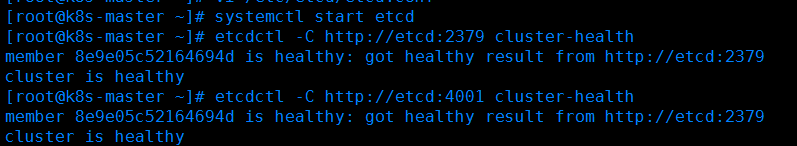
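Only a handful of lines in this file are uncommented, so instead of editing it in vi the same result could be scripted. The sketch below is an assumption-laden illustration, not the author's method: set_conf_key is a hypothetical helper that replaces the first line defining a key (commented out or not) or appends the key if it is missing. It always writes the value in double quotes, which should be equivalent for this kind of KEY=value file.

```shell
#!/bin/sh
# Hypothetical helper: set KEY="VALUE" in a sysconfig-style file.
# Replaces the first line that defines KEY (even if commented out with '#'),
# or appends the key at the end when no such line exists.
set_conf_key() {
    file="$1"; key="$2"; value="$3"
    tmp="$(mktemp)" || return 1
    awk -v key="$key" -v value="$value" '
        !found && $0 ~ ("^#?" key "=") { print key "=\"" value "\""; found = 1; next }
        { print }
        END { if (!found) print key "=\"" value "\"" }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example with values from this guide (commented out: edits a system file):
# set_conf_key /etc/etcd/etcd.conf ETCD_NAME master
# set_conf_key /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379,http://0.0.0.0:4001"
# set_conf_key /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS "http://etcd:2379,http://etcd:4001"
```

The helper assumes keys made of letters, digits, and underscores and values without backslashes or double quotes, which holds for the settings shown here.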

1.3 Start the etcd service and verify it

Start the etcd service:

systemctl start etcd

Enable etcd at boot:

systemctl enable etcd

Check cluster health:

etcdctl -C http://etcd:2379 cluster-health

etcdctl -C http://etcd:4001 cluster-health

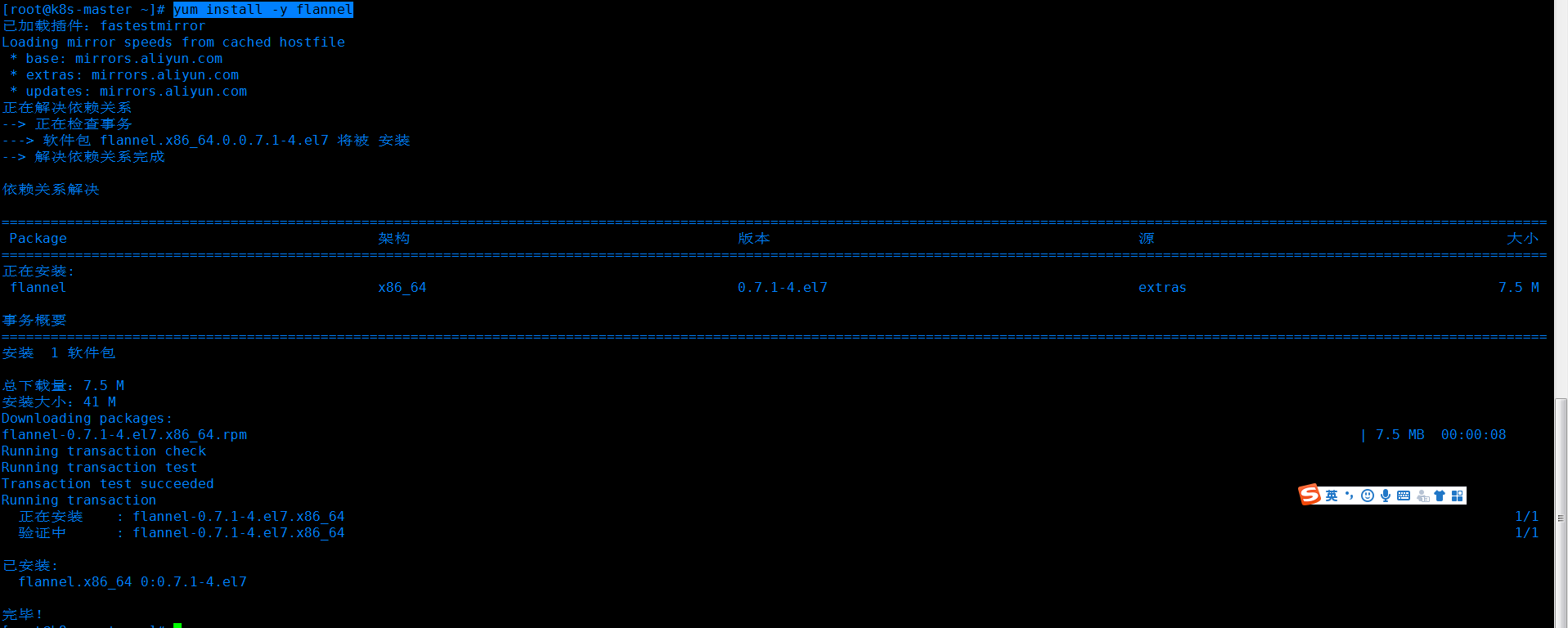
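Right after systemctl start, etcd may need a moment before the health check succeeds. A small retry wrapper can cover that gap. This is a sketch only: the retry function, the attempt count, and the delay are all illustrative assumptions, not part of the original steps.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds or attempts run out.
# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
    attempts="$1"; delay="$2"; shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            echo "gave up after $n attempts: $*" >&2
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# Example, using the health-check command from this guide
# (commented out because it needs a running etcd):
# retry 10 2 etcdctl -C http://etcd:2379 cluster-health
```

The wrapper returns success as soon as the command succeeds once, and returns 1 after the last failed attempt.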

2. Installing flannel

2.1 Install command

yum install -y flannel

2.2 Configure flannel

Command:

vi /etc/sysconfig/flanneld

Change the contents to:

    # Flanneld configuration options
    # etcd url location. Point this to the server where etcd runs
    FLANNEL_ETCD_ENDPOINTS="http://etcd:2379"
    # etcd config key. This is the configuration key that flannel queries
    # For address range assignment
    FLANNEL_ETCD_PREFIX="/atomic.io/network"
    # Any additional options that you want to pass
    #FLANNEL_OPTIONS=""

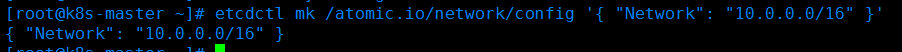

2.3 Create the flannel key in etcd

Command

etcdctl mk /atomic.io/network/config '{ "Network": "10.0.0.0/16" }'

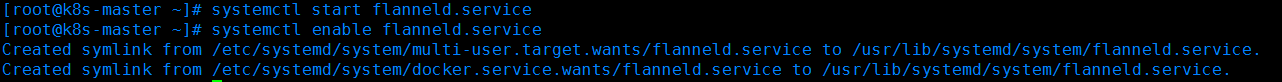
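The key written here has to sit under the same prefix as FLANNEL_ETCD_PREFIX in /etc/sysconfig/flanneld, since flannel reads its network settings from the config key below that prefix. To avoid typing the path twice, it could be derived from the config file. This is a sketch under assumptions: flannel_config_key is a hypothetical helper, and it relies on the flanneld file being plain KEY="value" lines that a shell can source.

```shell
#!/bin/sh
# Hypothetical helper: print the etcd key flannel will read, derived from the
# FLANNEL_ETCD_PREFIX value in a flanneld sysconfig file.
flannel_config_key() {
    # Source the file in a subshell so its variables do not leak into the caller.
    (
        . "$1" || exit 1
        [ -n "$FLANNEL_ETCD_PREFIX" ] || exit 1
        printf '%s/config\n' "$FLANNEL_ETCD_PREFIX"
    )
}

# Example (commented out because it needs etcd and the real config file):
# etcdctl mk "$(flannel_config_key /etc/sysconfig/flanneld)" '{ "Network": "10.0.0.0/16" }'
```

With the configuration shown in step 2.2, the helper would print /atomic.io/network/config, the same key used in the command above.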

2.4 Start the flannel service and enable it at boot

systemctl start flanneld.service

systemctl enable flanneld.service

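3. Installing kubernetes

3.1 Install command

yum install kubernetes

3.2 After installation, edit the configuration

The configuration changes are needed for the following components, which run on the master:

kube-apiserver

kube-scheduler

kube-controller-manager

Command:

vi /etc/kubernetes/apiserver

Change the contents to: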

    ###
    # kubernetes system config
    #
    # The following values are used to configure the kube-apiserver
    #
    # The address on the local server to listen to.
    KUBE_API_ADDRESS="--address=0.0.0.0"
    # The port on the local server to listen on.
    KUBE_API_PORT="--port=8080"
    # Port minions listen on
    KUBELET_PORT="--kubelet-port=10250"
    # Comma separated list of nodes in the etcd cluster
    KUBE_ETCD_SERVERS="--etcd-servers=http://etcd:2379"
    # Address range to use for services
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
    # default admission control policies
    # KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
    KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
    # Add your own!
    KUBE_API_ARGS=""

Command:

vi /etc/kubernetes/config

Change the contents to:

    ###
    # kubernetes system config
    #
    # The following values are used to configure various aspects of all
    # kubernetes services, including
    #
    # kube-apiserver.service
    # kube-controller-manager.service
    # kube-scheduler.service
    # kubelet.service
    # kube-proxy.service
    # logging to stderr means we get it in the systemd journal
    KUBE_LOGTOSTDERR="--logtostderr=true"
    # journal message level, 0 is debug
    KUBE_LOG_LEVEL="--v=0"
    # Should this cluster be allowed to run privileged docker containers
    KUBE_ALLOW_PRIV="--allow-privileged=false"
    # How the controller-manager, scheduler, and proxy find the apiserver
    KUBE_MASTER="--master=http://k8s-master:8080"

3.3 Start the three component services and enable them at boot

systemctl start kube-apiserver.service

systemctl start kube-controller-manager.service

systemctl start kube-scheduler.service

systemctl enable kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl enable kube-scheduler.service

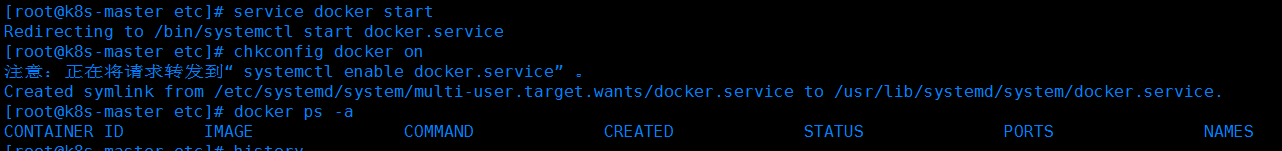
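Starting and enabling each unit is the same pair of commands repeated, so it could be wrapped in a loop. The following is a sketch, not part of the original steps: start_and_enable is a hypothetical function, and the SYSTEMCTL variable is an assumption added so the loop can be dry-run (for example with SYSTEMCTL=echo) on a machine without these services.

```shell
#!/bin/sh
# Hypothetical helper: start each unit and enable it at boot.
# SYSTEMCTL defaults to systemctl; set SYSTEMCTL=echo to only print the actions.
start_and_enable() {
    for unit in "$@"; do
        "${SYSTEMCTL:-systemctl}" start "$unit" || return 1
        "${SYSTEMCTL:-systemctl}" enable "$unit" || return 1
    done
}

# Example for the master's three components
# (commented out because it needs systemd and root privileges):
# start_and_enable kube-apiserver.service kube-controller-manager.service kube-scheduler.service
```

The loop stops at the first unit that fails to start or enable, which makes a broken service easier to spot than a long list of commands pasted at once.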

4. Installing docker

Reference: https://www.cnblogs.com/sxdcgaq8080/p/9178918.html

Docker must be installed last; otherwise an error occurs. For the fix, see: https://www.cnblogs.com/sxdcgaq8080/p/10622695.html

4.1 Install docker:

yum install -y docker

4.2 Start the docker service:

service docker start

4.3 Enable docker at boot:

chkconfig docker on

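II. Worker node installation

Each worker node needs the following components installed:

flannel

kubernetes

docker

[Note: follow the steps below on both worker nodes]

1. Installing flannel

1.1 Install command:

yum install flannel

1.2 Configure flannel

Edit the configuration file:

vi /etc/sysconfig/flanneld

Change the contents to: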

    # Flanneld configuration options
    # etcd url location. Point this to the server where etcd runs
    FLANNEL_ETCD_ENDPOINTS="http://etcd:2379"
    # etcd config key. This is the configuration key that flannel queries
    # For address range assignment
    FLANNEL_ETCD_PREFIX="/atomic.io/network"
    # Any additional options that you want to pass
    #FLANNEL_OPTIONS=""

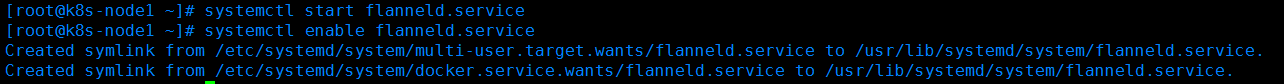

1.3 Start the flannel service and enable it at boot

systemctl start flanneld.service

systemctl enable flanneld.service

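2. Installing kubernetes

2.1 Install command

yum install kubernetes

2.2 Edit the configuration files

The kubernetes components that run on a worker node are:

kubelet

kube-proxy

Edit the configuration file:

vi /etc/kubernetes/config

On both worker nodes, change the contents to: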

    ###
    # kubernetes system config
    #
    # The following values are used to configure various aspects of all
    # kubernetes services, including
    #
    # kube-apiserver.service
    # kube-controller-manager.service
    # kube-scheduler.service
    # kubelet.service
    # kube-proxy.service
    # logging to stderr means we get it in the systemd journal
    KUBE_LOGTOSTDERR="--logtostderr=true"
    # journal message level, 0 is debug
    KUBE_LOG_LEVEL="--v=0"
    # Should this cluster be allowed to run privileged docker containers
    KUBE_ALLOW_PRIV="--allow-privileged=false"
    # How the controller-manager, scheduler, and proxy find the apiserver
    KUBE_MASTER="--master=http://k8s-master:8080"

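Edit the configuration file:

vi /etc/kubernetes/kubelet

The contents of this file differ between k8s-node1 and k8s-node2, so take care to use the right value on each node.

[The --hostname-override value, highlighted in red in the original post, must be changed per node: k8s-node1 on the first worker, k8s-node2 on the second. The example below shows k8s-node1.]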

    ###
    # kubernetes kubelet (minion) config
    # The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
    KUBELET_ADDRESS="--address=0.0.0.0"
    # The port for the info server to serve on
    # KUBELET_PORT="--port=10250"
    # You may leave this blank to use the actual hostname
    KUBELET_HOSTNAME="--hostname-override=k8s-node1"
    # location of the api-server
    KUBELET_API_SERVER="--api-servers=http://k8s-master:8080"
    # pod infrastructure container
    KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
    # Add your own!
    KUBELET_ARGS=""
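Since the two worker nodes share this file except for the --hostname-override value, one option is to copy the same file to both nodes and patch that single value. The sketch below is illustrative only: set_hostname_override is a hypothetical helper, and it assumes the value is written inside double quotes as in the file above.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the --hostname-override value in a kubelet config file.
set_hostname_override() {
    file="$1"; node="$2"
    tmp="$(mktemp)" || return 1
    # Replace everything after "--hostname-override=" up to the closing quote.
    sed "s|--hostname-override=[^\"]*|--hostname-override=${node}|" "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Example on the second worker (commented out because it edits a system file):
# set_hostname_override /etc/kubernetes/kubelet k8s-node2
```

All other lines are passed through unchanged, so the rest of the kubelet settings stay identical on both nodes.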

2.3 Start the kubelet and kube-proxy services on both worker nodes and enable them at boot

systemctl start kubelet.service

systemctl start kube-proxy.service

systemctl enable kubelet.service

systemctl enable kube-proxy.service

3. Installing docker

Run the following commands on both worker nodes:

3.1 Install docker:

yum install -y docker

3.2 Start the docker service:

service docker start

3.3 Enable docker at boot:

chkconfig docker on

================================================================

************************* At this point, the k8s cluster setup is complete ************************

================================================================

III. Verify the cluster status

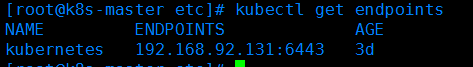

1. On the master node, list the endpoints

kubectl get endpoints

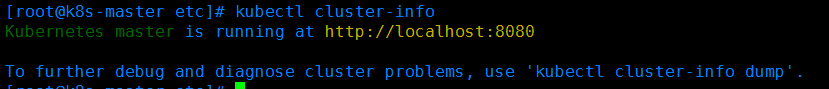

2. On the master node, show the cluster information

kubectl cluster-info

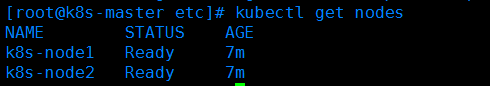

3. On the master node, list the nodes

kubectl get nodes

If both worker nodes are listed with status Ready, the k8s cluster is healthy.
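The node check can also be automated by reading the STATUS column of the kubectl get nodes output. This is a minimal sketch under assumptions: all_nodes_ready is a hypothetical helper, and it assumes the usual table layout with a header row first and STATUS as the second column.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get nodes" output on stdin and succeed only
# if at least one node is listed and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
    awk 'NR > 1 { total++; if ($2 != "Ready") bad++ }
         END { if (total == 0 || bad > 0) exit 1 }'
}

# Example (commented out because it needs a running cluster):
# kubectl get nodes | all_nodes_ready && echo "all nodes are Ready"
```

An empty node list counts as a failure, so the check does not pass when the workers have not registered with the master at all.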

===========================================================================