Cluster environment

Ambari 2.7.3

HDP (Hortonworks) 2.6.0.3

Maven dependencies

<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-jdbc</artifactId>
    <version>1.2.1000.2.6.0.3-8</version>
    <classifier>standalone</classifier>
</dependency>
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>1.1.2.2.6.0.3-8</version>
</dependency>

Code

package com.yingzi.com.dmh;

import java.io.IOException;
import java.net.URL;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Collections;
import java.util.Enumeration;

public class HiveJdbcClient {

    private static final String DRIVER_NAME = "org.apache.hive.jdbc.HiveDriver";

    // HiveServer2 is located through ZooKeeper service discovery, not a fixed host and port.
    private static final String JDBC_URL = "jdbc:hive2://hdfs03.yingzi.com:2181,hdfs04.yingzi.com:2181,hdfs05.yingzi.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2";

    public static void main(String[] args) throws SQLException {
        // Fail fast if the Hive JDBC driver is not on the classpath.
        try {
            Class.forName(DRIVER_NAME);
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
            System.exit(1);
        }

        // Debugging aid: print every non-JDK classpath entry that contains META-INF.
        ClassLoader classLoader = HiveJdbcClient.class.getClassLoader();
        // Start from an empty enumeration so a failed lookup cannot cause a NullPointerException.
        Enumeration<URL> paths = Collections.emptyEnumeration();
        try {
            paths = classLoader.getResources("META-INF");
        } catch (IOException e) {
            e.printStackTrace();
        }
        int count = 0;
        while (paths.hasMoreElements()) {
            String path = paths.nextElement().toString();
            if (!path.contains("jdk")) {
                count++;
                System.out.println(path);
            }
        }
        System.out.println(count);

        // try-with-resources closes the statement and connection even if a query fails.
        try (Connection con = DriverManager.getConnection(JDBC_URL);
             Statement stmt = con.createStatement()) {

            // Print the first database name as a connectivity check.
            try (ResultSet res = stmt.executeQuery("show databases")) {
                if (res.next()) {
                    System.out.println(res.getString(1));
                }
            }

            // Create a Hive table backed by the HBase table hbase_hive_table.
            String sql = "CREATE TABLE IF NOT EXISTS hbase_hive_table(key string, value string) \n"
                    + "STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' \n"
                    + "WITH SERDEPROPERTIES (\"hbase.columns.mapping\" = \":key,cf:json\") \n"
                    + "TBLPROPERTIES (\"hbase.table.name\" = \"hbase_hive_table\")";
            System.out.println(sql);
            stmt.execute(sql);
        }
    }
}

Running it fails with:

org.apache.hive.service.cli.HiveSQLException: java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/client/Connection

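Solution:

The NoClassDefFoundError is wrapped in a HiveSQLException, so it is raised on the HiveServer2 side: the server cannot load the HBase client classes when it runs the CREATE TABLE statement. The fix is therefore a Hive configuration change, not a change to the client's Maven dependencies.

Reference: https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.1/hbase-data-access/content/hdag_configuring_hbase_and_hive.html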

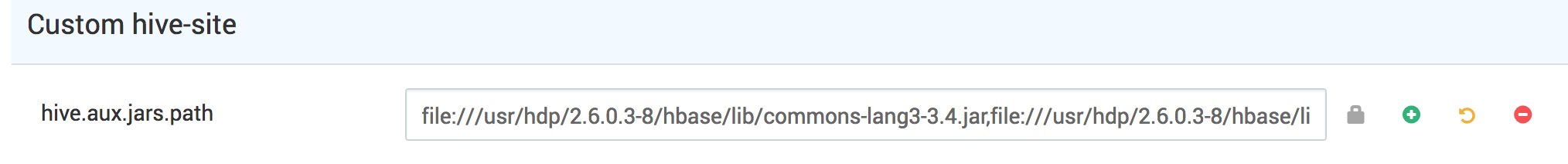

Ambari -> Hive -> Configs -> Advanced -> Custom hive-site -> Add Property
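
The property itself is only visible in the screenshot. The referenced Hortonworks page describes adding `hive.aux.jars.path` to hive-site, with the HBase jars reported by `hbase mapredcp` as its value. Assuming that is the property being added here, the sketch below shows one way to turn the colon-separated output of `hbase mapredcp` into the comma-separated list of `file://` URIs that can be pasted into Ambari. The class name `AuxJarsPath`, the method `toAuxJarsPath`, and the sample jar paths are hypothetical and only serve as illustration.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

class AuxJarsPath {

    // Converts a colon-separated classpath (as printed by `hbase mapredcp`)
    // into a comma-separated list of file:// URIs.
    static String toAuxJarsPath(String mapredcpOutput) {
        return Arrays.stream(mapredcpOutput.trim().split(":"))
                .map(String::trim)
                // Keep only jar entries; skip empty segments and directories.
                .filter(p -> p.endsWith(".jar"))
                // An absolute path such as /usr/... becomes file:///usr/...
                .map(p -> "file://" + p)
                .collect(Collectors.joining(","));
    }

    public static void main(String[] args) {
        // Hypothetical sample; on a real node, pass in the output of `hbase mapredcp`.
        String sample = "/usr/hdp/current/hbase-client/lib/hbase-client.jar:"
                + "/usr/hdp/current/hbase-client/lib/hbase-common.jar";
        System.out.println(toAuxJarsPath(sample));
    }
}
```

After the property is saved, Ambari normally prompts for a restart of the affected Hive services; the CREATE TABLE statement can then be run again to check that the error is gone.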