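1、When the NN goes down and failover does not happen, check the zkfc log first

Cause: the path configured in dfs.ha.fencing.ssh.private-key-files was wrong, so the SSH private key used for fencing could not be found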
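A quick way to catch this misconfiguration before a failover is to read the property out of hdfs-site.xml and check that every listed key file exists. The following is a minimal sketch, not part of the original notes: the helper names `fencing_key_paths` and `missing_keys` are hypothetical, and it assumes the property value is a comma-separated list of paths and that the script runs as the user that runs zkfc.

```python
import os
import sys
import xml.etree.ElementTree as ET

KEY_PROP = "dfs.ha.fencing.ssh.private-key-files"


def fencing_key_paths(hdfs_site_xml):
    """Return the key file paths configured in hdfs-site.xml (hypothetical helper)."""
    root = ET.fromstring(hdfs_site_xml)
    for prop in root.iter("property"):
        if prop.findtext("name", "").strip() == KEY_PROP:
            value = prop.findtext("value", "")
            # Assumption: multiple key files are separated by commas.
            return [p.strip() for p in value.split(",") if p.strip()]
    return []


def missing_keys(paths):
    """Return the configured paths that do not point at an existing file."""
    return [p for p in paths if not os.path.isfile(p)]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_fencing_key.py <path to hdfs-site.xml>
    with open(sys.argv[1], encoding="utf-8") as f:
        configured = fencing_key_paths(f.read())
    for path in missing_keys(configured):
        print("missing key file:", path)
```

An empty result from `missing_keys` only shows that the files exist; whether the key is actually accepted by the other NameNode still has to be tested with a manual ssh login.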

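2、dfs.namenode.shared.edits.dir lists the addresses where the JNs (JournalNodes) run. During deployment the JN on each of those servers must be started; otherwise the NN metadata cannot be copied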

3、zkfc is a ZooKeeper client responsible for the active/standby switch; a standby server is switched to active only when zkfc is running

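4、dfs.ha.fencing.methods defines how the failed NameNode is fenced off during failover (for example, sshfence logs in over SSH and kills the NameNode process), so that it can no longer act as active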

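5、Hadoop native library problem: recompile the native library and replace it (see the introduction to Hadoop native libraries from 上海尚学堂 (Shanghai Shangxuetang)):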

http://www.shsxt.com/it/Big-data/656.html

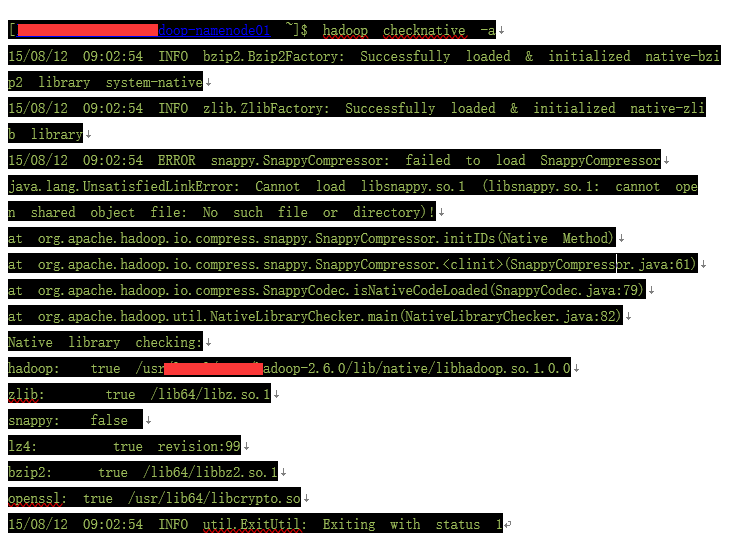

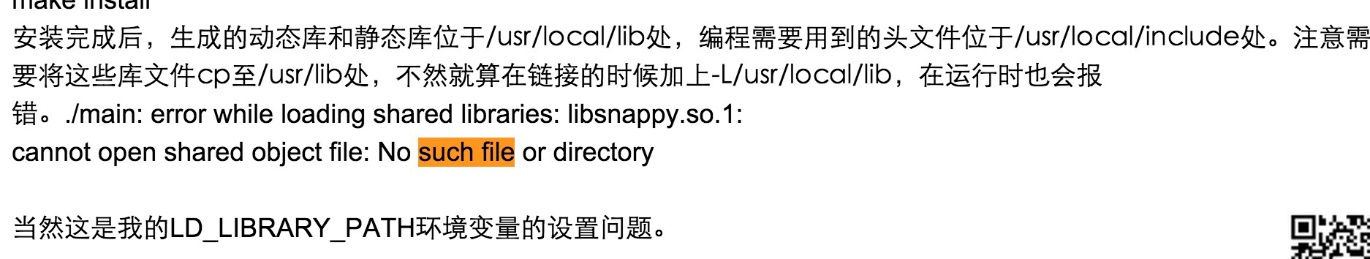

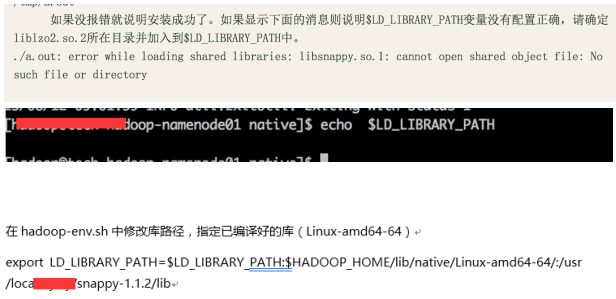

6、ERROR snappy.SnappyCompressor: failed to load SnappyCompressor

java.lang.UnsatisfiedLinkError: Cannot load libsnappy.so.1 (libsnappy.so.1: cannot open shared object file: No such file or directory)!

The environment variable needed to locate the library is not set

Solution:

http://www.cnblogs.com/smartvessel/archive/2011/01/21/1940868.html

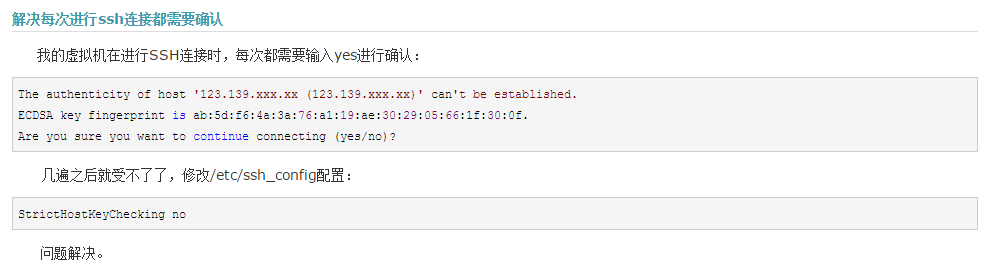

7、The first ssh connection to a node asks for confirmation (yes/no) before the nodes can communicate normally

Solution: connect to every node once

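8、When HDFS starts, it needs to create its hdfs directory under /data/ to record the version number; if it lacks the permission to create it, startup fails

Solution: fix the permissions or create the hdfs directory manually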
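The permission problem can be checked before starting HDFS. This is a minimal sketch under assumptions: `can_create_in` is a hypothetical helper, and the check is only meaningful when run as the same user that starts the HDFS daemons.

```python
import os


def can_create_in(parent):
    """True if `parent` is a directory in which the current user may create entries."""
    # Creating a child needs write and search (execute) permission on the parent.
    return os.path.isdir(parent) and os.access(parent, os.W_OK | os.X_OK)


if __name__ == "__main__":
    # /data/ is the parent directory named in the note above.
    print("/data/ usable:", can_create_in("/data/"))
```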

9、ssh blocking on the host key confirmation prompt: set the following option in the ssh client configuration

StrictHostKeyChecking no

http://www.cnblogs.com/yuxc/archive/2012/11/15/2772484.html

10、No Route to Host from xxxx-xxxxxop-namenode01.node.kddi.op.xxxx.com/xxx.xxx.11.1 to xxxx-hadoop-datanode05.node.xxxx.op.xxxx.com:8485 failed on socket timeout exception: java.net.NoRouteToHostException: No route to host; For more details see: http://wiki.apache.org/hadoop/NoRouteToHost
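The notes record no fix for this error. A reasonable first step is to confirm from the NameNode host whether the JournalNode port in the message (8485) is reachable at all, since this exception usually points at routing or firewall rules rather than at Hadoop itself. The sketch below uses only the standard library; `can_connect` is a hypothetical helper and the host name is a placeholder.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "no route to host", "connection refused", timeouts and DNS failures.
        return False


if __name__ == "__main__":
    # Placeholder host name; substitute the JournalNode host from the error message.
    print(can_connect("journalnode.example.invalid", 8485))
```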

11、mysql.sock cannot be found: how to fix a missing mysql.sock

12、[ERROR] Terminal initialization failed; falling back to unsupported

java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

Solution: replace the jline.jar in Hadoop's lib directory with the jline.jar from Hive's lib directory

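13、After migrating Hive tables, select * from xxxx fails (the table cannot be queried) with:

FAILED: SemanticException Unable to determine if hdfs://hadoop2service/hive/warehouse/pv_tmp is encrypted: java.lang.IllegalArgumentException: Wrong FS: hdfs://hadoop2service/hive/warehouse/pv_tmp, expected: hdfs://hadoop2kddi

Solution: in the backup SQL, replace every occurrence of the old cluster name with the new cluster name, then restore the backup into the database that stores the new Hive metadata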
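The replacement step can be scripted instead of done by hand in an editor. The sketch below is one possible approach, not the procedure actually used in these notes: `rewrite_nameservice` is a hypothetical helper, and it deliberately rewrites only `hdfs://<old name>` URIs so that unrelated text containing the same string is left alone. The two cluster names are taken from the error message above.

```python
import re


def rewrite_nameservice(sql_text, old, new):
    """Replace hdfs://<old> with hdfs://<new> in a metastore SQL dump."""
    # The negative lookahead keeps longer names such as "<old>2" untouched.
    pattern = re.compile(r"hdfs://" + re.escape(old) + r"(?![\w.-])")
    return pattern.sub(lambda m: "hdfs://" + new, sql_text)


if __name__ == "__main__":
    line = "INSERT INTO SDS VALUES ('hdfs://hadoop2service/hive/warehouse/pv_tmp');"
    print(rewrite_nameservice(line, "hadoop2service", "hadoop2kddi"))
```

Keeping the original dump and writing the result to a new file makes it possible to diff the two before restoring.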

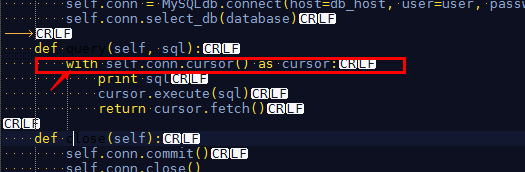

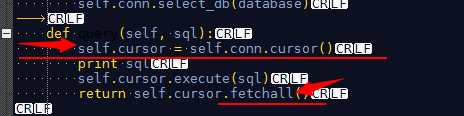

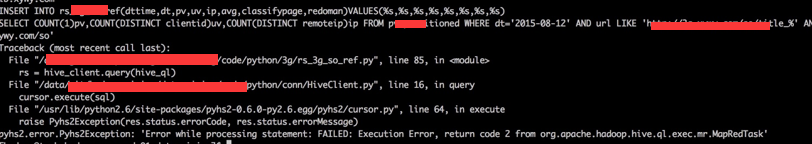

14、

Cause: a syntax error; this is a Python error

It should not be written this way; change it to

15、SELECT clientid,url,COUNT(1)pv FROM pv_tmp GROUP BY clientid,url HAVING pv>50

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.ppd.ExprWalkerInfo.getConvertedNode(Lorg/apache/hadoop/hive/ql/lib/Node;)Lorg/apache/hadoop/hive/ql/plan/ExprNodeDesc;

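Resolution:

Debug command: hive -hiveconf hive.root.logger=DEBUG,console

Cause: an incompatibility between Hadoop 2.6.0 and Hive 1.1.0 or later

Solution: roll Hive back to 1.0.1, after which it works normally

Note: when migrating, first make sure the new installation is sound and usable, and only then migrate the data. If both steps are done in one go and something fails, you cannot tell whether it is a compatibility problem or an operational mistake.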

16、Using beeline (a client that connects to Hive through HiveServer)

Advantage: query results are displayed in a complete table format

beeline

!connect jdbc:hive2://tech-hadoop-namenode01.node.xxxx.op.xxxx.com:10000 hadoop RgWrXlKN9j3VkYQO org.apache.hive.jdbc.HiveDriver

17、Syntax issue when switching from MySQL 5.1 to MySQL 5.5

SQL executed without a parameter list uses query; SQL with values uses execute

18、Hive data skew

One solution: use distribute by to make the map output key a hashed column
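Why this helps can be seen in a toy model of how rows are assigned to reducers. The sketch below is not Hive code and does not reproduce Hive's real hash function: it assumes a reducer is chosen as hash(key) modulo the number of reducers, uses CRC32 as a stand-in hash, and uses the row index as a stand-in for a per-row salt. All names and the sample data are made up for illustration.

```python
import zlib
from collections import Counter


def reducer_loads(rows, num_reducers, salted=False):
    """Count how many rows land on each reducer in the toy model."""
    loads = Counter()
    for i, key in enumerate(rows):
        h = zlib.crc32(key.encode("utf-8"))
        if salted:
            # Mixing a salt into the distribution key spreads one hot key
            # over several reducers instead of a single one.
            h += i % num_reducers
        loads[h % num_reducers] += 1
    return [loads[r] for r in range(num_reducers)]


# 900 rows share one hot key; the remaining 100 rows have distinct keys.
rows = ["hot_client"] * 900 + ["client_%d" % i for i in range(100)]
plain = reducer_loads(rows, 4)
salted = reducer_loads(rows, 4, salted=True)
print("by key only:", plain)
print("with salt:  ", salted)
```

With the key alone, all 900 hot rows land on one reducer; with the salt they are split evenly across the four. The trade-off is that rows for one key no longer meet in a single reducer, so aggregations usually need a second stage to merge the partial results.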

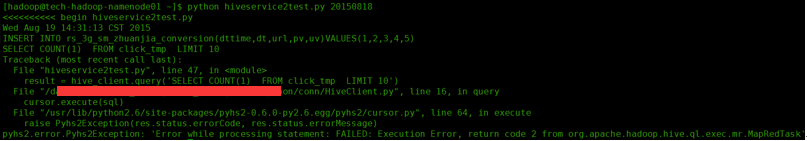

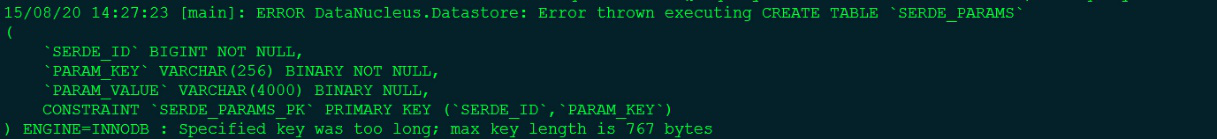

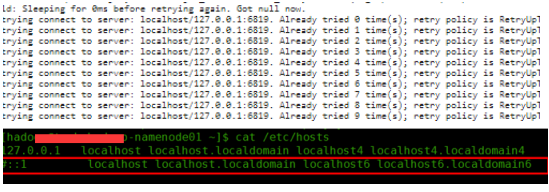

21、

Solution:

① Check the logs: Jobs history > Map kill logs > full logs, which show the following error:

Log Type: syslog

Log Upload Time: 25-Aug-2015 08:50:15

Log Length: 5503

2015-08-25 08:49:32,935 INFO [main] org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2015-08-25 08:49:33,039 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2015-08-25 08:49:33,039 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system started

2015-08-25 08:49:33,055 INFO [main] org.apache.hadoop.mapred.YarnChild: Executing with tokens:

2015-08-25 08:49:33,055 INFO [main] org.apache.hadoop.mapred.YarnChild: Kind: mapreduce.job, Service: job_1440463117446_0001, Ident: (org.apache.hadoop.mapreduce.security.token.JobTokenIdentifier@21683789)

2015-08-25 08:49:33,214 INFO [main] org.apache.hadoop.mapred.YarnChild: Sleeping for 0ms before retrying again. Got null now.

2015-08-25 08:49:34,279 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:35,280 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:36,281 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:37,282 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 3 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:38,283 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 4 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:39,283 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 5 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:40,284 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 6 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:41,285 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 7 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:42,285 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:43,286 INFO [main] org.apache.hadoop.ipc.Client: Retrying connect to server: localhost/127.0.0.1:6819. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

2015-08-25 08:49:43,289 WARN [main] org.apache.hadoop.mapred.YarnChild: Exception running child : java.net.ConnectException: Call From xxxx-xxxoop-datanode06.node.xxxx.op.xxxx.com/xxx.xxx.12.6 to localhost:6819 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)
at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)
at org.apache.hadoop.ipc.Client.call(Client.java:1472)
at org.apache.hadoop.ipc.Client.call(Client.java:1399)
at org.apache.hadoop.ipc.WritableRpcEngine$Invoker.invoke(WritableRpcEngine.java:244)
at com.sun.proxy.$Proxy9.getTask(Unknown Source)
at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:132)
Caused by: java.net.ConnectException: Connection refused
at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739)
at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)
at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)
at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:607)
at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:705)
at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:368)
at org.apache.hadoop.ipc.Client.getConnection(Client.java:1521)
at org.apache.hadoop.ipc.Client.call(Client.java:1438)
... 4 more

2015-08-25 08:49:43,290 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping MapTask metrics system...

2015-08-25 08:49:43,291 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system stopped.

2015-08-25 08:49:43,291 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system shutdown complete.

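② The error keeps retrying against localhost, so the connection must be failing at localhost, most likely because of the IPv6 localhost binding. Commenting out the IPv6 localhost mapping in the hosts file solved the problem completely!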
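To find the lines in question on every node without reading each hosts file by hand, something like the following can be used. It is a minimal sketch: `ipv6_localhost_entries` is a hypothetical helper, and it assumes the usual hosts format of an address followed by one or more names, with `#` starting a comment.

```python
import ipaddress


def ipv6_localhost_entries(hosts_text):
    """Return (line number, line) pairs that map an IPv6 address to "localhost"."""
    hits = []
    for lineno, line in enumerate(hosts_text.splitlines(), start=1):
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if len(fields) < 2:
            continue
        try:
            addr = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # first field is not an IP address
        if addr.version == 6 and "localhost" in fields[1:]:
            hits.append((lineno, line.strip()))
    return hits


if __name__ == "__main__":
    with open("/etc/hosts", encoding="utf-8") as f:
        for lineno, line in ipv6_localhost_entries(f.read()):
            print("line %d: %s" % (lineno, line))
```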

Note: always, always read the logs!