Motivation

Existing works usually reconstruct the data points directly in the original view and learn an individual subspace representation for each view. However, a single view alone is usually not sufficient to describe the data points, so reconstructing them from one view only is risky. Moreover, the collected data may be noisy, which makes clustering even harder. To address these issues, this paper introduces a latent representation that explores the relationships among data points and handles possible noise.

Contribution

This work proposes a novel Latent Multi-view Subspace Clustering (LMSC) method, which clusters data points using a latent representation and simultaneously explores the underlying complementary information from multiple views.

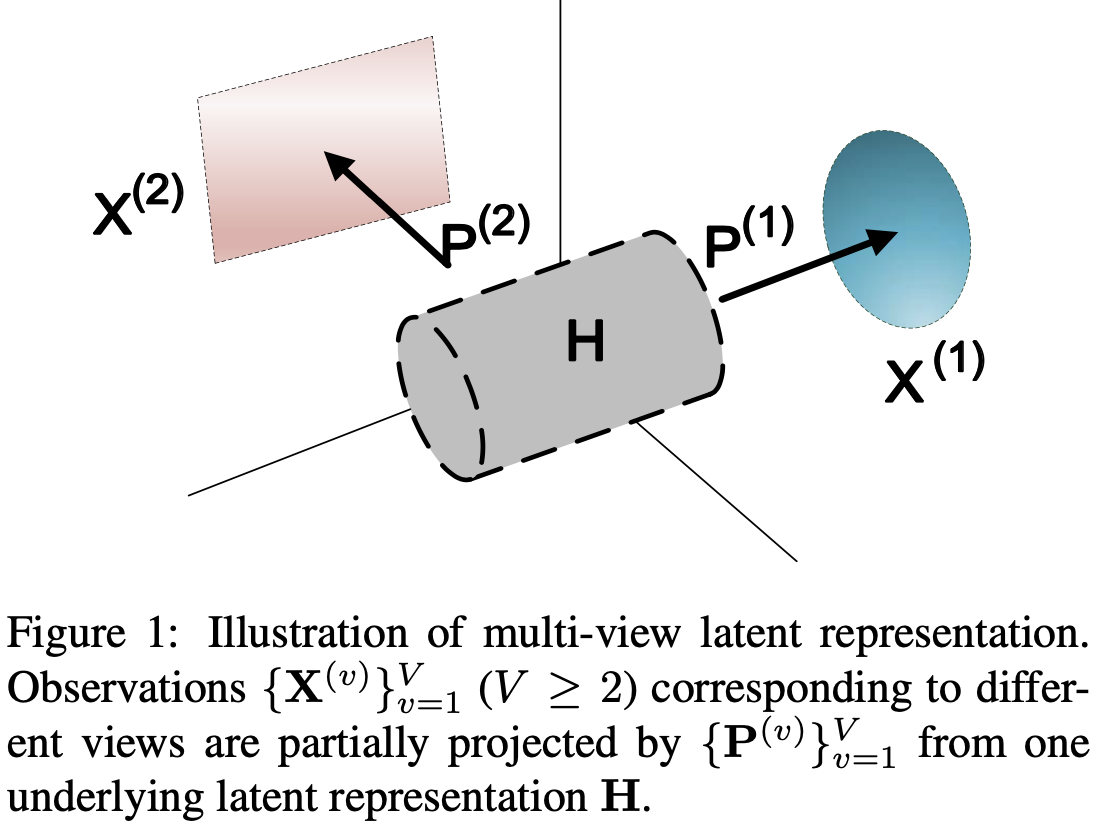

Assumption

The proposed method assumes that the observations from all views originate from one shared underlying latent representation.
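To illustrate this assumption, the sketch below generates toy multi-view data in which every view is a noisy linear map of one shared latent matrix H, i.e. X^(v) = P^(v) H + noise, with columns as data points. The linear maps, the union-of-subspaces structure of H, the Gaussian noise and all parameter values are assumptions made for the example; `make_multiview_data` is a hypothetical helper, not code from the paper.

```python
import numpy as np


def make_multiview_data(n_clusters=3, points_per_cluster=20, latent_dim=6,
                        subspace_dim=2, view_dims=(20, 30), noise_std=0.05,
                        seed=0):
    """Toy views X^(v) = P^(v) H + noise that all share one latent H."""
    rng = np.random.default_rng(seed)
    blocks, labels = [], []
    for c in range(n_clusters):
        # Points of cluster c lie in a random low-dimensional latent subspace.
        basis = rng.standard_normal((latent_dim, subspace_dim))
        coeffs = rng.standard_normal((subspace_dim, points_per_cluster))
        blocks.append(basis @ coeffs)
        labels += [c] * points_per_cluster
    H = np.hstack(blocks)  # latent_dim x n, one column per data point
    views = []
    for d_v in view_dims:
        P_v = rng.standard_normal((d_v, latent_dim))  # view-specific model
        noise = noise_std * rng.standard_normal((d_v, H.shape[1]))
        views.append(P_v @ H + noise)
    return H, views, np.array(labels)


H, views, labels = make_multiview_data()
print(H.shape, [X_v.shape for X_v in views])  # (6, 60) [(20, 60), (30, 60)]
```

Because every view is generated from the same H, a method that estimates H jointly can pool evidence from all views, whereas a per-view model only sees one noisy projection.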

Algorithm

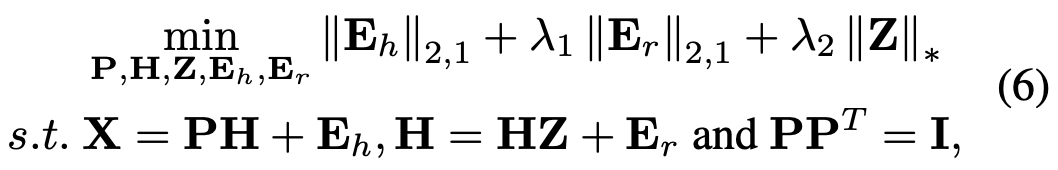

Objective function:

The first term ensures that the learned latent representation H and the reconstruction models P^(v) associated with the different views reconstruct the observations well, while the second term penalizes the reconstruction error in the latent multi-view subspaces. The last term prevents the trivial solution (such as Z = I, where every point is reconstructed only by itself) by enforcing the subspace representation Z to be low-rank.
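To make the three terms concrete, the sketch below evaluates an objective of the form ||X - PH||_{2,1} + lam1 * ||H - HZ||_{2,1} + lam2 * ||Z||_*, where X and P stack all views X^(v) and models P^(v) row-wise and columns are data points. The l2,1 losses, the nuclear norm as the low-rank penalty and the weights `lam1`, `lam2` are assumptions chosen for illustration; the exact formulation is the one given in the paper. `l21_norm` and `lmsc_objective` are hypothetical helper names.

```python
import numpy as np


def l21_norm(M):
    """l2,1 norm: sum of the Euclidean norms of the columns of M."""
    return np.linalg.norm(M, axis=0).sum()


def lmsc_objective(views, models, H, Z, lam1=1.0, lam2=1.0):
    """Evaluate the assumed three-term objective.

    views:  list of d_v x n matrices X^(v) (columns are data points)
    models: list of d_v x k matrices P^(v)
    H:      k x n latent representation
    Z:      n x n subspace representation
    """
    X = np.vstack(views)    # stack all views along the feature axis
    P = np.vstack(models)   # stack the matching reconstruction models
    obs_term = l21_norm(X - P @ H)            # reconstruct observations from H
    latent_term = l21_norm(H - H @ Z)         # self-expression in latent space
    rank_term = np.linalg.norm(Z, ord="nuc")  # nuclear norm (convex rank proxy)
    return obs_term + lam1 * latent_term + lam2 * rank_term


rng = np.random.default_rng(0)
H = rng.standard_normal((3, 4))
models = [rng.standard_normal((5, 3)), rng.standard_normal((6, 3))]
views = [P_v @ H for P_v in models]

# Z = I reconstructs H exactly, so only the nuclear-norm term (= 4) remains.
print(lmsc_objective(views, models, H, np.eye(4), lam2=0.5))  # ≈ 2.0
```

With Z = I the latent reconstruction error vanishes, but the nuclear norm of the n x n identity is n, which is exactly the kind of trivial solution the low-rank term is meant to discourage.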

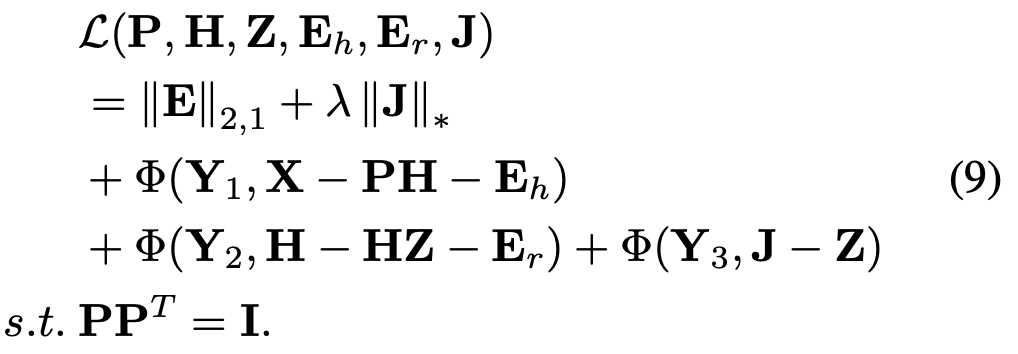

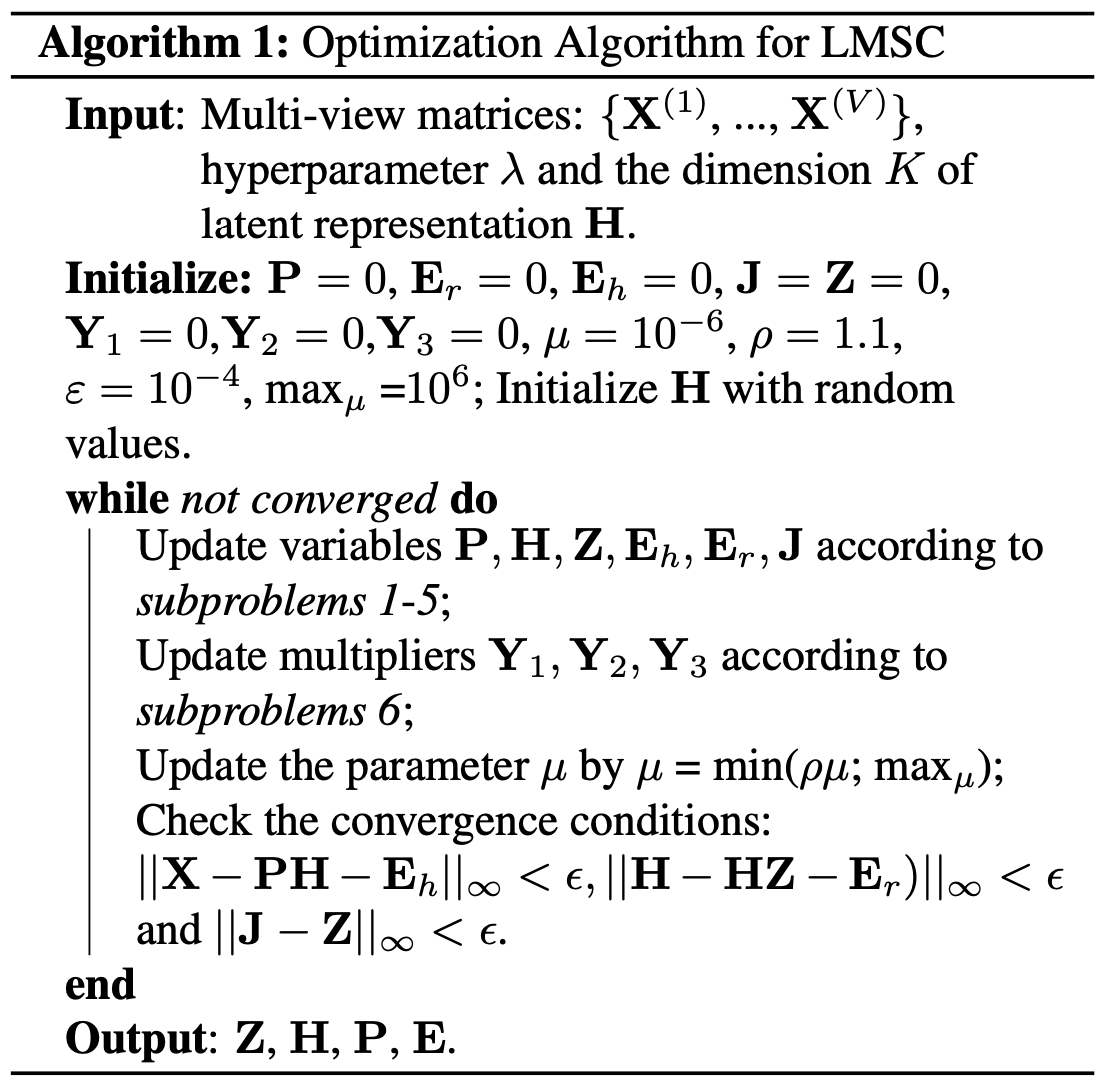

The objective function above is solved by minimizing its Augmented Lagrange Multiplier (ALM) formulation.
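For reference, one way to write such an augmented Lagrangian is sketched below. It assumes the constraints X = PH + E_h and H = HZ + E_r (with X and P stacking all views), l2,1-norm error terms weighted by lambda_1, a nuclear-norm weight lambda_2, and an auxiliary variable J = Z that separates the nuclear norm from the coupling term HZ. The multipliers Y_1, Y_2, Y_3, the penalty mu > 0 and these modeling choices are illustrative assumptions, not a transcription of the paper's equation.

```latex
\mathcal{L}(P, H, Z, J, E_h, E_r)
  = \|E_h\|_{2,1} + \lambda_1 \|E_r\|_{2,1} + \lambda_2 \|J\|_*
  + \langle Y_1,\, X - PH - E_h \rangle
  + \langle Y_2,\, H - HZ - E_r \rangle
  + \langle Y_3,\, J - Z \rangle
  + \frac{\mu}{2}\Big( \|X - PH - E_h\|_F^2 + \|H - HZ - E_r\|_F^2 + \|J - Z\|_F^2 \Big)
```

The usual ALM loop minimizes over one block of variables at a time with the others fixed, then takes a gradient-ascent step on each multiplier (e.g. Y_1 <- Y_1 + mu (X - PH - E_h)) and increases mu.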

This problem is split into five subproblems, which are optimized with the Alternating Direction Minimization strategy.
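Two standard building blocks typically appear in subproblems of this kind: a nuclear-norm step (for a low-rank variable such as Z or an auxiliary copy of it) is solved by singular value thresholding, and an l2,1-norm error step is solved by column-wise shrinkage. The sketch below implements both closed forms; whether and where the paper's five subproblems use exactly these operators is an assumption here, and `svt` and `l21_shrink` are hypothetical helper names.

```python
import numpy as np


def svt(A, tau):
    """Singular value thresholding.

    Returns argmin_J  tau * ||J||_* + 0.5 * ||J - A||_F^2.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)  # soft-threshold the singular values
    return (U * s_shrunk) @ Vt           # scale columns of U, then recombine


def l21_shrink(A, tau):
    """Column-wise shrinkage.

    Returns argmin_E  tau * ||E||_{2,1} + 0.5 * ||E - A||_F^2,
    i.e. each column a_j is scaled by max(1 - tau / ||a_j||, 0).
    """
    norms = np.linalg.norm(A, axis=0)
    scale = np.maximum(1.0 - tau / np.maximum(norms, 1e-12), 0.0)  # avoid /0
    return A * scale  # broadcast one factor per column


A = np.array([[3.0, 0.1],
              [4.0, 0.0]])
print(l21_shrink(A, 1.0))  # first column scaled by 0.8, second column zeroed
print(svt(np.diag([3.0, 1.0]), 1.0))  # about diag(2, 0): rank drops to 1
```

In ALM schemes the threshold `tau` is usually the norm's weight divided by the current penalty mu, so the shrinkage becomes milder as mu grows.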

Experiment results and conclusions

Data sets: MSRCV1, Scene-15, ORL, LandUse-21, Still DB, BBCSport.

- By exploiting multiple views, the proposed method achieves considerably better results than using a single view alone.

- Overall, the proposed approach outperforms all the baselines by a large margin.

Paper information

Zhang C, Hu Q, Fu H, et al. Latent Multi-view Subspace Clustering[C]// 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2017.