References:

- GitHub overview: https://github.com/kubernetes/dns

- GitHub YAML files: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns

- DNS for Services and Pods: https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/

- How kube-dns works (1): https://www.kubernetes.org.cn/3347.html

- How kube-dns works (2): https://segmentfault.com/a/1190000007342180

- GitHub example: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns

- Configure stub domain and upstream DNS servers: https://kubernetes.io/docs/tasks/administer-cluster/dns-custom-nameservers/

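Kube-DNS resolves Service names to ClusterIPs across the whole cluster, so that Services can be reached by name. This provides the basic function of a service discovery mechanism.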

I. Environment

1. Base environment

| Component | Version | Remark |
| --- | --- | --- |
| kubernetes | v1.9.2 | |
| KubeDNS | v1.14.8 | Same service discovery mechanism as SkyDNS |

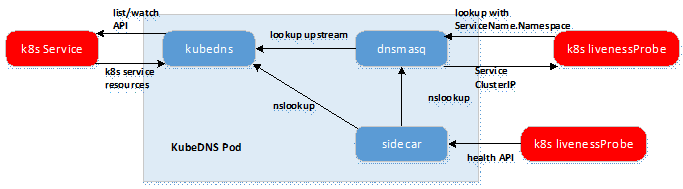

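2. How it works

- Kube-DNS is deployed into the Kubernetes cluster as a Pod;

- Kube-DNS wraps and optimizes SkyDNS, reducing the number of containers from 4 to 3;

- kubedns container: built on skydns; watches k8s Service resources and updates DNS records; replaces etcd with a TreeCache data structure that stores the DNS records and implements the SkyDNS Backend interface; plugs into SkyDNS and answers DNS queries coming from dnsmasq;

- dnsmasq container: provides the DNS query service to the cluster, i.e. a lightweight DNS server; uses kubedns as its upstream; caches DNS answers, which reduces the load on kubedns and improves performance;

- sidecar container: the health monitoring module, which also exposes metrics; periodically checks the health of kubedns and dnsmasq; provides the HTTP API used by k8s liveness probes.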
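To make the TreeCache idea more concrete, here is a minimal Python sketch of a tree keyed by reversed domain labels. It is only an illustration of the data structure described above, not the actual kube-dns implementation; the class and method names and the example IP `169.169.0.1` are hypothetical.

```python
from typing import Dict, List, Optional


class TreeCache:
    """Toy DNS record tree keyed by reversed domain labels (illustration only)."""

    def __init__(self) -> None:
        self.children: Dict[str, "TreeCache"] = {}
        self.records: List[str] = []

    @staticmethod
    def _path(name: str) -> List[str]:
        # "a.b.cluster.local." -> ["local", "cluster", "b", "a"]
        return [label for label in reversed(name.lower().split(".")) if label]

    def set_record(self, name: str, ip: str) -> None:
        # Walk down the tree, creating intermediate nodes as needed.
        node = self
        for label in self._path(name):
            node = node.children.setdefault(label, TreeCache())
        if ip not in node.records:
            node.records.append(ip)

    def get_records(self, name: str) -> List[str]:
        # Return a copy of the records stored at the node, or [] if absent.
        node: Optional["TreeCache"] = self
        for label in self._path(name):
            node = node.children.get(label)
            if node is None:
                return []
        return list(node.records)


cache = TreeCache()
# Hypothetical Service address, used only for the demo.
cache.set_record("kubernetes.default.svc.cluster.local.", "169.169.0.1")
print(cache.get_records("kubernetes.default.svc.cluster.local"))
print(cache.get_records("missing.default.svc.cluster.local."))
```

Keying by reversed labels groups all names of one domain under a shared subtree, which is why a tree suits records that are added and removed as Services change.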

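II. Deploying Kube-DNS

Kubernetes supports running kube-dns as a Cluster Add-On. Kubernetes schedules a DNS Pod and a DNS Service in the cluster.

1. Prepare the images

To avoid image pull timeouts when Kubernetes deploys the Pod, pull the required images to all relevant nodes in advance (as done in this lab), or set up a local image registry.

- The base environment already uses a registry mirror for faster pulls; see: http://www.cnblogs.com/netonline/p/7420188.html

- The images that would otherwise be pulled from gcr.io have been built in the author's personal Docker Hub account with Docker Hub's "Create Auto-Build GitHub" feature (Docker Hub builds the image from a Dockerfile on GitHub), so they can be pulled directly.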

    # Base pause image that shares namespaces within a Pod;
    # the pause image is already specified in the kubelet startup parameters, so retag it after pulling
    [root@kubenode1 ~]# docker pull netonline/pause-amd64:3.0
    [root@kubenode1 ~]# docker tag netonline/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0
    [root@kubenode1 ~]# docker images

    # kubedns
    [root@kubenode1 ~]# docker pull netonline/k8s-dns-kube-dns-amd64:1.14.8
    # dnsmasq-nanny
    [root@kubenode1 ~]# docker pull netonline/k8s-dns-dnsmasq-nanny-amd64:1.14.8
    # sidecar
    [root@kubenode1 ~]# docker pull netonline/k8s-dns-sidecar-amd64:1.14.8

2. Download the kube-dns template

    # https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns
    [root@kubenode1 ~]# mkdir -p /usr/local/src/yaml/kubedns
    [root@kubenode1 ~]# cd /usr/local/src/yaml/kubedns
    [root@kubenode1 kubedns]# wget -O kube-dns.yaml https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/kube-dns/kube-dns.yaml.base

3. Configure the kube-dns Service

    # kube-dns.yaml defines 4 resources in a single file: Service, ServiceAccount, ConfigMap and Deployment.
    # The following sections modify each of them in turn; the modified parts are marked in bold red;
    # Writing Pod YAML files is not covered here; see other references such as 《Kubernetes权威指南》 (Kubernetes: The Definitive Guide);
    # The modified kube-dns.yaml: https://github.com/Netonline2016/kubernetes/blob/master/addons/kubedns/kube-dns.yaml
    # clusterIP must match the kubelet startup parameter --cluster-dns; pick one address from the service CIDR as the DNS address
    [root@kubenode1 kubedns]# vim kube-dns.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "KubeDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 169.169.0.11
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP

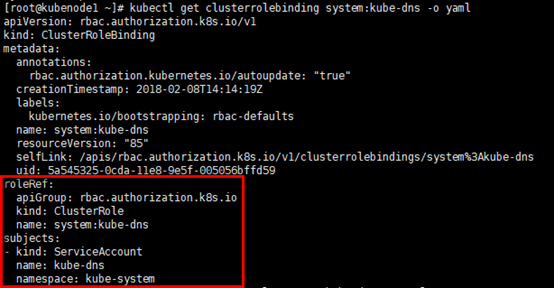

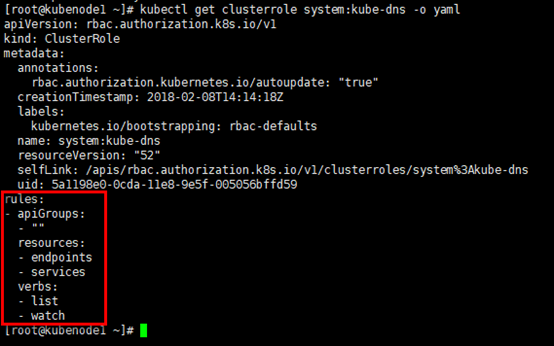
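The two constraints on `clusterIP` (inside the service CIDR, and equal to the kubelet `--cluster-dns` value) can be sanity-checked before applying the file. The following is a minimal Python sketch; the service CIDR `169.169.0.0/16` is an assumption made for illustration (the article does not state it), and `dns_ip_ok` is a hypothetical helper name.

```python
import ipaddress


def dns_ip_ok(cluster_ip: str, service_cidr: str, kubelet_cluster_dns: str) -> bool:
    """Check that the DNS clusterIP is inside the service CIDR
    and equals the address passed to kubelet via --cluster-dns."""
    ip = ipaddress.ip_address(cluster_ip)
    network = ipaddress.ip_network(service_cidr)
    return ip in network and ip == ipaddress.ip_address(kubelet_cluster_dns)


# 169.169.0.11 comes from the Service above; the CIDR is an assumed value.
print(dns_ip_ok("169.169.0.11", "169.169.0.0/16", "169.169.0.11"))
```

Substitute the real service CIDR of the cluster (the kube-apiserver `--service-cluster-ip-range` value) when using a check like this.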

4. Configure the kube-dns ServiceAccount

    # The kube-dns ServiceAccount needs no changes. The predefined ClusterRoleBinding system:kube-dns already binds
    # the ServiceAccount kube-dns in the kube-system namespace (where system services are usually deployed)
    # to the predefined ClusterRole system:kube-dns, and that ClusterRole grants the kube-apiserver API access that DNS needs.
    # For RBAC authorization see: https://blog.frognew.com/2017/04/kubernetes-1.6-rbac.html
    [root@kubenode1 ~]# kubectl get clusterrolebinding system:kube-dns -o yaml

    [root@kubenode1 ~]# kubectl get clusterrole system:kube-dns -o yaml

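5. Configure the kube-dns ConfigMap

Typical uses of a ConfigMap are:

- populating environment variables inside a container;

- setting the arguments of the container's startup command (the values must first be exposed as environment variables);

- mounting it as a volume, so that it appears as files or a directory inside the container.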
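As a rough illustration of the first and third usage, the Python sketch below reads a setting from an environment variable and falls back to a file under a mounted ConfigMap directory (when a ConfigMap is mounted as a volume, each key appears as a file named after the key). The helper name `read_setting` is hypothetical, and a temporary directory stands in for the mount point.

```python
import os
import tempfile
from pathlib import Path
from typing import Optional


def read_setting(key: str, mount_dir: str) -> Optional[str]:
    """Return a setting from the environment, else from a mounted ConfigMap file."""
    value = os.environ.get(key)
    if value is not None:
        return value
    path = Path(mount_dir) / key
    if path.is_file():
        return path.read_text().strip()
    return None


# Demo: a temporary directory simulates the ConfigMap volume.
with tempfile.TemporaryDirectory() as mount_dir:
    (Path(mount_dir) / "upstreamNameservers").write_text(
        '["114.114.114.114", "223.5.5.5"]\n'
    )
    print(read_setting("upstreamNameservers", mount_dir))
```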

No changes are needed just to verify that kube-dns works. To define custom DNS and upstream DNS servers, modify the ConfigMap as described in Section IV.

6. Configure the kube-dns Deployment

    # Lines 97, 148 and 187: the images of the three containers;
    # Lines 127, 168, 200 and 201: the domain, which must match the kubelet startup parameter "--cluster-domain"; note the trailing "." in "cluster.local."
    [root@kubenode1 kubedns]# vim kube-dns.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        rollingUpdate:
          maxSurge: 10%
          maxUnavailable: 0
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          volumes:
          - name: kube-dns-config
            configMap:
              name: kube-dns
              optional: true
          containers:
          - name: kubedns
            image: netonline/k8s-dns-kube-dns-amd64:1.14.8
            resources:
              # TODO: Set memory limits when we've profiled the container for large
              # clusters, then set request = limit to keep this container in
              # guaranteed class. Currently, this container falls into the
              # "burstable" category so the kubelet doesn't backoff from restarting it.
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            livenessProbe:
              httpGet:
                path: /healthcheck/kubedns
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /readiness
                port: 8081
                scheme: HTTP
              # we poll on pod startup for the Kubernetes master service and
              # only setup the /readiness HTTP server once that's available.
              initialDelaySeconds: 3
              timeoutSeconds: 5
            args:
            - --domain=cluster.local.
            - --dns-port=10053
            - --config-dir=/kube-dns-config
            - --v=2
            env:
            - name: PROMETHEUS_PORT
              value: "10055"
            ports:
            - containerPort: 10053
              name: dns-local
              protocol: UDP
            - containerPort: 10053
              name: dns-tcp-local
              protocol: TCP
            - containerPort: 10055
              name: metrics
              protocol: TCP
            volumeMounts:
            - name: kube-dns-config
              mountPath: /kube-dns-config
          - name: dnsmasq
            image: netonline/k8s-dns-dnsmasq-nanny-amd64:1.14.8
            livenessProbe:
              httpGet:
                path: /healthcheck/dnsmasq
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - -v=2
            - -logtostderr
            - -configDir=/etc/k8s/dns/dnsmasq-nanny
            - -restartDnsmasq=true
            - --
            - -k
            - --cache-size=1000
            - --no-negcache
            - --log-facility=-
            - --server=/cluster.local./127.0.0.1#10053
            - --server=/in-addr.arpa/127.0.0.1#10053
            - --server=/ip6.arpa/127.0.0.1#10053
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
            resources:
              requests:
                cpu: 150m
                memory: 20Mi
            volumeMounts:
            - name: kube-dns-config
              mountPath: /etc/k8s/dns/dnsmasq-nanny
          - name: sidecar
            image: netonline/k8s-dns-sidecar-amd64:1.14.8
            livenessProbe:
              httpGet:
                path: /metrics
                port: 10054
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            args:
            - --v=2
            - --logtostderr
            - --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local.,5,SRV
            - --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local.,5,SRV
            ports:
            - containerPort: 10054
              name: metrics
              protocol: TCP
            resources:
              requests:
                memory: 20Mi
                cpu: 10m
          dnsPolicy: Default  # Don't use cluster DNS.
          serviceAccountName: kube-dns

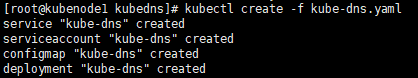

7. Start kube-dns

    [root@kubenode1 ~]# cd /usr/local/src/yaml/kubedns/
    [root@kubenode1 kubedns]# kubectl create -f kube-dns.yaml

III. Verifying Kube-DNS

1. kube-dns Deployment, Service and Pod

    # All 3 containers of the kube-dns Pod are "Ready"; the Service, Deployment, etc. have also started normally
    [root@kubenode1 kubedns]# kubectl get pod -n kube-system -o wide
    [root@kubenode1 kubedns]# kubectl get service -n kube-system -o wide
    [root@kubenode1 kubedns]# kubectl get deployment -n kube-system -o wide

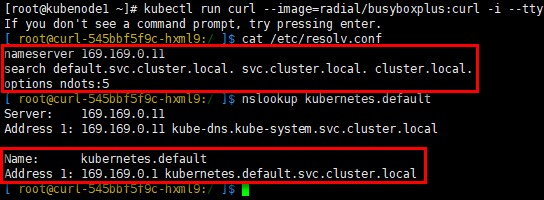

2. kube-dns queries

    # Pull the test image
    [root@kubenode1 ~]# docker pull radial/busyboxplus:curl
    # Start a test Pod and enter its container
    [root@kubenode1 ~]# kubectl run curl --image=radial/busyboxplus:curl -i --tty
    # Inside the Pod container, check /etc/resolv.conf: the DNS settings have been written to the file;
    # nslookup can resolve the IP of a Service in the Kubernetes cluster
    [ root@curl-545bbf5f9c-hxml9:/ ]$ cat /etc/resolv.conf
    [ root@curl-545bbf5f9c-hxml9:/ ]$ nslookup kubernetes.default

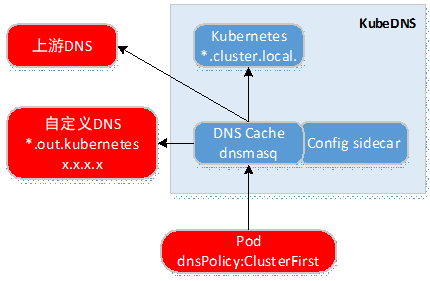
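The short name `kubernetes.default` resolves because the Pod's /etc/resolv.conf carries a search list. The Python sketch below shows roughly how a resolver might expand a short name into candidate FQDNs. It assumes a typical search list and `ndots:5` for a Pod in the `default` namespace (the actual file contents are only visible in the screenshot), follows glibc-style rules that other resolvers may not share exactly, and uses the hypothetical function name `candidates`.

```python
from typing import List


def candidates(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Return the FQDNs a resolver would try for `name`, in order."""
    if name.endswith("."):
        # A trailing dot marks the name as absolute: no search list is applied.
        return [name]
    absolute = name + "."
    expanded = [f"{name}.{domain.rstrip('.')}." for domain in search]
    if name.count(".") >= ndots:
        # Enough dots: try the name as given first, then the search list.
        return [absolute] + expanded
    return expanded + [absolute]


# Assumed search list for a Pod in the "default" namespace.
search = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
for fqdn in candidates("kubernetes.default", search):
    print(fqdn)
```

Under these assumptions the second candidate, `kubernetes.default.svc.cluster.local.`, is the one that matches the Service record.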

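IV. Custom DNS and upstream DNS servers

Starting with Kubernetes v1.6, users can configure private DNS zones in the cluster (usually called stub domains) as well as external upstream nameservers.

1. How it works

- Two Pod DNS policies matter here: Default and ClusterFirst; dnsPolicy defaults to ClusterFirst. With dnsPolicy set to Default, the name resolution configuration is inherited entirely from the node the Pod runs on (/etc/resolv.conf);

- With dnsPolicy set to ClusterFirst, DNS queries are first sent to the DNS caching layer of kube-dns;

- The caching layer inspects the domain suffix and, depending on it, forwards the query to the cluster's own DNS server, to a custom stub domain, or to the upstream nameservers.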
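The suffix-based routing described above can be sketched in a few lines of Python. This is only a model of the decision, not how dnsmasq implements it; the function names are hypothetical, and the stub domain, upstream servers and kubedns address mirror the values used elsewhere in this article.

```python
from typing import Dict, List

CLUSTER_DOMAIN = "cluster.local"
KUBEDNS: List[str] = ["127.0.0.1#10053"]
STUB_DOMAINS: Dict[str, List[str]] = {"out.kubernetes": ["172.20.1.201"]}
UPSTREAM: List[str] = ["114.114.114.114", "223.5.5.5"]


def has_suffix(name: str, suffix: str) -> bool:
    """True if `name` equals `suffix` or is a subdomain of it."""
    name = name.rstrip(".").lower()
    suffix = suffix.rstrip(".").lower()
    return name == suffix or name.endswith("." + suffix)


def route(name: str) -> List[str]:
    """Pick the servers that should answer a query for `name`."""
    if has_suffix(name, CLUSTER_DOMAIN):
        return KUBEDNS
    matches = [s for s in STUB_DOMAINS if has_suffix(name, s)]
    if matches:
        # Prefer the most specific (longest) matching stub domain.
        return STUB_DOMAINS[max(matches, key=len)]
    return UPSTREAM


for query in ("kubernetes.default.svc.cluster.local.",
              "server.out.kubernetes",
              "www.example.com"):
    print(query, "->", route(query))
```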

2. Customizing DNS

    # Cluster administrators can use the ConfigMap to specify custom stub domains and upstream DNS servers;
    [root@kubenode1 ~]# cd /usr/local/src/yaml/kubedns/
    # Edit the ConfigMap part of the kube-dns.yaml template directly
    # stubDomains: optional; stub domain definitions in JSON; each key is a DNS suffix and each value is a JSON array holding a group of DNS server addresses; the target nameserver may be a Kubernetes Service name; separate multiple entries with ",";
    # upstreamNameservers: a JSON array of DNS addresses, at most 3 IP addresses; if set, it overrides the values inherited from the node's nameserver settings (/etc/resolv.conf)
    [root@kubenode1 kubedns]# vim kube-dns.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-dns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      stubDomains: |
        {"out.kubernetes": ["172.20.1.201"]}
      upstreamNameservers: |
        ["114.114.114.114", "223.5.5.5"]

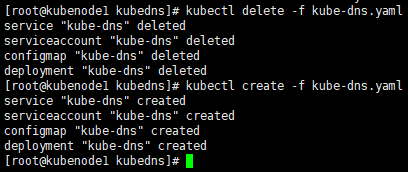
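Because both values are JSON strings embedded in YAML, a typo is easy to miss until kube-dns logs an error. A minimal Python sketch of a pre-flight check follows, under the rules stated above (stubDomains is a JSON object of suffix to server list, upstreamNameservers is a JSON array of at most 3 IP addresses). The function name `parse_dns_config` is hypothetical, and this is not the validation kube-dns itself performs.

```python
import ipaddress
import json
from typing import Dict, List, Tuple


def parse_dns_config(stub_domains: str, upstream: str) -> Tuple[Dict[str, List[str]], List[str]]:
    """Parse and sanity-check the two ConfigMap values; raise ValueError on problems."""
    stubs = json.loads(stub_domains)
    servers = json.loads(upstream)
    if not isinstance(stubs, dict):
        raise ValueError("stubDomains must be a JSON object")
    for suffix, targets in stubs.items():
        # Targets may be IPs or Kubernetes Service names, so only the shape is checked.
        if not isinstance(targets, list) or not targets:
            raise ValueError(f"stub domain {suffix!r} needs a non-empty server list")
    if not isinstance(servers, list):
        raise ValueError("upstreamNameservers must be a JSON array")
    if len(servers) > 3:
        raise ValueError("at most 3 upstream nameservers are allowed")
    for server in servers:
        ipaddress.ip_address(server)  # raises ValueError for a non-IP entry
    return stubs, servers


stubs, servers = parse_dns_config(
    '{"out.kubernetes": ["172.20.1.201"]}',
    '["114.114.114.114", "223.5.5.5"]',
)
print(stubs, servers)
```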

3. Recreate the kube-dns ConfigMap

    # Delete the existing kube-dns first, then create the new one;
    # alternatively, delete only the ConfigMap of the existing kube-dns and create the new ConfigMap on its own
    [root@kubenode1 kubedns]# kubectl delete -f kube-dns.yaml
    [root@kubenode1 kubedns]# kubectl create -f kube-dns.yaml

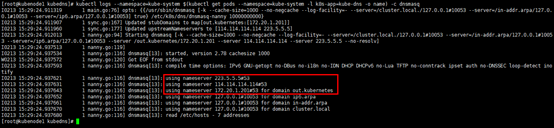

    # Check the dnsmasq log: the stub domain and upstream servers have taken effect;
    # the kubedns and sidecar logs also contain output showing that the stub domain and upstream servers are in effect
    [root@kubenode1 kubedns]# kubectl logs --namespace=kube-system $(kubectl get pods --namespace=kube-system -l k8s-app=kube-dns -o name) -c dnsmasq

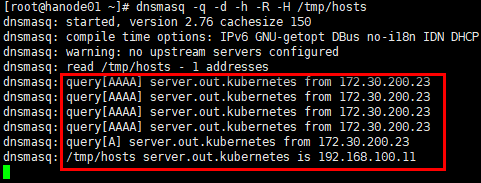

4. Custom DNS server

    # Install dnsmasq on 172.20.1.201, the stub domain server defined in the ConfigMap
    [root@hanode01 ~]# yum install dnsmasq -y
    # Create the custom DNS record file
    [root@hanode01 ~]# echo "192.168.100.11 server.out.kubernetes" > /tmp/hosts
    # Start the DNS service;
    # -q: log queries;
    # -d: debug mode, run in the foreground so the log output can be observed;
    # -h: do not use /etc/hosts;
    # -R: do not use /etc/resolv.conf;
    # -H: use the custom DNS record file;
    # The startup log warns that no upstream DNS server is set, and shows the custom DNS record file being read
    [root@hanode01 ~]# dnsmasq -q -d -h -R -H /tmp/hosts

    # Allow UDP port 53 through iptables
    [root@hanode01 ~]# iptables -I INPUT -m state --state NEW -m udp -p udp --dport 53 -j ACCEPT

5. Start a Pod

    # Pull the image
    [root@kubenode1 ~]# docker pull busybox
    # Write the Pod YAML file;
    # dnsPolicy is set to ClusterFirst, which is also the default
    [root@kubenode1 ~]# touch dnstest.yaml
    [root@kubenode1 ~]# vim dnstest.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: dnstest
      namespace: default
    spec:
      dnsPolicy: ClusterFirst
      containers:
      - name: busybox
        image: busybox
        command:
        - sleep
        - "3600"
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
    # Create the Pod
    [root@kubenode1 ~]# kubectl create -f dnstest.yaml

6. 验证自定义的DNS配置

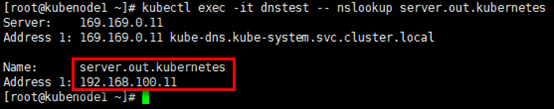

# nslookup查询server.out.kubernetes,返回定义的ip地址 [root@kubenode1 ~]# kubectl exec -it dnstest -- nslookup server.out.kubernetes

观察stub domain 172.20.1.201上dnsmasq服务的输出:kube节点172.30.200.23(Pod所在的节点,flannel网络,snat出节点)对server.out.kubenetes的查询,dnsmasq返回预定义的主机地址。