http://dranger.com/ffmpeg/tutorial01.html

http://dranger.com/ffmpeg/tutorial01.c

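First of all, this tutorial is quite old. I am using the FFmpeg 1.1.1 stable release, with the prebuilt binaries downloaded from http://ffmpeg.zeranoe.com/builds/: the 32-bit Builds (Shared) and 32-bit Builds (Dev) packages.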

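Functions such as av_open_input_file have been replaced by renamed functions (av_open_input_file is now avformat_open_input), and the new functions also take different arguments.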

For the exact usage, refer to the example that ships with the FFmpeg source, doc/examples/filtering_video.c ("API example for decoding and filtering").

For using it in VS 2012, see:

http://blog.sina.com.cn/s/blog_4178f4bf01018wqh.html

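I have been wrestling with ffmpeg for the past few days. I looked up a lot of material online and it took quite some effort, so I would like to share what I found here.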

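Right-click the project name and choose Properties, then go to Configuration Properties -> C/C++ -> General -> Additional Include Directories and add the header (include) directory of the 32-bit Builds (Dev) package you downloaded.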

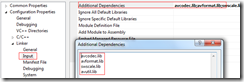

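Right-click the project name, choose Properties, then go to Configuration Properties -> Linker -> Input -> Additional Dependencies and add the .lib files from the 32-bit Builds (Dev) package you downloaded. The linker also has to find them, so add the package's lib directory under Configuration Properties -> Linker -> General -> Additional Library Directories as well.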

III. Possible problems

http://www.cnblogs.com/Jerry-Chou/archive/2011/03/31/2000761.html

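In addition, C99 added several new headers (for example inttypes.h) that VC++ does not ship, so you have to download them yourself and put them into the corresponding directory. For VS2010 this is usually C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\include.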

2. Sample code

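Most of the ffmpeg sample code on the web is outdated: in early 2009 the img_convert function was replaced by sws_scale, so sample code you find online may not run (although the way the code works is still the same).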
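To make clear what the sws_scale call in the sample program does (convert the decoder's native pixel format to RGB24 at the same size), here is a naive stand-in for one common case, YUV420P input. It is an illustration only, not how libswscale is implemented: it assumes BT.601 limited-range input and uses the common integer approximation of those coefficients, while sws_scale supports many formats, scaling and optimized code paths, so the real program should keep calling sws_scale. The names yuv420p_to_rgb24 and fixed_to_byte are hypothetical; src/src_linesize mirror the data/linesize fields of AVFrame.

```cpp
#include <cstdint>

// Clamp a fixed-point (x256) value to a byte. Negative values are clamped
// before shifting, so no negative number is ever right-shifted.
static uint8_t fixed_to_byte(int v) {
    if (v < 0)
        return 0;
    v >>= 8;
    return static_cast<uint8_t>(v > 255 ? 255 : v);
}

// Naive YUV420P -> RGB24 conversion without scaling (illustration only).
// Plane 0 is Y; planes 1 and 2 are U and V at half resolution in both
// directions. Rows are addressed with linesize because decoders may pad
// each row beyond `width` bytes.
void yuv420p_to_rgb24(const uint8_t* const src[3], const int src_linesize[3],
                      uint8_t* dst, int dst_linesize, int width, int height) {
    for (int y = 0; y < height; ++y) {
        const uint8_t* y_row = src[0] + y * src_linesize[0];
        const uint8_t* u_row = src[1] + (y / 2) * src_linesize[1];
        const uint8_t* v_row = src[2] + (y / 2) * src_linesize[2];
        uint8_t* out = dst + y * dst_linesize;
        for (int x = 0; x < width; ++x) {
            const int c = y_row[x] - 16;       // limited-range luma offset
            const int d = u_row[x / 2] - 128;  // chroma shared by a 2x2 block
            const int e = v_row[x / 2] - 128;
            out[3 * x + 0] = fixed_to_byte(298 * c + 409 * e + 128);            // R
            out[3 * x + 1] = fixed_to_byte(298 * c - 100 * d - 208 * e + 128);  // G
            out[3 * x + 2] = fixed_to_byte(298 * c + 516 * d + 128);            // B
        }
    }
}
```

Black (Y=16) maps to 0,0,0 and white (Y=235) to 255,255,255 when U=V=128.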

Here is a version of the code that currently runs.


```cpp
// ffmpeg-example.cpp : Defines the entry point for the console application.
// Written against the FFmpeg 1.1.x API. Old names replaced:
//   av_open_input_file   -> avformat_open_input
//   av_find_stream_info  -> avformat_find_stream_info
//   dump_format          -> av_dump_format
//   CODEC_TYPE_VIDEO     -> AVMEDIA_TYPE_VIDEO
//   avcodec_open         -> avcodec_open2
//   avcodec_decode_video -> avcodec_decode_video2
//   av_close_input_file  -> avformat_close_input
//

#include "stdafx.h"  // precompiled header of the VS console-application template

// The old MSVC C compiler does not know the C99 "inline" keyword.
// Only needed when compiling as C; in C++ inline is already a keyword.
#ifndef __cplusplus
#define inline _inline
#endif

// The ffmpeg headers use the C99 INT64_C/UINT64_C macros
#ifndef INT64_C
#define INT64_C(c) (c ## LL)
#define UINT64_C(c) (c ## ULL)
#endif

#ifdef __cplusplus
extern "C" {
#endif
/* Include ffmpeg header files */
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libswscale/swscale.h>
#ifdef __cplusplus
}
#endif

#include <stdio.h>
#include <stdlib.h>

static void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame);

int main(int argc, const char *argv[])
{
    AVFormatContext *pFormatCtx = NULL;  // must be NULL before avformat_open_input
    int i, videoStream;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame;
    AVFrame *pFrameRGB;
    AVPacket packet;
    int frameFinished;
    int numBytes;
    uint8_t *buffer;
    struct SwsContext *img_convert_ctx = NULL;

    if (argc < 2) {
        fprintf(stderr, "Usage: %s <video file>\n", argv[0]);
        return -1;
    }

    // Register all formats and codecs
    av_register_all();

    // Open video file
    if (avformat_open_input(&pFormatCtx, argv[1], NULL, NULL) != 0)
        return -1; // Couldn't open file

    // Retrieve stream information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1; // Couldn't find stream information

    // Dump information about file onto standard error
    av_dump_format(pFormatCtx, 0, argv[1], 0);

    // Find the first video stream
    videoStream = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = i;
            break;
        }
    if (videoStream == -1)
        return -1; // Didn't find a video stream

    // Get a pointer to the codec context for the video stream
    pCodecCtx = pFormatCtx->streams[videoStream]->codec;

    // Find the decoder for the video stream
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL)
        return -1; // Codec not found

    // Open codec
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
        return -1; // Could not open codec

    // Hack to correct wrong frame rates that seem to be generated by some codecs
    if (pCodecCtx->time_base.num > 1000 && pCodecCtx->time_base.den == 1)
        pCodecCtx->time_base.den = 1000;

    // Allocate the decoded video frame and an AVFrame for the RGB image
    pFrame = avcodec_alloc_frame();
    pFrameRGB = avcodec_alloc_frame();
    if (pFrame == NULL || pFrameRGB == NULL)
        return -1;

    // Determine required buffer size and allocate buffer
    numBytes = avpicture_get_size(PIX_FMT_RGB24, pCodecCtx->width, pCodecCtx->height);
    buffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));
    if (buffer == NULL)
        return -1;

    // Assign appropriate parts of buffer to image planes in pFrameRGB
    avpicture_fill((AVPicture *)pFrameRGB, buffer, PIX_FMT_RGB24,
                   pCodecCtx->width, pCodecCtx->height);

    // Read frames and save the first five frames to disk
    i = 0;
    while (av_read_frame(pFormatCtx, &packet) >= 0) {
        // Is this a packet from the video stream?
        if (packet.stream_index == videoStream) {
            // Decode video frame
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

            // Did we get a video frame?
            if (frameFinished) {
#if 0
                // Older removed code: img_convert was replaced by sws_scale
                img_convert((AVPicture *)pFrameRGB, PIX_FMT_RGB24,
                            (AVPicture *)pFrame, pCodecCtx->pix_fmt,
                            pCodecCtx->width, pCodecCtx->height);
#endif
                // Create the conversion context once: native format -> RGB24, same size
                if (img_convert_ctx == NULL) {
                    int w = pCodecCtx->width;
                    int h = pCodecCtx->height;
                    img_convert_ctx = sws_getContext(w, h, pCodecCtx->pix_fmt,
                                                     w, h, PIX_FMT_RGB24,
                                                     SWS_BICUBIC, NULL, NULL, NULL);
                    if (img_convert_ctx == NULL) {
                        fprintf(stderr, "Cannot initialize the conversion context!\n");
                        exit(1);
                    }
                }
                // Convert the image to RGB24. sws_scale returns the height of the
                // output slice, not an error code.
                sws_scale(img_convert_ctx, pFrame->data, pFrame->linesize, 0,
                          pCodecCtx->height, pFrameRGB->data, pFrameRGB->linesize);

                // Save frames 1..5 to disk (frame1.ppm ... frame5.ppm)
                if (++i <= 5)
                    SaveFrame(pFrameRGB, pCodecCtx->width, pCodecCtx->height, i);
            }
        }
        // Free the packet that was allocated by av_read_frame
        av_free_packet(&packet);
    }

    // Free the conversion context and the RGB image
    sws_freeContext(img_convert_ctx);
    av_free(buffer);
    av_free(pFrameRGB);

    // Free the YUV frame
    av_free(pFrame);

    // Close the codec
    avcodec_close(pCodecCtx);

    // Close the video file
    avformat_close_input(&pFormatCtx);

    return 0;
}

static void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame)
{
    FILE *pFile;
    char szFilename[32];
    int y;

    // Open file
    sprintf(szFilename, "frame%d.ppm", iFrame);
    pFile = fopen(szFilename, "wb");
    if (pFile == NULL)
        return;

    // Write header
    fprintf(pFile, "P6\n%d %d\n255\n", width, height);

    // Write pixel data row by row (rows may be padded, so step by linesize)
    for (y = 0; y < height; y++)
        fwrite(pFrame->data[0] + y * pFrame->linesize[0], 1, width * 3, pFile);

    // Close file
    fclose(pFile);
}
```

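A few places in the code deserve attention, namely the macro definitions at the top. The first deals with the inline keyword, which was only added in C99: the old MSVC C compiler only understands _inline, so the define matters when the file is compiled as C (in C++, inline is already a keyword). The second emulates the INT64_C macro used in the ffmpeg headers; INT64_C/UINT64_C also come from C99's <stdint.h>, so this is presumably another C99 compatibility fix. The third wraps the C headers in extern "C" so that they can be used from C++ code.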
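A small standalone sketch of the two C++-side tricks described above: the token-pasting INT64_C-style shim and the extern "C" wrapper. The DEMO_ macros and demo_twice are hypothetical names used only for illustration (so nothing clashes with a real INT64_C); static_assert and std::is_same need a C++11-era compiler such as VS2010 or later.

```cpp
#include <type_traits>

// The sample's INT64_C/UINT64_C shim, repeated under hypothetical DEMO_
// names. ## pastes the suffix onto the literal: DEMO_INT64_C(42) becomes (42LL).
#define DEMO_INT64_C(c) (c ## LL)
#define DEMO_UINT64_C(c) (c ## ULL)

static_assert(std::is_same<decltype(DEMO_INT64_C(1)), long long>::value,
              "DEMO_INT64_C yields a long long constant");
static_assert(std::is_same<decltype(DEMO_UINT64_C(1)), unsigned long long>::value,
              "DEMO_UINT64_C yields an unsigned long long constant");

// The same wrapper used around the ffmpeg #includes. Declarations inside it
// get C linkage (no C++ name mangling), so the linker looks for the plain
// symbol names that C-compiled libraries such as ffmpeg export.
#ifdef __cplusplus
extern "C" {
#endif
int demo_twice(int x);  // hypothetical function, declared with C linkage
#ifdef __cplusplus
}
#endif

// The definition inherits C linkage from the declaration above.
int demo_twice(int x) { return 2 * x; }
```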

3. Setting up Visual Studio

Before you can compile and run the code above, you have to configure Visual Studio so that it can find and reference the ffmpeg libraries correctly.

3.1 Set the location of the ffmpeg headers

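Right-click the project -> Properties, and add the include directory under Configuration Properties -> C/C++ -> General -> Additional Include Directories.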

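3.2 Set the location of the LIB files

Add the lib directory of the Dev package under Configuration Properties -> Linker -> General -> Additional Library Directories.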

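3.3 Set the referenced LIB files

Add the .lib files the program uses (for avformat, avcodec, avutil and swscale) under Configuration Properties -> Linker -> Input -> Additional Dependencies.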
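The same setting can also be made in source code with MSVC's #pragma comment(lib, ...), as the first of the notes at the end of this post suggests. A sketch, assuming the import libraries in your Dev package are named avformat.lib, avcodec.lib, avutil.lib and swscale.lib (check the package's lib folder); the library directory from 3.2 is still required unless you write full paths:

```cpp
// Put this in one .cpp file, e.g. next to the ffmpeg #includes.
// MSVC only: each pragma adds the named import library to the link, as if
// it were listed under Linker -> Input -> Additional Dependencies.
// Library names are assumed to match the .lib files of the Dev package.
#ifdef _MSC_VER
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "swscale.lib")
#endif
```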

If everything is in order, the project should now compile successfully.

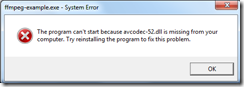

4. Possible problems

4.1 Runtime error

Although the project compiles, when you press F5 to debug it, the program fails to start with an error about missing DLLs.

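The reason is that the LIB files you linked against are not real static libraries but import libraries that refer to the DLLs, so the DLLs must be present whenever the program calls an ffmpeg function. Copy the DLLs from the 32-bit Builds (Shared) package into the folder that contains the exe (or add their folder to PATH). To see which DLLs your exe actually imports, use dumpbin, which ships with VS. From the Visual Studio command prompt, enter: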

`dumpbin ffmpeg-example.exe /imports`

The output shows that the exe actually depends on the following DLLs:

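* avcodec-52.dll
* avformat-52.dll
* swscale-0.dll
* avutil-50.dll

The number in each name is the library's major version, so it depends on the ffmpeg build; FFmpeg 1.1.1, for example, ships avcodec-54.dll.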

Some readers may want to link ffmpeg statically so that these DLLs are no longer needed. That is possible, but not recommended.

4.2 av_open_input_file fails

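Give the input file as a full path in VS's Command Arguments (Configuration Properties -> Debugging -> Command Arguments). A relative path is resolved against the debugger's Working Directory, which defaults to $(ProjectDir), not the folder that contains the exe.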
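One way to catch this case early, sketched here with hypothetical helper names (input_readable, check_input_arg): check that argv[1] can be opened before handing it to av_open_input_file (avformat_open_input in FFmpeg 1.x), and print a hint instead of failing silently.

```cpp
#include <cstdio>

// Hypothetical helper: true if `path` can be opened for reading.
// A relative path is resolved against the process's current working
// directory; under the VS debugger that is the project's "Working
// Directory" setting, not necessarily the folder of the .exe.
bool input_readable(const char* path) {
    if (path == nullptr)
        return false;
    std::FILE* f = std::fopen(path, "rb");
    if (f == nullptr)
        return false;
    std::fclose(f);
    return true;
}

// Intended use at the top of main(), before opening the file with ffmpeg.
// Returns 0 if argv[1] is readable, -1 (after printing a hint) otherwise.
int check_input_arg(int argc, const char* argv[]) {
    if (argc < 2 || !input_readable(argv[1])) {
        std::fprintf(stderr, "Cannot read input file '%s'; try a full path.\n",
                     argc < 2 ? "(none)" : argv[1]);
        return -1;
    }
    return 0;
}
```

In the sample, main() would start with `if (check_input_arg(argc, argv) != 0) return -1;`.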

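Notes:

Both blog posts reproduced above contain small mistakes or leave out alternatives:

1. The libraries to link do not have to be added in the GUI; you can use something like #pragma comment(lib, "avcodec.lib") in the source instead.

2. depends.exe (Dependency Walker, http://dependencywalker.com/) may be more convenient than dumpbin.

3. PPM images are hard to view on Windows, and the images produced by the current code are flipped.

4. The workflow of the new library versions has changed considerably; refer to doc/examples/filtering_video.c.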
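Regarding point 3, one option is to save BMP files instead, which Windows opens natively. The sketch below is an assumption of mine rather than part of either blog post; rgb24_to_bmp and save_frame_bmp are hypothetical names. BMP stores rows bottom-up and pixels in B, G, R order, with each row padded to a multiple of 4 bytes, so a writer has to reverse the row order and swap R and B; skipping the row reversal is a typical way to end up with an upside-down picture.

```cpp
#include <cstdint>
#include <cstdio>
#include <vector>

// Append little-endian integers, as required by the BMP headers.
static void put_le16(std::vector<uint8_t>& out, uint16_t v) {
    out.push_back(static_cast<uint8_t>(v & 0xFF));
    out.push_back(static_cast<uint8_t>(v >> 8));
}

static void put_le32(std::vector<uint8_t>& out, uint32_t v) {
    for (int i = 0; i < 4; ++i)
        out.push_back(static_cast<uint8_t>((v >> (8 * i)) & 0xFF));
}

// Encode packed RGB24 pixels (rows `linesize` bytes apart, top row first,
// as in pFrameRGB->data[0]) as an uncompressed 24-bit BMP file image.
std::vector<uint8_t> rgb24_to_bmp(const uint8_t* rgb, int linesize,
                                  int width, int height) {
    const uint32_t used = static_cast<uint32_t>(width) * 3;  // pixel bytes per row
    const uint32_t row_bytes = (used + 3) & ~3u;               // 4-byte aligned rows
    const uint32_t image_size = row_bytes * static_cast<uint32_t>(height);

    std::vector<uint8_t> out;
    out.reserve(54 + image_size);
    // BITMAPFILEHEADER (14 bytes)
    out.push_back('B');
    out.push_back('M');
    put_le32(out, 54 + image_size);  // total file size
    put_le32(out, 0);                // two reserved 16-bit fields
    put_le32(out, 54);               // offset of the pixel data
    // BITMAPINFOHEADER (40 bytes)
    put_le32(out, 40);               // header size
    put_le32(out, static_cast<uint32_t>(width));
    put_le32(out, static_cast<uint32_t>(height));  // positive height: bottom-up rows
    put_le16(out, 1);                // colour planes
    put_le16(out, 24);               // bits per pixel
    put_le32(out, 0);                // BI_RGB (no compression)
    put_le32(out, image_size);
    put_le32(out, 0);                // horizontal resolution (unspecified)
    put_le32(out, 0);                // vertical resolution (unspecified)
    put_le32(out, 0);                // palette colours used
    put_le32(out, 0);                // important colours

    // Pixel data: bottom image row first, each pixel stored as B, G, R
    for (int y = height - 1; y >= 0; --y) {
        const uint8_t* row = rgb + y * linesize;
        for (int x = 0; x < width; ++x) {
            out.push_back(row[3 * x + 2]);
            out.push_back(row[3 * x + 1]);
            out.push_back(row[3 * x + 0]);
        }
        for (uint32_t p = used; p < row_bytes; ++p)
            out.push_back(0);        // row padding
    }
    return out;
}

// Write the BMP to `filename`; returns false if the file cannot be written.
bool save_frame_bmp(const char* filename, const uint8_t* rgb, int linesize,
                    int width, int height) {
    const std::vector<uint8_t> bmp = rgb24_to_bmp(rgb, linesize, width, height);
    std::FILE* f = std::fopen(filename, "wb");
    if (f == nullptr)
        return false;
    const bool written = std::fwrite(bmp.data(), 1, bmp.size(), f) == bmp.size();
    return std::fclose(f) == 0 && written;
}
```

SaveFrame could then call save_frame_bmp with a .bmp file name, passing pFrame->data[0], pFrame->linesize[0], width and height, instead of writing the PPM header and rows itself.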