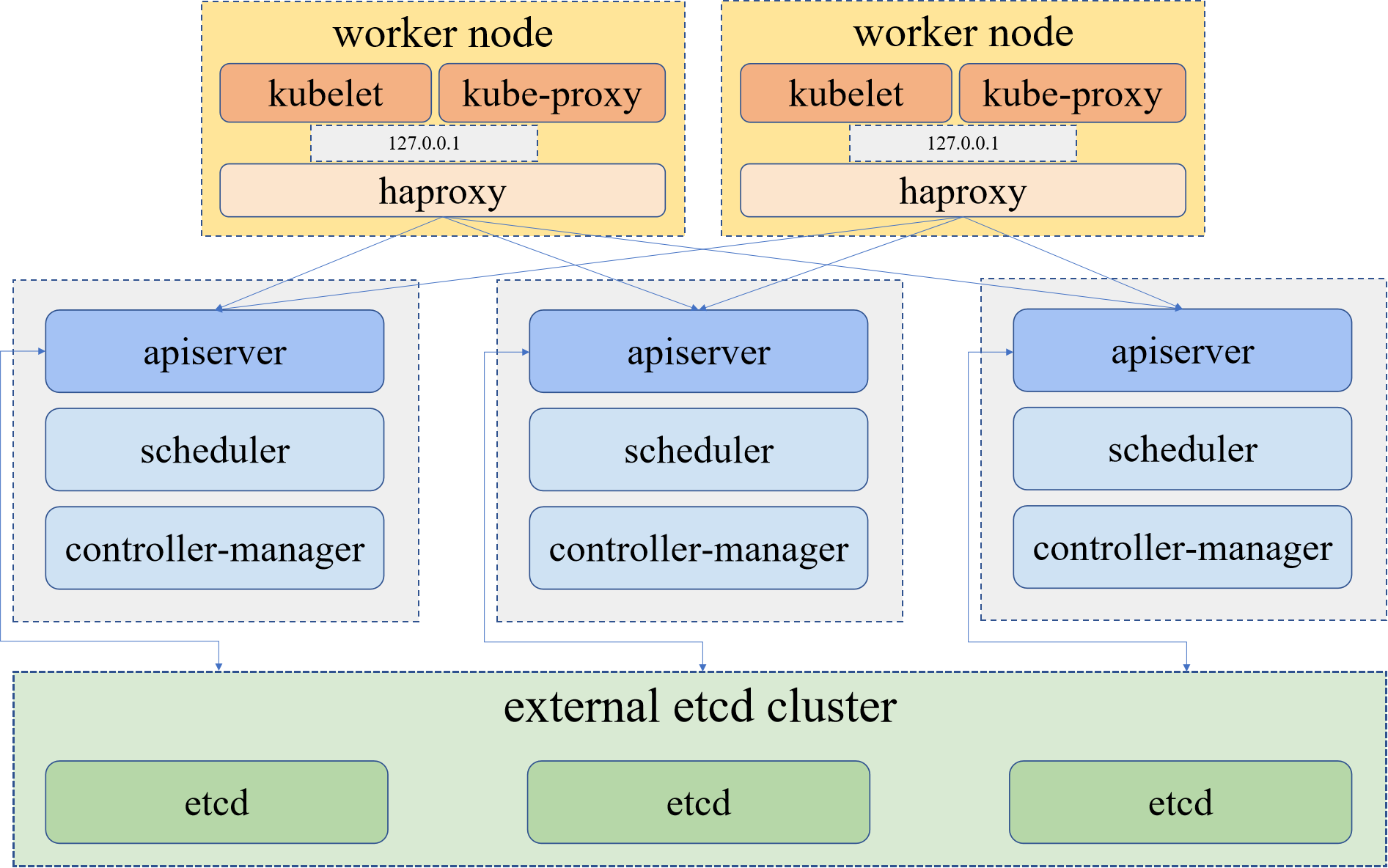

High-availability architecture with the built-in HAProxy:

1. Download KubeKey (kk)

[root@master1 ~]# curl -sfL https://get-kk.kubesphere.io | VERSION=v2.0.0 sh -

If access to GitHub and Googleapis is restricted, run the following command first, then run the download command above:

export KKZONE=cn

2. Make kk executable

[root@master1 ~]# chmod +x kk

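3. Create a sample configuration file with the default settings. (Here I only generate the Kubernetes configuration, not the KubeSphere configuration; generate the KubeSphere configuration as well if you need it.)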

[root@master1 ~]# ./kk create config --with-kubernetes v1.21.5

4. Edit the configuration file (the file below is already edited; adjust it for your own environment)

[root@master1 ~]# cat config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master1, address: 192.168.200.3, internalAddress: 192.168.200.3, user: root, password: "admin"}
  - {name: master2, address: 192.168.200.4, internalAddress: 192.168.200.4, user: root, password: "admin"}
  - {name: master3, address: 192.168.200.5, internalAddress: 192.168.200.5, user: root, password: "admin"}
  - {name: node1, address: 192.168.200.6, internalAddress: 192.168.200.6, user: root, password: "admin"}
  roleGroups:
    etcd:
    - master1
    - master2
    - master3
    control-plane:
    - master1
    - master2
    - master3
    worker:
    - node1
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy # Built-in HA is enabled here; comment this line out if you do not need the built-in load balancer
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.21.5
    clusterName: cluster.local
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    plainHTTP: false
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []
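Before creating the cluster, it can be worth checking that the pod CIDR, the service CIDR, and the node addresses in the configuration above do not overlap, since overlapping ranges tend to cause routing problems that are hard to track down later. The following is a minimal Python sketch using only the standard library. The helper name `check_networks` is my own and is not part of KubeKey, and the values are copied by hand from config-sample.yaml rather than parsed from it.

```python
import ipaddress

# Values copied by hand from config-sample.yaml (not parsed from the file).
pods = ipaddress.ip_network("10.233.64.0/18")      # kubePodsCIDR
services = ipaddress.ip_network("10.233.0.0/18")   # kubeServiceCIDR
hosts = {
    "master1": "192.168.200.3",
    "master2": "192.168.200.4",
    "master3": "192.168.200.5",
    "node1": "192.168.200.6",
}


def check_networks(pods, services, hosts):
    """Hypothetical helper: return a list of problems, empty if all is fine."""
    problems = []
    # The pod and service ranges must be disjoint.
    if pods.overlaps(services):
        problems.append(f"{pods} overlaps {services}")
    # No node address may fall inside either cluster-internal range.
    for name, addr in hosts.items():
        ip = ipaddress.ip_address(addr)
        for net in (pods, services):
            if ip in net:
                problems.append(f"{name} ({ip}) is inside {net}")
    return problems


problems = check_networks(pods, services, hosts)
print(problems or "no overlap between pod CIDR, service CIDR and node IPs")
```

With the values used in this article the list of problems is empty: the two /18 ranges sit next to each other inside 10.233.0.0/17, and the nodes live in 192.168.200.0/24.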

5. Create the cluster

[root@master1 ~]# ./kk create cluster -f config-sample.yaml

 _   __      _          _   __
| | / /     | |        | | / /
| |/ / _   _| |__   ___| |/ /  ___ _   _
|    \| | | | '_ \ / _ \    \ / _ \ | | |
| |\  \ |_| | |_) |  __/ |\  \  __/ |_| |
\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |
                                    __/ |
                                   |___/

03:40:19 CST [NodePreCheckModule] A pre-check on nodes

03:40:19 CST success: [master3]

03:40:19 CST success: [master1]

03:40:19 CST success: [node1]

03:40:19 CST success: [master2]

03:40:19 CST [ConfirmModule] Display confirmation form

+---------+------+------+---------+----------+-------+-------+-----------+--------+----------+------------+-------------+------------------+--------------+
| name    | sudo | curl | openssl | ebtables | socat | ipset | conntrack | chrony | docker   | nfs client | ceph client | glusterfs client | time         |
+---------+------+------+---------+----------+-------+-------+-----------+--------+----------+------------+-------------+------------------+--------------+
| node1   | y    | y    | y       | y        | y     | y     | y         | y      | 19.03.15 |            |             |                  | CST 03:40:19 |
| master1 | y    | y    | y       | y        | y     | y     | y         | y      | 19.03.15 |            |             |                  | CST 03:40:19 |
| master2 | y    | y    | y       | y        | y     | y     | y         | y      | 19.03.15 |            |             |                  | CST 03:40:19 |
| master3 | y    | y    | y       | y        | y     | y     | y         | y      | 19.03.15 |            |             |                  | CST 03:40:19 |
+---------+------+------+---------+----------+-------+-------+-----------+--------+----------+------------+-------------+------------------+--------------+

This is a simple check of your environment.

Before installation, you should ensure that your machines meet all requirements specified at

https://github.com/kubesphere/kubekey#requirements-and-recommendations

Continue this installation? [yes/no]: yes

03:40:23 CST success: [LocalHost]

03:40:23 CST [NodeBinariesModule] Download installation binaries

03:40:23 CST message: [localhost]

downloading amd64 kubeadm v1.21.5 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 42.7M 100 42.7M 0 0 408k 0 0:01:47 0:01:47 --:--:-- 532k

03:42:11 CST message: [localhost]

downloading amd64 kubelet v1.21.5 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 112M 100 112M 0 0 394k 0 0:04:52 0:04:52 --:--:-- 475k

03:47:05 CST message: [localhost]

downloading amd64 kubectl v1.21.5 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 44.4M 100 44.4M 0 0 390k 0 0:01:56 0:01:56 --:--:-- 382k

03:49:02 CST message: [localhost]

downloading amd64 helm v3.6.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 13.0M 100 13.0M 0 0 403k 0 0:00:33 0:00:33 --:--:-- 557k

03:49:35 CST message: [localhost]

downloading amd64 kubecni v0.9.1 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 671 100 671 0 0 2037 0 --:--:-- --:--:-- --:--:-- 2033

100 37.9M 100 37.9M 0 0 412k 0 0:01:34 0:01:34 --:--:-- 480k

03:51:10 CST message: [localhost]

downloading amd64 docker 20.10.8 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 58.1M 100 58.1M 0 0 406k 0 0:02:26 0:02:26 --:--:-- 558k

03:53:37 CST message: [localhost]

downloading amd64 crictl v1.22.0 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 670 100 670 0 0 1091 0 --:--:-- --:--:-- --:--:-- 1092

100 17.8M 100 17.8M 0 0 412k 0 0:00:44 0:00:44 --:--:-- 456k

03:54:21 CST message: [localhost]

downloading amd64 etcd v3.4.13 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 155 100 155 0 0 266 0 --:--:-- --:--:-- --:--:-- 265

100 668 100 668 0 0 710 0 --:--:-- --:--:-- --:--:-- 710

100 16.5M 100 16.5M 0 0 405k 0 0:00:41 0:00:41 --:--:-- 478k

03:55:03 CST success: [LocalHost]

03:55:03 CST [ConfigureOSModule] Prepare to init OS

03:55:04 CST success: [node1]

03:55:04 CST success: [master3]

03:55:04 CST success: [master2]

03:55:04 CST success: [master1]

03:55:04 CST [ConfigureOSModule] Generate init os script

03:55:04 CST success: [master1]

03:55:04 CST success: [node1]

03:55:04 CST success: [master3]

03:55:04 CST success: [master2]

03:55:04 CST [ConfigureOSModule] Exec init os script

03:55:05 CST stdout: [node1]

setenforce: SELinux is disabled

Disabled

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

no crontab for root

03:55:05 CST stdout: [master2]

setenforce: SELinux is disabled

Disabled

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

no crontab for root

03:55:05 CST stdout: [master3]

setenforce: SELinux is disabled

Disabled

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

no crontab for root

03:55:05 CST stdout: [master1]

setenforce: SELinux is disabled

Disabled

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-arptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_local_reserved_ports = 30000-32767

vm.max_map_count = 262144

vm.swappiness = 1

fs.inotify.max_user_instances = 524288

kernel.pid_max = 65535

no crontab for root

03:55:05 CST success: [node1]

03:55:05 CST success: [master2]

03:55:05 CST success: [master3]

03:55:05 CST success: [master1]

03:55:05 CST [ConfigureOSModule] configure the ntp server for each node

03:55:05 CST skipped: [master1]

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master2]

03:55:05 CST skipped: [node1]

03:55:05 CST [KubernetesStatusModule] Get kubernetes cluster status

03:55:05 CST success: [master1]

03:55:05 CST success: [master2]

03:55:05 CST success: [master3]

03:55:05 CST [InstallContainerModule] Sync docker binaries

03:55:05 CST skipped: [master1]

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master2]

03:55:05 CST skipped: [node1]

03:55:05 CST [InstallContainerModule] Generate containerd service

03:55:05 CST skipped: [node1]

03:55:05 CST skipped: [master1]

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master2]

03:55:05 CST [InstallContainerModule] Enable containerd

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master1]

03:55:05 CST skipped: [node1]

03:55:05 CST skipped: [master2]

03:55:05 CST [InstallContainerModule] Generate docker service

03:55:05 CST skipped: [node1]

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master2]

03:55:05 CST skipped: [master1]

03:55:05 CST [InstallContainerModule] Generate docker config

03:55:05 CST skipped: [master1]

03:55:05 CST skipped: [node1]

03:55:05 CST skipped: [master3]

03:55:05 CST skipped: [master2]

03:55:05 CST [InstallContainerModule] Enable docker

03:55:06 CST skipped: [master1]

03:55:06 CST skipped: [master2]

03:55:06 CST skipped: [node1]

03:55:06 CST skipped: [master3]

03:55:06 CST [InstallContainerModule] Add auths to container runtime

03:55:06 CST skipped: [master1]

03:55:06 CST skipped: [master3]

03:55:06 CST skipped: [master2]

03:55:06 CST skipped: [node1]

03:55:06 CST [PullModule] Start to pull images on all nodes

03:55:06 CST message: [master3]

downloading image: kubesphere/pause:3.4.1

03:55:06 CST message: [master2]

downloading image: kubesphere/pause:3.4.1

03:55:06 CST message: [node1]

downloading image: kubesphere/pause:3.4.1

03:55:06 CST message: [master1]

downloading image: kubesphere/pause:3.4.1

03:55:26 CST message: [master3]

downloading image: kubesphere/kube-apiserver:v1.21.5

03:55:28 CST message: [node1]

downloading image: kubesphere/kube-proxy:v1.21.5

03:55:28 CST message: [master1]

downloading image: kubesphere/kube-apiserver:v1.21.5

03:55:31 CST message: [master2]

downloading image: kubesphere/kube-apiserver:v1.21.5

04:00:49 CST message: [node1]

downloading image: coredns/coredns:1.8.0

04:01:31 CST message: [master3]

downloading image: kubesphere/kube-controller-manager:v1.21.5

04:01:44 CST message: [master2]

downloading image: kubesphere/kube-controller-manager:v1.21.5

04:01:46 CST message: [master1]

downloading image: kubesphere/kube-controller-manager:v1.21.5

04:02:39 CST message: [node1]

downloading image: kubesphere/k8s-dns-node-cache:1.15.12

04:06:37 CST message: [master3]

downloading image: kubesphere/kube-scheduler:v1.21.5

04:07:12 CST message: [master2]

downloading image: kubesphere/kube-scheduler:v1.21.5

04:07:15 CST message: [master1]

downloading image: kubesphere/kube-scheduler:v1.21.5

04:07:24 CST message: [node1]

downloading image: calico/kube-controllers:v3.20.0

04:08:35 CST message: [master3]

downloading image: kubesphere/kube-proxy:v1.21.5

04:09:53 CST message: [master2]

downloading image: kubesphere/kube-proxy:v1.21.5

04:09:58 CST message: [master1]

downloading image: kubesphere/kube-proxy:v1.21.5

04:12:35 CST message: [node1]

downloading image: calico/cni:v3.20.0

04:14:10 CST message: [master3]

downloading image: coredns/coredns:1.8.0

04:15:09 CST message: [master1]

downloading image: coredns/coredns:1.8.0

04:15:12 CST message: [master2]

downloading image: coredns/coredns:1.8.0

04:16:23 CST message: [master3]

downloading image: kubesphere/k8s-dns-node-cache:1.15.12

04:17:34 CST message: [master1]

downloading image: kubesphere/k8s-dns-node-cache:1.15.12

04:17:41 CST message: [master2]

downloading image: kubesphere/k8s-dns-node-cache:1.15.12

04:21:42 CST message: [master3]

downloading image: calico/kube-controllers:v3.20.0

04:21:45 CST message: [node1]

downloading image: calico/node:v3.20.0

04:23:04 CST message: [master2]

downloading image: calico/kube-controllers:v3.20.0

04:23:36 CST message: [master1]

downloading image: calico/kube-controllers:v3.20.0

04:26:43 CST message: [master3]

downloading image: calico/cni:v3.20.0

04:27:00 CST message: [master2]

downloading image: calico/cni:v3.20.0

04:28:07 CST message: [master1]

downloading image: calico/cni:v3.20.0

04:31:53 CST message: [node1]

downloading image: calico/pod2daemon-flexvol:v3.20.0

04:33:26 CST message: [node1]

downloading image: library/haproxy:2.3

04:35:06 CST message: [master3]

downloading image: calico/node:v3.20.0

04:35:57 CST message: [master2]

downloading image: calico/node:v3.20.0

04:36:26 CST message: [master1]

downloading image: calico/node:v3.20.0

04:43:39 CST message: [master3]

downloading image: calico/pod2daemon-flexvol:v3.20.0

04:44:31 CST message: [master1]

downloading image: calico/pod2daemon-flexvol:v3.20.0

04:44:45 CST message: [master2]

downloading image: calico/pod2daemon-flexvol:v3.20.0

04:45:37 CST success: [node1]

04:45:37 CST success: [master3]

04:45:37 CST success: [master1]

04:45:37 CST success: [master2]
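As an aside, the timestamps in this log show where the installation time goes. The sketch below is a small Python helper of my own (not a KubeKey feature) that pairs each task header of the form `HH:MM:SS CST [Module] task` with the next header and reports the gap between them. It assumes the log format shown in this article; the three sample lines are consecutive task headers copied from it.

```python
import re
from datetime import datetime, timedelta

# Matches task headers such as
# "03:55:06 CST [PullModule] Start to pull images on all nodes".
# Per-node lines ("... CST success: [master1]") do not match.
HEADER = re.compile(r"^(\d{2}:\d{2}:\d{2}) \w+ \[(\w+)\] (.+)$")


def task_durations(log_lines):
    """Return (module, task, seconds) for every task header but the last.

    A task's duration is taken as the gap to the next task header, so the
    final task is omitted because its end time is unknown.
    """
    headers = []
    for line in log_lines:
        match = HEADER.match(line.strip())
        if match:
            stamp = datetime.strptime(match.group(1), "%H:%M:%S")
            headers.append((stamp, match.group(2), match.group(3)))

    durations = []
    for (start, module, task), (end, _, _) in zip(headers, headers[1:]):
        delta = end - start
        if delta < timedelta(0):  # the run crossed midnight
            delta += timedelta(days=1)
        durations.append((module, task, int(delta.total_seconds())))
    return durations


sample = [
    "03:55:06 CST [InstallContainerModule] Add auths to container runtime",
    "03:55:06 CST [PullModule] Start to pull images on all nodes",
    "04:45:37 CST [ETCDPreCheckModule] Get etcd status",
]
for module, task, seconds in task_durations(sample):
    print(f"{module:<24}{seconds // 60:>3} min {seconds % 60:02d} s  {task}")
```

On this run the image pull alone takes about 50 minutes (03:55:06 to 04:45:37), so the image download speed is probably the first thing to look at if the installation feels slow, for example by filling in the `registryMirrors` field of the configuration file.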

04:45:37 CST [ETCDPreCheckModule] Get etcd status

04:45:37 CST success: [master1]

04:45:37 CST success: [master2]

04:45:37 CST success: [master3]

04:45:37 CST [CertsModule] Fetcd etcd certs

04:45:37 CST success: [master1]

04:45:37 CST skipped: [master2]

04:45:37 CST skipped: [master3]

04:45:37 CST [CertsModule] Generate etcd Certs

[certs] Generating "ca" certificate and key

[certs] admin-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] member-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] node-master1 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] admin-master2 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] member-master2 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] node-master2 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] admin-master3 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] member-master3 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] node-master3 serving cert is signed for DNS names [etcd etcd.kube-system etcd.kube-system.svc etcd.kube-system.svc.cluster.local lb.kubesphere.local localhost master1 master2 master3 node1] and IPs [127.0.0.1 ::1 192.168.200.3 192.168.200.4 192.168.200.5 192.168.200.6]

04:45:38 CST success: [LocalHost]

04:45:38 CST [CertsModule] Synchronize certs file

04:45:42 CST success: [master1]

04:45:42 CST success: [master3]

04:45:42 CST success: [master2]

04:45:42 CST [CertsModule] Synchronize certs file to master

04:45:42 CST skipped: [master1]

04:45:42 CST skipped: [master3]

04:45:42 CST skipped: [master2]

04:45:42 CST [InstallETCDBinaryModule] Install etcd using binary

04:45:43 CST success: [master1]

04:45:43 CST success: [master2]

04:45:43 CST success: [master3]

04:45:43 CST [InstallETCDBinaryModule] Generate etcd service

04:45:43 CST success: [master1]

04:45:43 CST success: [master2]

04:45:43 CST success: [master3]

04:45:43 CST [InstallETCDBinaryModule] Generate access address

04:45:43 CST skipped: [master3]

04:45:43 CST skipped: [master2]

04:45:43 CST success: [master1]

04:45:43 CST [ETCDConfigureModule] Health check on exist etcd

04:45:43 CST skipped: [master3]

04:45:43 CST skipped: [master1]

04:45:43 CST skipped: [master2]

04:45:43 CST [ETCDConfigureModule] Generate etcd.env config on new etcd

04:45:44 CST success: [master1]

04:45:44 CST success: [master2]

04:45:44 CST success: [master3]

04:45:44 CST [ETCDConfigureModule] Refresh etcd.env config on all etcd

04:45:44 CST success: [master1]

04:45:44 CST success: [master2]

04:45:44 CST success: [master3]

04:45:44 CST [ETCDConfigureModule] Restart etcd

04:45:47 CST stdout: [master1]

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

04:45:47 CST stdout: [master2]

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

04:45:47 CST stdout: [master3]

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

04:45:47 CST success: [master1]

04:45:47 CST success: [master2]

04:45:47 CST success: [master3]

04:45:47 CST [ETCDConfigureModule] Health check on all etcd

04:45:47 CST success: [master1]

04:45:47 CST success: [master3]

04:45:47 CST success: [master2]

04:45:47 CST [ETCDConfigureModule] Refresh etcd.env config to exist mode on all etcd

04:45:48 CST success: [master1]

04:45:48 CST success: [master2]

04:45:48 CST success: [master3]

04:45:48 CST [ETCDConfigureModule] Health check on all etcd

04:45:48 CST success: [master2]

04:45:48 CST success: [master1]

04:45:48 CST success: [master3]

04:45:48 CST [ETCDBackupModule] Backup etcd data regularly

04:45:54 CST success: [master3]

04:45:54 CST success: [master1]

04:45:54 CST success: [master2]
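At this point etcd is running on master1, master2, and master3. The reason for using three members rather than two is that etcd only accepts writes while a majority (quorum) of its members is reachable. The arithmetic can be sketched in a few lines of Python; the function name is my own and the snippet is only an illustration, not something KubeKey runs.

```python
def etcd_fault_tolerance(members):
    """Return (quorum, tolerated_failures) for an etcd cluster of this size."""
    quorum = members // 2 + 1          # strict majority of the members
    return quorum, members - quorum    # members that may fail without losing quorum


for n in (1, 2, 3, 4, 5):
    quorum, tolerated = etcd_fault_tolerance(n)
    print(f"{n} member(s): quorum {quorum}, tolerates {tolerated} failure(s)")
```

With the three members used here the quorum is two, so the cluster survives the loss of one etcd node. A fourth member would raise the quorum to three without tolerating any additional failure, which is why odd member counts are the usual choice.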

04:45:54 CST [InstallKubeBinariesModule] Synchronize kubernetes binaries

04:46:14 CST success: [master1]

04:46:14 CST success: [master3]

04:46:14 CST success: [node1]

04:46:14 CST success: [master2]

04:46:14 CST [InstallKubeBinariesModule] Synchronize kubelet

04:46:14 CST success: [master1]

04:46:14 CST success: [master3]

04:46:14 CST success: [master2]

04:46:14 CST success: [node1]

04:46:14 CST [InstallKubeBinariesModule] Generate kubelet service

04:46:14 CST success: [master2]

04:46:14 CST success: [master1]

04:46:14 CST success: [master3]

04:46:14 CST success: [node1]

04:46:14 CST [InstallKubeBinariesModule] Enable kubelet service

04:46:14 CST success: [master3]

04:46:14 CST success: [master2]

04:46:14 CST success: [master1]

04:46:14 CST success: [node1]

04:46:14 CST [InstallKubeBinariesModule] Generate kubelet env

04:46:15 CST success: [master1]

04:46:15 CST success: [master3]

04:46:15 CST success: [node1]

04:46:15 CST success: [master2]

04:46:15 CST [InitKubernetesModule] Generate kubeadm config

04:46:16 CST skipped: [master3]

04:46:16 CST skipped: [master2]

04:46:16 CST success: [master1]

04:46:16 CST [InitKubernetesModule] Init cluster using kubeadm

04:46:28 CST stdout: [master1]

W0331 04:46:16.106041 24936 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[init] Using Kubernetes version: v1.21.5

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master1 master1.cluster.local master2 master2.cluster.local master3 master3.cluster.local node1 node1.cluster.local] and IPs [10.233.0.1 192.168.200.3 127.0.0.1 192.168.200.4 192.168.200.5 192.168.200.6]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] External etcd mode: Skipping etcd/ca certificate authority generation

[certs] External etcd mode: Skipping etcd/server certificate generation

[certs] External etcd mode: Skipping etcd/peer certificate generation

[certs] External etcd mode: Skipping etcd/healthcheck-client certificate generation

[certs] External etcd mode: Skipping apiserver-etcd-client certificate generation

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 8.504399 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: m3cb1z.v2z7g71uckb0xznt

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join lb.kubesphere.local:6443 --token m3cb1z.v2z7g71uckb0xznt \

--discovery-token-ca-cert-hash sha256:4c8e2926999fe60c375bf1f428c6f102b941719c4c094751cd4666c145548367 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lb.kubesphere.local:6443 --token m3cb1z.v2z7g71uckb0xznt \

--discovery-token-ca-cert-hash sha256:4c8e2926999fe60c375bf1f428c6f102b941719c4c094751cd4666c145548367

04:46:28 CST skipped: [master3]

04:46:28 CST skipped: [master2]

04:46:28 CST success: [master1]

04:46:28 CST [InitKubernetesModule] Copy admin.conf to ~/.kube/config

04:46:28 CST skipped: [master3]

04:46:28 CST skipped: [master2]

04:46:28 CST success: [master1]

04:46:28 CST [InitKubernetesModule] Remove master taint

04:46:28 CST skipped: [master3]

04:46:28 CST skipped: [master2]

04:46:28 CST skipped: [master1]

04:46:28 CST [InitKubernetesModule] Add worker label

04:46:28 CST skipped: [master3]

04:46:28 CST skipped: [master1]

04:46:28 CST skipped: [master2]

04:46:28 CST [ClusterDNSModule] Generate coredns service

04:46:29 CST skipped: [master3]

04:46:29 CST skipped: [master2]

04:46:29 CST success: [master1]

04:46:29 CST [ClusterDNSModule] Override coredns service

04:46:29 CST stdout: [master1]

service "kube-dns" deleted

04:46:29 CST stdout: [master1]

service/coredns created

Warning: resource clusterroles/system:coredns is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/system:coredns configured

04:46:29 CST skipped: [master3]

04:46:29 CST skipped: [master2]

04:46:29 CST success: [master1]

04:46:29 CST [ClusterDNSModule] Generate nodelocaldns

04:46:29 CST skipped: [master3]

04:46:29 CST skipped: [master2]

04:46:29 CST success: [master1]

04:46:29 CST [ClusterDNSModule] Deploy nodelocaldns

04:46:29 CST stdout: [master1]

serviceaccount/nodelocaldns created

daemonset.apps/nodelocaldns created

04:46:29 CST skipped: [master3]

04:46:29 CST skipped: [master2]

04:46:29 CST success: [master1]

04:46:29 CST [ClusterDNSModule] Generate nodelocaldns configmap

04:46:30 CST skipped: [master3]

04:46:30 CST skipped: [master2]

04:46:30 CST success: [master1]

04:46:30 CST [ClusterDNSModule] Apply nodelocaldns configmap

04:46:30 CST stdout: [master1]

configmap/nodelocaldns created

04:46:30 CST skipped: [master3]

04:46:30 CST skipped: [master2]

04:46:30 CST success: [master1]
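A note on the addresses seen so far. The kubeadm warning about `clusterDNS` looks harmless in this setup: 10.233.0.10 is the tenth address of the service CIDR, which kubeadm recommends by default, while 169.254.25.10 is a link-local address. My assumption is that this is the address the nodelocaldns DaemonSet deployed just above listens on, so the kubelet is pointed at the node-local DNS cache on purpose. The small Python sketch below reproduces the numbers from the log using only the standard library.

```python
import ipaddress

# kubeServiceCIDR from config-sample.yaml.
services = ipaddress.ip_network("10.233.0.0/18")

# First address of the service range: the "kubernetes" Service,
# which is why 10.233.0.1 appears in the apiserver certificate.
api_service_ip = services[1]

# Tenth address of the service range: the clusterDNS value kubeadm recommends.
recommended_dns = services[10]

# The value reported as "provided" in the kubeadm warning.
provided_dns = ipaddress.ip_address("169.254.25.10")

print(api_service_ip)                 # 10.233.0.1
print(recommended_dns)                # 10.233.0.10
print(provided_dns.is_link_local)     # True
print(provided_dns in services)       # False
```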

04:46:30 CST [KubernetesStatusModule] Get kubernetes cluster status

04:46:30 CST stdout: [master1]

v1.21.5

04:46:30 CST stdout: [master1]

master1 v1.21.5 [map[address:192.168.200.3 type:InternalIP] map[address:master1 type:Hostname]]

04:46:32 CST stdout: [master1]

I0331 04:46:31.902343 26951 version.go:254] remote version is much newer: v1.23.5; falling back to: stable-1.21

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

8e9f6ae4c2543f32b66ece0dbc414a3c1472e8e13bca41c6235e067ccf46b7c6

04:46:32 CST stdout: [master1]

secret/kubeadm-certs patched

04:46:32 CST stdout: [master1]

secret/kubeadm-certs patched

04:46:32 CST stdout: [master1]

secret/kubeadm-certs patched

04:46:33 CST stdout: [master1]

kli9qd.7epuu1jba4ym2xqu

04:46:33 CST success: [master1]

04:46:33 CST success: [master2]

04:46:33 CST success: [master3]

04:46:33 CST [JoinNodesModule] Generate kubeadm config

04:46:35 CST skipped: [master1]

04:46:35 CST success: [master2]

04:46:35 CST success: [master3]

04:46:35 CST success: [node1]

04:46:35 CST [JoinNodesModule] Join control-plane node

04:46:56 CST stdout: [master2]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0331 04:46:47.966812 24831 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master1 master1.cluster.local master2 master2.cluster.local master3 master3.cluster.local node1 node1.cluster.local] and IPs [10.233.0.1 192.168.200.4 127.0.0.1 192.168.200.3 192.168.200.5 192.168.200.6]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Skipping etcd check in external mode

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[control-plane-join] using external etcd - no local stacked instance added

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node master2 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

04:46:56 CST stdout: [master3]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0331 04:46:47.982388 24810 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local lb.kubesphere.local localhost master1 master1.cluster.local master2 master2.cluster.local master3 master3.cluster.local node1 node1.cluster.local] and IPs [10.233.0.1 192.168.200.5 127.0.0.1 192.168.200.3 192.168.200.4 192.168.200.6]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Skipping etcd check in external mode

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[control-plane-join] using external etcd - no local stacked instance added

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node master3 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master3 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

04:46:56 CST skipped: [master1]

04:46:56 CST success: [master2]

04:46:56 CST success: [master3]

04:46:56 CST [JoinNodesModule] Join worker node

04:47:02 CST stdout: [node1]

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

W0331 04:46:56.776099 22166 utils.go:69] The recommended value for "clusterDNS" in "KubeletConfiguration" is: [10.233.0.10]; the provided value is: [169.254.25.10]

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

04:47:02 CST success: [node1]

04:47:02 CST [JoinNodesModule] Copy admin.conf to ~/.kube/config

04:47:02 CST skipped: [master1]

04:47:02 CST success: [master3]

04:47:02 CST success: [master2]

04:47:02 CST [JoinNodesModule] Remove master taint

04:47:02 CST skipped: [master3]

04:47:02 CST skipped: [master2]

04:47:02 CST skipped: [master1]

04:47:02 CST [JoinNodesModule] Add worker label to master

04:47:02 CST skipped: [master3]

04:47:02 CST skipped: [master1]

04:47:02 CST skipped: [master2]

04:47:02 CST [JoinNodesModule] Synchronize kube config to worker

04:47:02 CST success: [node1]

04:47:02 CST [JoinNodesModule] Add worker label to worker

04:47:02 CST stdout: [node1]

node/node1 labeled

04:47:02 CST success: [node1]

04:47:02 CST [InternalLoadbalancerModule] Generate haproxy.cfg

04:47:03 CST success: [node1]

04:47:03 CST [InternalLoadbalancerModule] Calculate the MD5 value according to haproxy.cfg

04:47:03 CST success: [node1]

04:47:03 CST [InternalLoadbalancerModule] Generate haproxy manifest

04:47:03 CST success: [node1]

04:47:03 CST [InternalLoadbalancerModule] Update kubelet config

04:47:03 CST stdout: [master3]

server: https://lb.kubesphere.local:6443

04:47:03 CST stdout: [node1]

server: https://lb.kubesphere.local:6443

04:47:03 CST stdout: [master2]

server: https://lb.kubesphere.local:6443

04:47:03 CST stdout: [master1]

server: https://lb.kubesphere.local:6443

04:47:03 CST success: [node1]

04:47:03 CST success: [master1]

04:47:03 CST success: [master3]

04:47:03 CST success: [master2]

04:47:03 CST [InternalLoadbalancerModule] Update kube-proxy configmap

04:47:04 CST success: [master1]

04:47:04 CST [InternalLoadbalancerModule] Update /etc/hosts

04:47:05 CST success: [node1]

04:47:05 CST success: [master3]

04:47:05 CST success: [master2]

04:47:05 CST success: [master1]

04:47:05 CST [DeployNetworkPluginModule] Generate calico

04:47:05 CST skipped: [master3]

04:47:05 CST skipped: [master2]

04:47:05 CST success: [master1]

04:47:05 CST [DeployNetworkPluginModule] Deploy calico

04:47:10 CST stdout: [master1]

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

04:47:10 CST skipped: [master3]

04:47:10 CST skipped: [master2]

04:47:10 CST success: [master1]

04:47:10 CST [ConfigureKubernetesModule] Configure kubernetes

04:47:10 CST success: [master3]

04:47:10 CST success: [node1]

04:47:10 CST success: [master1]

04:47:10 CST success: [master2]

04:47:10 CST [ChownModule] Chown user $HOME/.kube dir

04:47:10 CST success: [master3]

04:47:10 CST success: [node1]

04:47:10 CST success: [master2]

04:47:10 CST success: [master1]

04:47:10 CST [AutoRenewCertsModule] Generate k8s certs renew script

04:47:11 CST success: [master3]

04:47:11 CST success: [master1]

04:47:11 CST success: [master2]

04:47:11 CST [AutoRenewCertsModule] Generate k8s certs renew service

04:47:11 CST success: [master3]

04:47:11 CST success: [master1]

04:47:11 CST success: [master2]

04:47:11 CST [AutoRenewCertsModule] Generate k8s certs renew timer

04:47:11 CST success: [master1]

04:47:11 CST success: [master2]

04:47:11 CST success: [master3]

04:47:11 CST [AutoRenewCertsModule] Enable k8s certs renew service

04:47:11 CST success: [master2]

04:47:11 CST success: [master3]

04:47:11 CST success: [master1]

04:47:11 CST [SaveKubeConfigModule] Save kube config as a configmap

04:47:12 CST success: [LocalHost]

04:47:12 CST [AddonsModule] Install addons

04:47:12 CST success: [LocalHost]

04:47:12 CST Pipeline[CreateClusterPipeline] execute successful

Installation is complete.

Please check the result using the command:

kubectl get pod -A

6. Check the cluster status

[root@master1 ~]# kubectl get nodes

NAME      STATUS   ROLES                  AGE     VERSION
master1   Ready    control-plane,master   2m51s   v1.21.5
master2   Ready    control-plane,master   2m25s   v1.21.5
master3   Ready    control-plane,master   2m25s   v1.21.5
node1     Ready    worker                 2m18s   v1.21.5
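If you want to script this check instead of reading the table by eye, here is a minimal sketch. The function name `not_ready_nodes` is my own (hypothetical, not part of kubectl or KubeKey). It assumes the input is the output of `kubectl get nodes --no-headers`, with the columns shown above, and prints the name of every node whose STATUS is not exactly `Ready`.

```shell
#!/bin/sh
# Hypothetical helper: print the names of nodes whose STATUS is not "Ready".
# Input (stdin): output of `kubectl get nodes --no-headers`
# Columns assumed: NAME STATUS ROLES AGE VERSION
not_ready_nodes() {
    # Field 2 is STATUS, field 1 is NAME.
    awk '$2 != "Ready" { print $1 }'
}
```

Usage on a control-plane node: `kubectl get nodes --no-headers | not_ready_nodes`. Empty output means every node reports `Ready`. Note that a cordoned node shows `Ready,SchedulingDisabled` and is therefore listed too, which may or may not be what you want.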

[root@master1 ~]# kubectl get po -A

NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-846b5f484d-hknb2   1/1     Running   0          39m
kube-system   calico-node-b5db9                          1/1     Running   0          39m
kube-system   calico-node-hp57h                          1/1     Running   0          39m
kube-system   calico-node-r6h9n                          1/1     Running   0          39m
kube-system   calico-node-wbrr8                          1/1     Running   0          39m
kube-system   coredns-b5648d655-d2vts                    1/1     Running   0          39m
kube-system   coredns-b5648d655-hzhb5                    1/1     Running   0          39m
kube-system   haproxy-node1                              1/1     Running   0          39m
kube-system   kube-apiserver-master1                     1/1     Running   0          39m
kube-system   kube-apiserver-master2                     1/1     Running   0          39m
kube-system   kube-apiserver-master3                     1/1     Running   0          39m
kube-system   kube-controller-manager-master1            1/1     Running   0          39m
kube-system   kube-controller-manager-master2            1/1     Running   0          39m
kube-system   kube-controller-manager-master3            1/1     Running   0          39m
kube-system   kube-proxy-kbjd7                           1/1     Running   0          39m
kube-system   kube-proxy-lr5kz                           1/1     Running   0          39m
kube-system   kube-proxy-ntbtz                           1/1     Running   0          39m
kube-system   kube-proxy-txgzv                           1/1     Running   0          39m
kube-system   kube-scheduler-master1                     1/1     Running   0          39m
kube-system   kube-scheduler-master2                     1/1     Running   0          39m
kube-system   kube-scheduler-master3                     1/1     Running   0          39m
kube-system   nodelocaldns-7gf62                         1/1     Running   0          39m
kube-system   nodelocaldns-bmzzt                         1/1     Running   0          39m
kube-system   nodelocaldns-pw6w7                         1/1     Running   0          39m
kube-system   nodelocaldns-xtlkd                         1/1     Running   0          39m
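With this many pods it is easy to miss one that is stuck. A minimal sketch of a filter, assuming the input is the output of `kubectl get po -A --no-headers` with the same columns as above. The function name `unhealthy_pods` is hypothetical, and treating only `Running` and `Completed` as healthy is my own simplification.

```shell
#!/bin/sh
# Hypothetical helper: list pods whose STATUS is neither Running nor Completed.
# Input (stdin): output of `kubectl get po -A --no-headers`
# Columns assumed: NAMESPACE NAME READY STATUS RESTARTS AGE
unhealthy_pods() {
    # Print "namespace/name status" for every pod that is not in a healthy state.
    awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}
```

Usage: `kubectl get po -A --no-headers | unhealthy_pods`. For the listing above it would print nothing, since every pod is `Running`. It does not look at the READY column, so a pod that is `Running` but `0/1` would still pass.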

[root@master1 ~]# kubectl get po --all-namespaces -o wide

NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE      NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-846b5f484d-hknb2   1/1     Running   0          39m   10.233.97.1     master1   <none>           <none>
kube-system   calico-node-b5db9                          1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   calico-node-hp57h                          1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   calico-node-r6h9n                          1/1     Running   0          39m   192.168.200.6   node1     <none>           <none>
kube-system   calico-node-wbrr8                          1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   coredns-b5648d655-d2vts                    1/1     Running   0          39m   10.233.98.1     master2   <none>           <none>
kube-system   coredns-b5648d655-hzhb5                    1/1     Running   0          39m   10.233.96.1     master3   <none>           <none>
kube-system   haproxy-node1                              1/1     Running   0          39m   192.168.200.6   node1     <none>           <none>
kube-system   kube-apiserver-master1                     1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   kube-apiserver-master2                     1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   kube-apiserver-master3                     1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   kube-controller-manager-master1            1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   kube-controller-manager-master2            1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   kube-controller-manager-master3            1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   kube-proxy-kbjd7                           1/1     Running   0          39m   192.168.200.6   node1     <none>           <none>
kube-system   kube-proxy-lr5kz                           1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   kube-proxy-ntbtz                           1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   kube-proxy-txgzv                           1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   kube-scheduler-master1                     1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   kube-scheduler-master2                     1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   kube-scheduler-master3                     1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   nodelocaldns-7gf62                         1/1     Running   0          39m   192.168.200.5   master3   <none>           <none>
kube-system   nodelocaldns-bmzzt                         1/1     Running   0          39m   192.168.200.3   master1   <none>           <none>
kube-system   nodelocaldns-pw6w7                         1/1     Running   0          39m   192.168.200.4   master2   <none>           <none>
kube-system   nodelocaldns-xtlkd                         1/1     Running   0          39m   192.168.200.6   node1     <none>           <none>
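The install log shows that KubeKey pointed every kubelet at `https://lb.kubesphere.local:6443` and updated `/etc/hosts`, and the listing above shows the `haproxy-node1` pod running on the worker. To see which address that domain is mapped to on a given node, something like the sketch below may help. The function name `hosts_lookup` is hypothetical, and it only reads the hosts file; it does not ask DNS.

```shell
#!/bin/sh
# Hypothetical helper: print the IP address(es) that a hosts file maps to a name.
# $1 = host name to look for
# $2 = hosts file to read (defaults to /etc/hosts)
hosts_lookup() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }          # skip lines that are only a comment
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break       # the rest of the line is a comment
                if ($i == name) print $1   # field 1 is the IP address
            }
        }
    ' "${2:-/etc/hosts}"
}
```

Usage: run `hosts_lookup lb.kubesphere.local` on each node and compare the results. The domain name comes from `controlPlaneEndpoint.domain` in config-sample.yaml, so adjust it if you changed that value.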