1 // Ceres Solver - A fast non-linear least squares minimizer 2 // Copyright 2015 Google Inc. All rights reserved. 3 // http://ceres-solver.org/ 4 // 5 // Redistribution and use in source and binary forms, with or without 6 // modification, are permitted provided that the following conditions are met: 7 // 8 // * Redistributions of source code must retain the above copyright notice, 9 // this list of conditions and the following disclaimer. 10 // * Redistributions in binary form must reproduce the above copyright notice, 11 // this list of conditions and the following disclaimer in the documentation 12 // and/or other materials provided with the distribution. 13 // * Neither the name of Google Inc. nor the names of its contributors may be 14 // used to endorse or promote products derived from this software without 15 // specific prior written permission. 16 // 17 // THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" 18 // AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE 19 // IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE 20 // ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE 21 // LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR 22 // CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF 23 // SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS 24 // INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN 25 // CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) 26 // ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE 27 // POSSIBILITY OF SUCH DAMAGE. 28 // 29 // Author: sameeragarwal@google.com (Sameer Agarwal) 30 // 31 // An example program that minimizes Powell's singular function. 32 // 33 // F = 1/2 (f1^2 + f2^2 + f3^2 + f4^2) 34 // 35 // f1 = x1 + 10*x2; 36 // f2 = sqrt(5) * (x3 - x4) 37 // f3 = (x2 - 2*x3)^2 38 // f4 = sqrt(10) * (x1 - x4)^2 39 // 40 // The starting values are x1 = 3, x2 = -1, x3 = 0, x4 = 1. 41 // The minimum is 0 at (x1, x2, x3, x4) = 0. 42 // 43 // From: Testing Unconstrained Optimization Software by Jorge J. More, Burton S. 44 // Garbow and Kenneth E. Hillstrom in ACM Transactions on Mathematical Software, 45 // Vol 7(1), March 1981. 46 47 #include <vector> 48 #include "ceres/ceres.h" 49 #include "gflags/gflags.h" 50 #include "glog/logging.h" 51 52 using ceres::AutoDiffCostFunction; 53 using ceres::CostFunction; 54 using ceres::Problem; 55 using ceres::Solver; 56 using ceres::Solve; 57 58 struct F1 { 59 template <typename T> bool operator()(const T* const x1, 60 const T* const x2, 61 T* residual) const { 62 // f1 = x1 + 10 * x2; 63 residual[0] = x1[0] + 10.0 * x2[0]; 64 return true; 65 } 66 }; 67 68 struct F2 { 69 template <typename T> bool operator()(const T* const x3, 70 const T* const x4, 71 T* residual) const { 72 // f2 = sqrt(5) (x3 - x4) 73 residual[0] = sqrt(5.0) * (x3[0] - x4[0]); 74 return true; 75 } 76 }; 77 78 struct F3 { 79 template <typename T> bool operator()(const T* const x2, 80 const T* const x3, 81 T* residual) const { 82 // f3 = (x2 - 2 x3)^2 83 residual[0] = (x2[0] - 2.0 * x3[0]) * (x2[0] - 2.0 * x3[0]); 84 return true; 85 } 86 }; 87 88 struct F4 { 89 template <typename T> bool operator()(const T* const x1, 90 const T* const x4, 91 T* residual) const { 92 // f4 = sqrt(10) (x1 - x4)^2 93 residual[0] = sqrt(10.0) * (x1[0] - x4[0]) * (x1[0] - x4[0]); 94 return true; 95 } 96 }; 97 98 DEFINE_string(minimizer, "trust_region", 99 "Minimizer type to use, choices are: line_search & trust_region"); 100 101 int main(int argc, char** argv) { 102 CERES_GFLAGS_NAMESPACE::ParseCommandLineFlags(&argc, &argv, true); 103 google::InitGoogleLogging(argv[0]); 104 105 double x1 = 3.0; 106 double x2 = -1.0; 107 double x3 = 0.0; 108 double x4 = 1.0; 109 110 Problem problem; 111 // Add residual terms to the problem using the using the autodiff 112 // wrapper to get the derivatives automatically. The parameters, x1 through 113 // x4, are modified in place. 114 problem.AddResidualBlock(new AutoDiffCostFunction<F1, 1, 1, 1>(new F1), 115 NULL, 116 &x1, &x2); 117 problem.AddResidualBlock(new AutoDiffCostFunction<F2, 1, 1, 1>(new F2), 118 NULL, 119 &x3, &x4); 120 problem.AddResidualBlock(new AutoDiffCostFunction<F3, 1, 1, 1>(new F3), 121 NULL, 122 &x2, &x3); 123 problem.AddResidualBlock(new AutoDiffCostFunction<F4, 1, 1, 1>(new F4), 124 NULL, 125 &x1, &x4); 126 127 Solver::Options options; 128 LOG_IF(FATAL, !ceres::StringToMinimizerType(FLAGS_minimizer, 129 &options.minimizer_type)) 130 << "Invalid minimizer: " << FLAGS_minimizer 131 << ", valid options are: trust_region and line_search."; 132 133 options.max_num_iterations = 100; 134 options.linear_solver_type = ceres::DENSE_QR; 135 options.minimizer_progress_to_stdout = true; 136 137 std::cout << "Initial x1 = " << x1 138 << ", x2 = " << x2 139 << ", x3 = " << x3 140 << ", x4 = " << x4 141 << " "; 142 143 // Run the solver! 144 Solver::Summary summary; 145 Solve(options, &problem, &summary); 146 147 std::cout << summary.FullReport() << " "; 148 std::cout << "Final x1 = " << x1 149 << ", x2 = " << x2 150 << ", x3 = " << x3 151 << ", x4 = " << x4 152 << " "; 153 return 0; 154 }

求导

High lever advice

1.Use Automatic Derivatives.

2.In some cases,it may be worth use Analytic Derivatives.

3.Avoid Numeric Derivations. Use it as a measure of last resort,mostly to interface with extennal libraries.

Analytic Derivatives 手动推导导数表达式

To get the best performance out of analytically computed derivatives, one usually needs to optimize the code to account for common sub-expressions.

Numeric Derivatives 数值求导

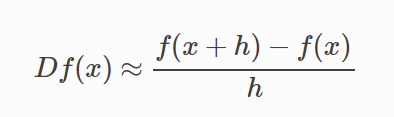

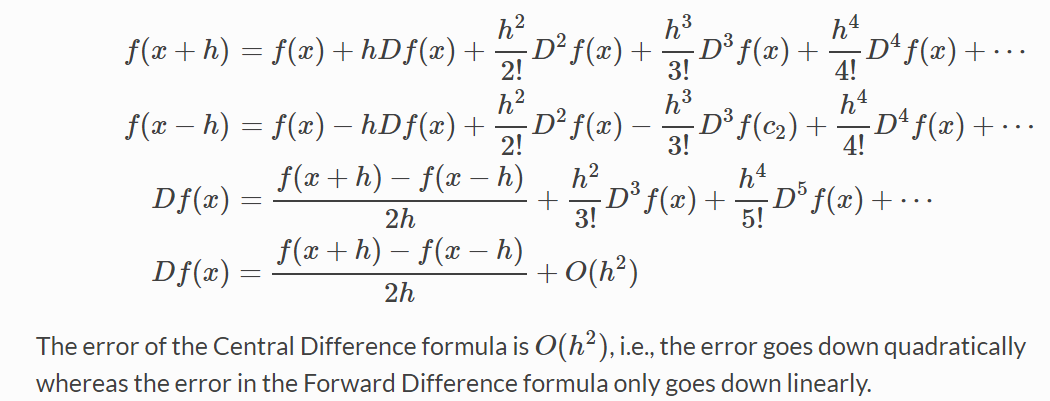

1.Forward Differences

struct Rat43CostFunctor { Rat43CostFunctor(const double x, const double y) : x_(x), y_(y) {} bool operator()(const double* parameters, double* residuals) const { const double b1 = parameters[0]; const double b2 = parameters[1]; const double b3 = parameters[2]; const double b4 = parameters[3]; residuals[0] = b1 * pow(1.0 + exp(b2 - b3 * x_), -1.0 / b4) - y_; return true; } const double x_; const double y_; } CostFunction* cost_function = new NumericDiffCostFunction<Rat43CostFunctor, FORWARD, 1, 4>( new Rat43CostFunctor(x, y));

The error in the forward difference formula is O(h)

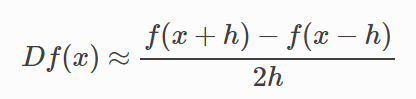

2.Central Differences

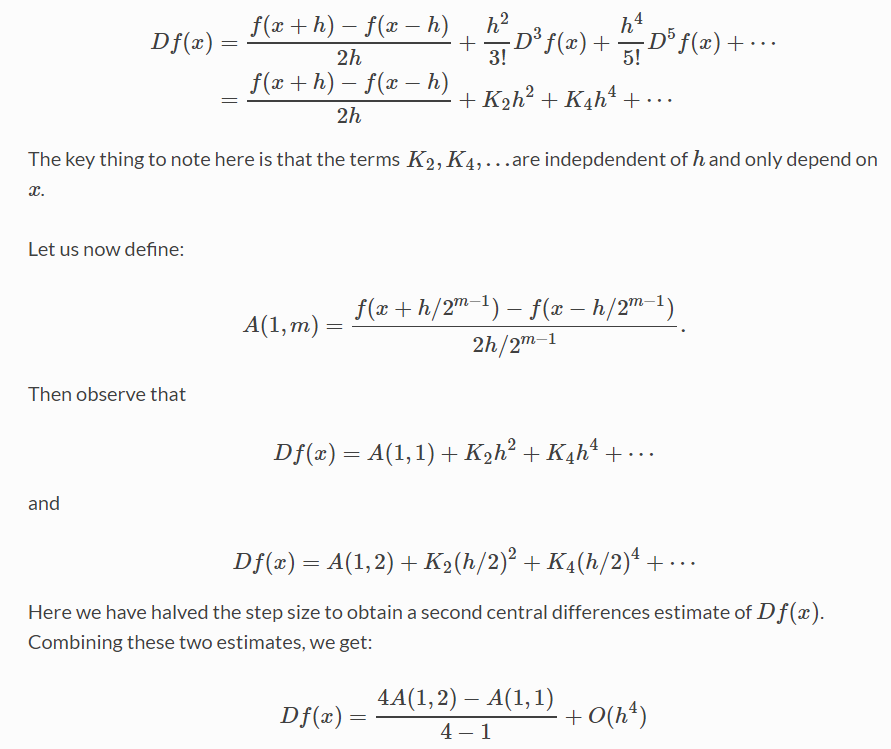

3.Ridders' Method

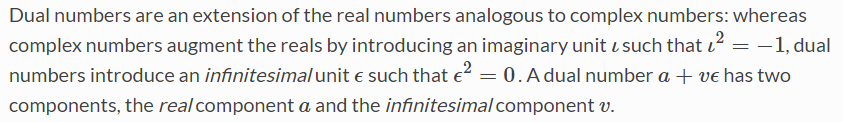

Automatic Derivatives 自动微分

Automatic derivatives is a technique that can compute exact derivatives.

struct Rat43CostFunctor { Rat43CostFunctor(const double x, const double y) : x_(x), y_(y) {} template <typename T> bool operator()(const T* parameters, T* residuals) const { const T b1 = parameters[0]; const T b2 = parameters[1]; const T b3 = parameters[2]; const T b4 = parameters[3]; residuals[0] = b1 * pow(1.0 + exp(b2 - b3 * x_), -1.0 / b4) - y_; return true; } private: const double x_; const double y_; }; CostFunction* cost_function = new AutoDiffCostFunction<Rat43CostFunctor, 1, 4>( new Rat43CostFunctor(x, y));

1 // Ceres Solver - A fast non-linear least squares minimizer 2 // Copyright 2015 Google Inc. All rights reserved. 3 // http://ceres-solver.org/ 4 // 5 // Redistribution and use in source and binary forms, with or without 6 // modification, are permitted provided that the following conditions are met: 7 // 8 // * Redistributions of source code must retain the above copyright notice, 9 // this list of conditions and the following disclaimer. 10 // * Redistributions in binary form must reproduce the above copyright notice, 11 // this list of conditions and the following disclaimer in the documentation 12 // and/or other materials provided with the distribution. 13 // * Neither the name of Google Inc. nor the names of its contributors may be 14 // used to endorse or promote products derived from this software without 15 // specific prior written permission. 16 // 17 // THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" 18 // AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE 19 // IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE 20 // ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE 21 // LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR 22 // CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF 23 // SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS 24 // INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN 25 // CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) 26 // ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE 27 // POSSIBILITY OF SUCH DAMAGE. 28 // 29 // Author: keir@google.com (Keir Mierle) 30 // 31 // A simple implementation of N-dimensional dual numbers, for automatically 32 // computing exact derivatives of functions. 33 // 34 // While a complete treatment of the mechanics of automatic differentiation is 35 // beyond the scope of this header (see 36 // http://en.wikipedia.org/wiki/Automatic_differentiation for details), the 37 // basic idea is to extend normal arithmetic with an extra element, "e," often 38 // denoted with the greek symbol epsilon, such that e != 0 but e^2 = 0. Dual 39 // numbers are extensions of the real numbers analogous to complex numbers: 40 // whereas complex numbers augment the reals by introducing an imaginary unit i 41 // such that i^2 = -1, dual numbers introduce an "infinitesimal" unit e such 42 // that e^2 = 0. Dual numbers have two components: the "real" component and the 43 // "infinitesimal" component, generally written as x + y*e. Surprisingly, this 44 // leads to a convenient method for computing exact derivatives without needing 45 // to manipulate complicated symbolic expressions. 46 // 47 // For example, consider the function 48 // 49 // f(x) = x^2 , 50 // 51 // evaluated at 10. Using normal arithmetic, f(10) = 100, and df/dx(10) = 20. 52 // Next, argument 10 with an infinitesimal to get: 53 // 54 // f(10 + e) = (10 + e)^2 55 // = 100 + 2 * 10 * e + e^2 56 // = 100 + 20 * e -+- 57 // -- | 58 // | +--- This is zero, since e^2 = 0 59 // | 60 // +----------------- This is df/dx! 61 // 62 // Note that the derivative of f with respect to x is simply the infinitesimal 63 // component of the value of f(x + e). So, in order to take the derivative of 64 // any function, it is only necessary to replace the numeric "object" used in 65 // the function with one extended with infinitesimals. The class Jet, defined in 66 // this header, is one such example of this, where substitution is done with 67 // templates. 68 // 69 // To handle derivatives of functions taking multiple arguments, different 70 // infinitesimals are used, one for each variable to take the derivative of. For 71 // example, consider a scalar function of two scalar parameters x and y: 72 // 73 // f(x, y) = x^2 + x * y 74 // 75 // Following the technique above, to compute the derivatives df/dx and df/dy for 76 // f(1, 3) involves doing two evaluations of f, the first time replacing x with 77 // x + e, the second time replacing y with y + e. 78 // 79 // For df/dx: 80 // 81 // f(1 + e, y) = (1 + e)^2 + (1 + e) * 3 82 // = 1 + 2 * e + 3 + 3 * e 83 // = 4 + 5 * e 84 // 85 // --> df/dx = 5 86 // 87 // For df/dy: 88 // 89 // f(1, 3 + e) = 1^2 + 1 * (3 + e) 90 // = 1 + 3 + e 91 // = 4 + e 92 // 93 // --> df/dy = 1 94 // 95 // To take the gradient of f with the implementation of dual numbers ("jets") in 96 // this file, it is necessary to create a single jet type which has components 97 // for the derivative in x and y, and passing them to a templated version of f: 98 // 99 // template<typename T> 100 // T f(const T &x, const T &y) { 101 // return x * x + x * y; 102 // } 103 // 104 // // The "2" means there should be 2 dual number components. 105 // // It computes the partial derivative at x=10, y=20. 106 // Jet<double, 2> x(10, 0); // Pick the 0th dual number for x. 107 // Jet<double, 2> y(20, 1); // Pick the 1st dual number for y. 108 // Jet<double, 2> z = f(x, y); 109 // 110 // LOG(INFO) << "df/dx = " << z.v[0] 111 // << "df/dy = " << z.v[1]; 112 // 113 // Most users should not use Jet objects directly; a wrapper around Jet objects, 114 // which makes computing the derivative, gradient, or jacobian of templated 115 // functors simple, is in autodiff.h. Even autodiff.h should not be used 116 // directly; instead autodiff_cost_function.h is typically the file of interest. 117 // 118 // For the more mathematically inclined, this file implements first-order 119 // "jets". A 1st order jet is an element of the ring 120 // 121 // T[N] = T[t_1, ..., t_N] / (t_1, ..., t_N)^2 122 // 123 // which essentially means that each jet consists of a "scalar" value 'a' from T 124 // and a 1st order perturbation vector 'v' of length N: 125 // 126 // x = a + sum_i v[i] t_i 127 // 128 // A shorthand is to write an element as x = a + u, where u is the perturbation. 129 // Then, the main point about the arithmetic of jets is that the product of 130 // perturbations is zero: 131 // 132 // (a + u) * (b + v) = ab + av + bu + uv 133 // = ab + (av + bu) + 0 134 // 135 // which is what operator* implements below. Addition is simpler: 136 // 137 // (a + u) + (b + v) = (a + b) + (u + v). 138 // 139 // The only remaining question is how to evaluate the function of a jet, for 140 // which we use the chain rule: 141 // 142 // f(a + u) = f(a) + f'(a) u 143 // 144 // where f'(a) is the (scalar) derivative of f at a. 145 // 146 // By pushing these things through sufficiently and suitably templated 147 // functions, we can do automatic differentiation. Just be sure to turn on 148 // function inlining and common-subexpression elimination, or it will be very 149 // slow! 150 // 151 // WARNING: Most Ceres users should not directly include this file or know the 152 // details of how jets work. Instead the suggested method for automatic 153 // derivatives is to use autodiff_cost_function.h, which is a wrapper around 154 // both jets.h and autodiff.h to make taking derivatives of cost functions for 155 // use in Ceres easier. 156 157 #ifndef CERES_PUBLIC_JET_H_ 158 #define CERES_PUBLIC_JET_H_ 159 160 #include <cmath> 161 #include <iosfwd> 162 #include <iostream> // NOLINT 163 #include <limits> 164 #include <string> 165 166 #include "Eigen/Core" 167 #include "ceres/internal/port.h" 168 169 namespace ceres { 170 171 template <typename T, int N> 172 struct Jet { 173 enum { DIMENSION = N }; 174 typedef T Scalar; 175 176 // Default-construct "a" because otherwise this can lead to false errors about 177 // uninitialized uses when other classes relying on default constructed T 178 // (where T is a Jet<T, N>). This usually only happens in opt mode. Note that 179 // the C++ standard mandates that e.g. default constructed doubles are 180 // initialized to 0.0; see sections 8.5 of the C++03 standard. 181 Jet() : a() { 182 v.setZero(); 183 } 184 185 // Constructor from scalar: a + 0. 186 explicit Jet(const T& value) { 187 a = value; 188 v.setZero(); 189 } 190 191 // Constructor from scalar plus variable: a + t_i. 192 Jet(const T& value, int k) { 193 a = value; 194 v.setZero(); 195 v[k] = T(1.0); 196 } 197 198 // Constructor from scalar and vector part 199 // The use of Eigen::DenseBase allows Eigen expressions 200 // to be passed in without being fully evaluated until 201 // they are assigned to v 202 template<typename Derived> 203 EIGEN_STRONG_INLINE Jet(const T& a, const Eigen::DenseBase<Derived> &v) 204 : a(a), v(v) { 205 } 206 207 // Compound operators 208 Jet<T, N>& operator+=(const Jet<T, N> &y) { 209 *this = *this + y; 210 return *this; 211 } 212 213 Jet<T, N>& operator-=(const Jet<T, N> &y) { 214 *this = *this - y; 215 return *this; 216 } 217 218 Jet<T, N>& operator*=(const Jet<T, N> &y) { 219 *this = *this * y; 220 return *this; 221 } 222 223 Jet<T, N>& operator/=(const Jet<T, N> &y) { 224 *this = *this / y; 225 return *this; 226 } 227 228 // Compound with scalar operators. 229 Jet<T, N>& operator+=(const T& s) { 230 *this = *this + s; 231 return *this; 232 } 233 234 Jet<T, N>& operator-=(const T& s) { 235 *this = *this - s; 236 return *this; 237 } 238 239 Jet<T, N>& operator*=(const T& s) { 240 *this = *this * s; 241 return *this; 242 } 243 244 Jet<T, N>& operator/=(const T& s) { 245 *this = *this / s; 246 return *this; 247 } 248 249 // The scalar part. 250 T a; 251 252 // The infinitesimal part. 253 // 254 // We allocate Jets on the stack and other places they might not be aligned 255 // to X(=16 [SSE], 32 [AVX] etc)-byte boundaries, which would prevent the safe 256 // use of vectorisation. If we have C++11, we can specify the alignment. 257 // However, the standard gives wide latitude as to what alignments are valid, 258 // and it might be that the maximum supported alignment *guaranteed* to be 259 // supported is < 16, in which case we do not specify an alignment, as this 260 // implies the host is not a modern x86 machine. If using < C++11, we cannot 261 // specify alignment. 262 263 #if defined(EIGEN_DONT_VECTORIZE) 264 Eigen::Matrix<T, N, 1, Eigen::DontAlign> v; 265 #else 266 // Enable vectorisation iff the maximum supported scalar alignment is >= 267 // 16 bytes, as this is the minimum required by Eigen for any vectorisation. 268 // 269 // NOTE: It might be the case that we could get >= 16-byte alignment even if 270 // max_align_t < 16. However we can't guarantee that this 271 // would happen (and it should not for any modern x86 machine) and if it 272 // didn't, we could get misaligned Jets. 273 static constexpr int kAlignOrNot = 274 // Work around a GCC 4.8 bug 275 // (https://gcc.gnu.org/bugzilla/show_bug.cgi?id=56019) where 276 // std::max_align_t is misplaced. 277 #if defined (__GNUC__) && __GNUC__ == 4 && __GNUC_MINOR__ == 8 278 alignof(::max_align_t) >= 16 279 #else 280 alignof(std::max_align_t) >= 16 281 #endif 282 ? Eigen::AutoAlign : Eigen::DontAlign; 283 284 #if defined(EIGEN_MAX_ALIGN_BYTES) 285 // Eigen >= 3.3 supports AVX & FMA instructions that require 32-byte alignment 286 // (greater for AVX512). Rather than duplicating the detection logic, use 287 // Eigen's macro for the alignment size. 288 // 289 // NOTE: EIGEN_MAX_ALIGN_BYTES can be > 16 (e.g. 32 for AVX), even though 290 // kMaxAlignBytes will max out at 16. We are therefore relying on 291 // Eigen's detection logic to ensure that this does not result in 292 // misaligned Jets. 293 #define CERES_JET_ALIGN_BYTES EIGEN_MAX_ALIGN_BYTES 294 #else 295 // Eigen < 3.3 only supported 16-byte alignment. 296 #define CERES_JET_ALIGN_BYTES 16 297 #endif 298 299 // Default to the native alignment if 16-byte alignment is not guaranteed to 300 // be supported. We cannot use alignof(T) as if we do, GCC 4.8 complains that 301 // the alignment 'is not an integer constant', although Clang accepts it. 302 static constexpr size_t kAlignment = kAlignOrNot == Eigen::AutoAlign 303 ? CERES_JET_ALIGN_BYTES : alignof(double); 304 305 #undef CERES_JET_ALIGN_BYTES 306 alignas(kAlignment) Eigen::Matrix<T, N, 1, kAlignOrNot> v; 307 #endif 308 }; 309 310 // Unary + 311 template<typename T, int N> inline 312 Jet<T, N> const& operator+(const Jet<T, N>& f) { 313 return f; 314 } 315 316 // TODO(keir): Try adding __attribute__((always_inline)) to these functions to 317 // see if it causes a performance increase. 318 319 // Unary - 320 template<typename T, int N> inline 321 Jet<T, N> operator-(const Jet<T, N>&f) { 322 return Jet<T, N>(-f.a, -f.v); 323 } 324 325 // Binary + 326 template<typename T, int N> inline 327 Jet<T, N> operator+(const Jet<T, N>& f, 328 const Jet<T, N>& g) { 329 return Jet<T, N>(f.a + g.a, f.v + g.v); 330 } 331 332 // Binary + with a scalar: x + s 333 template<typename T, int N> inline 334 Jet<T, N> operator+(const Jet<T, N>& f, T s) { 335 return Jet<T, N>(f.a + s, f.v); 336 } 337 338 // Binary + with a scalar: s + x 339 template<typename T, int N> inline 340 Jet<T, N> operator+(T s, const Jet<T, N>& f) { 341 return Jet<T, N>(f.a + s, f.v); 342 } 343 344 // Binary - 345 template<typename T, int N> inline 346 Jet<T, N> operator-(const Jet<T, N>& f, 347 const Jet<T, N>& g) { 348 return Jet<T, N>(f.a - g.a, f.v - g.v); 349 } 350 351 // Binary - with a scalar: x - s 352 template<typename T, int N> inline 353 Jet<T, N> operator-(const Jet<T, N>& f, T s) { 354 return Jet<T, N>(f.a - s, f.v); 355 } 356 357 // Binary - with a scalar: s - x 358 template<typename T, int N> inline 359 Jet<T, N> operator-(T s, const Jet<T, N>& f) { 360 return Jet<T, N>(s - f.a, -f.v); 361 } 362 363 // Binary * 364 template<typename T, int N> inline 365 Jet<T, N> operator*(const Jet<T, N>& f, 366 const Jet<T, N>& g) { 367 return Jet<T, N>(f.a * g.a, f.a * g.v + f.v * g.a); 368 } 369 370 // Binary * with a scalar: x * s 371 template<typename T, int N> inline 372 Jet<T, N> operator*(const Jet<T, N>& f, T s) { 373 return Jet<T, N>(f.a * s, f.v * s); 374 } 375 376 // Binary * with a scalar: s * x 377 template<typename T, int N> inline 378 Jet<T, N> operator*(T s, const Jet<T, N>& f) { 379 return Jet<T, N>(f.a * s, f.v * s); 380 } 381 382 // Binary / 383 template<typename T, int N> inline 384 Jet<T, N> operator/(const Jet<T, N>& f, 385 const Jet<T, N>& g) { 386 // This uses: 387 // 388 // a + u (a + u)(b - v) (a + u)(b - v) 389 // ----- = -------------- = -------------- 390 // b + v (b + v)(b - v) b^2 391 // 392 // which holds because v*v = 0. 393 const T g_a_inverse = T(1.0) / g.a; 394 const T f_a_by_g_a = f.a * g_a_inverse; 395 return Jet<T, N>(f_a_by_g_a, (f.v - f_a_by_g_a * g.v) * g_a_inverse); 396 } 397 398 // Binary / with a scalar: s / x 399 template<typename T, int N> inline 400 Jet<T, N> operator/(T s, const Jet<T, N>& g) { 401 const T minus_s_g_a_inverse2 = -s / (g.a * g.a); 402 return Jet<T, N>(s / g.a, g.v * minus_s_g_a_inverse2); 403 } 404 405 // Binary / with a scalar: x / s 406 template<typename T, int N> inline 407 Jet<T, N> operator/(const Jet<T, N>& f, T s) { 408 const T s_inverse = T(1.0) / s; 409 return Jet<T, N>(f.a * s_inverse, f.v * s_inverse); 410 } 411 412 // Binary comparison operators for both scalars and jets. 413 #define CERES_DEFINE_JET_COMPARISON_OPERATOR(op) 414 template<typename T, int N> inline 415 bool operator op(const Jet<T, N>& f, const Jet<T, N>& g) { 416 return f.a op g.a; 417 } 418 template<typename T, int N> inline 419 bool operator op(const T& s, const Jet<T, N>& g) { 420 return s op g.a; 421 } 422 template<typename T, int N> inline 423 bool operator op(const Jet<T, N>& f, const T& s) { 424 return f.a op s; 425 } 426 CERES_DEFINE_JET_COMPARISON_OPERATOR( < ) // NOLINT 427 CERES_DEFINE_JET_COMPARISON_OPERATOR( <= ) // NOLINT 428 CERES_DEFINE_JET_COMPARISON_OPERATOR( > ) // NOLINT 429 CERES_DEFINE_JET_COMPARISON_OPERATOR( >= ) // NOLINT 430 CERES_DEFINE_JET_COMPARISON_OPERATOR( == ) // NOLINT 431 CERES_DEFINE_JET_COMPARISON_OPERATOR( != ) // NOLINT 432 #undef CERES_DEFINE_JET_COMPARISON_OPERATOR 433 434 // Pull some functions from namespace std. 435 // 436 // This is necessary because we want to use the same name (e.g. 'sqrt') for 437 // double-valued and Jet-valued functions, but we are not allowed to put 438 // Jet-valued functions inside namespace std. 439 using std::abs; 440 using std::acos; 441 using std::asin; 442 using std::atan; 443 using std::atan2; 444 using std::cbrt; 445 using std::ceil; 446 using std::cos; 447 using std::cosh; 448 using std::exp; 449 using std::exp2; 450 using std::floor; 451 using std::fmax; 452 using std::fmin; 453 using std::hypot; 454 using std::isfinite; 455 using std::isinf; 456 using std::isnan; 457 using std::isnormal; 458 using std::log; 459 using std::log2; 460 using std::pow; 461 using std::sin; 462 using std::sinh; 463 using std::sqrt; 464 using std::tan; 465 using std::tanh; 466 467 // Legacy names from pre-C++11 days. 468 inline bool IsFinite (double x) { return std::isfinite(x); } 469 inline bool IsInfinite(double x) { return std::isinf(x); } 470 inline bool IsNaN (double x) { return std::isnan(x); } 471 inline bool IsNormal (double x) { return std::isnormal(x); } 472 473 // In general, f(a + h) ~= f(a) + f'(a) h, via the chain rule. 474 475 // abs(x + h) ~= x + h or -(x + h) 476 template <typename T, int N> inline 477 Jet<T, N> abs(const Jet<T, N>& f) { 478 return f.a < T(0.0) ? -f : f; 479 } 480 481 // log(a + h) ~= log(a) + h / a 482 template <typename T, int N> inline 483 Jet<T, N> log(const Jet<T, N>& f) { 484 const T a_inverse = T(1.0) / f.a; 485 return Jet<T, N>(log(f.a), f.v * a_inverse); 486 } 487 488 // exp(a + h) ~= exp(a) + exp(a) h 489 template <typename T, int N> inline 490 Jet<T, N> exp(const Jet<T, N>& f) { 491 const T tmp = exp(f.a); 492 return Jet<T, N>(tmp, tmp * f.v); 493 } 494 495 // sqrt(a + h) ~= sqrt(a) + h / (2 sqrt(a)) 496 template <typename T, int N> inline 497 Jet<T, N> sqrt(const Jet<T, N>& f) { 498 const T tmp = sqrt(f.a); 499 const T two_a_inverse = T(1.0) / (T(2.0) * tmp); 500 return Jet<T, N>(tmp, f.v * two_a_inverse); 501 } 502 503 // cos(a + h) ~= cos(a) - sin(a) h 504 template <typename T, int N> inline 505 Jet<T, N> cos(const Jet<T, N>& f) { 506 return Jet<T, N>(cos(f.a), - sin(f.a) * f.v); 507 } 508 509 // acos(a + h) ~= acos(a) - 1 / sqrt(1 - a^2) h 510 template <typename T, int N> inline 511 Jet<T, N> acos(const Jet<T, N>& f) { 512 const T tmp = - T(1.0) / sqrt(T(1.0) - f.a * f.a); 513 return Jet<T, N>(acos(f.a), tmp * f.v); 514 } 515 516 // sin(a + h) ~= sin(a) + cos(a) h 517 template <typename T, int N> inline 518 Jet<T, N> sin(const Jet<T, N>& f) { 519 return Jet<T, N>(sin(f.a), cos(f.a) * f.v); 520 } 521 522 // asin(a + h) ~= asin(a) + 1 / sqrt(1 - a^2) h 523 template <typename T, int N> inline 524 Jet<T, N> asin(const Jet<T, N>& f) { 525 const T tmp = T(1.0) / sqrt(T(1.0) - f.a * f.a); 526 return Jet<T, N>(asin(f.a), tmp * f.v); 527 } 528 529 // tan(a + h) ~= tan(a) + (1 + tan(a)^2) h 530 template <typename T, int N> inline 531 Jet<T, N> tan(const Jet<T, N>& f) { 532 const T tan_a = tan(f.a); 533 const T tmp = T(1.0) + tan_a * tan_a; 534 return Jet<T, N>(tan_a, tmp * f.v); 535 } 536 537 // atan(a + h) ~= atan(a) + 1 / (1 + a^2) h 538 template <typename T, int N> inline 539 Jet<T, N> atan(const Jet<T, N>& f) { 540 const T tmp = T(1.0) / (T(1.0) + f.a * f.a); 541 return Jet<T, N>(atan(f.a), tmp * f.v); 542 } 543 544 // sinh(a + h) ~= sinh(a) + cosh(a) h 545 template <typename T, int N> inline 546 Jet<T, N> sinh(const Jet<T, N>& f) { 547 return Jet<T, N>(sinh(f.a), cosh(f.a) * f.v); 548 } 549 550 // cosh(a + h) ~= cosh(a) + sinh(a) h 551 template <typename T, int N> inline 552 Jet<T, N> cosh(const Jet<T, N>& f) { 553 return Jet<T, N>(cosh(f.a), sinh(f.a) * f.v); 554 } 555 556 // tanh(a + h) ~= tanh(a) + (1 - tanh(a)^2) h 557 template <typename T, int N> inline 558 Jet<T, N> tanh(const Jet<T, N>& f) { 559 const T tanh_a = tanh(f.a); 560 const T tmp = T(1.0) - tanh_a * tanh_a; 561 return Jet<T, N>(tanh_a, tmp * f.v); 562 } 563 564 // The floor function should be used with extreme care as this operation will 565 // result in a zero derivative which provides no information to the solver. 566 // 567 // floor(a + h) ~= floor(a) + 0 568 template <typename T, int N> inline 569 Jet<T, N> floor(const Jet<T, N>& f) { 570 return Jet<T, N>(floor(f.a)); 571 } 572 573 // The ceil function should be used with extreme care as this operation will 574 // result in a zero derivative which provides no information to the solver. 575 // 576 // ceil(a + h) ~= ceil(a) + 0 577 template <typename T, int N> inline 578 Jet<T, N> ceil(const Jet<T, N>& f) { 579 return Jet<T, N>(ceil(f.a)); 580 } 581 582 // Some new additions to C++11: 583 584 // cbrt(a + h) ~= cbrt(a) + h / (3 a ^ (2/3)) 585 template <typename T, int N> inline 586 Jet<T, N> cbrt(const Jet<T, N>& f) { 587 const T derivative = T(1.0) / (T(3.0) * cbrt(f.a * f.a)); 588 return Jet<T, N>(cbrt(f.a), f.v * derivative); 589 } 590 591 // exp2(x + h) = 2^(x+h) ~= 2^x + h*2^x*log(2) 592 template <typename T, int N> inline 593 Jet<T, N> exp2(const Jet<T, N>& f) { 594 const T tmp = exp2(f.a); 595 const T derivative = tmp * log(T(2)); 596 return Jet<T, N>(tmp, f.v * derivative); 597 } 598 599 // log2(x + h) ~= log2(x) + h / (x * log(2)) 600 template <typename T, int N> inline 601 Jet<T, N> log2(const Jet<T, N>& f) { 602 const T derivative = T(1.0) / (f.a * log(T(2))); 603 return Jet<T, N>(log2(f.a), f.v * derivative); 604 } 605 606 // Like sqrt(x^2 + y^2), 607 // but acts to prevent underflow/overflow for small/large x/y. 608 // Note that the function is non-smooth at x=y=0, 609 // so the derivative is undefined there. 610 template <typename T, int N> inline 611 Jet<T, N> hypot(const Jet<T, N>& x, const Jet<T, N>& y) { 612 // d/da sqrt(a) = 0.5 / sqrt(a) 613 // d/dx x^2 + y^2 = 2x 614 // So by the chain rule: 615 // d/dx sqrt(x^2 + y^2) = 0.5 / sqrt(x^2 + y^2) * 2x = x / sqrt(x^2 + y^2) 616 // d/dy sqrt(x^2 + y^2) = y / sqrt(x^2 + y^2) 617 const T tmp = hypot(x.a, y.a); 618 return Jet<T, N>(tmp, x.a / tmp * x.v + y.a / tmp * y.v); 619 } 620 621 template <typename T, int N> inline 622 const Jet<T, N>& fmax(const Jet<T, N>& x, const Jet<T, N>& y) { 623 return x < y ? y : x; 624 } 625 626 template <typename T, int N> inline 627 const Jet<T, N>& fmin(const Jet<T, N>& x, const Jet<T, N>& y) { 628 return y < x ? y : x; 629 } 630 631 // Bessel functions of the first kind with integer order equal to 0, 1, n. 632 // 633 // Microsoft has deprecated the j[0,1,n]() POSIX Bessel functions in favour of 634 // _j[0,1,n](). Where available on MSVC, use _j[0,1,n]() to avoid deprecated 635 // function errors in client code (the specific warning is suppressed when 636 // Ceres itself is built). 637 inline double BesselJ0(double x) { 638 #if defined(CERES_MSVC_USE_UNDERSCORE_PREFIXED_BESSEL_FUNCTIONS) 639 return _j0(x); 640 #else 641 return j0(x); 642 #endif 643 } 644 inline double BesselJ1(double x) { 645 #if defined(CERES_MSVC_USE_UNDERSCORE_PREFIXED_BESSEL_FUNCTIONS) 646 return _j1(x); 647 #else 648 return j1(x); 649 #endif 650 } 651 inline double BesselJn(int n, double x) { 652 #if defined(CERES_MSVC_USE_UNDERSCORE_PREFIXED_BESSEL_FUNCTIONS) 653 return _jn(n, x); 654 #else 655 return jn(n, x); 656 #endif 657 } 658 659 // For the formulae of the derivatives of the Bessel functions see the book: 660 // Olver, Lozier, Boisvert, Clark, NIST Handbook of Mathematical Functions, 661 // Cambridge University Press 2010. 662 // 663 // Formulae are also available at http://dlmf.nist.gov 664 665 // See formula http://dlmf.nist.gov/10.6#E3 666 // j0(a + h) ~= j0(a) - j1(a) h 667 template <typename T, int N> inline 668 Jet<T, N> BesselJ0(const Jet<T, N>& f) { 669 return Jet<T, N>(BesselJ0(f.a), 670 -BesselJ1(f.a) * f.v); 671 } 672 673 // See formula http://dlmf.nist.gov/10.6#E1 674 // j1(a + h) ~= j1(a) + 0.5 ( j0(a) - j2(a) ) h 675 template <typename T, int N> inline 676 Jet<T, N> BesselJ1(const Jet<T, N>& f) { 677 return Jet<T, N>(BesselJ1(f.a), 678 T(0.5) * (BesselJ0(f.a) - BesselJn(2, f.a)) * f.v); 679 } 680 681 // See formula http://dlmf.nist.gov/10.6#E1 682 // j_n(a + h) ~= j_n(a) + 0.5 ( j_{n-1}(a) - j_{n+1}(a) ) h 683 template <typename T, int N> inline 684 Jet<T, N> BesselJn(int n, const Jet<T, N>& f) { 685 return Jet<T, N>(BesselJn(n, f.a), 686 T(0.5) * (BesselJn(n - 1, f.a) - BesselJn(n + 1, f.a)) * f.v); 687 } 688 689 // Jet Classification. It is not clear what the appropriate semantics are for 690 // these classifications. This picks that std::isfinite and std::isnormal are "all" 691 // operations, i.e. all elements of the jet must be finite for the jet itself 692 // to be finite (or normal). For IsNaN and IsInfinite, the answer is less 693 // clear. This takes a "any" approach for IsNaN and IsInfinite such that if any 694 // part of a jet is nan or inf, then the entire jet is nan or inf. This leads 695 // to strange situations like a jet can be both IsInfinite and IsNaN, but in 696 // practice the "any" semantics are the most useful for e.g. checking that 697 // derivatives are sane. 698 699 // The jet is finite if all parts of the jet are finite. 700 template <typename T, int N> inline 701 bool isfinite(const Jet<T, N>& f) { 702 if (!std::isfinite(f.a)) { 703 return false; 704 } 705 for (int i = 0; i < N; ++i) { 706 if (!std::isfinite(f.v[i])) { 707 return false; 708 } 709 } 710 return true; 711 } 712 713 // The jet is infinite if any part of the Jet is infinite. 714 template <typename T, int N> inline 715 bool isinf(const Jet<T, N>& f) { 716 if (std::isinf(f.a)) { 717 return true; 718 } 719 for (int i = 0; i < N; ++i) { 720 if (std::isinf(f.v[i])) { 721 return true; 722 } 723 } 724 return false; 725 } 726 727 728 // The jet is NaN if any part of the jet is NaN. 729 template <typename T, int N> inline 730 bool isnan(const Jet<T, N>& f) { 731 if (std::isnan(f.a)) { 732 return true; 733 } 734 for (int i = 0; i < N; ++i) { 735 if (std::isnan(f.v[i])) { 736 return true; 737 } 738 } 739 return false; 740 } 741 742 // The jet is normal if all parts of the jet are normal. 743 template <typename T, int N> inline 744 bool isnormal(const Jet<T, N>& f) { 745 if (!std::isnormal(f.a)) { 746 return false; 747 } 748 for (int i = 0; i < N; ++i) { 749 if (!std::isnormal(f.v[i])) { 750 return false; 751 } 752 } 753 return true; 754 } 755 756 // Legacy functions from the pre-C++11 days. 757 template <typename T, int N> 758 inline bool IsFinite(const Jet<T, N>& f) { 759 return isfinite(f); 760 } 761 762 template <typename T, int N> 763 inline bool IsNaN(const Jet<T, N>& f) { 764 return isnan(f); 765 } 766 767 template <typename T, int N> 768 inline bool IsNormal(const Jet<T, N>& f) { 769 return isnormal(f); 770 } 771 772 // The jet is infinite if any part of the jet is infinite. 773 template <typename T, int N> inline 774 bool IsInfinite(const Jet<T, N>& f) { 775 return isinf(f); 776 } 777 778 // atan2(b + db, a + da) ~= atan2(b, a) + (- b da + a db) / (a^2 + b^2) 779 // 780 // In words: the rate of change of theta is 1/r times the rate of 781 // change of (x, y) in the positive angular direction. 782 template <typename T, int N> inline 783 Jet<T, N> atan2(const Jet<T, N>& g, const Jet<T, N>& f) { 784 // Note order of arguments: 785 // 786 // f = a + da 787 // g = b + db 788 789 T const tmp = T(1.0) / (f.a * f.a + g.a * g.a); 790 return Jet<T, N>(atan2(g.a, f.a), tmp * (- g.a * f.v + f.a * g.v)); 791 } 792 793 794 // pow -- base is a differentiable function, exponent is a constant. 795 // (a+da)^p ~= a^p + p*a^(p-1) da 796 template <typename T, int N> inline 797 Jet<T, N> pow(const Jet<T, N>& f, double g) { 798 T const tmp = g * pow(f.a, g - T(1.0)); 799 return Jet<T, N>(pow(f.a, g), tmp * f.v); 800 } 801 802 // pow -- base is a constant, exponent is a differentiable function. 803 // We have various special cases, see the comment for pow(Jet, Jet) for 804 // analysis: 805 // 806 // 1. For f > 0 we have: (f)^(g + dg) ~= f^g + f^g log(f) dg 807 // 808 // 2. For f == 0 and g > 0 we have: (f)^(g + dg) ~= f^g 809 // 810 // 3. For f < 0 and integer g we have: (f)^(g + dg) ~= f^g but if dg 811 // != 0, the derivatives are not defined and we return NaN. 812 813 template <typename T, int N> inline 814 Jet<T, N> pow(double f, const Jet<T, N>& g) { 815 if (f == 0 && g.a > 0) { 816 // Handle case 2. 817 return Jet<T, N>(T(0.0)); 818 } 819 if (f < 0 && g.a == floor(g.a)) { 820 // Handle case 3. 821 Jet<T, N> ret(pow(f, g.a)); 822 for (int i = 0; i < N; i++) { 823 if (g.v[i] != T(0.0)) { 824 // Return a NaN when g.v != 0. 825 ret.v[i] = std::numeric_limits<T>::quiet_NaN(); 826 } 827 } 828 return ret; 829 } 830 // Handle case 1. 831 T const tmp = pow(f, g.a); 832 return Jet<T, N>(tmp, log(f) * tmp * g.v); 833 } 834 835 // pow -- both base and exponent are differentiable functions. This has a 836 // variety of special cases that require careful handling. 837 // 838 // 1. For f > 0: 839 // (f + df)^(g + dg) ~= f^g + f^(g - 1) * (g * df + f * log(f) * dg) 840 // The numerical evaluation of f * log(f) for f > 0 is well behaved, even for 841 // extremely small values (e.g. 1e-99). 842 // 843 // 2. For f == 0 and g > 1: (f + df)^(g + dg) ~= 0 844 // This cases is needed because log(0) can not be evaluated in the f > 0 845 // expression. However the function f*log(f) is well behaved around f == 0 846 // and its limit as f-->0 is zero. 847 // 848 // 3. For f == 0 and g == 1: (f + df)^(g + dg) ~= 0 + df 849 // 850 // 4. For f == 0 and 0 < g < 1: The value is finite but the derivatives are not. 851 // 852 // 5. For f == 0 and g < 0: The value and derivatives of f^g are not finite. 853 // 854 // 6. For f == 0 and g == 0: The C standard incorrectly defines 0^0 to be 1 855 // "because there are applications that can exploit this definition". We 856 // (arbitrarily) decree that derivatives here will be nonfinite, since that 857 // is consistent with the behavior for f == 0, g < 0 and 0 < g < 1. 858 // Practically any definition could have been justified because mathematical 859 // consistency has been lost at this point. 860 // 861 // 7. For f < 0, g integer, dg == 0: (f + df)^(g + dg) ~= f^g + g * f^(g - 1) df 862 // This is equivalent to the case where f is a differentiable function and g 863 // is a constant (to first order). 864 // 865 // 8. For f < 0, g integer, dg != 0: The value is finite but the derivatives are 866 // not, because any change in the value of g moves us away from the point 867 // with a real-valued answer into the region with complex-valued answers. 868 // 869 // 9. For f < 0, g noninteger: The value and derivatives of f^g are not finite. 870 871 template <typename T, int N> inline 872 Jet<T, N> pow(const Jet<T, N>& f, const Jet<T, N>& g) { 873 if (f.a == 0 && g.a >= 1) { 874 // Handle cases 2 and 3. 875 if (g.a > 1) { 876 return Jet<T, N>(T(0.0)); 877 } 878 return f; 879 } 880 if (f.a < 0 && g.a == floor(g.a)) { 881 // Handle cases 7 and 8. 882 T const tmp = g.a * pow(f.a, g.a - T(1.0)); 883 Jet<T, N> ret(pow(f.a, g.a), tmp * f.v); 884 for (int i = 0; i < N; i++) { 885 if (g.v[i] != T(0.0)) { 886 // Return a NaN when g.v != 0. 887 ret.v[i] = std::numeric_limits<T>::quiet_NaN(); 888 } 889 } 890 return ret; 891 } 892 // Handle the remaining cases. For cases 4,5,6,9 we allow the log() function 893 // to generate -HUGE_VAL or NaN, since those cases result in a nonfinite 894 // derivative. 895 T const tmp1 = pow(f.a, g.a); 896 T const tmp2 = g.a * pow(f.a, g.a - T(1.0)); 897 T const tmp3 = tmp1 * log(f.a); 898 return Jet<T, N>(tmp1, tmp2 * f.v + tmp3 * g.v); 899 } 900 901 // Note: This has to be in the ceres namespace for argument dependent lookup to 902 // function correctly. Otherwise statements like CHECK_LE(x, 2.0) fail with 903 // strange compile errors. 904 template <typename T, int N> 905 inline std::ostream &operator<<(std::ostream &s, const Jet<T, N>& z) { 906 s << "[" << z.a << " ; "; 907 for (int i = 0; i < N; ++i) { 908 s << z.v[i]; 909 if (i != N - 1) { 910 s << ", "; 911 } 912 } 913 s << "]"; 914 return s; 915 } 916 917 } // namespace ceres 918 919 namespace Eigen { 920 921 // Creating a specialization of NumTraits enables placing Jet objects inside 922 // Eigen arrays, getting all the goodness of Eigen combined with autodiff. 923 template<typename T, int N> 924 struct NumTraits<ceres::Jet<T, N>> { 925 typedef ceres::Jet<T, N> Real; 926 typedef ceres::Jet<T, N> NonInteger; 927 typedef ceres::Jet<T, N> Nested; 928 typedef ceres::Jet<T, N> Literal; 929 930 static typename ceres::Jet<T, N> dummy_precision() { 931 return ceres::Jet<T, N>(1e-12); 932 } 933 934 static inline Real epsilon() { 935 return Real(std::numeric_limits<T>::epsilon()); 936 } 937 938 static inline int digits10() { return NumTraits<T>::digits10(); } 939 940 enum { 941 IsComplex = 0, 942 IsInteger = 0, 943 IsSigned, 944 ReadCost = 1, 945 AddCost = 1, 946 // For Jet types, multiplication is more expensive than addition. 947 MulCost = 3, 948 HasFloatingPoint = 1, 949 RequireInitialization = 1 950 }; 951 952 template<bool Vectorized> 953 struct Div { 954 enum { 955 #if defined(EIGEN_VECTORIZE_AVX) 956 AVX = true, 957 #else 958 AVX = false, 959 #endif 960 961 // Assuming that for Jets, division is as expensive as 962 // multiplication. 963 Cost = 3 964 }; 965 }; 966 967 static inline Real highest() { return Real(std::numeric_limits<T>::max()); } 968 static inline Real lowest() { return Real(-std::numeric_limits<T>::max()); } 969 }; 970 971 #if EIGEN_VERSION_AT_LEAST(3, 3, 0) 972 // Specifying the return type of binary operations between Jets and scalar types 973 // allows you to perform matrix/array operations with Eigen matrices and arrays 974 // such as addition, subtraction, multiplication, and division where one Eigen 975 // matrix/array is of type Jet and the other is a scalar type. This improves 976 // performance by using the optimized scalar-to-Jet binary operations but 977 // is only available on Eigen versions >= 3.3 978 template <typename BinaryOp, typename T, int N> 979 struct ScalarBinaryOpTraits<ceres::Jet<T, N>, T, BinaryOp> { 980 typedef ceres::Jet<T, N> ReturnType; 981 }; 982 template <typename BinaryOp, typename T, int N> 983 struct ScalarBinaryOpTraits<T, ceres::Jet<T, N>, BinaryOp> { 984 typedef ceres::Jet<T, N> ReturnType; 985 }; 986 #endif // EIGEN_VERSION_AT_LEAST(3, 3, 0) 987 988 } // namespace Eigen 989 990 #endif // CERES_PUBLIC_JET_H_

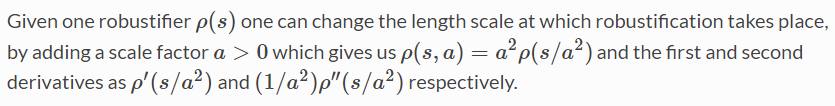

Modeling Non-linear Least Squares

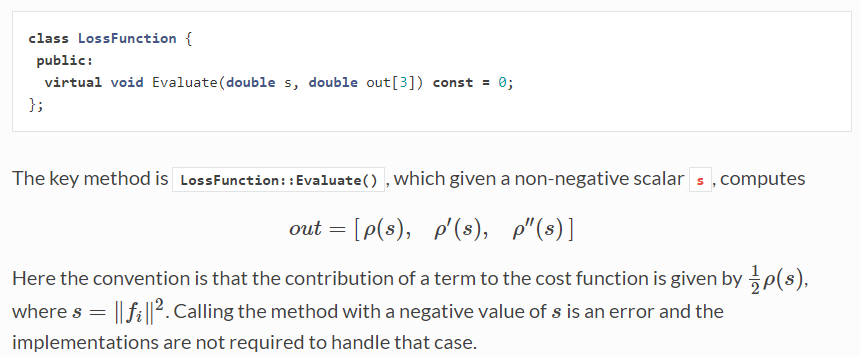

CostFunction

- CostFunction

is responsible for computing a vector of residules and Jacobian matrices.

- SizedCostFunction

SizedCostFunction继承自CostFunction,can be used if the size of parameter blocks and the size of residual vector is known at compile time(this is the common case).

- AutoDiffCostFunction

AutoDiffCostFunction继承自SizedCostFunction,要求参数块数目以及每个参数块大小在编译时已知,要求参数块数目不超过10个。can compute derivatives automaticlly.To get an auto differentiated cost function, you must define a class with a templated operator()(a functor) that computes the cost function in terms of the template parameter T. The autodiff framework substitutes appropriate Jet objects for T in order to compute the derivative when necessary, but this is hidden, and you should write the function as if T were a scalar type (e.g. a double-precision floating point number).

- DynamicAutoDiffCostFunction

can be used when the number of parameter blocks and their sizes not known at compile time.

- NumericDiffCostFunction

can be used when the evaluation of the residual involves a call to library function that you do not have control over.

- ConditionedCostFunction

allows to apply different conditioning to the residual values of a wrapped cost function.

LossFunction

LossFunction reduces the influence of outliers, this leads to outlier terms getting downweighted so they do not overly influence the final solution.

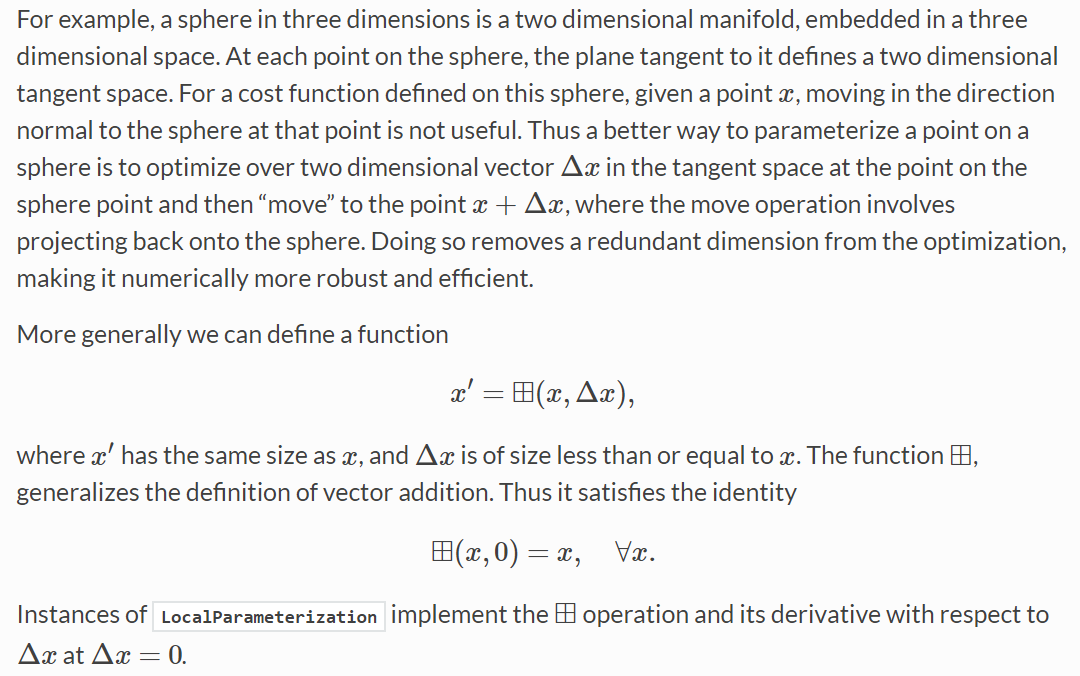

LocalParameterization

Trust Region 信赖域

Trust region methods are in some sense dual to line search methods:trust region methods first choose a step size(the size of trust region) and then a step direction while line search methods first choose a step direction and then a step size.

Levenberg-Marquardt

Dogleg

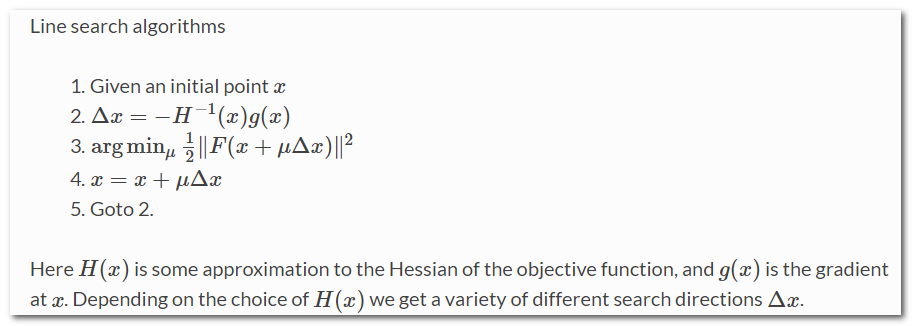

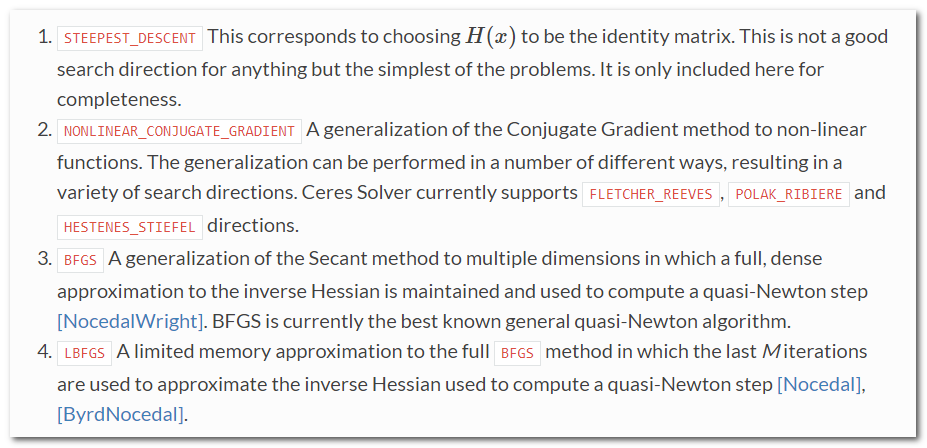

Line Search Methods

The line search method in Ceres Solver cannot handle bounds constraints right now, so it can only be used for solving unconstrained problems.