I. Environment preparation

Purchase three servers on Alibaba Cloud (Aliyun). Open security-group rules later, as each one is needed.

1) Disable the firewall (already disabled by default on Alibaba Cloud servers)

2) Disable SELinux (already disabled by default on Alibaba Cloud servers)

3) Disable swap (already disabled by default on Alibaba Cloud servers)

On Alibaba Cloud servers, none of the above needs to be configured.

4) Add hostname-to-IP mappings (on all three servers)

[root@k8s-1 ~]# cat /etc/hosts

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

172.30.36.1 k8s-1 k8s-1 # Alibaba Cloud adds this entry by default

172.30.36.2 k8s-2 k8s-2

172.30.36.3 k8s-3 k8s-3
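You repeat this step on all three servers, so making it idempotent helps. The following is a minimal sketch that assumes bash and awk. `add_host_entry` is a hypothetical helper that appends an `IP hostname` line only when the hostname is not already mapped. `HOSTS_FILE` is an added override so you can try it on a scratch file first.

```shell
#!/usr/bin/env bash
# Sketch: add an "IP hostname" line to a hosts file only if the hostname is
# not already mapped there, so the step is safe to re-run on every node.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
  local ip="$1" name="$2"
  # Strip comments, then look for the hostname in any name column (field 2+).
  if [ -f "$HOSTS_FILE" ] && awk -v n="$name" '
      { sub(/#.*/, "") }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit (found ? 0 : 1) }' "$HOSTS_FILE"; then
    echo "skip: $name already present in $HOSTS_FILE"
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# Example (as root, on each node):
#   add_host_entry 172.30.36.1 k8s-1
#   add_host_entry 172.30.36.2 k8s-2
#   add_host_entry 172.30.36.3 k8s-3
```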

5) Pass bridged IPv4 traffic to the iptables chains

#Kernel parameters

[root@k8s-1 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

#Apply the settings

[root@k8s-1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

#These sysctls only exist once the br_netfilter kernel module is loaded, so load it and try again

[root@k8s-1 ~]# modprobe br_netfilter

[root@k8s-1 ~]# ls /proc/sys/net/bridge

bridge-nf-call-arptables bridge-nf-call-ip6tables bridge-nf-call-iptables bridge-nf-filter-pppoe-tagged bridge-nf-filter-vlan-tagged bridge-nf-pass-vlan-input-dev

[root@k8s-1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
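`modprobe` loads br_netfilter only for the current boot, so after a reboot the bridge sysctls may be missing again. One way to persist the module is a file under `/etc/modules-load.d/`, which systemd-modules-load reads at boot. The sketch below assumes a systemd-based host such as CentOS 7. `write_k8s_netconf` and its optional root-directory argument are hypothetical; the argument lets you generate the files in a test directory.

```shell
#!/usr/bin/env bash
# Sketch: make the br_netfilter module and the bridge sysctls survive a reboot.
# systemd-modules-load reads /etc/modules-load.d/*.conf at boot. The optional
# root prefix is a hypothetical parameter for writing into a test directory.
write_k8s_netconf() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Load br_netfilter automatically at every boot.
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # The same two kernel parameters as in the step above.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# On a real node (as root):
#   write_k8s_netconf
#   modprobe br_netfilter
#   sysctl --system
```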

II. Docker installation

Install on all three servers.

[root@k8s-1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-1 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

[root@k8s-1 ~]# yum install -y docker-ce docker-ce-cli containerd.io

[root@k8s-1 ~]# mkdir -p /etc/docker

[root@k8s-1 ~]# cat >/etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://t09ww3n9.mirror.aliyuncs.com"]

}

EOF

[root@k8s-1 ~]# systemctl daemon-reload && systemctl start docker && systemctl enable docker
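Docker refuses to start if `daemon.json` is not valid JSON. Also, the mirror address `https://t09ww3n9.mirror.aliyuncs.com` belongs to the author's Alibaba Cloud account. Each account gets its own accelerator address in the Container Registry console. The sketch below uses a hypothetical helper, `check_daemon_json`, to confirm the file parses and to print the configured mirrors. It assumes `python3` (or `python` 2.7 on stock CentOS 7) is installed and uses it only for the standard-library `json` module.

```shell
#!/usr/bin/env bash
# Sketch: verify that a Docker daemon.json parses as JSON and print its
# registry mirrors, one per line. check_daemon_json is a hypothetical helper.
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}" py
  py=$(command -v python3 || command -v python) || {
    echo "python not found" >&2
    return 2
  }
  # json.tool exits non-zero and reports where the syntax error is.
  if ! "$py" -m json.tool "$file" > /dev/null; then
    echo "invalid JSON: $file" >&2
    return 1
  fi
  # Print every entry of the "registry-mirrors" array.
  "$py" - "$file" <<'EOF'
import json, sys
with open(sys.argv[1]) as f:
    for url in json.load(f).get("registry-mirrors", []):
        print(url)
EOF
}

# Usage (before restarting Docker):
#   check_daemon_json /etc/docker/daemon.json
```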

[root@k8s-1 ~]# docker version

III. Installing Kubernetes

1) Add the Kubernetes yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2) Install pinned versions of kubelet, kubeadm and kubectl

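[root@k8s-1 ~]# yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3

[root@k8s-1 ~]# systemctl enable kubelet && systemctl start kubelet #kubelet keeps restarting at this point; this is expected until kubeadm init (or kubeadm join) gives it a configuration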

3) Initialize the master node

You can first run a script to pull the images in advance:

[root@k8s-1 k8s]# cat master_images.sh

#!/bin/bash

images=(

kube-apiserver:v1.17.3

kube-proxy:v1.17.3

kube-controller-manager:v1.17.3

kube-scheduler:v1.17.3

coredns:1.6.5

etcd:3.4.3-0

pause:3.1

)

for imageName in "${images[@]}" ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

#Retagging is not needed: kubeadm init is given --image-repository pointing at this same mirror

#docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

done
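You have to keep the hard-coded image list in sync with the Kubernetes version by hand. As an alternative, kubeadm can print the exact images it will use via `kubeadm config images list`, given the same `--kubernetes-version` and `--image-repository` flags passed to `kubeadm init`. The sketch below takes that approach. `pull_images` and the `DRY_RUN` switch are hypothetical additions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: pull the control-plane images for one Kubernetes version from the
# Aliyun mirror, taking the names from kubeadm instead of a hand-written list.
# DRY_RUN=1 (a hypothetical switch) only prints the docker commands.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
K8S_VERSION="v1.17.3"

pull_images() {
  local ref
  # `kubeadm config images list` prints one fully qualified image per line.
  kubeadm config images list \
      --kubernetes-version "$K8S_VERSION" \
      --image-repository "$MIRROR" |
  while read -r ref; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "docker pull $ref"
    else
      docker pull "$ref"
    fi
  done
}
```

`kubeadm config images pull` accepts the same two flags and does the pull in a single step, if you prefer not to script it.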

[root@k8s-1 k8s]# chmod +x master_images.sh

[root@k8s-1 k8s]# ./master_images.sh

Initialize the master node:

kubeadm init --apiserver-advertise-address=172.30.36.1 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.17.3 --service-cidr=10.96.0.0/16 --pod-network-cidr=10.244.0.0/16

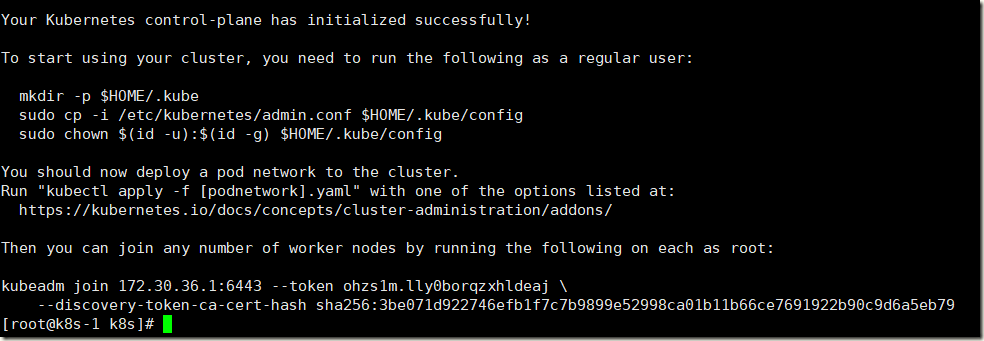

Then run the following commands:

[root@k8s-1 k8s]# mkdir -p $HOME/.kube

[root@k8s-1 k8s]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-1 k8s]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-1 k8s]# kubectl get nodes #The master stays NotReady until the pod network add-on is installed

NAME STATUS ROLES AGE VERSION

k8s-1 NotReady master 2m13s v1.17.3

4) Install the flannel network add-on

The kube-flannel.yml file is available at:

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s-1 k8s]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8s-1 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-kqjr9 1/1 Running 0 15m

kube-system coredns-7f9c544f75-ztg6j 1/1 Running 0 15m

kube-system etcd-k8s-1 1/1 Running 0 15m

kube-system kube-apiserver-k8s-1 1/1 Running 0 15m

kube-system kube-controller-manager-k8s-1 1/1 Running 0 15m

kube-system kube-flannel-ds-amd64-l4754 1/1 Running 0 5m55s

kube-system kube-proxy-288xw 1/1 Running 0 15m

kube-system kube-scheduler-k8s-1 1/1 Running 0 15m

[root@k8s-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-1 Ready master 22m v1.17.3

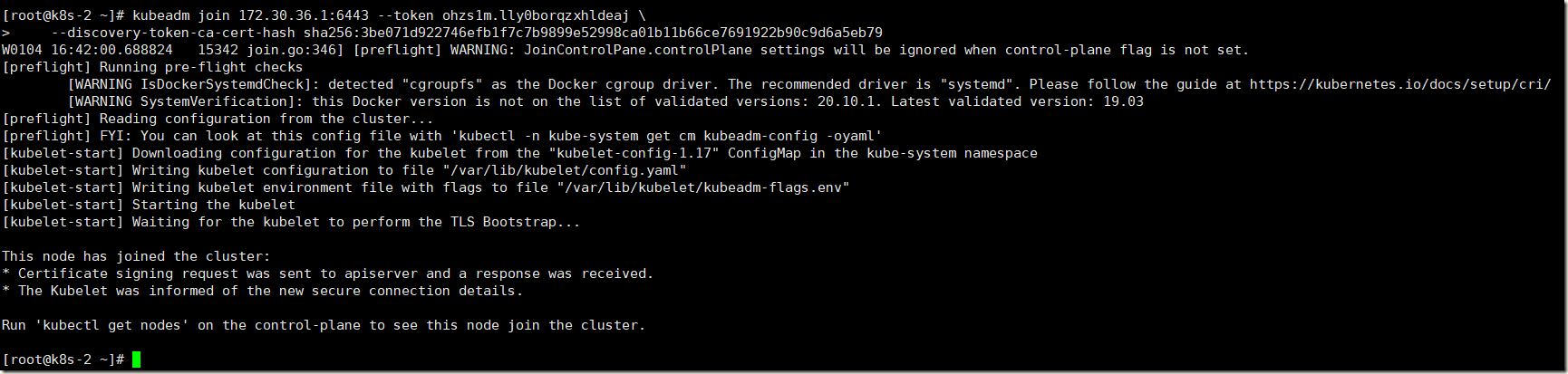

5) Join the other nodes (run the following on k8s-2 and k8s-3)
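The join command below is copied from the `kubeadm init` output on the master. Its bootstrap token is valid for 24 hours by default, so joining a node later needs a fresh token. Run `kubeadm token create --print-join-command` on the master to print a complete new join command. The sketch below also recomputes the `--discovery-token-ca-cert-hash` value from the cluster CA with openssl, following the pipeline in the kubeadm documentation. `ca_cert_hash` is a hypothetical helper name, and the pipeline assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Sketch: recompute the --discovery-token-ca-cert-hash value on the master.
# The hash is the SHA-256 of the CA's DER-encoded public key (assumes an RSA
# CA key, the kubeadm default). ca_cert_hash is a hypothetical helper name.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'    # keep only the hex digest after the last space
}

# On the master:
#   kubeadm token create --print-join-command   # prints a complete new join command
#   echo "sha256:$(ca_cert_hash)"
```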

kubeadm join 172.30.36.1:6443 --token ohzs1m.lly0borqzxhldeaj --discovery-token-ca-cert-hash sha256:3be071d922746efb1f7c7b9899e52998ca01b11b66ce7691922b90c9d6a5eb79

6) Monitor the cluster status from the master node

[root@k8s-1 ~]# watch kubectl get pod -n kube-system -o wide

[root@k8s-1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-1 Ready master 28m v1.17.3

k8s-2 Ready <none> 2m21s v1.17.3

k8s-3 Ready <none> 61s v1.17.3
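Instead of watching the table, you can check node readiness from a script. The following is a minimal sketch that assumes the default column layout of `kubectl get nodes --no-headers`, with STATUS in the second column. `all_nodes_ready` is a hypothetical helper. It prints any node that is not Ready and returns non-zero in that case.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every node is Ready, reading the text output of
# `kubectl get nodes --no-headers` on stdin (column 2 is STATUS).
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad++ }
    END { exit ((total > 0 && bad == 0) ? 0 : 1) }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```

A cordoned node shows as `Ready,SchedulingDisabled` and is reported as well. If you would rather block until the nodes are ready, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` waits on the Ready condition directly.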

Check the pod status:

[root@k8s-1 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-kqjr9 1/1 Running 0 31m

kube-system coredns-7f9c544f75-ztg6j 1/1 Running 0 31m

kube-system etcd-k8s-1 1/1 Running 0 32m

kube-system kube-apiserver-k8s-1 1/1 Running 0 32m

kube-system kube-controller-manager-k8s-1 1/1 Running 0 32m

kube-system kube-flannel-ds-amd64-6bhnq 1/1 Running 0 4m33s

kube-system kube-flannel-ds-amd64-l4754 1/1 Running 0 22m

kube-system kube-flannel-ds-amd64-wpxkm 1/1 Running 0 5m53s

kube-system kube-proxy-288xw 1/1 Running 0 31m

kube-system kube-proxy-mt97b 1/1 Running 0 4m33s

kube-system kube-proxy-qjgkr 1/1 Running 0 5m53s

kube-system kube-scheduler-k8s-1 1/1 Running 0 32m
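As more namespaces appear, this list grows long. `unhealthy_pods` is a small hypothetical filter that prints only pods whose READY count is incomplete or whose STATUS is not Running. Completed job pods are skipped. The sketch assumes the default column order of `kubectl get pods --all-namespaces --no-headers`, which matches the listing above.

```shell
#!/usr/bin/env bash
# Sketch: list pods that are not fully up, reading the text output of
# `kubectl get pods --all-namespaces --no-headers` on stdin.
# Columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
unhealthy_pods() {
  awk '
    {
      split($3, r, "/")              # READY is "ready/total", e.g. 2/2
      if ($4 == "Completed") next    # finished Job pods are fine
      if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2 " " $3 " " $4
    }'
}

# Usage:
#   kubectl get pods --all-namespaces --no-headers | unhealthy_pods
```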

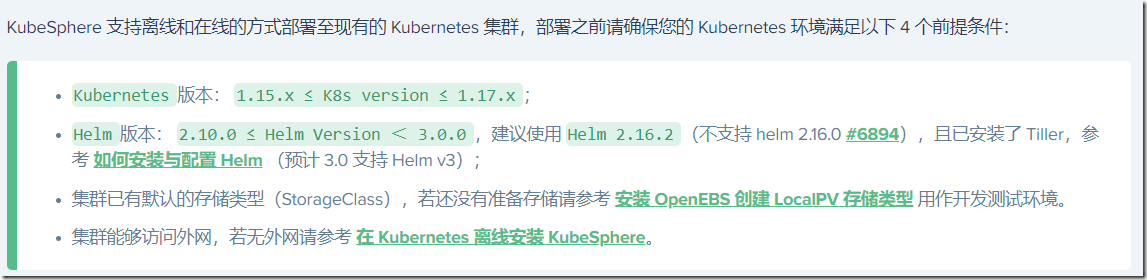

IV. KubeSphere

4.1 Introduction to KubeSphere

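KubeSphere is an open-source, cloud-native project. It is a distributed, multi-tenant container management platform built on top of Kubernetes, today's mainstream container orchestration platform. Its easy-to-use interface and wizard-driven workflows lower the learning curve of the container platform and greatly reduce the complexity of day-to-day development, testing, and operations work.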

Installation guide for v3.0: https://kubesphere.io/zh/docs/installing-on-kubernetes/introduction/overview/

Installation guide for v2.1: https://v2-1.docs.kubesphere.io/docs/zh-CN/installation/install-on-k8s/

Read the two guides together. Following either one on its own leads to quite a few pitfalls.

4.2 Preparation before installation

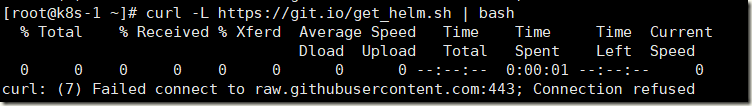

4.2.1 Install Helm and Tiller

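Helm is the package manager for Kubernetes. Like apt on Ubuntu, yum on CentOS, or pip for Python, it lets you quickly find, download, and install software packages. Helm 2 consists of a client component, helm, and a server-side component, Tiller. It packages a set of Kubernetes resources so they can be managed as a unit, and is the best way to find, share, and use software built for Kubernetes.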

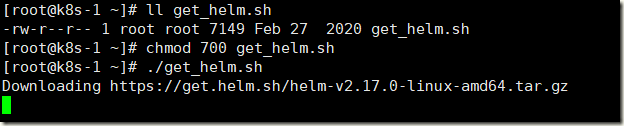

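The official install script cannot be downloaded from behind the Great Firewall, so you have to fetch it manually and run it.

That still failed, so download the release package manually and install it: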

#Install helm

[root@k8s-1 ~]# ll helm-v2.17.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 25097357 Jan 5 14:24 helm-v2.17.0-linux-amd64.tar.gz

[root@k8s-1 ~]# tar xf helm-v2.17.0-linux-amd64.tar.gz

[root@k8s-1 ~]# cp linux-amd64/helm /usr/local/bin

[root@k8s-1 ~]# cp linux-amd64/tiller /usr/local/bin

[root@k8s-1 ~]# helm version

Client: &version.Version{SemVer:"v2.17.0", GitCommit:"a690bad98af45b015bd3da1a41f6218b1a451dbe", GitTreeState:"clean"}

Error: could not find tiller

#Create the RBAC manifest

cat > helm-rbac.yaml << EOF

apiVersion: v1

kind: ServiceAccount

metadata:

  name: tiller

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: tiller

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: tiller

  namespace: kube-system

EOF

[root@k8s-1 ~]# kubectl apply -f helm-rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

#Install tiller

[root@k8s-1 ~]# helm init --service-account tiller --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.17.0 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://v2.helm.sh/docs/securing_installation/

[root@k8s-1 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-57thw 1/1 Running 1 17h

kube-system coredns-7f9c544f75-hfgql 1/1 Running 1 17h

kube-system etcd-k8s-1 1/1 Running 1 17h

kube-system kube-apiserver-k8s-1 1/1 Running 1 17h

kube-system kube-controller-manager-k8s-1 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-9b7zd 1/1 Running 1 16h

kube-system kube-flannel-ds-amd64-9b982 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-mr6vw 1/1 Running 1 16h

kube-system kube-proxy-5tcsm 1/1 Running 1 16h

kube-system kube-proxy-897f5 1/1 Running 1 17h

kube-system kube-proxy-xvbds 1/1 Running 1 16h

kube-system kube-scheduler-k8s-1 1/1 Running 1 17h

kube-system tiller-deploy-59665c97b6-qfzmc 1/1 Running 0 69s #the tiller pod is now running

#Verify

[root@k8s-1 ~]# tiller

[main] 2021/01/05 14:29:33 Starting Tiller v2.17.0 (tls=false)

[main] 2021/01/05 14:29:33 GRPC listening on :44134

[main] 2021/01/05 14:29:33 Probes listening on :44135

[main] 2021/01/05 14:29:33 Storage driver is ConfigMap

[main] 2021/01/05 14:29:33 Max history per release is 0

^C

[root@k8s-1 ~]# helm version

Client: &version.Version{SemVer:"v2.17.0", GitCommit:"a690bad98af45b015bd3da1a41f6218b1a451dbe", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.17.0", GitCommit:"a690bad98af45b015bd3da1a41f6218b1a451dbe", GitTreeState:"clean"}

4.2.2 Install OpenEBS

Documentation: https://v2-1.docs.kubesphere.io/docs/zh-CN/appendix/install-openebs/

#Remove the taint from the master

[root@k8s-1 ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-1 Ready master 17h v1.17.3 172.30.36.1 <none> CentOS Linux 7 (Core) 3.10.0-1062.18.1.el7.x86_64 docker://20.10.1

k8s-2 Ready <none> 16h v1.17.3 172.30.36.2 <none> CentOS Linux 7 (Core) 3.10.0-1062.18.1.el7.x86_64 docker://20.10.1

k8s-3 Ready <none> 16h v1.17.3 172.30.36.3 <none> CentOS Linux 7 (Core) 3.10.0-1062.18.1.el7.x86_64 docker://20.10.1

[root@k8s-1 ~]# kubectl describe node k8s-1 | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

[root@k8s-1 ~]# kubectl taint nodes k8s-1 node-role.kubernetes.io/master:NoSchedule-

node/k8s-1 untainted

[root@k8s-1 ~]# kubectl describe node k8s-1 | grep Taint

Taints: <none>

#Install OpenEBS

[root@k8s-1 ~]# kubectl create ns openebs

namespace/openebs created

[root@k8s-1 ~]# kubectl apply -f https://openebs.github.io/charts/openebs-operator-1.5.0.yaml

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

namespace/openebs configured

serviceaccount/openebs-maya-operator created

clusterrole.rbac.authorization.k8s.io/openebs-maya-operator created

clusterrolebinding.rbac.authorization.k8s.io/openebs-maya-operator created

deployment.apps/maya-apiserver created

service/maya-apiserver-service created

deployment.apps/openebs-provisioner created

deployment.apps/openebs-snapshot-operator created

configmap/openebs-ndm-config created

daemonset.apps/openebs-ndm created

deployment.apps/openebs-ndm-operator created

deployment.apps/openebs-admission-server created

deployment.apps/openebs-localpv-provisioner created

#List the storage classes

[root@k8s-1 ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-device openebs.io/local Delete WaitForFirstConsumer false 2m29s

openebs-hostpath openebs.io/local Delete WaitForFirstConsumer false 2m29s

openebs-jiva-default openebs.io/provisioner-iscsi Delete Immediate false 2m29s

openebs-snapshot-promoter volumesnapshot.external-storage.k8s.io/snapshot-promoter Delete Immediate false 2m29s

#Set the default storage class

[root@k8s-1 ~]# kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/openebs-hostpath patched
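To confirm which class is now the default without reading the whole table, you can match the `(default)` marker that `kubectl get sc` prints after the name. The hypothetical `default_storage_class` below prints that name. It returns non-zero unless exactly one class is marked, because more than one default (for example after patching a second class) makes the choice ambiguous for claims that do not name a class.

```shell
#!/usr/bin/env bash
# Sketch: print the default StorageClass from the text output of
# `kubectl get sc --no-headers` on stdin; the default carries a "(default)"
# marker right after its name. Exits non-zero unless exactly one is marked.
default_storage_class() {
  awk '
    $2 == "(default)" { print $1; n++ }
    END { exit (n == 1 ? 0 : 1) }'
}

# Usage:
#   kubectl get sc --no-headers | default_storage_class
```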

[root@k8s-1 ~]# kubectl get pod -n openebs

NAME READY STATUS RESTARTS AGE

maya-apiserver-7f664b95bb-d4rgz 1/1 Running 3 6m14s

openebs-admission-server-889d78f96-gvf5j 1/1 Running 0 6m14s

openebs-localpv-provisioner-67bddc8568-c9p5m 1/1 Running 0 6m14s

openebs-ndm-klmvz 1/1 Running 0 6m14s

openebs-ndm-m7c22 1/1 Running 0 6m14s

openebs-ndm-mwv8l 1/1 Running 0 6m14s

openebs-ndm-operator-5db67cd5bb-8fbbc 1/1 Running 1 6m14s

openebs-provisioner-c68bfd6d4-cv8md 1/1 Running 0 6m14s

openebs-snapshot-operator-7ffd685677-jwqdp 2/2 Running 0 6m14s

[root@k8s-1 ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-device openebs.io/local Delete WaitForFirstConsumer false 3m42s

openebs-hostpath (default) openebs.io/local Delete WaitForFirstConsumer false 3m42s

openebs-jiva-default openebs.io/provisioner-iscsi Delete Immediate false 3m42s

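openebs-snapshot-promoter volumesnapshot.external-storage.k8s.io/snapshot-promoter Delete Immediate false 3m42s

Note: do not re-add the taint to the master at this point. Otherwise some pods installed later (openldap, redis) cannot be installed. Re-add the taint after KubeSphere has been installed.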

4.3 Install KubeSphere

Documentation: https://kubesphere.io/zh/docs/installing-on-kubernetes/introduction/overview/

[root@k8s-1 ~]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

namespace/kubesphere-system created

serviceaccount/ks-installer created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

[root@k8s-1 ~]#

[root@k8s-1 ~]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml

clusterconfiguration.installer.kubesphere.io/ks-installer created
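You can follow the installer log interactively, as shown next. For a scripted install, one option is to poll the log until the completion banner appears. The sketch assumes the v3.0 installer prints `Welcome to KubeSphere` when it finishes; check this against your own log. Polling a fresh `kubectl logs` call each time avoids a `logs -f | grep -m1` pipeline, which can keep waiting after the match. `wait_for_output` and `ks_log` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: re-run a command until its output contains a fixed string, or give
# up after a timeout. The banner text used below is an assumption based on
# the v3.0 ks-installer output.
wait_for_output() {
  local pattern="$1" timeout="$2" interval="$3"; shift 3
  local waited=0
  until "$@" 2>/dev/null | grep -qF -- "$pattern"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for: $pattern" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Usage on the master (30-minute limit, polling every 10 seconds):
#   ks_log() {
#     kubectl logs -n kubesphere-system \
#       "$(kubectl get pod -n kubesphere-system -l app=ks-install \
#          -o jsonpath='{.items[0].metadata.name}')"
#   }
#   wait_for_output "Welcome to KubeSphere" 1800 10 ks_log
```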

#Monitor the installation with the following command:

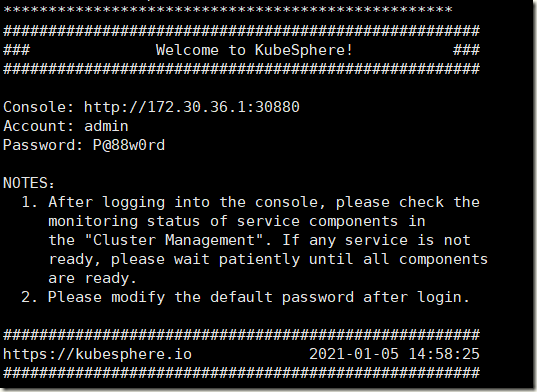

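kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

Use kubectl get pod --all-namespaces to check that all pods in the KubeSphere-related namespaces are running normally. If they are, check the console port (30880 by default) with the following command. The security group must allow this port.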

[root@k8s-1 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-57thw 1/1 Running 1 17h

kube-system coredns-7f9c544f75-hfgql 1/1 Running 1 17h

kube-system etcd-k8s-1 1/1 Running 1 17h

kube-system kube-apiserver-k8s-1 1/1 Running 1 17h

kube-system kube-controller-manager-k8s-1 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-9b7zd 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-9b982 1/1 Running 1 17h

kube-system kube-flannel-ds-amd64-mr6vw 1/1 Running 1 17h

kube-system kube-proxy-5tcsm 1/1 Running 1 17h

kube-system kube-proxy-897f5 1/1 Running 1 17h

kube-system kube-proxy-xvbds 1/1 Running 1 17h

kube-system kube-scheduler-k8s-1 1/1 Running 1 17h

kube-system snapshot-controller-0 1/1 Running 0 6m10s

kube-system tiller-deploy-59665c97b6-qfzmc 1/1 Running 0 32m

kubesphere-controls-system default-http-backend-5d464dd566-glk2c 1/1 Running 0 5m33s

kubesphere-controls-system kubectl-admin-6c9bd5b454-m7jfc 1/1 Running 0 2m21s

kubesphere-monitoring-system alertmanager-main-0 2/2 Running 0 4m

kubesphere-monitoring-system alertmanager-main-1 2/2 Running 0 4m

kubesphere-monitoring-system alertmanager-main-2 2/2 Running 0 4m

kubesphere-monitoring-system kube-state-metrics-5c466fc7b6-n9b6t 3/3 Running 0 4m21s

kubesphere-monitoring-system node-exporter-44682 2/2 Running 0 4m22s

kubesphere-monitoring-system node-exporter-5h45l 2/2 Running 0 4m22s

kubesphere-monitoring-system node-exporter-kwjdg 2/2 Running 0 4m22s

kubesphere-monitoring-system notification-manager-deployment-7ff95b7544-cf5nl 1/1 Running 0 108s

kubesphere-monitoring-system notification-manager-deployment-7ff95b7544-fpl8b 1/1 Running 0 108s

kubesphere-monitoring-system notification-manager-operator-5cbb58b756-fwktw 2/2 Running 0 4m5s

kubesphere-monitoring-system prometheus-k8s-0 3/3 Running 1 3m44s

kubesphere-monitoring-system prometheus-k8s-1 3/3 Running 1 3m39s

kubesphere-monitoring-system prometheus-operator-78c5cdbc8f-tbbmd 2/2 Running 0 4m23s

kubesphere-system ks-apiserver-5c64d4dc5d-ldtnj 1/1 Running 0 3m38s

kubesphere-system ks-console-fb4c655cf-brd6s 1/1 Running 0 5m18s

kubesphere-system ks-controller-manager-8496977664-8s5k8 1/1 Running 0 3m37s

kubesphere-system ks-installer-85854b8c8-zq5j2 1/1 Running 0 8m19s

kubesphere-system openldap-0 1/1 Running 0 5m44s

kubesphere-system redis-6fd6c6d6f9-lq8xp 1/1 Running 0 5m56s

openebs maya-apiserver-7f664b95bb-d4rgz 1/1 Running 3 18m

openebs openebs-admission-server-889d78f96-gvf5j 1/1 Running 0 18m

openebs openebs-localpv-provisioner-67bddc8568-c9p5m 1/1 Running 0 18m

openebs openebs-ndm-klmvz 1/1 Running 0 18m

openebs openebs-ndm-m7c22 1/1 Running 0 18m

openebs openebs-ndm-mwv8l 1/1 Running 0 18m

openebs openebs-ndm-operator-5db67cd5bb-8fbbc 1/1 Running 1 18m

openebs openebs-provisioner-c68bfd6d4-cv8md 1/1 Running 0 18m

openebs openebs-snapshot-operator-7ffd685677-jwqdp 2/2 Running 0 18m

[root@k8s-1 ~]# kubectl get svc/ks-console -n kubesphere-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ks-console NodePort 10.96.180.136 <none> 80:30880/TCP 6m26s
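The NodePort is the number after the colon in the PORT(S) column (`80:30880/TCP`). `kubectl get svc ks-console -n kubesphere-system -o jsonpath='{.spec.ports[0].nodePort}'` reads it directly. The hypothetical `node_ports` filter below instead parses the PORT(S) text, which also covers services that expose several comma-separated ports.

```shell
#!/usr/bin/env bash
# Sketch: extract NodePort numbers from a `kubectl get svc` PORT(S) value,
# e.g. "80:30880/TCP" or "80:30880/TCP,443:30443/TCP". Ports without a
# NodePort (such as "443/TCP") produce no output.
node_ports() {
  tr ',' '\n' | sed -n 's/^[0-9]*:\([0-9]*\)\/.*/\1/p'
}

# Usage (PORT(S) is the 5th column of `kubectl get svc --no-headers`):
#   kubectl get svc/ks-console -n kubesphere-system --no-headers | awk '{print $5}' | node_ports
```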

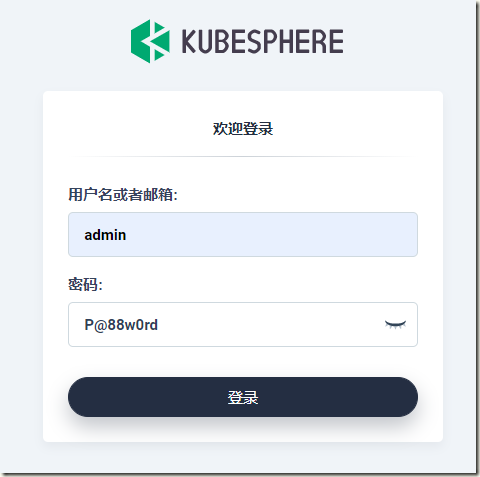

Open the console at http://your-public-ip:30880.

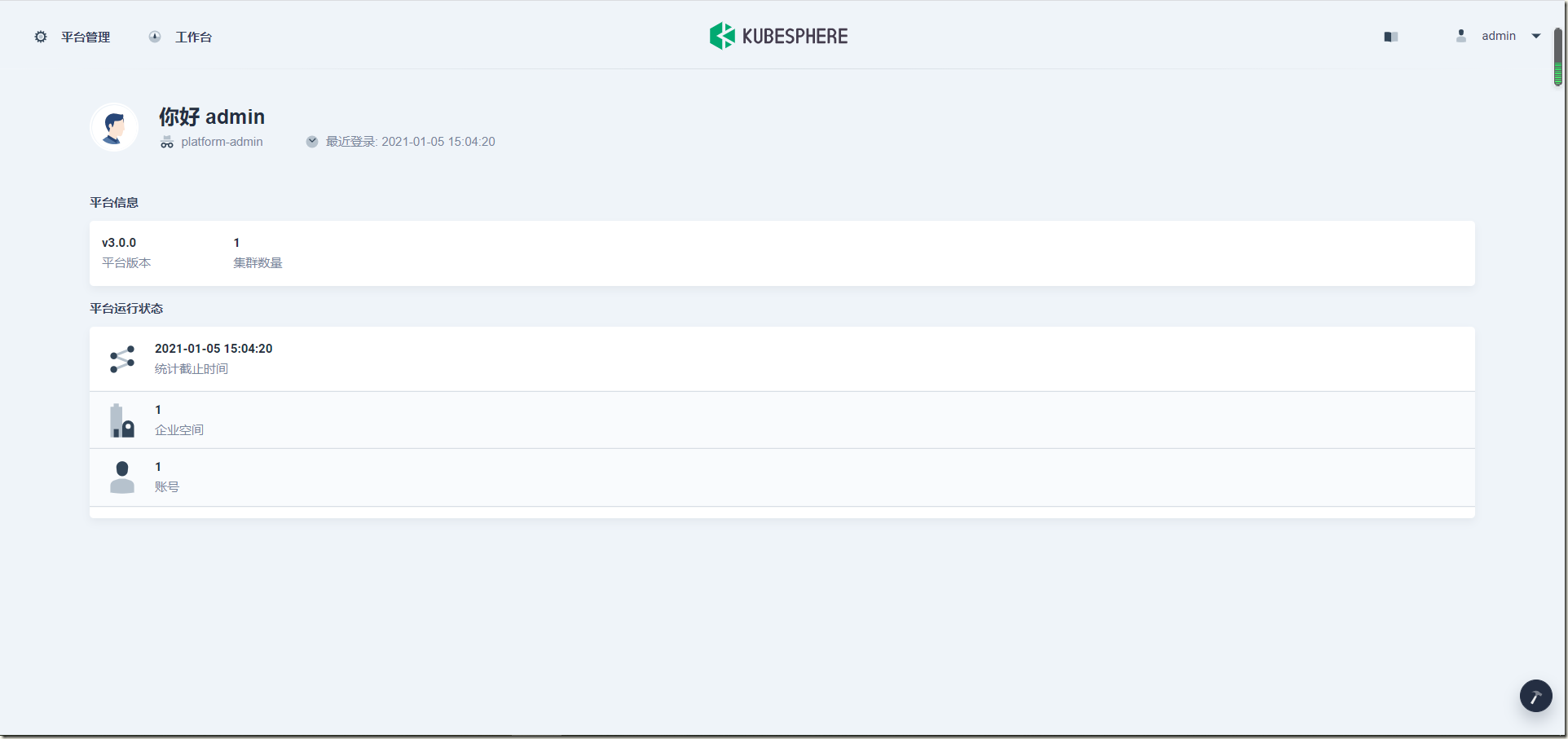

Change the password:

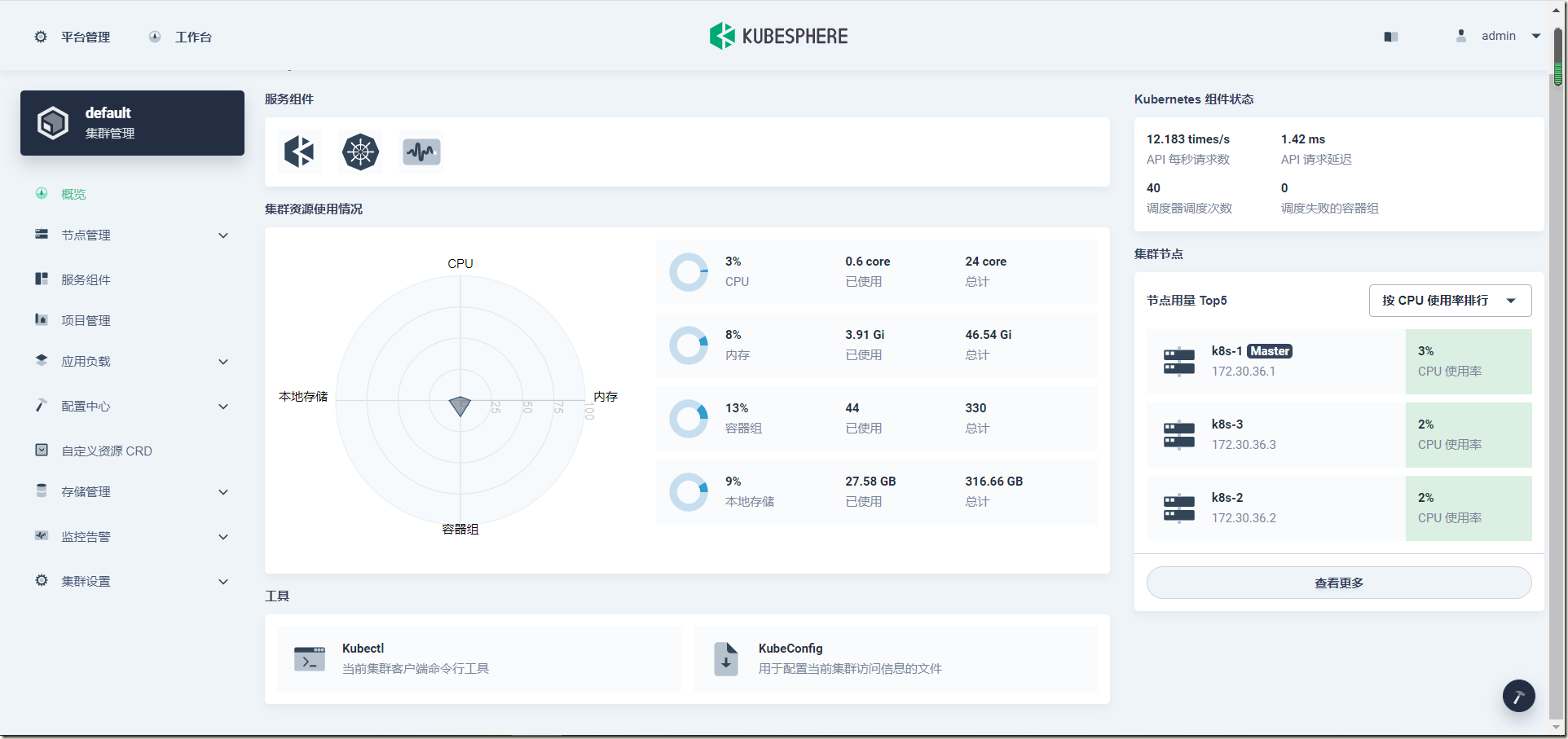

Cluster management page:

Re-add the taint to the master:

[root@k8s-1 ~]# kubectl taint nodes k8s-1 node-role.kubernetes.io/master=:NoSchedule

node/k8s-1 tainted

[root@k8s-1 ~]# kubectl describe node k8s-1 | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

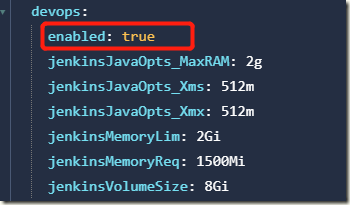

4.4 Install pluggable components

1) Install the DevOps component

Documentation: https://kubesphere.io/zh/docs/pluggable-components/devops/