Exercise 1

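With 1024 threads per block, the kernel runs noticeably faster than with 1023 threads per block. I do not know the reason yet; hopefully a later chapter will answer it.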

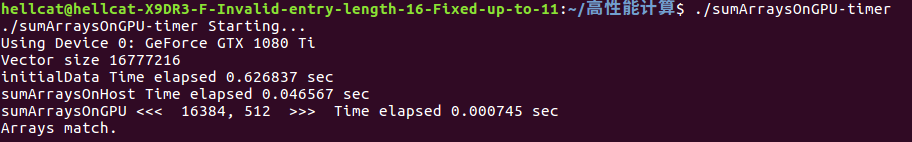
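One factor that may be relevant is how each block size maps onto warps and memory alignment. The sketch below is not part of the original exercise: it is host-only arithmetic, and `kWarpSize` and the 128-byte line size are assumptions typical of current NVIDIA GPUs, not values queried from the device.

```cuda
#include <stdio.h>

// Host-only arithmetic sketch; no kernel is launched.
// Assumption: 32 threads per warp, as on current NVIDIA GPUs.
static const int kWarpSize = 32;
// Assumption: 128-byte memory lines, used only to illustrate alignment.
static const int kLineBytes = 128;

int main(void)
{
    const int blockSizes[2] = {1024, 1023};

    for (int k = 0; k < 2; k++) {
        int threads = blockSizes[k];
        // Warps are allocated whole, so round up.
        int warps = (threads + kWarpSize - 1) / kWarpSize;
        // Lanes in the last warp that have no thread assigned.
        int idleLanes = warps * kWarpSize - threads;
        // Byte offset of the first float touched by block 1 (index blockDim.x).
        int startByte = threads * (int)sizeof(float);

        printf("block=%d warps=%d idle lanes=%d block-1 start byte=%d (mod %d = %d)\n",
               threads, warps, idleLanes, startByte, kLineBytes,
               startByte % kLineBytes);
    }
    return 0;
}
```

With 1023 threads, every block after the first starts at an index that is not a multiple of 32, so the 32 consecutive floats read by a warp can straddle two memory lines. Whether this accounts for the measured gap has not been verified here.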

Exercise 2

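Based on sumArraysOnGPUtimer.cu, set block = 256 and write a new kernel in which each thread processes two elements. (Note that the listing below sets the block size to 512, not 256.)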

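The idea is simple: split the array into a low half and a high half, and let each thread process the pair of elements that sit at the same offset in the two halves: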

    #include <cuda_runtime.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <sys/time.h>
    #include "common.h"

    // Each thread adds two elements: index i in the low half and index
    // i + N/2 in the high half. This assumes N is even.
    __global__ void sumArraysOnGPU(float *A, float *B, float *C, const int N)
    {
        int i = blockIdx.x * blockDim.x + threadIdx.x;

        if (i < N/2) {
            C[i] = A[i] + B[i];
            C[i+N/2] = A[i+N/2] + B[i+N/2];
        }
    }

    int main(int argc, char **argv)
    {
        printf("%s Starting...\n", argv[0]);

        // set up device
        int dev = 0;
        cudaDeviceProp deviceProp;
        CHECK(cudaGetDeviceProperties(&deviceProp, dev));
        printf("Using Device %d: %s\n", dev, deviceProp.name);
        CHECK(cudaSetDevice(dev));

        // set up data size of vectors
        int nElem = 1 << 24;
        printf("Vector size %d\n", nElem);

        // malloc host memory
        size_t nBytes = nElem * sizeof(float);

        float *h_A, *h_B, *hostRef, *gpuRef;
        h_A = (float *)malloc(nBytes);
        h_B = (float *)malloc(nBytes);
        hostRef = (float *)malloc(nBytes);
        gpuRef = (float *)malloc(nBytes);

        double iStart, iElaps;

        // initialize data at host side
        iStart = cpuSecond();
        initialData(h_A, nElem);
        initialData(h_B, nElem);
        iElaps = cpuSecond() - iStart;
        printf("initialData Time elapsed %f sec\n", iElaps);
        memset(hostRef, 0, nBytes);
        memset(gpuRef, 0, nBytes);

        // add vector at host side for result checks
        iStart = cpuSecond();
        sumArraysOnHost(h_A, h_B, hostRef, nElem);
        iElaps = cpuSecond() - iStart;
        printf("sumArraysOnHost Time elapsed %f sec\n", iElaps);

        // malloc device global memory
        float *d_A, *d_B, *d_C;
        CHECK(cudaMalloc((float**)&d_A, nBytes));
        CHECK(cudaMalloc((float**)&d_B, nBytes));
        CHECK(cudaMalloc((float**)&d_C, nBytes));

        // transfer data from host to device
        CHECK(cudaMemcpy(d_A, h_A, nBytes, cudaMemcpyHostToDevice));
        CHECK(cudaMemcpy(d_B, h_B, nBytes, cudaMemcpyHostToDevice));
        CHECK(cudaMemcpy(d_C, gpuRef, nBytes, cudaMemcpyHostToDevice));

        // invoke kernel at host side
        int iLen = 512;
        dim3 block (iLen);
        // half as many blocks as usual, since each thread handles two elements
        dim3 grid ((nElem + block.x - 1) / block.x / 2);

        // <<< 16384, 512 >>> Time elapsed 0.000747 sec
        // <<< 32768, 512 >>> Time elapsed 0.000709 sec
        iStart = cpuSecond();
        sumArraysOnGPU<<<grid, block>>>(d_A, d_B, d_C, nElem);
        CHECK(cudaDeviceSynchronize());
        iElaps = cpuSecond() - iStart;
        printf("sumArraysOnGPU <<< %d, %d >>> Time elapsed %f sec\n", grid.x,
               block.x, iElaps);

        // check kernel error
        // CHECK(cudaGetLastError()) ;

        // copy kernel result back to host side
        CHECK(cudaMemcpy(gpuRef, d_C, nBytes, cudaMemcpyDeviceToHost));

        // check device results
        checkResult(hostRef, gpuRef, nElem);

        // free device global memory
        CHECK(cudaFree(d_A));
        CHECK(cudaFree(d_B));
        CHECK(cudaFree(d_C));

        // free host memory
        free(h_A);
        free(h_B);
        free(hostRef);
        free(gpuRef);

        return(0);
    }

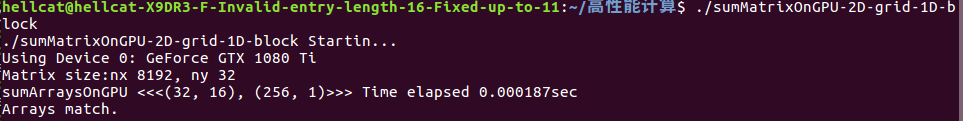
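The kernel above splits the array at N/2, which covers every element only when N is even. As a hedged sketch of one way to handle odd N as well, the variant below rounds the low half up and guards the high index. The kernel name `sumArraysTwoPerThread`, the test size, and the fill pattern are hypothetical and not from the original solution.

```cuda
#include <cuda_runtime.h>
#include <stdio.h>
#include <stdlib.h>

// Hypothetical variant of the two-elements-per-thread kernel that also
// covers odd N: the low half is rounded up and the high index is guarded.
__global__ void sumArraysTwoPerThread(const float *A, const float *B,
                                      float *C, const int N)
{
    int half = (N + 1) / 2;  // size of the low half, rounded up
    int i = blockIdx.x * blockDim.x + threadIdx.x;

    if (i < half) {
        C[i] = A[i] + B[i];
        int j = i + half;    // partner element in the high half
        if (j < N) C[j] = A[j] + B[j];
    }
}

int main(void)
{
    const int N = (1 << 20) + 1;  // odd on purpose
    const size_t nBytes = N * sizeof(float);

    float *h_A = (float *)malloc(nBytes);
    float *h_B = (float *)malloc(nBytes);
    float *h_C = (float *)malloc(nBytes);
    if (!h_A || !h_B || !h_C) return 1;
    for (int i = 0; i < N; i++) {
        h_A[i] = (float)(i & 0xFF) / 10.0f;
        h_B[i] = (float)((i >> 8) & 0xFF) / 10.0f;
    }

    float *d_A, *d_B, *d_C;
    if (cudaMalloc((void **)&d_A, nBytes) != cudaSuccess) return 1;
    if (cudaMalloc((void **)&d_B, nBytes) != cudaSuccess) return 1;
    if (cudaMalloc((void **)&d_C, nBytes) != cudaSuccess) return 1;
    cudaMemcpy(d_A, h_A, nBytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_B, h_B, nBytes, cudaMemcpyHostToDevice);

    // One thread per element of the low half.
    const int half = (N + 1) / 2;
    dim3 block(256);
    dim3 grid((half + block.x - 1) / block.x);
    sumArraysTwoPerThread<<<grid, block>>>(d_A, d_B, d_C, N);

    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess) err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    cudaMemcpy(h_C, d_C, nBytes, cudaMemcpyDeviceToHost);

    // Compare against the same single-precision sum computed on the host.
    int mismatches = 0;
    for (int i = 0; i < N; i++) {
        float ref = h_A[i] + h_B[i];
        if (h_C[i] != ref) mismatches++;
    }
    printf("grid %u, block %u, mismatches %d\n", grid.x, block.x, mismatches);

    cudaFree(d_A); cudaFree(d_B); cudaFree(d_C);
    free(h_A); free(h_B); free(h_C);
    return mismatches == 0 ? 0 : 1;
}
```

The grid is sized from the rounded-up half rather than by halving the block count, so the last element is still covered when N is odd.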

Exercise 4

Based on sumMatrixOnGPU-2D-grid-1D-block.cu, write a new kernel in which each thread processes two elements.

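The idea is the same as above. Because the indexing is two-dimensional, the data is split in half along the y coordinate. Intuitively, the matrix is folded along y = ny/2, and each thread processes the elements at the corresponding position in the two halves: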

    #include <cuda_runtime.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <sys/time.h>
    #include "common.h"

    // grid 2D block 1D
    // Each thread adds two elements: (ix, iy) in the top half of the rows and
    // (ix, iy + ny/2) in the bottom half. This assumes ny is even.
    __global__ void sumMatrixsOnGPUMix(float *MatA, float *MatB, float *MatC,
                                       int nx, int ny)
    {
        int ix = threadIdx.x + blockIdx.x * blockDim.x;
        int iy = blockIdx.y;
        int idx = iy * nx + ix;

        if (ix < nx && iy < ny/2) {
            MatC[idx] = MatA[idx] + MatB[idx];
            MatC[idx + nx*ny/2] = MatA[idx + nx*ny/2] + MatB[idx + nx*ny/2];
        }
    }

    int main(int argc, char **argv){
        printf("%s Starting...\n", argv[0]);

        // set up device
        int dev = 0;
        cudaDeviceProp deviceProp;
        CHECK(cudaGetDeviceProperties(&deviceProp, dev));
        printf("Using Device %d: %s\n", dev, deviceProp.name);
        CHECK(cudaSetDevice(dev));

        // matrix size
        int nx = 1<<13;
        int ny = 1<<5;  // nx * ny = 2^18 elements
        int nxy = nx * ny;
        int nBytes = nxy * sizeof(float);
        printf("Matrix size:nx %d, ny %d\n", nx, ny);

        float *h_A, *h_B, *hostRef, *gpuRef;
        h_A = (float *)malloc(nBytes);
        h_B = (float *)malloc(nBytes);
        hostRef = (float *)malloc(nBytes);
        gpuRef = (float *)malloc(nBytes);

        // initialize data at host side
        double iStart, iElaps;
        iStart = cpuSecond();
        initialData(h_A, nxy);
        initialData(h_B, nxy);
        iElaps = cpuSecond() - iStart;
        memset(hostRef, 0, nBytes);
        memset(gpuRef, 0, nBytes);

        // add matrix at host side for result checks
        iStart = cpuSecond();
        sumMatrixsOnHost(h_A, h_B, hostRef, nx, ny);
        iElaps = cpuSecond() - iStart;

        // malloc device global memory
        float *d_MatA, *d_MatB, *d_MatC;
        cudaMalloc((float **)&d_MatA, nBytes);
        cudaMalloc((float **)&d_MatB, nBytes);
        cudaMalloc((float **)&d_MatC, nBytes);

        // transfer data from host to device
        cudaMemcpy(d_MatA, h_A, nBytes, cudaMemcpyHostToDevice);
        cudaMemcpy(d_MatB, h_B, nBytes, cudaMemcpyHostToDevice);

        // invoke kernel at host side
        dim3 block (256);                           // 1D block
        dim3 grid ((nx+block.x-1)/block.x, ny/2);   // 2D grid; ny/2 rows because each thread handles two rows

        /*
        Recorded timings. The grid sizes shown appear to come from a run with a
        larger matrix than the nx, ny set above.
        <<<(1024, 16384), (16, 1)>>> Time elapsed 0.021947sec
        <<<(512, 16384), (32, 1)>>> Time elapsed 0.011039sec
        <<<(64, 16384), (256, 1)>>> Time elapsed 0.009063sec
        */
        iStart = cpuSecond();
        sumMatrixsOnGPUMix<<<grid, block>>>(d_MatA, d_MatB, d_MatC, nx, ny);
        // For timing only: wait for the kernel to finish. Without it, the
        // blocking cudaMemcpy below would still synchronize implicitly.
        cudaDeviceSynchronize();
        iElaps = cpuSecond() - iStart;
        printf("sumMatrixsOnGPUMix <<<(%d, %d), (%d, %d)>>> Time elapsed %f sec\n",
               grid.x, grid.y, block.x, block.y, iElaps);

        // copy kernel result back to host side and check it
        cudaMemcpy(gpuRef, d_MatC, nBytes, cudaMemcpyDeviceToHost);
        checkResult(hostRef, gpuRef, nxy);

        // free device global memory
        cudaFree(d_MatA);
        cudaFree(d_MatB);
        cudaFree(d_MatC);

        // free host memory
        free(h_A);
        free(h_B);
        free(hostRef);
        free(gpuRef);

        // reset device
        cudaDeviceReset();

        return 0;
    }
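The fold along y = ny/2 used by sumMatrixsOnGPUMix covers every row only when ny is even. The following is a minimal sketch, under that observation, of a variant that rounds the top half up and guards the second row. The kernel name `sumMatrixTwoRowsPerThread` and the small test dimensions are hypothetical and chosen only so that neither nx nor ny divides evenly.

```cuda
#include <cuda_runtime.h>
#include <stdio.h>
#include <stdlib.h>

// Hypothetical variant: 2D grid, 1D block, two rows per thread, any ny.
__global__ void sumMatrixTwoRowsPerThread(const float *A, const float *B,
                                          float *C, const int nx, const int ny)
{
    int halfRows = (ny + 1) / 2;  // rows in the top half, rounded up
    int ix = threadIdx.x + blockIdx.x * blockDim.x;
    int iy = blockIdx.y;

    if (ix < nx && iy < halfRows) {
        int lo = iy * nx + ix;
        C[lo] = A[lo] + B[lo];
        int iy2 = iy + halfRows;  // partner row in the bottom half
        if (iy2 < ny) {
            int hi = iy2 * nx + ix;
            C[hi] = A[hi] + B[hi];
        }
    }
}

int main(void)
{
    const int nx = 1000;  // not a multiple of the block size
    const int ny = 33;    // odd on purpose
    const int nxy = nx * ny;
    const size_t nBytes = nxy * sizeof(float);

    float *h_A = (float *)malloc(nBytes);
    float *h_B = (float *)malloc(nBytes);
    float *h_C = (float *)malloc(nBytes);
    if (!h_A || !h_B || !h_C) return 1;
    for (int i = 0; i < nxy; i++) {
        h_A[i] = (float)(i & 0xFF) / 10.0f;
        h_B[i] = (float)((i >> 8) & 0xFF) / 10.0f;
    }

    float *d_A, *d_B, *d_C;
    if (cudaMalloc((void **)&d_A, nBytes) != cudaSuccess) return 1;
    if (cudaMalloc((void **)&d_B, nBytes) != cudaSuccess) return 1;
    if (cudaMalloc((void **)&d_C, nBytes) != cudaSuccess) return 1;
    cudaMemcpy(d_A, h_A, nBytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_B, h_B, nBytes, cudaMemcpyHostToDevice);

    // grid.y counts rows of the rounded-up top half.
    dim3 block(256);
    dim3 grid((nx + block.x - 1) / block.x, (ny + 1) / 2);
    sumMatrixTwoRowsPerThread<<<grid, block>>>(d_A, d_B, d_C, nx, ny);

    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess) err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    cudaMemcpy(h_C, d_C, nBytes, cudaMemcpyDeviceToHost);

    // Compare against the same single-precision sum computed on the host.
    int mismatches = 0;
    for (int i = 0; i < nxy; i++) {
        float ref = h_A[i] + h_B[i];
        if (h_C[i] != ref) mismatches++;
    }
    printf("grid (%u, %u), block %u, mismatches %d\n",
           grid.x, grid.y, block.x, mismatches);

    cudaFree(d_A); cudaFree(d_B); cudaFree(d_C);
    free(h_A); free(h_B); free(h_C);
    return mismatches == 0 ? 0 : 1;
}
```

Computing each row's index separately, rather than adding a fixed offset of nx*ny/2, avoids the truncation that integer division would introduce for odd ny.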

Running the sumMatrixsOnGPUMix program gives the following result:

Appendix: the common.h file

    #include <cuda_runtime.h>
    #include <math.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/time.h>
    #include <time.h>

    // Abort with file, line, and reason if a CUDA runtime call fails.
    #define CHECK(call) \
    { \
        const cudaError_t error = call; \
        if (error != cudaSuccess) \
        { \
            fprintf(stderr, "Error: %s:%d, ", __FILE__, __LINE__); \
            fprintf(stderr, "code: %d, reason: %s\n", error, \
                    cudaGetErrorString(error)); \
            exit(1); \
        } \
    }

    // Fill ip with pseudo-random values in [0, 25.5].
    void initialData(float *ip, int size)
    {
        time_t t;
        srand((unsigned int) time(&t));

        for (int i=0; i<size; i++)
        {
            ip[i] = (float)(rand() & 0xFF)/10.0f;
        }
    }

    // Wall-clock time in seconds.
    double cpuSecond() {
        struct timeval tp;
        gettimeofday(&tp, NULL);
        return ((double)tp.tv_sec + (double)tp.tv_usec*1.e-6);
    }

    // Compare host and GPU results element by element; stop at the first mismatch.
    void checkResult(float *hostRef, float *gpuRef, const int N) {
        double epsilon = 1.0E-8;
        bool match = 1;

        for (int i=0; i<N; i++) {
            // fabs, not the integer abs, so the difference is not truncated
            if (fabs(hostRef[i] - gpuRef[i]) > epsilon) {
                match = 0;
                printf("Arrays do not match!\n");
                printf("host %5.2f gpu %5.2f at current %d\n",
                       hostRef[i], gpuRef[i], i);
                break;
            }
        }

        if (match) printf("Arrays match.\n");
    }

    void sumArraysOnHost(float *A, float *B, float *C, const int N) {
        for (int idx=0; idx<N; idx++)
            C[idx] = A[idx] + B[idx];
    }

    // Row-by-row matrix addition; the pointers advance one row (nx elements) per iteration.
    void sumMatrixsOnHost(float *A, float *B, float *C, const int nx, const int ny){
        float *ia = A;
        float *ib = B;
        float *ic = C;

        for (int iy=0; iy<ny; iy++){
            for (int ix=0; ix<nx; ix++){
                ic[ix] = ia[ix] + ib[ix];
            }
            ia += nx;
            ib += nx;
            ic += nx;
        }
    }
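The cpuSecond helper above measures wall-clock time on the host, so the kernel timings in these exercises include launch overhead. As a hedged alternative, CUDA events can time the work on the device itself. The sketch below is not from the original solutions: the `scale` kernel and the array size are hypothetical placeholders, and only the event calls are the point.

```cuda
#include <cuda_runtime.h>
#include <stdio.h>

// Placeholder kernel (hypothetical), used only to have something to time.
__global__ void scale(float *x, const int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= 2.0f;
}

int main(void)
{
    const int n = 1 << 20;
    float *d_x;
    if (cudaMalloc((void **)&d_x, n * sizeof(float)) != cudaSuccess) return 1;
    cudaMemset(d_x, 0, n * sizeof(float));

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    // Events are recorded into the same (default) stream as the kernel,
    // so they bracket its execution on the device.
    cudaEventRecord(start);
    scale<<<(n + 255) / 256, 256>>>(d_x, n);
    cudaEventRecord(stop);

    // Block the host until the stop event has actually been reached.
    cudaEventSynchronize(stop);

    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, stop);  // elapsed time in milliseconds
    printf("scale kernel: %f ms\n", ms);

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(d_x);
    return 0;
}
```

The first launch in a process usually includes one-time initialization cost, so in practice it is common to run the kernel once as a warm-up before timing it.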