Distributed Algorithms in NoSQL Databases, http://highlyscalable.wordpress.com/2012/09/18/distributed-algorithms-in-nosql-databases/

NOSQL Patterns, http://horicky.blogspot.com/2009/11/nosql-patterns.html

Consistent Hashing (reposted): using memcached together with the consistent hashing algorithm to build a full-featured distributed cache server

The Simple Magic of Consistent Hashing, http://www.paperplanes.de/2011/12/9/the-magic-of-consistent-hashing.html

Amazon's Dynamo, sections 4.2, 4.3, 6.2 (Ensuring Uniform Load Distribution), and 6.4

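When facing big data, scaling up simply does not work and the system has to scale out. At that point data sharding becomes an unavoidable problem: how do we map records to physical nodes? A good mapping should provide: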

1. Load balance: no hotspots.

2. Stability under membership changes: when a node fails, is newly added, or leaves, the sharding result should not be disrupted.

Common approaches:

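1. Master-based, e.g. Google's Bigtable: a master coordinates everything, avoids hotspots, and makes the corresponding adjustments when nodes fail or join.

All information about data placement lives on the master, so the obvious problem is that the master is a single point of failure.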

2. Content-based partitioning, e.g. by time or location. The problem is that it cannot avoid hotspots.

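3. Hash-based partitioning (partition = key mod (total_VNs)): this deals with hotspots fairly effectively, but cannot cope with changes in the set of nodes.

When the number of nodes changes, the divisor total_VNs changes, so nearly every key maps to a different partition and the previous partitioning becomes invalid.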

4. Consistent hashing: a near-ideal solution, and a decentralized design.
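To make the weakness of approach 3 concrete, here is a small Python sketch. The key names and node counts are made up, MD5 stands in for whatever hash a real system would use, and the modulo is applied to a hash of the key rather than the raw key. It counts how many keys change partition when the cluster grows from 10 to 11 nodes.

```python
import hashlib

def stable_hash(key: str) -> int:
    # MD5 instead of Python's built-in hash(), which is salted per process for str.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)

def partition(key: str, total_vns: int) -> int:
    # Approach 3: partition = hash(key) mod total_VNs
    return stable_hash(key) % total_vns

keys = [f"user:{i}" for i in range(10_000)]
moved = sum(partition(k, 10) != partition(k, 11) for k in keys)
print(f"{moved / len(keys):.1%} of keys change partition going from 10 to 11 nodes")
```

With a uniform hash, a key keeps its partition only when hash mod N equals hash mod (N+1), which holds for about 1/(N+1) of keys, so roughly 91% of keys move here.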

Consistent Hashing

The idea of consistent hashing was introduced by David Karger et al. in 1997 (cf. [KLL+97]) in the context of a paper about “a family of caching protocols for distributed networks that can be used to decrease or eliminate the occurrence of hot spots in the networks”.

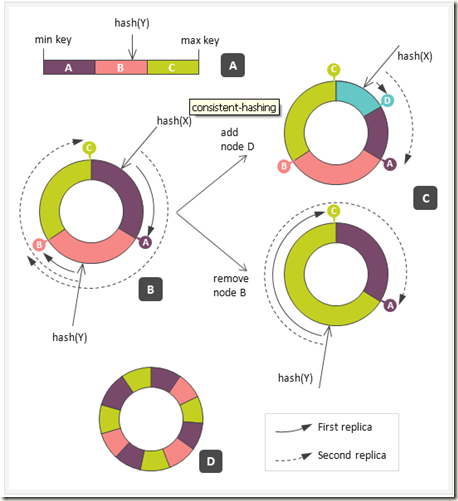

Panels A to B show the transition from ordinary hashing to consistent hashing.

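C: when nodes are added or removed, consistent hashing copes easily, since only the keys on the arc adjacent to the changed node are reassigned.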

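D: for load balance, virtual nodes are introduced, and each physical node can be assigned a different number of virtual nodes according to its capacity.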
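Panels C and D can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation from any particular system: MD5 as the ring hash, 100 virtual nodes per unit of weight, and the class name `ConsistentHashRing` are all assumptions. It checks two properties described above: adding a node only moves keys onto the new node, and a node with twice the weight receives roughly twice the keys.

```python
import bisect
import hashlib
from collections import Counter

def ring_pos(value: str) -> int:
    # Map a string to a position on a 2**32 ring (MD5 keeps it stable across runs).
    return int(hashlib.md5(value.encode()).hexdigest(), 16) % 2**32

class ConsistentHashRing:
    def __init__(self, vnodes_per_weight: int = 100):
        self.vnodes_per_weight = vnodes_per_weight  # hypothetical tuning knob
        self._positions = []   # sorted virtual-node positions
        self._owner = {}       # position -> physical node name

    def add(self, node: str, weight: int = 1) -> None:
        # A node with weight w gets w * vnodes_per_weight virtual nodes,
        # so a more capable machine covers a larger share of the ring.
        for i in range(weight * self.vnodes_per_weight):
            pos = ring_pos(f"{node}#{i}")
            if pos in self._owner:
                continue  # skip a (rare) collision with an existing virtual node
            bisect.insort(self._positions, pos)
            self._owner[pos] = node

    def remove(self, node: str) -> None:
        self._positions = [p for p in self._positions if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def lookup(self, key: str) -> str:
        # Walk clockwise to the first virtual node at or after the key, wrapping around.
        i = bisect.bisect_left(self._positions, ring_pos(key)) % len(self._positions)
        return self._owner[self._positions[i]]

ring = ConsistentHashRing()
ring.add("A")
ring.add("B")
ring.add("C", weight=2)  # C is assumed to have twice the capacity
keys = [f"key{i}" for i in range(20_000)]

before = {k: ring.lookup(k) for k in keys}
print(Counter(before.values()))  # C should hold roughly half of the keys

ring.add("D")
after = {k: ring.lookup(k) for k in keys}
moved = [k for k in keys if before[k] != after[k]]
# Every key that moved went to the new node D; no other key was reshuffled.
print(len(moved), all(after[k] == "D" for k in moved))
```

Removing D again restores exactly the previous assignment, which is the "simple handling" of node removal shown in panel C.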

For a concrete use of the algorithm, see Amazon's Dynamo, section 4.2 (Partitioning Algorithm).

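Using consistent hashing as-is has some efficiency problems. See Amazon's Dynamo, section 6.2 (Ensuring Uniform Load Distribution), for how Dynamo refines consistent hashing to achieve better load balance.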
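Among the strategies compared in Dynamo's section 6.2, the one reported to balance load best splits the hash space into Q equal-sized partitions and gives each of S nodes about Q/S of them, so partition boundaries never move and only placement changes. The sketch below models only that idea: `Q = 64`, the class name `FixedPartitionTable`, and the least-loaded reassignment on leave are hypothetical choices, and replication and the real membership protocol are ignored.

```python
import hashlib
from collections import Counter

Q = 64  # number of fixed, equal-sized partitions (hypothetical value)

def partition_of(key: str) -> int:
    # Cut the 128-bit MD5 space into Q equal ranges and return the range index.
    return int(hashlib.md5(key.encode()).hexdigest(), 16) * Q >> 128

class FixedPartitionTable:
    """Partition boundaries never move; only partition-to-node placement changes."""

    def __init__(self, nodes):
        # Deal partitions round-robin so each node starts with about Q/S of them.
        self.owner = [nodes[p % len(nodes)] for p in range(Q)]

    def counts(self) -> Counter:
        return Counter(self.owner)

    def lookup(self, key: str) -> str:
        return self.owner[partition_of(key)]

    def join(self, new_node: str) -> None:
        # The newcomer steals partitions one at a time from the most loaded node
        # until it holds floor(Q / S_new) of them.
        target = Q // (len(set(self.owner)) + 1)
        for _ in range(target):
            counts = Counter(n for n in self.owner if n != new_node)
            donor = max(counts, key=counts.get)
            self.owner[self.owner.index(donor)] = new_node

    def leave(self, node: str) -> None:
        # Hand each of the departing node's partitions to the least loaded survivor
        # (a deterministic stand-in for a random redistribution).
        for p, n in enumerate(self.owner):
            if n == node:
                counts = Counter(x for x in self.owner if x != node)
                self.owner[p] = min(counts, key=counts.get)

table = FixedPartitionTable(["A", "B", "C", "D"])
before = list(table.owner)
table.join("E")
moved = sum(old != new for old, new in zip(before, table.owner))
print(table.counts(), moved)  # A-D keep 13 partitions each, E gets 12; 12 partitions moved
```

Because partitions are fixed ranges, a membership change transfers whole partitions, which is cheaper to track than arbitrary arcs between random tokens.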

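As for who coordinates requests under consistent hashing, see Amazon's Dynamo, section 6.4, Client-driven or Server-driven Coordination.

Does the client need to keep a copy of the consistent-hash ring, and if so, how is that copy kept up to date?

If one extra hop of latency is acceptable, the client need not keep any ring information at all: it sends each request to any nearby server, and that server coordinates it.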
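A minimal sketch of the server-driven option, assuming every server knows the full ring with one token per server (the `Server` class is hypothetical, and a plain dict stands in for the network). A ring-unaware client contacts any server; if that server is not the coordinator for the key, it forwards the request, costing exactly one extra hop.

```python
import bisect
import hashlib

def ring_pos(value: str) -> int:
    return int(hashlib.md5(value.encode()).hexdigest(), 16) % 2**32

class Server:
    def __init__(self, name: str, cluster: dict):
        self.name = name
        self.cluster = cluster  # name -> Server; stands in for the network

    def coordinator_for(self, key: str) -> str:
        # Every server knows the full ring (one token per server in this sketch).
        ring = sorted((ring_pos(n), n) for n in self.cluster)
        positions = [p for p, _ in ring]
        return ring[bisect.bisect_left(positions, ring_pos(key)) % len(ring)][1]

    def handle(self, key: str, hops: int = 1):
        # `hops` counts messages so far; the client's request to this server is hop 1.
        coordinator = self.coordinator_for(key)
        if coordinator == self.name:
            return coordinator, hops
        # Not responsible for this key: forward to the coordinator, one extra hop.
        return self.cluster[coordinator].handle(key, hops + 1)

cluster = {}
for name in ["A", "B", "C", "D"]:
    cluster[name] = Server(name, cluster)

# A ring-unaware client simply sends everything to the nearest server, here "A".
for key in ["alpha", "beta", "gamma", "delta"]:
    print(key, cluster["A"].handle(key))
```

A client-driven design would instead cache the ring and run the equivalent of `coordinator_for` itself, saving the extra hop at the cost of keeping that cached ring fresh.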

Data replication

To provide high reliability from individually unreliable resources, we need to replicate the data partitions.

For how replication works on top of consistent hashing, see Amazon's Dynamo, section 4.3.
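Dynamo's section 4.3 stores each key on its coordinator and replicates it on the next N-1 nodes clockwise (the preference list), skipping ring positions so that only distinct physical nodes are chosen. A sketch of that walk, with hypothetical helpers `build_ring` and `preference_list` and an arbitrary 8 virtual nodes per server:

```python
import bisect
import hashlib

def ring_pos(value: str) -> int:
    return int(hashlib.md5(value.encode()).hexdigest(), 16) % 2**32

def build_ring(nodes, vnodes=8):
    # Each physical node gets `vnodes` positions (tokens) on the ring.
    return sorted((ring_pos(f"{n}#{i}"), n) for n in nodes for i in range(vnodes))

def preference_list(ring, key, n_replicas):
    """Walk clockwise from the key and collect the first n distinct physical nodes."""
    positions = [pos for pos, _ in ring]
    start = bisect.bisect_left(positions, ring_pos(key))
    result = []
    for step in range(len(ring)):
        _, node = ring[(start + step) % len(ring)]
        if node not in result:  # skip further virtual nodes of an already-chosen server
            result.append(node)
            if len(result) == n_replicas:
                break
    return result

ring = build_ring(["A", "B", "C", "D", "E"])
print(preference_list(ring, "cart:42", 3))  # coordinator first, then two replicas
```

Without the distinctness check, two consecutive virtual nodes of the same server could both be chosen, and losing that one machine would lose two "replicas" at once.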

Membership Changes

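In a partitioned database where nodes may join and leave the system at any time without impacting its operation, all nodes have to communicate with each other, especially when membership changes.

Consistent hashing handles dynamic membership well, but it only confines the impact to a small range: when a node joins or leaves, its adjacent nodes still have to adjust their ownership and transfer some data.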

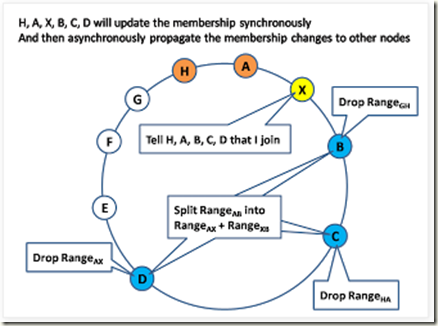

When a new node joins the system the following actions have to happen (cf. [Ho09a]):

1. The newly arriving node announces its presence and its identifier to adjacent nodes or to all nodes via broadcast.

2. The neighbors of the joining node react by adjusting their object and replica ownerships.

3. The joining node copies datasets it is now responsible for from its neighbors. This can be done in bulk and also asynchronously.

4. If, in step 1, the membership change has not been broadcasted to all nodes, the joining node is now announcing its arrival.
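A single-process sketch of steps 2 and 3, assuming one token per node and no replication (the `join` helper and the in-memory `store` are hypothetical). The joining node's arc is carved out of its clockwise successor, so only that one neighbor transfers data.

```python
import bisect
import hashlib

def ring_pos(value: str) -> int:
    return int(hashlib.md5(value.encode()).hexdigest(), 16) % 2**32

def owner(tokens, key):
    # tokens: sorted list of (position, node); the owner is the first token clockwise.
    positions = [p for p, _ in tokens]
    return tokens[bisect.bisect_left(positions, ring_pos(key)) % len(tokens)][1]

def join(tokens, store, new_node):
    """Add new_node to the ring and move in the keys it now owns.

    Hypothetical model: one token per node, no replicas, and `store`
    maps each node name to its local {key: value} dict."""
    new_pos = ring_pos(new_node)
    positions = [p for p, _ in tokens]
    # The neighbor that used to own the new node's arc is its clockwise successor.
    successor = tokens[bisect.bisect_left(positions, new_pos) % len(tokens)][1]
    tokens = sorted(tokens + [(new_pos, new_node)])
    # Copy only the successor's keys that now fall in the new node's arc.
    moving = {k: v for k, v in store[successor].items() if owner(tokens, k) == new_node}
    store[new_node] = moving
    for k in moving:
        del store[successor][k]  # a real system would drop these only after the copy completes
    return tokens, successor, len(moving)

nodes = ["A", "B", "C", "D"]
tokens = sorted((ring_pos(n), n) for n in nodes)
store = {n: {} for n in nodes}
for i in range(1000):
    key = f"k{i}"
    store[owner(tokens, key)][key] = i

tokens, donor, moved = join(tokens, store, "E")
print(f"E took over {moved} keys, all from its successor {donor}")
```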

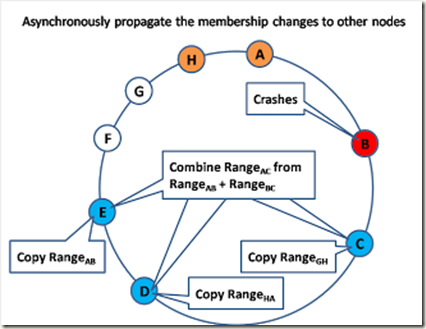

When a node leaves the system the following actions have to occur (cf. [Ho09a]):

1. Nodes within the system need to detect whether a node has left, as it might have crashed and not been able to notify the other nodes of its departure.

2. If a node’s departure has been detected, the neighbors of the node have to react by exchanging data with each other and adjusting their object and replica ownerships.
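Step 1 is commonly implemented with a timeout-based failure detector, and step 2 then hands the departed node's range to its neighbor. A minimal sketch, assuming a simple heartbeat timeout (hypothetical `HeartbeatDetector`, simulated clock) and one token per node without replicas; production systems such as Dynamo propagate membership with a gossip protocol, which this does not model.

```python
import bisect
import hashlib

def ring_pos(value: str) -> int:
    return int(hashlib.md5(value.encode()).hexdigest(), 16) % 2**32

def owner(nodes, key):
    # One token per node; the owner is the first node clockwise from the key.
    ring = sorted((ring_pos(n), n) for n in nodes)
    positions = [p for p, _ in ring]
    return ring[bisect.bisect_left(positions, ring_pos(key)) % len(ring)][1]

class HeartbeatDetector:
    """Suspect a node once no heartbeat has arrived for more than `timeout` seconds."""

    def __init__(self, timeout: float):
        self.timeout = timeout
        self.last_seen = {}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def suspected(self, now: float) -> set:
        return {n for n, t in self.last_seen.items() if now - t > self.timeout}

members = {"A", "B", "C", "D"}
detector = HeartbeatDetector(timeout=3.0)
for n in members:
    detector.heartbeat(n, now=0.0)
for n in members - {"C"}:  # C crashes silently and stops reporting
    detector.heartbeat(n, now=5.0)

departed = detector.suspected(now=5.0)
alive = members - departed
keys = [f"k{i}" for i in range(1000)]
orphaned = [k for k in keys if owner(members, k) in departed]
# Step 2: the departed node's keys fall to its clockwise neighbor; every other key stays put.
new_homes = {owner(alive, k) for k in orphaned}
print(departed, len(orphaned), new_homes)
```

A timeout cannot tell a crashed node from a slow or partitioned one, which is why real systems tune the timeout carefully and often treat such suspicion as temporary.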