案例来源

整体架构

- 概述

- 统计模块,召回历史最热、最近最热、平均评分最高、每类别评分Top10

- 离线推荐,基于ALS召回与用户最相近、与电影最相近的TopN电影

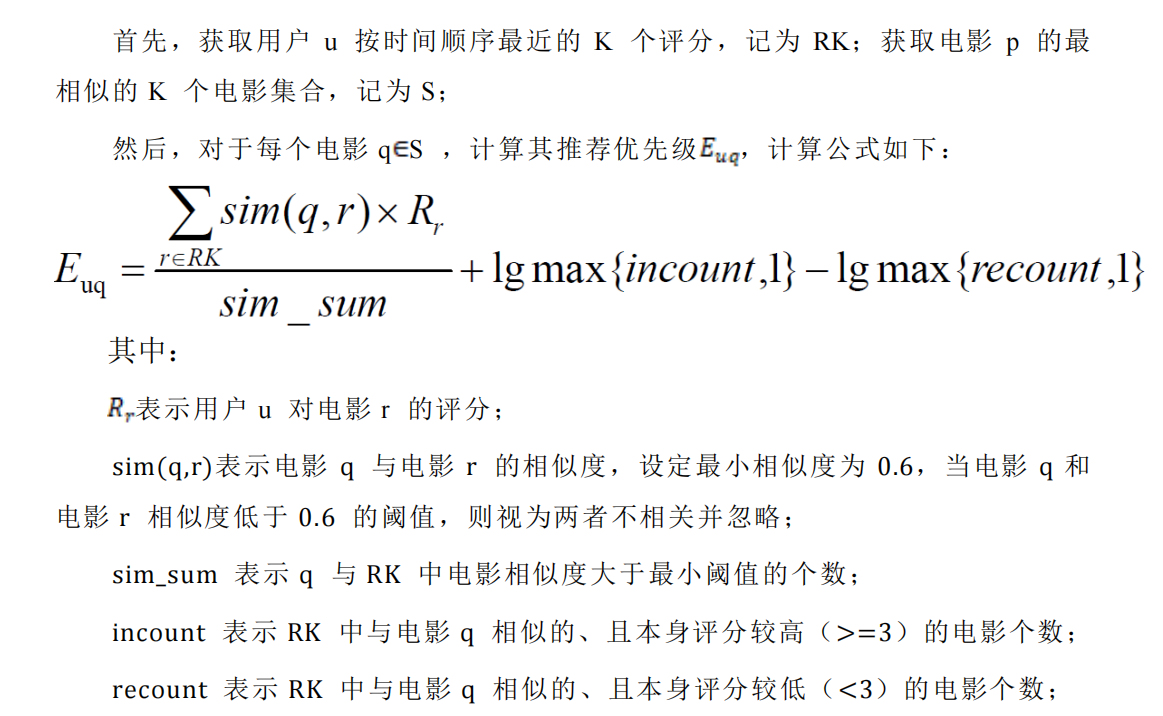

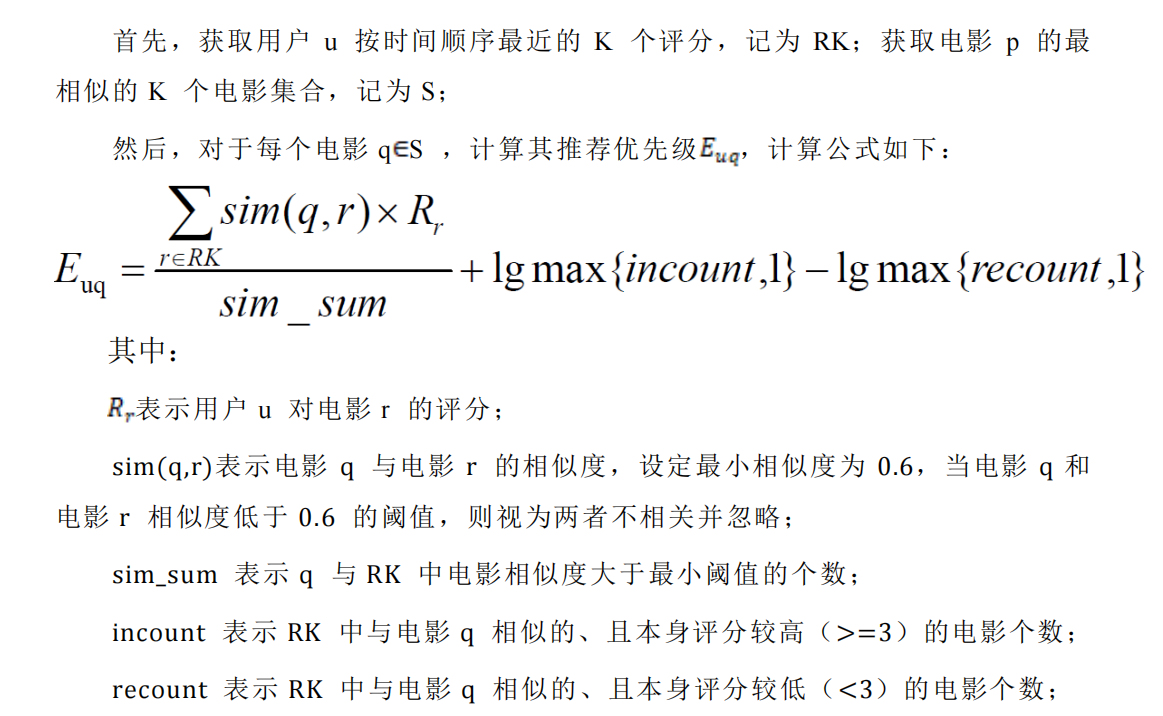

- 实时推荐,基于离线推荐计算的电影相似度矩阵,结合用户最近K次评分,计算当前评分电影的某个相似电影与最近K次评分电影的平均相似得分,混合增强减弱因子,获得当前评分电影的相似电影序列的排序结果

- 内容推荐,基于TF-IDF计算电影之间的相似度,获取电影相似度矩阵,召回逻辑未实现

- 问题:没有过滤模块,没有混排模块(视具体场景而定)

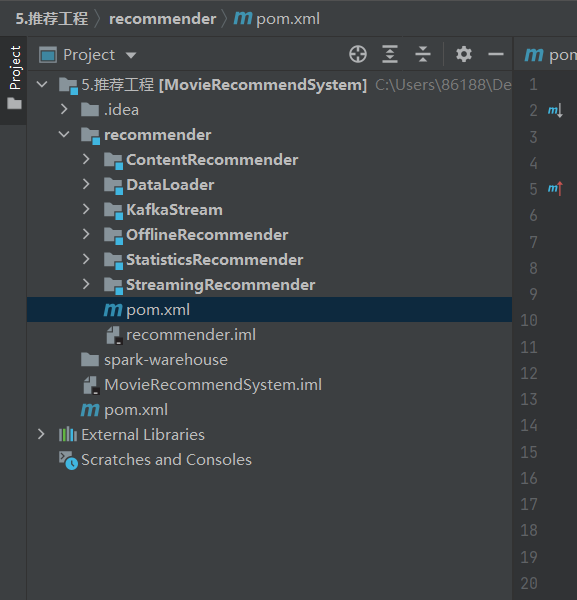

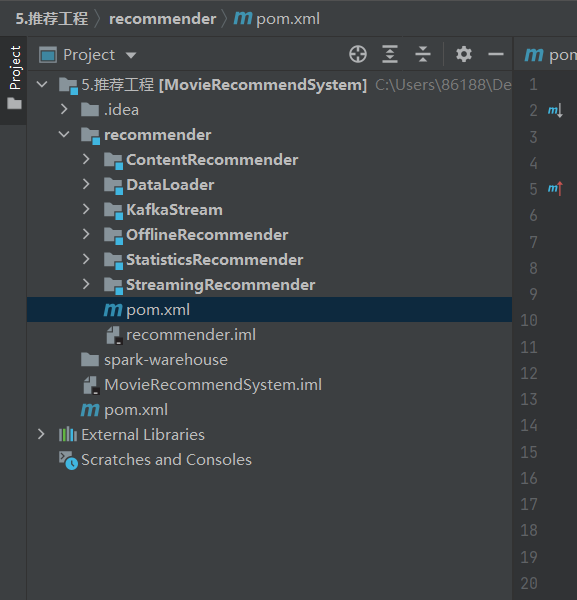

代码结构及pom文件配置

- 代码结构

- 推荐工程pom

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.lotuslaw</groupId>

<artifactId>MovieRecommendSystem</artifactId>

<packaging>pom</packaging>

<version>1.0-SNAPSHOT</version>

<modules>

<module>recommender</module>

</modules>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<log4j.version>1.2.17</log4j.version>

<slf4j.version>1.7.22</slf4j.version>

<mongodb-spark.version>2.0.0</mongodb-spark.version>

<casbah.version>3.1.1</casbah.version>

<elasticsearch-spark.version>5.6.2</elasticsearch-spark.version>

<elasticsearch.version>5.6.2</elasticsearch.version>

<redis.version>2.9.0</redis.version>

<kafka.version>0.10.2.1</kafka.version>

<spark.version>2.1.1</spark.version>

<scala.version>2.11.8</scala.version>

<jblas.version>1.2.1</jblas.version>

</properties>

<dependencies>

<!--引入共同的日志管理工具-->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jcl-over-slf4j</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${log4j.version}</version>

</dependency>

</dependencies>

<build>

<!--声明并引入子项目共有的插件-->

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.6.1</version>

<!--所有的编译用 JDK1.8-->

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

</plugins>

<pluginManagement>

<plugins>

<!--maven 的打包插件-->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

<!--该插件用于将 scala 代码编译成 class 文件-->

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.2.2</version>

<executions>

<!--绑定到 maven 的编译阶段-->

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</pluginManagement>

</build>

</project>

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>MovieRecommendSystem</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>recommender</artifactId>

<packaging>pom</packaging>

<modules>

<module>DataLoader</module>

<module>StatisticsRecommender</module>

<module>OfflineRecommender</module>

<module>StreamingRecommender</module>

<module>ContentRecommender</module>

<module>KafkaStream</module>

</modules>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencyManagement>

<dependencies>

<!-- 引入 Spark 相关的 Jar 包 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-graphx_2.11</artifactId>

<version>${spark.version}</version>

</dependency> <dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<!-- 父项目已声明该 plugin,子项目在引入的时候,不用声明版本和已经声明的配置 -->

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>recommender</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>DataLoader</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<!-- Spark 的依赖引入 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

</dependency>

<!-- 引入 Scala -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</dependency>

<!-- 加入 MongoDB 的驱动 -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>casbah-core_2.11</artifactId>

<version>${casbah.version}</version>

</dependency>

<dependency>

<groupId>org.mongodb.spark</groupId>

<artifactId>mongo-spark-connector_2.11</artifactId>

<version>${mongodb-spark.version}</version>

</dependency>

<!-- 加入 ElasticSearch 的驱动 -->

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>transport</artifactId>

<version>${elasticsearch.version}</version>

</dependency>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch-spark-20_2.11</artifactId>

<version>${elasticsearch-spark.version}</version>

<!-- 将不需要依赖的包从依赖路径中除去 -->

<exclusions>

<exclusion>

<groupId>org.apache.hive</groupId>

<artifactId>hive-service</artifactId>

</exclusion>

</exclusions>

</dependency>

</dependencies>

</project>

- StatisticsRecommender pom

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>recommender</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>StatisticsRecommender</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<!-- Spark 的依赖引入 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

</dependency>

<!-- 引入 Scala -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</dependency>

<!-- 加入 MongoDB 的驱动 -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>casbah-core_2.11</artifactId>

<version>${casbah.version}</version>

</dependency>

<dependency>

<groupId>org.mongodb.spark</groupId>

<artifactId>mongo-spark-connector_2.11</artifactId>

<version>${mongodb-spark.version}</version>

</dependency>

</dependencies>

</project>

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>recommender</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>OfflineRecommender</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>org.scalanlp</groupId>

<artifactId>jblas</artifactId>

<version>${jblas.version}</version>

</dependency>

<!-- 引入 Spark 相关的 Jar 包 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<!-- 加入 MongoDB 的驱动 -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>casbah-core_2.11</artifactId>

<version>${casbah.version}</version>

</dependency>

<dependency>

<groupId>org.mongodb.spark</groupId>

<artifactId>mongo-spark-connector_2.11</artifactId>

<version>${mongodb-spark.version}</version>

</dependency>

</dependencies>

</project>

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>recommender</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>StreamingRecommender</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<!-- Spark 的依赖引入 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

</dependency>

<!-- 引入 Scala -->

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</dependency>

<!-- 加入 MongoDB 的驱动 -->

<!-- 用于代码方式连接 MongoDB -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>casbah-core_2.11</artifactId>

<version>${casbah.version}</version>

</dependency>

<!-- 用于 Spark 和 MongoDB 的对接 -->

<dependency>

<groupId>org.mongodb.spark</groupId>

<artifactId>mongo-spark-connector_2.11</artifactId>

<version>${mongodb-spark.version}</version>

</dependency>

<!-- redis -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>3.2.0</version>

</dependency>

<!-- kafka -->

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>2.7.0</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-10_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

</dependencies>

</project>

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>recommender</artifactId>

<groupId>com.lotuslaw</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>ContentRecommender</artifactId>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

</properties>

<dependencies>

<dependency>

<groupId>org.scalanlp</groupId>

<artifactId>jblas</artifactId>

<version>${jblas.version}</version>

</dependency>

<!-- 引入 Spark 相关的 Jar 包 -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<!-- 加入 MongoDB 的驱动 -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>casbah-core_2.11</artifactId>

<version>${casbah.version}</version>

</dependency>

<dependency>

<groupId>org.mongodb.spark</groupId>

<artifactId>mongo-spark-connector_2.11</artifactId>

<version>${mongodb-spark.version}</version>

</dependency>

</dependencies>

</project>

各模块代码

package com.lotuslaw.recommender

import com.mongodb.casbah.commons.MongoDBObject

import com.mongodb.casbah.{MongoClient, MongoClientURI}

import org.apache.spark.SparkConf

import org.apache.spark.sql.{DataFrame, SparkSession}

import org.elasticsearch.action.admin.indices.create.CreateIndexRequest

import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest

import org.elasticsearch.action.admin.indices.exists.indices.IndicesExistsRequest

import org.elasticsearch.common.settings.Settings

import org.elasticsearch.common.transport.InetSocketTransportAddress

import org.elasticsearch.transport.client.PreBuiltTransportClient

import java.net.InetAddress

/**

* @author: lotuslaw

* @version: V1.0

* @package: com.lotuslaw.recommender

* @create: 2021-08-23 22:49

* @description:

*/

/**

* Movie 数据集

* 260 电影ID:mid

* Star Wars: Episode IV - A New Hope (1977) 电影名称:name

* Princess Leia is captured and held hostage by the evil 详情描述:descri

* 121 minutes 时长:timelong

* September 21, 2004 发行时间:issue

* 1977 拍摄时间:shoot

* English 语言:language

* Action|Adventure|Sci-Fi 类型:genres

* Mark Hamill|Harrison Ford|Carrie Fisher|Peter Cushing|Alec 演员表:actors

* George Lucas 导演:directors

*/

case class Movie(mid: Int, name: String, descri: String, timelong: String, issue: String, shoot: String, language: String,

genres: String, actors: String, directors: String)

/**

* Ratings 数据集

* 1,31,2.5,1260759144

*/

case class Rating(uid: Int, mid: Int, score: Double, timestamp: Int)

/**

* Tags 数据集

* 15,1955,dentist,1193435061

*/

case class Tag(uid: Int, mid: Int, tag: String, timestamp: Int)

// 把mongo和ES的配置封装成样例类

/**

*

* @param uri MongoDB连接

* @param db MongoDB数据库

*/

case class MongoConfig(uri: String, db: String)

/**

*

* @param httpHosts http主机列表

* @param transportHosts transport主机列表

* @param index 需要操作的索引

* @param clustername 集群名称,es-cluster

*/

case class ESConfig(httpHosts: String, transportHosts: String, index: String, clustername: String)

object DataLoader {

// 定义常量

val MOVIE_DATA_PATH = "C:\Users\86188\Desktop\推荐系统课程\5.推荐工程\recommender\DataLoader\src\main\resources\movies.csv"

val RATING_DATA_PATH = "C:\Users\86188\Desktop\推荐系统课程\5.推荐工程\recommender\DataLoader\src\main\resources\ratings.csv"

val TAG_DATA_PATH = "C:\Users\86188\Desktop\推荐系统课程\5.推荐工程\recommender\DataLoader\src\main\resources\tags.csv"

val MONGODB_MOVIE_COLLECTION = "Movie"

val MONGODB_RATING_COLLECTION = "Rating"

val MONGODB_TAG_COLLECTION = "Tag"

val ES_MOVIE_INDEX = "Movie"

def main(args: Array[String]): Unit = {

val config = Map(

"spark.cores" -> "local[*]",

"mongo.uri" -> "mongodb://192.168.88.132:27017/recommender",

"mongo.db" -> "recommender",

"es.httpHosts" -> "linux:9200",

"es.transportHosts" -> "linux:9300",

"es.index" -> "recommender",

"es.cluster.name" -> "es-cluster"

)

// 创建一个sparkConf

val sparkConf: SparkConf = new SparkConf().setMaster(config("spark.cores")).setAppName("DataLoader")

// 创建一个sparkSession

val spark = SparkSession.builder().config(sparkConf).getOrCreate()

import spark.implicits._

// 加载数据

val movieRDD = spark.sparkContext.textFile(MOVIE_DATA_PATH)

val movieDF = movieRDD.map{

item => {

val attr = item.split("\^")

Movie(attr(0).toInt, attr(1).trim, attr(2).trim, attr(3).trim, attr(4).trim, attr(5).trim, attr(6).trim, attr(7).trim, attr(8).trim, attr(9).trim)

}

}.toDF()

val ratingRDD = spark.sparkContext.textFile(RATING_DATA_PATH)

val ratingDF = ratingRDD.map{

item => {

val attr = item.split(",")

Rating(attr(0).toInt, attr(1).toInt, attr(2).toDouble, attr(3).toInt)

}

}.toDF()

val tagRDD = spark.sparkContext.textFile(TAG_DATA_PATH)

val tagDF = tagRDD.map{

item => {

val attr = item.split(",")

Tag(attr(0).toInt, attr(1).toInt, attr(2).trim, attr(3).toInt)

}

}.toDF()

implicit val mongoConfig: MongoConfig = MongoConfig(config("mongo.uri"), config("mongo.db"))

// 将数据保存到MongoDB

storeDataInMongoDB(movieDF, ratingDF, tagDF)

// 数据预处理,把movie对应的tag信息添加进去,加一列 tag1|tag2|tag3...

import org.apache.spark.sql.functions._

/**

* mid, tags

* tags: tag1|tag2|tag3...

*/

val newTag = tagDF.groupBy($"mid")

.agg(concat_ws("|", collect_set($"tag")).as("tags"))

.select("mid", "tags")

// 对newTag和movie做join,数据合并在一起,左外连接

val movieWithTagsDF = movieDF.join(newTag, Seq("mid"), "left")

implicit val esConfig: ESConfig = ESConfig(config("es.httpHosts"), config("es.transportHosts"), config("es.index"), config("es.cluster.name"))

// 保存数据到ES

storeDataInES(movieWithTagsDF)

// spark.stop()

}

def storeDataInMongoDB(movieDF: DataFrame, ratingDF: DataFrame, tagDF: DataFrame)(implicit mongoConfig: MongoConfig): Unit = {

// 新建一个mongodb的连接

val mongoClient = MongoClient(MongoClientURI(mongoConfig.uri))

// 如果mongodb中已经有相应的数据库,先删除

mongoClient(mongoConfig.db)(MONGODB_MOVIE_COLLECTION).dropCollection()

mongoClient(mongoConfig.db)(MONGODB_RATING_COLLECTION).dropCollection()

mongoClient(mongoConfig.db)(MONGODB_TAG_COLLECTION).dropCollection()

// 将数据写入mongodb表中

movieDF.write

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_MOVIE_COLLECTION)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

ratingDF.write

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_RATING_COLLECTION)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

tagDF.write

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_TAG_COLLECTION)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

// 对数据表建索引

// 1 为指定按升序创建索引

mongoClient(mongoConfig.db)(MONGODB_MOVIE_COLLECTION).createIndex(MongoDBObject("mid" -> 1))

mongoClient(mongoConfig.db)(MONGODB_RATING_COLLECTION).createIndex(MongoDBObject("uid" -> 1))

mongoClient(mongoConfig.db)(MONGODB_RATING_COLLECTION).createIndex(MongoDBObject("mid" -> 1))

mongoClient(mongoConfig.db)(MONGODB_TAG_COLLECTION).createIndex(MongoDBObject("uid" -> 1))

mongoClient(mongoConfig.db)(MONGODB_TAG_COLLECTION).createIndex(MongoDBObject("mid" -> 1))

mongoClient.close()

}

def storeDataInES(movieDF: DataFrame)(implicit eSConfig: ESConfig): Unit = {

// 新建es配置

val settings: Settings = Settings.builder().put("cluster.name", eSConfig.clustername).build()

// 新建一个es客户端

val esClient = new PreBuiltTransportClient(settings)

val REGEX_HOST_PORT = "(.+):(\d+)".r

eSConfig.transportHosts.split(",").foreach{

case REGEX_HOST_PORT(host: String, port: String) =>

esClient.addTransportAddress(new InetSocketTransportAddress(InetAddress.getByName(host), port.toInt))

}

// 先清理遗留的数据

if(esClient.admin().indices().exists(new IndicesExistsRequest(eSConfig.index))

.actionGet()

.isExists

) {

esClient.admin().indices().delete(new DeleteIndexRequest(eSConfig.index))

}

esClient.admin().indices().create(new CreateIndexRequest(eSConfig.index))

movieDF.write

.option("es.nodes", eSConfig.httpHosts)

.option("es.http.timeout", "100m")

.option("es.mapping.id", "mid")

.option("es.nodes.wan.only","true")

.mode("overwrite")

.format("org.elasticsearch.spark.sql")

.save(eSConfig.index + "/" + ES_MOVIE_INDEX)

}

}

package com.lotuslaw.statistics

import org.apache.spark.SparkConf

import org.apache.spark.sql.{DataFrame, SparkSession}

import java.text.SimpleDateFormat

import java.util.Date

/**

* @author: lotuslaw

* @version: V1.0

* @package: com.lotuslaw.statistics

* @create: 2021-08-24 11:24

* @description:

*/

case class Movie(mid: Int, name: String, descri: String, timelong: String, issue: String, shoot: String, language: String,

genres: String, actors: String, directors: String)

case class Rating(uid: Int, mid: Int, score: Double, timestamp: Int)

case class MongoConfig(uri: String, db: String)

// 定义一个基础推荐对象

case class Recommendation(mid: Int, score: Double)

// 定义电影类别top10推荐对象

case class GenresRecommendation(genres: String, recs: Seq[Recommendation])

object StatisticsRecommender {

// 定义表名

val MONGODB_MOVIE_COLLECTION = "Movie"

val MONGODB_RATING_COLLECTION = "Rating"

// 统计表的名称

val RATE_MORE_MOVIES = "RateMoreMovies"

val RATE_MORE_RECENTLY_MOVIES = "RateMoreRecentlyMovies"

val AVERAGE_TOP_MOVIES = "AverageMovies"

val GENRES_TOP_MOVIES = "GenresTopMovies"

def main(args: Array[String]): Unit = {

val config = Map(

"spark.cores" -> "local[*]",

"mongo.uri" -> "mongodb://linux:27017/recommender",

"mongo.db" -> "recommender"

)

// 创建一个sparkConf

val sparkConf: SparkConf = new SparkConf().setMaster(config("spark.cores")).setAppName("DataLoader")

// 创建一个sparkSession

val spark = SparkSession.builder().config(sparkConf).getOrCreate()

import spark.implicits._

implicit val mongoConfig: MongoConfig = MongoConfig(config("mongo.uri"), config("mongo.db"))

// 从mongodb加载数据

val ratingDF = spark.read

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_RATING_COLLECTION)

.format("com.mongodb.spark.sql")

.load()

.as[Rating]

.toDF()

val movieDF = spark.read

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_MOVIE_COLLECTION)

.format("com.mongodb.spark.sql")

.load()

.as[Movie]

.toDF()

// 创建名为ratings的临时表

ratingDF.createOrReplaceTempView("ratings")

// TODO: 不同的统计推荐结果

// 1.历史热门统计:历史评分数据最多,mid,count

val rateMoreMoviesDF = spark.sql("select mid, count(mid) as count from ratings group by mid")

// 把结果写入对应的mongodb表中

storeDFInMongoDB(rateMoreMoviesDF, RATE_MORE_MOVIES)

// 2.近期热门统计:按照"yyyyMM"格式选取最近的评分数据,统计评分个数

// 创建一个日期格式化工具

val simpleDateFormat = new SimpleDateFormat("yyyyMM")

// 注册udf,把时间戳转换成年月格式

spark.udf.register("changDate", (x: Int) => simpleDateFormat.format(new Date(x * 1000L)).toInt)

// 对原始数据做处理,去掉uid

val ratingOfYearMonth = spark.sql("select mid, score, changDate(timestamp) as yearmonth from ratings")

ratingOfYearMonth.createOrReplaceTempView("ratingOfMonth")

// 从ratingOfMonth中查找电影在各个月份的评分,mid,count,yearmonth

val rateMoreRecentlyMoviesDF = spark.sql("select mid, count(mid) as count, yearmonth from ratingOfMonth group by yearmonth, mid order by yearmonth desc, count desc")

// 存入mongodb

storeDFInMongoDB(rateMoreRecentlyMoviesDF, RATE_MORE_RECENTLY_MOVIES)

// 3.优质电影统计,统计电影的平均评分

val averageMoviesDF = spark.sql("select mid, avg(score) as avg from ratings group by mid")

storeDFInMongoDB(averageMoviesDF, AVERAGE_TOP_MOVIES)

// 4.各类别电影Top统计

// 定义所有类别

val genres = List("Action","Adventure","Animation","Comedy","Crime","Documentary","Drama","Famiy","Fantasy","Foreign","History","Horror","Music","Mystery","Romance","Science","Tv","Thriller","War","Western")

// 把平均评分加入movie表里,加一列,inner join

val movieWithScore = movieDF.join(averageMoviesDF, "mid")

// 为做笛卡尔积,把genres转成rdd

val genresRDD = spark.sparkContext.makeRDD(genres)

// 计算类别top10, 首先对类别和电影做笛卡尔积

val genresTopMoviesDF = genresRDD.cartesian(movieWithScore.rdd)

.filter{

// 条件过滤找出movie的字段genres值包含当前类别genre的那些

case (genre, movieRow) => movieRow.getAs[String]("genres").toLowerCase.contains(genre.toLowerCase())

}

.map{

case (genre, movieRow) => (genre, (movieRow.getAs[Int]("mid"), movieRow.getAs[Double]("avg")))

}

.groupByKey()

.map{

case (genre, items) => GenresRecommendation(genre, items.toList.sortWith(_._2>_._2).take(10).map{

items => Recommendation(items._1, items._2)

})

}

.toDF()

storeDFInMongoDB(genresTopMoviesDF, GENRES_TOP_MOVIES)

spark.stop()

}

def storeDFInMongoDB(df: DataFrame, collection_name: String)(implicit mongoConfig: MongoConfig): Unit = {

df.write

.option("uri", mongoConfig.uri)

.option("collection", collection_name)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

}

}

package com.lotuslaw.offline

import org.apache.spark.SparkConf

import org.apache.spark.mllib.recommendation.{ALS, Rating}

import org.apache.spark.sql.SparkSession

import org.jblas.DoubleMatrix

/**

* @author: lotuslaw

* @version: V1.0

* @package: com.lotuslaw.offline

* @create: 2021-08-24 14:49

* @description:

*/

// 基于评分数据的隐语义模型只需要rating数据

case class MovieRating(uid: Int, mid: Int, score: Double, timestamp: Int)

case class MongoConfig(uri: String, db: String)

case class Recommendation(mid: Int, score: Double)

// 定义基于预测评分的用户推荐列表

case class UserRecs(uid: Int, recs: Seq[Recommendation])

// 定义基于LFM电影特征向量的电影相似度列表

case class MovieRecs(mid: Int, recs: Seq[Recommendation])

object OfflineRecommender {

// 定义表名和常量

val MONGODB_RATING_COLLECTION = "Rating"

val USER_RECS = "UserRecs"

val MOVIE_RECS = "MovieRecs"

val USER_MAX_RECOMMENTDATION = 20

def main(args: Array[String]): Unit = {

val config = Map(

"spark.cores" -> "local[*]",

"mongo.uri" -> "mongodb://linux:27017/recommender",

"mongo.db" -> "recommender"

)

val sparkConf: SparkConf = new SparkConf().setMaster(config("spark.cores")).setAppName("OfflineRecommender")

val spark = SparkSession.builder().config(sparkConf).getOrCreate()

import spark.implicits._

implicit val mongoConfig: MongoConfig = MongoConfig(config("mongo.uri"), config("mongo.db"))

// 加载数据

val ratingRDD = spark.read

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_RATING_COLLECTION)

.format("com.mongodb.spark.sql")

.load()

.as[MovieRating]

.rdd

.map{rating => (rating.uid, rating.mid, rating.score)} // 转化成rdd并去掉时间戳

.cache()

// 从rating数据中提取所有的uid,mid,并去重

val userRDD = ratingRDD.map(_._1).distinct()

val movieRDD = ratingRDD.map(_._2).distinct()

// 训练隐语义模型

val trainData = ratingRDD.map(x => Rating(x._1, x._2, x._3))

val (rank, iterations, lambda) = (100, 5, 0.1)

val model = ALS.train(trainData, rank, iterations, lambda)

// 基于用户和电影的隐特征,计算预测评分,得到用户的推荐列表

// 计算user和movie的笛卡尔积,得到一个评分矩阵

val userMovies = userRDD.cartesian(movieRDD)

// 调用model的predict方法预测评分

val preRatings = model.predict(userMovies)

val userRecs = preRatings

.filter(_.rating > 0) // 过滤出评分大于0的项

.map(rating => (rating.user, (rating.product, rating.rating)))

.groupByKey()

.map{

case (uid, recs) => UserRecs(uid, recs.toList.sortWith(_._2>_._2).take(USER_MAX_RECOMMENTDATION).map(x=>Recommendation(x._1, x._2)))

}

.toDF()

userRecs.write

.option("uri", mongoConfig.uri)

.option("collection", USER_RECS)

.format("com.mongodb.spark.sql")

.save()

// 基于电影隐特征计算相似度矩阵,得到电影的相似度列表

val movieFeatures = model.productFeatures.map{

case (mid, features) => (mid, new DoubleMatrix(features))

}

// 对所有电影两两计算他们的相似度,先做笛卡尔积

val movieRecs = movieFeatures.cartesian(movieFeatures)

.filter{

// 把自己跟自己的配对过滤掉

case (a, b) => a._1 != b._1

}

.map{

case (a, b) =>

val simScore = this.consinSim(a._2, b._2)

(a._1, (b._1, simScore))

}

.filter(_._2._2>0.6) // 过滤出相似度大于0.6的

.groupByKey()

.map{

case (mid, items) => MovieRecs(mid, items.toList.sortWith(_._2>_._2).map(x=>Recommendation(x._1, x._2)))

}

.toDF()

movieRecs.write

.option("uri", mongoConfig.uri)

.option("collection", MOVIE_RECS)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

spark.stop()

}

// 求向量余弦相似度

def consinSim(movie1: DoubleMatrix, movie2: DoubleMatrix): Double = {

movie1.dot(movie2) / (movie1.norm2() * movie2.norm2())

}

}

- StreamingRecommender

package com.lotuslaw.streaming

import com.mongodb.casbah.commons.MongoDBObject

import com.mongodb.casbah.{MongoClient, MongoClientURI}

import org.apache.kafka.common.serialization.StringDeserializer

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies}

import org.apache.spark.streaming.{Seconds, StreamingContext}

import redis.clients.jedis.Jedis

/**

* @author: lotuslaw

* @version: V1.0

* @package: com.lotuslaw.streaming

* @create: 2021-08-24 16:29

* @description:

*/

// 定义连接助手对象,序列化

object ConnHelper extends Serializable {

lazy val jedis = new Jedis("linux")

lazy val mongoClient: MongoClient = MongoClient(MongoClientURI("mongodb://linux:27017/recommender"))

}

case class MongoConfig(uri: String, db: String)

// 标准推荐

case class Recommendation(mid: Int, score: Double)

// 用户的推荐

case class UserRecs(uid: Int, recs: Seq[Recommendation])

//电影的相似度

case class MovieRecs(mid: Int, recs: Seq[Recommendation])

object StreamingRecommender {

// 定义表名与常量

val MAX_USER_RATINGS_NUM = 20

val MAX_SIM_MOVIES_NUM = 20

val MONGODB_STREAM_RECS_COLLECTION = "StreamRecs"

val MONGODB_RATING_COLLECTION = "Rating"

val MONGODB_MOVIE_RECS_COLLECTION = "MovieRecs"

def main(args: Array[String]): Unit = {

val config = Map(

"spark.cores" -> "local[*]",

"mongo.uri" -> "mongodb://linux:27017/recommender",

"mongo.db" -> "recommender",

"kafka.topic" -> "recommender"

)

val sparkConf: SparkConf = new SparkConf().setMaster(config("spark.cores")).setAppName("StreamingRecommender")

val spark = SparkSession.builder().config(sparkConf).getOrCreate()

// 拿到streaming context

val sc = spark.sparkContext

val ssc = new StreamingContext(sc, Seconds(2)) // batch duration

import spark.implicits._

implicit val mongoConfig: MongoConfig = MongoConfig(config("mongo.uri"), config("mongo.db"))

// 加载电影相似度矩阵数据,把它广播出去

val simMovieMatrix = spark.read

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_MOVIE_RECS_COLLECTION)

.format("com.mongodb.spark.sql")

.load()

.as[MovieRecs]

.rdd

.map { movieRecs => // 为了查询相似度方便,转换成map

(movieRecs.mid, movieRecs.recs.map(x=>(x.mid, x.score)).toMap)

}.collectAsMap()

val simMovieMatrixBroadCast = sc.broadcast(simMovieMatrix)

// 定义kafka连接参数

val kafkaParam = Map(

"bootstrap.servers" -> "linux:9092",

"key.deserializer" -> classOf[StringDeserializer],

"value.deserializer" -> classOf[StringDeserializer],

"group.id" -> "recommender",

"auto.offset.reset" -> "latest"

)

// 通过kafka创建一个DSteam

val kafkaStream = KafkaUtils.createDirectStream[String, String](

ssc,

LocationStrategies.PreferConsistent,

ConsumerStrategies.Subscribe[String, String](Array(config("kafka.topic")), kafkaParam)

)

// 把原始数据UID|MID|SCORE|TIMESTAMP转换成评分流

val ratingStream = kafkaStream.map {

msg =>

val attr = msg.value().split("\|")

(attr(0).toInt, attr(1).toInt, attr(2).toDouble, attr(3).toInt)

}

// 继续做流式处理,核心算法部分

ratingStream.foreachRDD{

rdds => rdds.foreach{

case (uid, mid, score, timestamp) =>

println("rating data coming! >>>>>>>>>>>>>>>>>>")

// 从redis里获取当前用户最近的k次评分,保存成Array[(mid, score)]

val userRecntlyRatings = getUserRecentlyRating(MAX_USER_RATINGS_NUM, uid, ConnHelper.jedis)

// 从相似度矩阵中取出当前电影最相似的N个电影,作为备选列表,Array[mid]

val candidateMovies = getTopSimMovies(MAX_SIM_MOVIES_NUM, mid, uid, simMovieMatrixBroadCast.value)

// 对每个备选电影,计算推荐优先级,得到当前用户的实时推荐列表,Array[(mid, score)]

val streamRecs = computeMovieScore(candidateMovies, userRecntlyRatings, simMovieMatrixBroadCast.value)

// 把推荐数据保存到mongodb

saveDataToMongoDB(uid, streamRecs)

}

}

// 开始接收和处理数据

ssc.start()

println(">>>>>>>>>>>>>>> straming started")

ssc.awaitTermination()

}

// Redis操作返回的是java类,为了用map操作需要引入转换类

import scala.collection.JavaConversions._

def getUserRecentlyRating(num: Int, uid: Int, jedis: Jedis): Array[(Int, Double)] = {

// 从Redis读取数据,用户评分数据保存在uid:UID 为key的队列里,value是MID:SCORE

jedis.lrange("uid:" + uid, 0, num-1)

.map{

item =>

val attr = item.split("\:")

(attr(0).trim.toInt, attr(1).trim.toDouble)

}

.toArray

}

/**

* 获取当前电影最相似的num个电影,作为备选电影

* @param num 相似电影数量

* @param mid 当前电影ID

* @param uid 当前评分用户ID

* @param simMovies 相似度矩阵

* @return 过滤之后的备选电影列表

*/

def getTopSimMovies(num: Int, mid: Int, uid: Int, simMovies: scala.collection.Map[Int, scala.collection.immutable.Map[Int, Double]])(implicit mongoConfig: MongoConfig): Array[Int] = {

// 从相似度矩阵中拿到所有相似的电影

val allSimMovies = simMovies(mid).toArray

// 从mongodb中查询用户已看过的电影

val ratingExist = ConnHelper.mongoClient(mongoConfig.db)(MONGODB_RATING_COLLECTION)

.find(MongoDBObject("uid" -> uid))

.toArray

.map{

item => item.get("mid").toString.toInt

}

// 把看过的过滤,得到输出列表

allSimMovies.filter(x => ! ratingExist.contains(x._1))

.sortWith(_._2>_._2)

.take(num)

.map(x => x._1)

}

def computeMovieScore(candidateMovies: Array[Int], userRecentlyRatings: Array[(Int, Double)], simMovies: scala.collection.Map[Int, scala.collection.immutable.Map[Int, Double]]): Array[(Int, Double)] = {

// 定义一个ArrayBuffer,用于保存每一个备选电影的基础得分

val scores = scala.collection.mutable.ArrayBuffer[(Int, Double)]()

// 定义一个HashMap,保存每一个备选定影的增强减弱因子

val increMap = scala.collection.mutable.HashMap[Int, Int]()

val decreMap = scala.collection.mutable.HashMap[Int, Int]()

for (candidateMovie <- candidateMovies; userRecentlyRating <- userRecentlyRatings) {

// 拿到备选电影和最近评分电影的相似度

val simScore = getMoviesSimScore(candidateMovie, userRecentlyRating._1, simMovies)

if (simScore > 0.7) {

// 计算备选电影的基础推荐得分

scores += ((candidateMovie, simScore * userRecentlyRating._2))

if (userRecentlyRating._2 > 3) {

increMap(candidateMovie) = increMap.getOrDefault(candidateMovie, 0) + 1

} else {

decreMap(candidateMovie) = decreMap.getOrDefault(candidateMovie, 0) + 1

}

}

}

// 根据备选电影的mid做groupby,根据公式去求最后的推荐评分

scores.groupBy(_._1).map{

// groupBy之后得到的数据 Map(mid -> ArrayBuffer[(mid, score)])

case (mid, scoreList) =>

(mid, scoreList.map(_._2).sum / scoreList.length + log(increMap.getOrDefault(mid, 1)) - log(decreMap.getOrDefault(mid, 1)))

}.toArray

}

// 获取两个电影之间的相似度

def getMoviesSimScore(mid1: Int, mid2: Int, simMovies: scala.collection.Map[Int, scala.collection.immutable.Map[Int, Double]]): Double = {

simMovies.get(mid1) match {

case Some(sims) => sims.get(mid2) match {

case Some(score) => score

case None => 0.0

}

case None => 0.0

}

}

// 求一个数的对数

def log(m: Int): Double = {

val N = 10

math.log(m) / math.log(N)

}

def saveDataToMongoDB(uid: Int, streamRecs: Array[(Int, Double)])(implicit mongoConfig: MongoConfig): Unit = {

// 定义到StreamRecs表的连接

val streamRecsCollection = ConnHelper.mongoClient(mongoConfig.db)(MONGODB_STREAM_RECS_COLLECTION)

// 如果表中已有uid对应的数据,先删除

streamRecsCollection.findAndRemove(MongoDBObject("uid" -> uid))

// 将streamRecs存入表中

streamRecsCollection.insert(MongoDBObject("uid" -> uid, "recs" -> streamRecs.map(x=>MongoDBObject("mid"->x._1, "score"->x._2))))

}

}

package com.lotuslaw.content

import org.apache.spark.SparkConf

import org.apache.spark.ml.feature.{HashingTF, IDF, Tokenizer}

import org.apache.spark.ml.linalg.SparseVector

import org.apache.spark.sql.SparkSession

import org.jblas.DoubleMatrix

/**

* @author: lotuslaw

* @version: V1.0

* @package: com.lotuslaw.content

* @create: 2021-08-24 19:37

* @description:

*/

// 需要的数据源是电影内容信息

case class Movie(mid: Int, name: String, descri: String, timelong: String, issue: String, shoot: String, language: String,

genres: String, actors: String, directors: String)

case class MongoConfig(uri: String, db: String)

case class Recommendation(mid: Int, score: Double)

// 定义基于电影内容信息提取出的特征向量的电影相似度列表

case class MovieRecs(mid: Int, recs: Seq[Recommendation])

object ContentRecommender {

// 定义常量及表名

val MONGODB_MOVIE_COLLECTION = "Movie"

val CONTENT_MOVIE_RECS = "ContentMovieRecs"

def main(args: Array[String]): Unit = {

val config = Map(

"spark.cores" -> "local[*]",

"mongo.uri" -> "mongodb://linux:27017/recommender",

"mongo.db" -> "recommender"

)

val sparkConf: SparkConf = new SparkConf().setMaster(config("spark.cores")).setAppName("OfflineRecommender")

val spark = SparkSession.builder().config(sparkConf).getOrCreate()

import spark.implicits._

implicit val mongoConfig: MongoConfig = MongoConfig(config("mongo.uri"), config("mongo.db"))

// 加载数据并做预处理

val movieTagsDF = spark.read

.option("uri", mongoConfig.uri)

.option("collection", MONGODB_MOVIE_COLLECTION)

.format("com.mongodb.spark.sql")

.load()

.as[Movie]

.map(

x => (x.mid, x.name, x.genres.map(c=>if(c=='|') ' ' else c))

)

.toDF("mid", "name", "genres")

.cache()

// TODO: 从内容信息中提取电影特征向量

// 核心部分,用TF-IDF从内容信息中提取电影特征向量

// 创建一个分词器,默认按照空格分词

val tokenizer = new Tokenizer().setInputCol("genres").setOutputCol("words")

// 用分词器对原始数据做转换,生成新的一列words

val wordsData = tokenizer.transform(movieTagsDF)

// 引入HashingTF工具,可以把一个词语序列转化成对应的词频

val hasingTF = new HashingTF().setInputCol("words").setOutputCol("rawFeatures").setNumFeatures(50)

val featurizeData = hasingTF.transform(wordsData)

// 引入IDF工具,可以得到idf模型

val idf = new IDF().setInputCol("rawFeatures").setOutputCol("features")

// 训练idf模型,得到每个词的逆文档频率

val idfModel = idf.fit(featurizeData)

// 用模型对元数据进行处理,得到文档中每个词的tf-idf,作为新的特征向量

val rescaledData = idfModel.transform(featurizeData)

val movieFeatures = rescaledData.map(

row => (row.getAs[Int]("mid"), row.getAs[SparseVector]("features").toArray)

)

.rdd

.map(

x => (x._1, new DoubleMatrix(x._2))

)

// 对所有电影两两计算他们的相似度,先做笛卡尔积

val movieRecs = movieFeatures.cartesian(movieFeatures)

.filter{

// 把自己跟自己的配对过滤掉

case (a, b) => a._1 != b._1

}

.map{

case (a, b) =>

val simScore = this.consinSim(a._2, b._2)

(a._1, (b._1, simScore))

}

.filter(_._2._2>0.6) // 过滤出相似度大于0.6的

.groupByKey()

.map{

case (mid, items) => MovieRecs(mid, items.toList.sortWith(_._2>_._2).map(x=>Recommendation(x._1, x._2)))

}

.toDF()

movieRecs.write

.option("uri", mongoConfig.uri)

.option("collection", CONTENT_MOVIE_RECS)

.mode("overwrite")

.format("com.mongodb.spark.sql")

.save()

spark.stop()

}

// 求向量余弦相似度

def consinSim(movie1: DoubleMatrix, movie2: DoubleMatrix): Double = {

movie1.dot(movie2) / (movie1.norm2() * movie2.norm2())

}

}