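1. ReplicationController (rc for short)

An RC ensures that a specified number of pod replicas is running at any given time, so the pods stay available. If there are more pods than specified, it terminates the extras. If there are fewer, it starts the missing ones.

When a pod fails, is deleted, or is terminated, the RC automatically creates a new one to restore the replica count. For this reason, even a single pod should be managed by an RC.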
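The reconcile behavior described above can be modeled in a few lines. This is a toy sketch, not the real controller code. It assumes pods are plain name strings, and it simplifies both the random name suffix and the choice of which surplus pod to delete.

```python
import random
import string


def random_suffix(rng: random.Random, length: int = 5) -> str:
    # Pods created by the RC end in a random 5-character suffix, e.g. "w29rm".
    # The alphabet here is a simplification of the one Kubernetes uses.
    alphabet = string.ascii_lowercase + string.digits
    return "".join(rng.choice(alphabet) for _ in range(length))


def reconcile(pods: list[str], replicas: int, prefix: str, rng: random.Random) -> list[str]:
    """One reconcile pass: make the number of pods equal to replicas."""
    pods = list(pods)
    diff = replicas - len(pods)
    if diff > 0:
        # Too few pods: create the missing ones from the template.
        pods.extend(f"{prefix}-{random_suffix(rng)}" for _ in range(diff))
    elif diff < 0:
        # Too many pods: delete the surplus (simplified: just the last ones).
        del pods[diff:]
    return pods


rng = random.Random(0)
pods = ["nginx-rc-w29rm", "nginx-rc-zntt2"]
pods.remove("nginx-rc-zntt2")  # like `kubectl delete pod nginx-rc-zntt2`
pods = reconcile(pods, replicas=2, prefix="nginx-rc", rng=rng)
print(len(pods))  # 2
```

The real controller runs this comparison continuously, which is why a deleted pod is replaced within seconds in the transcript further below.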

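apiVersion: v1
kind: ReplicationController        # resource type: RC
metadata:
  name: nginx-rc                   # name of the RC
spec:
  replicas: 2                      # keep 2 replicas running
  selector:
    app: nginx                     # the RC finds the pods it created through this label
  template:                        # pod template
    metadata:
      name: nginx-pod              # ignored: actual pod names are nginx-rc-<5 random letters/digits>
      labels:
        app: nginx                 # must match the selector
    spec:
      # an RC requires restartPolicy Always (the default) so that the replica count stays correct
      containers:
        - name: nginx-container    # container name; visible with: docker ps | grep nginx-container
          image: nginx
          ports:
            - containerPort: 80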

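Tip:

Kubernetes generates pods from the template. Once the pods are created, they have no further connection to the template. The RC finds its pods by their labels and uses that set to control the replica count.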
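An RC selector is equality-based: a pod belongs to the RC when every key=value pair in the selector appears in the pod's labels. The sketch below illustrates this matching rule. The pod named `other-pod` is hypothetical. The rule also means that a hand-made pod carrying `app: nginx` would be counted toward this RC's replicas.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    # Equality-based selector: every key=value pair must appear in the pod's labels.
    # Extra labels on the pod do not matter.
    return all(labels.get(key) == value for key, value in selector.items())


pods = {
    "nginx-rc-w29rm": {"app": "nginx"},
    "nginx-rc-zntt2": {"app": "nginx"},
    "other-pod": {"app": "redis"},  # hypothetical pod with a different label
}
owned = sorted(name for name, labels in pods.items() if matches({"app": "nginx"}, labels))
print(owned)  # ['nginx-rc-w29rm', 'nginx-rc-zntt2']
```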

Query the RC:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get rc nginx-rc

NAME DESIRED CURRENT READY AGE

nginx-rc 2 2 2 169m

Query the pods it manages:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod --selector app=nginx

NAME READY STATUS RESTARTS AGE

nginx-rc-w29rm 1/1 Running 0 172m

nginx-rc-zntt2 1/1 Running 0 172m

List the pods selected by the RC, with their app label shown as an extra column:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod --selector app=nginx --label-columns app

NAME READY STATUS RESTARTS AGE APP

nginx-rc-w29rm 1/1 Running 0 174m nginx

nginx-rc-zntt2 1/1 Running 0 174m nginx

After a pod is deleted, the RC immediately starts a replacement:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl delete pod nginx-rc-zntt2

pod "nginx-rc-zntt2" deleted

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-rc-c5lkq 1/1 Running 0 53s

nginx-rc-k4gj7 1/1 Running 0 3s

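Deleting an RC deletes its pods as well by default (cascading deletion). With --cascade=false, only the RC is deleted and its pods are kept. Newer kubectl releases spell this --cascade=orphan and treat --cascade=false as deprecated. In the transcript below, the RC is first deleted with the default cascade. It is then re-created (not shown) and deleted again with --cascade=false. Finally, the orphaned pods are removed by label.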

[root@cce-7day-fudonghai-24106 01CNL]# kubectl delete rc nginx-rc

replicationcontroller "nginx-rc" deleted

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

No resources found.

[root@cce-7day-fudonghai-24106 01CNL]# kubectl delete rc nginx-rc --cascade=false

replicationcontroller "nginx-rc" deleted

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-rc-d752f 1/1 Running 0 95s

nginx-rc-z8hxt 1/1 Running 0 95s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl delete pod --selector app=nginx

pod "nginx-rc-d752f" deleted

pod "nginx-rc-z8hxt" deleted

Use the edit command to change the replica count to 3. The RC had been re-created again before this step:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl edit rc nginx-rc

replicationcontroller/nginx-rc edited

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-rc-7nswx 1/1 Running 0 3s

nginx-rc-92sns 1/1 Running 0 3m32s

nginx-rc-p8px9 1/1 Running 0 3m32s

2. ReplicaSet (rs for short): similar to an RC, but its label selector also supports set-based expressions

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
  labels:
    app: guestbook
    tier: frontend
spec:
  # this example uses 3 replicas
  # modify it according to your case
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
    matchExpressions:
      - {key: tier, operator: In, values: [frontend]}   # set-based expressions are supported here
  template:
    metadata:
      labels:
        app: guestbook
        tier: frontend
    spec:
      containers:
        - name: php-redis
          image: nginx   # originally gcr.io/google_samples/gb-frontend:v3
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          env:
            - name: GET_HOSTS_FROM
              value: dns
              # If your cluster config does not include a dns service, then to
              # instead access environment variables to find service host
              # info, comment out the 'value: dns' line above, and uncomment the
              # line below.
              # value: env
          ports:
            - containerPort: 80
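A ReplicaSet selector combines `matchLabels` with `matchExpressions`, and a pod must satisfy both. The sketch below evaluates such a selector against a pod's labels. It follows the four documented operators (In, NotIn, Exists, DoesNotExist). It is an illustration, not the Kubernetes implementation.

```python
def requirement_matches(req: dict, labels: dict[str, str]) -> bool:
    key, op = req["key"], req["operator"]
    values = req.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # NotIn also matches pods that do not carry the key at all.
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {op}")


def selector_matches(selector: dict, labels: dict[str, str]) -> bool:
    # matchLabels and matchExpressions are ANDed together.
    ok_labels = all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())
    ok_exprs = all(requirement_matches(r, labels) for r in selector.get("matchExpressions", []))
    return ok_labels and ok_exprs


selector = {
    "matchLabels": {"tier": "frontend"},
    "matchExpressions": [{"key": "tier", "operator": "In", "values": ["frontend"]}],
}
print(selector_matches(selector, {"app": "guestbook", "tier": "frontend"}))  # True
print(selector_matches(selector, {"app": "guestbook", "tier": "backend"}))   # False
```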

3. Deployment: manages ReplicaSets and supports rolling updates

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.11.1-alpine
          ports:
            - containerPort: 80

Create the Deployment and check it:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl create -f 03deployment.yaml

deployment.apps/nginx-deployment created

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-6c77cccbb-7nkkp 0/1 ContainerCreating 0 12s

nginx-deployment-6c77cccbb-mdbp7 0/1 ContainerCreating 0 12s

nginx-deployment-6c77cccbb-nwmtw 0/1 ContainerCreating 0 12s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-6c77cccbb 3 3 0 21s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-6c77cccbb-7nkkp 1/1 Running 0 2m56s

nginx-deployment-6c77cccbb-mdbp7 1/1 Running 0 2m56s

nginx-deployment-6c77cccbb-nwmtw 1/1 Running 0 2m56s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-6c77cccbb 3 3 3 3m

A Deployment with an explicit update strategy:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment-strategy
  labels:
    app: nginx
spec:
  revisionHistoryLimit: 10    # keep at most 10 old revisions
  strategy:
    type: RollingUpdate       # RollingUpdate (rolling update) is the default strategy
    rollingUpdate:
      maxUnavailable: 1       # at most 1 pod may be unavailable during the update
      maxSurge: 1             # at most 1 pod may be created above the desired count
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.11.1-alpine
          ports:
            - containerPort: 80

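Change the image to latest with the command below. Only the relevant part of the edit is shown here; the field lives under spec.template.spec.containers:

kubectl edit deployment nginx-deployment-strategy

spec:
  containers:
    - image: latest

This value is wrong. It names an image called latest instead of the nginx:latest tag, so the image pull fails and the update stalls. Now watch the pods: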

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-55ddb8b99-llnlt 0/1 ErrImagePull 0 5s

nginx-deployment-strategy-55ddb8b99-mfczm 0/1 ContainerCreating 0 5s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-d57tg 0/1 Terminating 0 6m29s

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Running 0 6m29s

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 6m29s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-55ddb8b99-llnlt 0/1 ImagePullBackOff 0 15s

nginx-deployment-strategy-55ddb8b99-mfczm 0/1 ErrImagePull 0 15s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Running 0 6m39s

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 6m39s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-55ddb8b99-llnlt 0/1 ImagePullBackOff 0 34s

nginx-deployment-strategy-55ddb8b99-mfczm 0/1 ImagePullBackOff 0 34s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Running 0 6m58s

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 6m58s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-55ddb8b99-llnlt 0/1 ErrImagePull 0 52s

nginx-deployment-strategy-55ddb8b99-mfczm 0/1 ErrImagePull 0 52s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Running 0 7m16s

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 7m16s

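Following the update strategy, the Deployment runs 11 pods in total, because maxSurge: 1 allows one pod above the desired 10. The two new pods cannot pull their image, so they never become ready. Because maxUnavailable: 1 allows at most one unavailable pod, the old ReplicaSet is scaled down only to 9 pods. Those 9 pods still run the old version and stay available.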
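The 9 old + 2 new split seen above follows from the two limits. The loop below is a simplified model of that arithmetic, not the actual Deployment controller code. It assumes the new pods never become ready, as with ImagePullBackOff. It alternates between scaling up the new ReplicaSet (bounded by replicas + maxSurge total pods) and scaling down the old one (bounded by replicas - maxUnavailable available pods) until neither step can move.

```python
def stuck_rollout(replicas: int, max_surge: int, max_unavailable: int) -> tuple[int, int]:
    """Return (old_pods, new_pods) once a rollout whose new pods never get ready stalls."""
    old, new = replicas, 0
    min_available = replicas - max_unavailable
    while True:
        changed = False
        # Scale up the new ReplicaSet while total pods stay within replicas + maxSurge.
        grow = min((replicas + max_surge) - (old + new), replicas - new)
        if grow > 0:
            new += grow
            changed = True
        # Scale down the old ReplicaSet while available pods stay >= replicas - maxUnavailable.
        # Only old pods count as available, because the new ones never become ready.
        shrink = old - min_available
        if shrink > 0:
            old -= shrink
            changed = True
        if not changed:
            return old, new


print(stuck_rollout(10, 1, 1))  # (9, 2)
```

With the values from the manifest, the model ends at 9 old pods and 2 new ones, which matches the transcript.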

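Correct the image reference. The snippet is abbreviated as before, and there must be no space after the colon in the image name:

spec:
  containers:
    - image: nginx:latest

After saving, keep watching the pods. The failed pods are replaced by a new ReplicaSet (6bb6554bf), which rolls out until the Deployment settles at 10 pods: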

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6bb6554bf-6swcc 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6bb6554bf-hzb98 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6bb6554bf-hzchn 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 24m

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6bb6554bf-6swcc 1/1 Running 0 9s

nginx-deployment-strategy-6bb6554bf-ggzgr 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6bb6554bf-hzb98 1/1 Running 0 9s

nginx-deployment-strategy-6bb6554bf-hzchn 1/1 Running 0 9s

nginx-deployment-strategy-6bb6554bf-jgs2v 0/1 Pending 0 3s

nginx-deployment-strategy-6bb6554bf-skf6q 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6c77cccbb-5qtl4 1/1 Terminating 0 24m

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-h48n8 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-qjnnl 1/1 Terminating 0 24m

nginx-deployment-strategy-6c77cccbb-rbh87 1/1 Terminating 0 24m

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Running 0 24m

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6bb6554bf-6swcc 1/1 Running 0 20s

nginx-deployment-strategy-6bb6554bf-98pbh 0/1 ContainerCreating 0 5s

nginx-deployment-strategy-6bb6554bf-dpjzl 0/1 ContainerCreating 0 6s

nginx-deployment-strategy-6bb6554bf-ggzgr 1/1 Running 0 15s

nginx-deployment-strategy-6bb6554bf-h2z8b 0/1 ContainerCreating 0 7s

nginx-deployment-strategy-6bb6554bf-hzb98 1/1 Running 0 20s

nginx-deployment-strategy-6bb6554bf-hzchn 1/1 Running 0 20s

nginx-deployment-strategy-6bb6554bf-jgs2v 1/1 Running 0 14s

nginx-deployment-strategy-6bb6554bf-skf6q 1/1 Running 0 15s

nginx-deployment-strategy-6c77cccbb-8tr4b 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-cvz56 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-gdv9g 1/1 Terminating 0 24m

nginx-deployment-strategy-6c77cccbb-l7jc6 1/1 Running 0 24m

nginx-deployment-strategy-6c77cccbb-zfqfx 1/1 Terminating 0 24m

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6bb6554bf-6swcc 1/1 Running 0 31s

nginx-deployment-strategy-6bb6554bf-98pbh 1/1 Running 0 16s

nginx-deployment-strategy-6bb6554bf-dpjzl 1/1 Running 0 17s

nginx-deployment-strategy-6bb6554bf-ggzgr 1/1 Running 0 26s

nginx-deployment-strategy-6bb6554bf-h2z8b 1/1 Running 0 18s

nginx-deployment-strategy-6bb6554bf-hzb98 1/1 Running 0 31s

nginx-deployment-strategy-6bb6554bf-hzchn 1/1 Running 0 31s

nginx-deployment-strategy-6bb6554bf-jgs2v 1/1 Running 0 25s

nginx-deployment-strategy-6bb6554bf-ll4kf 1/1 Running 0 9s

nginx-deployment-strategy-6bb6554bf-skf6q 1/1 Running 0 26s

Check the rollout history. There are three revisions:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl rollout history deployment nginx-deployment-strategy
deployments "nginx-deployment-strategy"
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
3         <none>

Inspect each revision in turn:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl rollout history deployment nginx-deployment-strategy --revision=1
deployments "nginx-deployment-strategy" with revision #1
Pod Template:
  Labels:       app=nginx
                pod-template-hash=6c77cccbb
  Containers:
   nginx:
    Image:        nginx:1.11.1-alpine
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:      <none>

[root@cce-7day-fudonghai-24106 01CNL]# kubectl rollout history deployment nginx-deployment-strategy --revision=2
deployments "nginx-deployment-strategy" with revision #2
Pod Template:
  Labels:       app=nginx
                pod-template-hash=55ddb8b99
  Containers:
   nginx:
    Image:        latest
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:      <none>

[root@cce-7day-fudonghai-24106 01CNL]# kubectl rollout history deployment nginx-deployment-strategy --revision=3
deployments "nginx-deployment-strategy" with revision #3
Pod Template:
  Labels:       app=nginx
                pod-template-hash=6bb6554bf
  Containers:
   nginx:
    Image:        nginx:latest
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:      <none>

Roll back to revision 1:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl rollout undo deployment nginx-deployment-strategy --to-revision=1

deployment.extensions/nginx-deployment-strategy

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6bb6554bf-6swcc 1/1 Running 0 41m

nginx-deployment-strategy-6bb6554bf-98pbh 0/1 Terminating 0 41m

nginx-deployment-strategy-6bb6554bf-dpjzl 1/1 Terminating 0 41m

nginx-deployment-strategy-6bb6554bf-ggzgr 1/1 Running 0 41m

nginx-deployment-strategy-6bb6554bf-h2z8b 1/1 Terminating 0 41m

nginx-deployment-strategy-6bb6554bf-hzb98 1/1 Running 0 41m

nginx-deployment-strategy-6bb6554bf-hzchn 1/1 Running 0 41m

nginx-deployment-strategy-6bb6554bf-jgs2v 1/1 Running 0 41m

nginx-deployment-strategy-6bb6554bf-skf6q 1/1 Running 0 41m

nginx-deployment-strategy-6c77cccbb-4r7x2 1/1 Running 0 8s

nginx-deployment-strategy-6c77cccbb-5jkrg 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6c77cccbb-fgk8h 0/1 ContainerCreating 0 4s

nginx-deployment-strategy-6c77cccbb-kl2gt 1/1 Running 0 8s

nginx-deployment-strategy-6c77cccbb-s9595 0/1 ContainerCreating 0 5s

nginx-deployment-strategy-6c77cccbb-swtt8 1/1 Running 0 8s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-strategy-6c77cccbb-4r7x2 1/1 Running 0 31s

nginx-deployment-strategy-6c77cccbb-5jkrg 1/1 Running 0 27s

nginx-deployment-strategy-6c77cccbb-98qnl 1/1 Running 0 12s

nginx-deployment-strategy-6c77cccbb-fgk8h 1/1 Running 0 27s

nginx-deployment-strategy-6c77cccbb-jfdx6 1/1 Running 0 19s

nginx-deployment-strategy-6c77cccbb-kl2gt 1/1 Running 0 31s

nginx-deployment-strategy-6c77cccbb-s9595 1/1 Running 0 28s

nginx-deployment-strategy-6c77cccbb-swtt8 1/1 Running 0 31s

nginx-deployment-strategy-6c77cccbb-thhkr 1/1 Running 0 19s

nginx-deployment-strategy-6c77cccbb-zgd5s 1/1 Running 0 21s

Parameter reference

Replicas (number of replicas):

.spec.replicas is an optional field that specifies the desired number of pods. The default is 1.

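Selector:

.spec.selector is a label selector that defines which pods the Deployment manages. In apps/v1 it is required, it cannot be changed after creation, and it must match .spec.template.metadata.labels; otherwise the API rejects it. In the older extensions/v1beta1 API it was optional and defaulted to .spec.template.metadata.labels.

The Deployment may terminate pods whose labels match the selector if their template differs from .spec.template, or if the total number of such pods exceeds .spec.replicas.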

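Pod Template:

.spec.template and .spec.selector are the only required fields of .spec.

.spec.template is a pod template. It has exactly the same schema as a Pod, except that it is nested and does not need the apiVersion and kind fields.

In addition, the pod template of a Deployment must specify appropriate labels so that its pods can be selected. These labels must not overlap with those of other controllers (see Selector). The template must also specify an appropriate restart policy.

Only a .spec.template.spec.restartPolicy of Always is allowed, and Always is the default when the field is not specified.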

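strategy (update strategy):

.spec.strategy specifies how new pods replace old ones. .spec.strategy.type can be "Recreate" or "RollingUpdate"; "RollingUpdate" is the default.

Recreate: all existing pods are deleted first, and only then does the controller create new-version pods from the new template. This is similar to how a ReplicaSet is updated. Unlike a rolling update, it does not replace pods one at a time.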

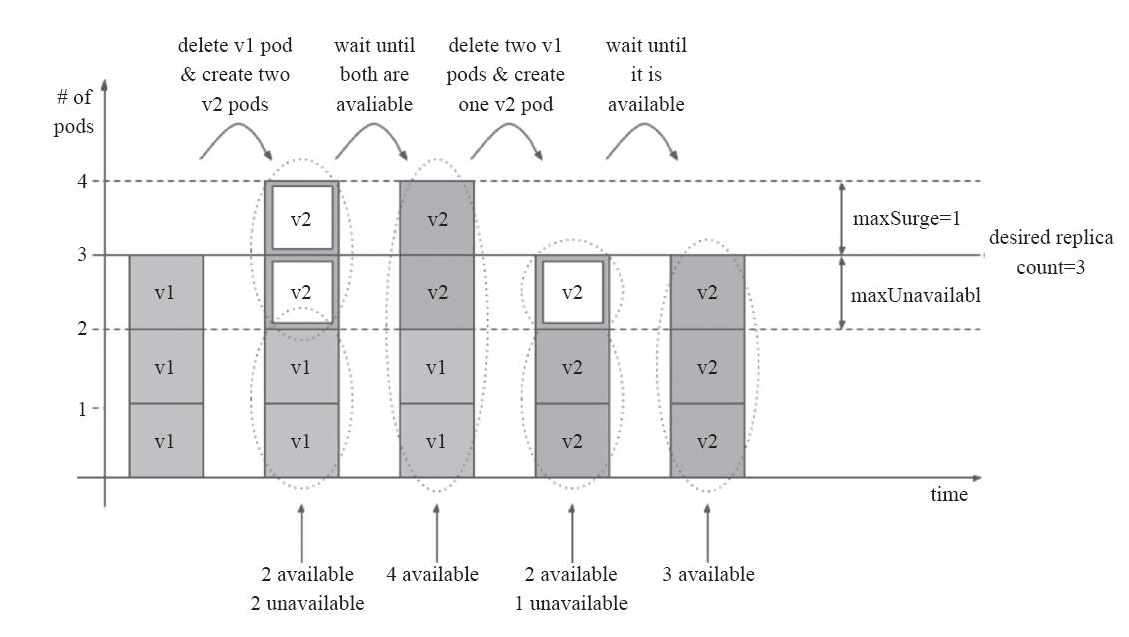

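rollingUpdate: a rolling update. It limits how many pods may exist or be unavailable during the update. You can set maxUnavailable and maxSurge to control the rolling update process.

maxSurge: .spec.strategy.rollingUpdate.maxSurge is optional and specifies the maximum number of pods that may be created above the desired count. It can be an absolute number (for example 5) or a percentage of the desired count (for example 10%); a percentage is rounded up. It cannot be 0 when maxUnavailable is 0. The default is 25% in apps/v1 (it was 1 in the older extensions/v1beta1 API).

For example, with a value of 30%, the new ReplicaSet is scaled up immediately when the rolling update starts, as long as the total number of old and new pods does not exceed 130% of the desired count. As old pods are killed, the new ReplicaSet scales up further and the old one scales down further. At no point during the update does the total number of pods exceed 130% of the desired count.

maxUnavailable: .spec.strategy.rollingUpdate.maxUnavailable is optional and specifies the maximum number of pods that may be unavailable during the update. It can be an absolute number (for example 5) or a percentage of the desired count (for example 10%); a percentage is rounded down. It cannot be 0 when .spec.strategy.rollingUpdate.maxSurge is 0. The default is 25% in apps/v1 (it was 1 in extensions/v1beta1).

For example, with a value of 30%, the old ReplicaSet is scaled down to 70% of the desired count immediately when the rolling update starts. As new pods become ready, the new ReplicaSet scales up and the old one scales down further. At every point during the update, at least 70% of the desired count remains available.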
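The rounding rules above can be checked with a small calculation. This sketch turns maxSurge and maxUnavailable (absolute or percentage) into the two bounds a rolling update respects. It assumes values are either plain integers or strings such as "30%". The function names are hypothetical helpers, not Kubernetes APIs.

```python
from typing import Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute number or an "NN%" string into a pod count."""
    if isinstance(value, int):
        return value
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas  # percent * replicas, still scaled by 100
        # Integer arithmetic avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    raise ValueError(f"unsupported value: {value!r}")


def rollout_bounds(replicas: int, max_surge, max_unavailable) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10, "30%", "30%"))  # (13, 7)
print(rollout_bounds(10, "25%", "25%"))  # (13, 8)
print(rollout_bounds(10, 1, 1))          # (11, 9)
```

The 25% case shows why the rounding direction matters. The same percentage allows 3 extra pods but only 2 unavailable ones, which errs on the side of keeping capacity.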

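Note: maxSurge and maxUnavailable cannot both be 0. Otherwise, once the replica count matches the desired number, the controller could neither add a pod nor remove one, and the update could never proceed.

When configuring a Deployment, you can also use its spec.minReadySeconds field to control the pace of an update. During the replacement, a newly created pod normally counts as available as soon as it passes its readiness probe, and the next round of replacement then begins. spec.minReadySeconds sets the minimum time a new pod must stay ready before it is considered available. The update waits during that period.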

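revisionHistoryLimit (revision history):

A Deployment's revision history is stored in the ReplicaSets it controls. By default 10 old revisions are kept.

.spec.revisionHistoryLimit is an optional field that specifies how many old ReplicaSets to retain. The ideal value depends on how often and how stably the Deployment is updated. In apps/v1 the default is 10; the older extensions/v1beta1 API kept all old ReplicaSets by default. Old ReplicaSets consume resources in etcd and clutter the output of kubectl get rs. Because each revision is stored in a ReplicaSet, the Deployment can no longer roll back to a revision once its old ReplicaSet is deleted.

If the value is set to 0, all old ReplicaSets with 0 replicas are cleaned up. In that case, a new rollout cannot be undone, because its revision history has been removed.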
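The cleanup rule can be sketched as follows. This is a simplified model under stated assumptions: each old ReplicaSet is reduced to a hypothetical `(revision, replicas)` pair, the current ReplicaSet is excluded, and the oldest scaled-down revisions are removed first until at most `limit` remain. ReplicaSets that still have replicas are never removed here.

```python
def cleanup(old_rs: list[tuple[int, int]], limit: int) -> list[tuple[int, int]]:
    """old_rs: (revision, replicas) for every ReplicaSet except the current one."""
    excess = len(old_rs) - limit
    kept = []
    for revision, replicas in sorted(old_rs):  # oldest revision first
        if excess > 0 and replicas == 0:
            excess -= 1  # deleted: this revision can no longer be rolled back to
            continue
        kept.append((revision, replicas))
    return kept


print(cleanup([(1, 0), (2, 0), (3, 0)], limit=1))  # [(3, 0)]
print(cleanup([(1, 0), (2, 0)], limit=0))          # []
```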

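Note: the revision history is kept regardless of how the Deployment was created. Adding the "--record" option when creating or updating the Deployment only records the command in the kubernetes.io/change-cause annotation. That annotation fills the CHANGE-CAUSE column of kubectl rollout history, which shows <none> in the transcript above because --record was not used. --record is deprecated in recent kubectl releases, and setting that annotation directly is the usual replacement.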

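rollbackTo:

.spec.rollbackTo is an optional field that configures a rollback of the Deployment. Setting it triggers the rollback, and the field is cleared each time a rollback completes. It exists only in the older extensions/v1beta1 and apps/v1beta1 APIs and was removed from apps/v1. There, use kubectl rollout undo instead, as in the transcript above.

revision: .spec.rollbackTo.revision is an optional field that specifies the revision to roll back to. The default, 0, means the previous revision.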

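progressDeadlineSeconds:

.spec.progressDeadlineSeconds is an optional field that specifies how many seconds a Deployment may go without making progress before the system reports it as failed. The failure appears in the resource status as type=Progressing, status=False, reason=ProgressDeadlineExceeded. The default is 600 in apps/v1. The Deployment controller keeps retrying the Deployment. The Kubernetes documentation notes that once automatic rollback is implemented, the controller will roll back a Deployment as soon as it observes this condition.

If set, this value must be greater than .spec.minReadySeconds.

paused:

.spec.paused is an optional boolean field used to pause and resume a Deployment. The only difference between a paused and an unpaused Deployment is that changes to the PodTemplateSpec of a paused Deployment do not trigger new rollouts. A newly created Deployment is not paused by default.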

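4. StatefulSet: stateful applications

5. DaemonSet: daemon applications (one pod per node)

Preparation: pull the image

Installing recent versions of Kubernetes requires pulling images from the k8s.gcr.io registry, which cannot be reached from mainland China because of network restrictions. The mirrorgooglecontainers repository on docker.io mirrors Google's container images, so the required image can be pulled with the following command: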

[root@cce-7day-fudonghai-24106 01CNL]# docker pull mirrorgooglecontainers/fluentd-elasticsearch:1.20

1.20: Pulling from mirrorgooglecontainers/fluentd-elasticsearch

169bb74c2cc6: Pull complete

a3ed95caeb02: Pull complete

fa2b73488d2b: Pull complete

8adc08acc1c8: Pull complete

220ee2c01d13: Pull complete

Digest: sha256:5296b5290969d6856035a81db67ad288ba1a15e66ef4c0b3b0ba0ba2869dc85e

Status: Downloaded newer image for mirrorgooglecontainers/fluentd-elasticsearch:1.20

Tag the image with the name Kubernetes expects:

[root@cce-7day-fudonghai-24106 01CNL]# docker tag docker.io/mirrorgooglecontainers/fluentd-elasticsearch:1.20 k8s.gcr.io/fluentd-elasticsearch:1.20

[root@cce-7day-fudonghai-24106 01CNL]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

100.125.17.64:20202/hwofficial/storage-driver-linux-amd64 1.0.13 9b1a762c647a 3 weeks ago 749MB

100.125.17.64:20202/op_svc_apm/icagent 5.11.27 797b45c7e959 4 weeks ago 340MB

canal-agent 1.0.RC8.SPC300.B010 4e31d812d31d 2 months ago 505MB

canal-agent latest 4e31d812d31d 2 months ago 505MB

100.125.17.64:20202/hwofficial/cce-coredns-linux-amd64 1.2.6.1 614e71c360a5 3 months ago 328MB

swr.cn-east-2.myhuaweicloud.com/fudonghai/tank v1.0 77c91a2d6c53 5 months ago 112MB

swr.cn-north-1.myhuaweicloud.com/hwstaff_m00402518/tank v1.0 77c91a2d6c53 5 months ago 112MB

redis latest 415381a6cb81 8 months ago 94.9MB

nginx latest 06144b287844 10 months ago 109MB

euleros 2.2.5 b0f6bcd0a2a0 20 months ago 289MB

mirrorgooglecontainers/fluentd-elasticsearch 1.20 c264dff3420b 2 years ago 301MB

k8s.gcr.io/fluentd-elasticsearch 1.20 c264dff3420b 2 years ago 301MB

nginx 1.11.1-alpine 5ad9802b809e 3 years ago 69.3MB

cce-pause 2.0 2b58359142b0 3 years ago 350kB

The DaemonSet runs successfully:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl create -f 03daemonset.yaml
daemonset.apps/fluentd-elasticsearch created
[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod
NAME                          READY   STATUS    RESTARTS   AGE
fluentd-elasticsearch-dkbrc   1/1     Running   0          7s
[root@cce-7day-fudonghai-24106 01CNL]# kubectl get ds
NAME                    DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
fluentd-elasticsearch   1         1         1       1            1           <none>          17s

6. Job

7. CronJob

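apiVersion: batch/v1beta1      # use batch/v1 on Kubernetes 1.21+; batch/v1beta1 was removed in 1.25
kind: CronJob
metadata:
  name: hello                  # name of the CronJob
spec:
  schedule: "*/1 * * * *"      # how often the Job runs, as a cron string (here: every minute)
  jobTemplate:                 # Job template
    spec:
      template:
        spec:
          containers:
            - name: hello
              image: busybox
              args:
                - /bin/sh
                - -c
                - date; echo Hello from the kubernetes cluster
              # the task the Job runs; busybox ships sh rather than bash, so the command form is:
              #command: ["/bin/sh", "-c", "date; echo Hello from the Kubernetes cluster"]
          restartPolicy: OnFailure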

Create the CronJob:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl create -f 03cronjob.yaml
cronjob.batch/hello created
[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod
No resources found.
[root@cce-7day-fudonghai-24106 01CNL]# kubectl get cronjob
NAME    SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
hello   */1 * * * *   False     0        <none>          24s

Check the pods again after a few minutes. A new Job, and therefore a new pod, is created every minute:

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1563603000-4bsd7 0/1 Completed 0 119s

hello-1563603060-sp9qj 0/1 Completed 0 59s

[root@cce-7day-fudonghai-24106 01CNL]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hello-1563603000-4bsd7 0/1 Completed 0 2m2s

hello-1563603060-sp9qj 0/1 Completed 0 62s

hello-1563603120-2vf4r 0/1 ContainerCreating 0 2s
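The numbers in the pod names above are 60 apart, which suggests they encode each Job's scheduled time. The sketch below decodes them under that assumption: the middle number is taken to be Unix time in seconds, which fits this 2019-era cluster. Newer CronJob controllers may encode the time differently, so treat this as an illustration only.

```python
from datetime import datetime, timezone


def scheduled_time(pod_name: str) -> datetime:
    # Pod names look like <cronjob>-<scheduled time>-<random suffix>.
    # ASSUMPTION: the middle number is the scheduled time in Unix seconds.
    _cronjob, stamp, _suffix = pod_name.rsplit("-", 2)
    return datetime.fromtimestamp(int(stamp), tz=timezone.utc)


names = ["hello-1563603000-4bsd7", "hello-1563603060-sp9qj", "hello-1563603120-2vf4r"]
times = [scheduled_time(n) for n in names]
print(times[0].isoformat())  # 2019-07-20T06:10:00+00:00
gaps = {(b - a).total_seconds() for a, b in zip(times, times[1:])}
print(gaps)  # {60.0}
```

The constant 60-second gap matches the `*/1 * * * *` schedule.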