The following sets up the master-backup configuration.

1) Disable SELinux and configure the firewall

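Set SELINUX=disabled in the SELinux configuration file:

    vi /etc/sysconfig/selinux
    SELINUX=disabled

    setenforce 0

setenforce 0 turns SELinux enforcement off for the running system only; the change in the configuration file takes effect after a reboot.

182.148.15.0/24 is the servers' public subnet and 192.168.1.0/24 is their private subnet. Important: the multicast rules below are required. Without them the VIP cannot move when MASTER or BACKUP fails, nor move back once the failed node recovers.

Add the following rules to /etc/sysconfig/iptables:

    vim /etc/sysconfig/iptables
    # allow traffic to the VRRP multicast address
    -A INPUT -s 182.148.15.0/24 -d 224.0.0.18 -j ACCEPT
    -A INPUT -s 192.168.1.0/24 -d 224.0.0.18 -j ACCEPT
    # allow VRRP (Virtual Router Redundancy Protocol) traffic
    -A INPUT -s 182.148.15.0/24 -p vrrp -j ACCEPT
    -A INPUT -s 192.168.1.0/24 -p vrrp -j ACCEPT
    -A INPUT -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

Then restart iptables:

    /etc/init.d/iptables restart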
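The VRRP rules above follow one pattern per subnet, so they can be generated instead of typed by hand. The following is only a sketch: the function name vrrp_rules is hypothetical, and it prints the rules for review rather than touching /etc/sysconfig/iptables.

```bash
# Hypothetical helper (not part of the original setup): print the
# iptables-save style rules that admit VRRP traffic from each subnet
# passed as an argument. Nothing is applied; the output is for review.
vrrp_rules() {
    local subnet
    for subnet in "$@"; do
        # 224.0.0.18 is the multicast group used by VRRP advertisements
        printf -- '-A INPUT -s %s -d 224.0.0.18 -j ACCEPT\n' "$subnet"
        printf -- '-A INPUT -s %s -p vrrp -j ACCEPT\n' "$subnet"
    done
}

# Rules for the public and private subnets used in this article
vrrp_rules 182.148.15.0/24 192.168.1.0/24
```

Printing first and pasting into the rules file afterwards keeps the change easy to inspect before iptables is restarted.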

2) Install LVS (on both the master and the backup)

    yum install -y libnl* popt*
    modprobe -l | grep ipvs
    cd /usr/local/src/
    wget http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.26.tar.gz
    ln -s /usr/src/kernels/2.6.32-431.5.1.el6.x86_64/ /usr/src/linux
    tar -zxvf ipvsadm-1.26.tar.gz
    cd ipvsadm-1.26
    make && make install

View the LVS cluster:

    ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

3) Write the LVS startup script (on both real servers)

    vim /etc/init.d/realserver

    #!/bin/sh
    VIP=182.148.15.239
    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        # Suppress ARP replies and announcements for the VIP, then bind it to the loopback interface
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        # Bind the VIP to a loopback alias with a /32 mask so this host accepts
        # the traffic that the director forwards to the VIP
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
        ;;
    esac
    exit 0

Make the script run at boot:

    chmod +x /etc/init.d/realserver
    echo "/etc/init.d/realserver start" >> /etc/rc.d/rc.local

Start the script. (Note: whenever either real server is rebooted, make sure that service realserver start has run, that is, that the VIP is bound to lo:0. Otherwise LVS forwarding fails.)

    service realserver start

On Real_Server1, the VIP is now bound to the loopback interface lo:

    ifconfig
    eth0      Link encap:Ethernet  HWaddr 52:54:00:D1:27:75
              inet addr:182.148.15.233  Bcast:182.148.15.255  Mask:255.255.255.224
              inet6 addr: fe80::5054:ff:fed1:2775/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:309741 errors:0 dropped:0 overruns:0 frame:0
              TX packets:27993954 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:37897512 (36.1 MiB)  TX bytes:23438654329 (21.8 GiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

    lo:0      Link encap:Local Loopback
              inet addr:182.148.15.239  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
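Since forwarding fails whenever the VIP is missing from the loopback interface, it can help to script the check that was done by eye above. The sketch below is an assumption-laden example: the function name vip_on_loopback is hypothetical, and it expects the one-line-per-address output of ip -o -4 addr show dev lo on standard input.

```bash
# Hypothetical helper: succeed (exit 0) if the given VIP appears as a /32
# address in `ip -o -4 addr show dev lo` style output read from stdin.
vip_on_loopback() {
    local vip="$1"
    # Escape the dots so they match literally in the regular expression
    grep -Eq "inet ${vip//./\\.}/32( |$)"
}

# Example (run on a real server):
#   ip -o -4 addr show dev lo | vip_on_loopback 182.148.15.239 \
#       && echo "VIP bound" || echo "VIP missing, run: service realserver start"
```

Reading from standard input keeps the function independent of the tool that produced the listing, so it can also be tried against saved output.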

4) Install Keepalived (master and backup)

    yum install -y openssl-devel
    cd /usr/local/src/
    wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz
    tar -zvxf keepalived-1.3.5.tar.gz
    cd keepalived-1.3.5
    ./configure --prefix=/usr/local/keepalived
    make && make install

    cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/
    cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    mkdir /etc/keepalived/
    cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
    cp /usr/local/keepalived/sbin/keepalived /usr/sbin/
    echo "/etc/init.d/keepalived start" >> /etc/rc.local

    chmod +x /etc/rc.d/init.d/keepalived
    chkconfig keepalived on
    service keepalived start

5) Configuration

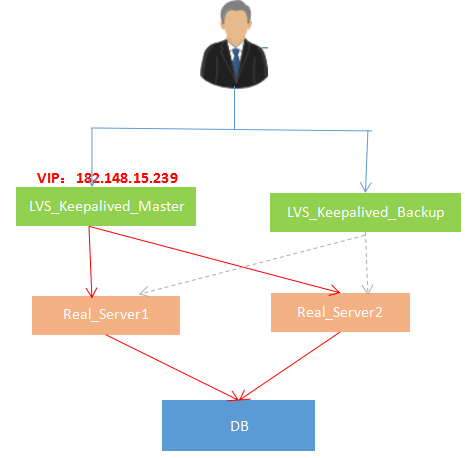

Enable IP forwarding on both the master and the backup:

    echo "1" > /proc/sys/net/ipv4/ip_forward

keepalived.conf on the master:

    vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Master
    }

    vrrp_instance VI_1 {
        state MASTER            # initial state; the actual role is decided by priority (differs on the backup node)
        interface eth0          # interface that carries the VIP
        virtual_router_id 51    # VRID; nodes sharing the same VRID form one VRRP group
        priority 100            # priority; set to 90 on the other node (differs on the backup node)
        advert_int 1            # advertisement interval, in seconds
        authentication {
            auth_type PASS      # authentication type, PASS or AH
            auth_pass 1111      # authentication password
        }
        virtual_ipaddress {
            182.148.15.239      # VIP
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6            # health-check polling interval
        lb_algo wrr             # weighted round robin; LVS schedulers: rr|wrr|lc|wlc|lblc|sh|dh
        lb_kind DR              # LVS forwarding mode: NAT|DR|TUN; DR requires the director to have a NIC on the same network segment as the real servers
        #nat_mask 255.255.255.0
        persistence_timeout 50  # session persistence time, in seconds
        protocol TCP            # protocol of the virtual service

        # Real server settings; 80 is the service port
        real_server 182.148.15.233 80 {
            weight 3            # weight
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

Start Keepalived and check the addresses and the LVS table:

    /etc/init.d/keepalived start

    ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

    ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

keepalived.conf on the backup:

    vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Backup
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    /etc/init.d/keepalived start

Check the addresses on LVS_Keepalived_Backup. By default the VIP sits on LVS_Keepalived_Master; it moves to the backup only when LVS_Keepalived_Master fails.

    ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:7c:b8:f0 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.236/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe7c:b8f0/64 scope link
           valid_lft forever preferred_lft forever

    ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0
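The master and backup vrrp_instance sections above differ only in state and priority. One way to make that explicit is to render both from a single template. The generator below is a sketch, not part of Keepalived: the function name vrrp_instance and its argument order are hypothetical, and the interface and password are simply the values used in this article.

```bash
# Hypothetical generator: print a vrrp_instance stanza.
# Arguments: instance name, state (MASTER|BACKUP), priority, VRID, VIP.
vrrp_instance() {
    local name="$1" state="$2" priority="$3" vrid="$4" vip="$5"
    cat <<EOF
vrrp_instance $name {
    state $state
    interface eth0
    virtual_router_id $vrid
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}

vrrp_instance VI_1 MASTER 100 51 182.148.15.239   # stanza for the master
vrrp_instance VI_1 BACKUP 90 51 182.148.15.239    # stanza for the backup
```

Keeping the VRID, password and VIP in one place reduces the chance that the two nodes drift apart, which would stop them from forming one VRRP group.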

6) Operations on the back-end real servers

Configure the two domains www.test1.com and www.test2.com on both real servers. Both domains can then be reached from the master and the backup:

    curl http://www.test1.com
    this is page of Real_Server1:182.148.15.233 www.test1.com
    curl http://www.test2.com
    this is page of Real_Server2:182.148.15.238 www.test2.com

Stop nginx on Real_Server2; requests for both domains are then served by Real_Server1:

    /usr/local/nginx/sbin/nginx -s stop
    lsof -i:80

Access the two domains again from LVS_Keepalived_Master and LVS_Keepalived_Backup; all requests are now balanced to Real_Server1:

    curl http://www.test1.com
    this is page of Real_Server1:182.148.15.233 www.test1.com
    curl http://www.test2.com
    this is page of Real_Server1:182.148.15.233 www.test2.com
    curl http://www.test1.com
    this is page of Real_Server1:182.148.15.233 www.test1.com
    curl http://www.test2.com
    this is page of Real_Server1:182.148.15.233 www.test2.com

In addition, set up iptables on the two real servers so that port 80 is open only to the VIP. Note that in DR mode the packets reaching a real server still carry the client's source address, with the VIP as destination, so check that this rule matches your traffic before relying on it.

    vim /etc/sysconfig/iptables
    ......
    -A INPUT -s 182.148.15.239 -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

    /etc/init.d/iptables restart
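Reading repeated curl output by eye gets tedious once more than a handful of requests are involved. A small tally helps; the sketch below assumes the test pages contain a Real_ServerN token, as the pages in this article do, and the function name tally_backends is hypothetical.

```bash
# Hypothetical helper: read response bodies on stdin (one per line) and
# print "<server> <count>" for every Real_ServerN token that appears.
tally_backends() {
    grep -o 'Real_Server[0-9]*' | sort | uniq -c | awk '{print $2, $1}'
}

# Example (run from a client that resolves the test domain):
#   for i in 1 2 3 4 5 6; do curl -s http://www.test1.com; done | tally_backends
```

Because the virtual server is configured with persistence_timeout 50, requests from one client address within that window are expected to land on the same real server, so a lopsided tally from a single client is not by itself a sign of a failed node.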

7) Testing

Point the test domains www.test1.com and www.test2.com at the VIP 182.148.15.239; both can then be opened normally in a browser.

1) Test the LVS function. (The LVS section of the Keepalived configuration above includes health checks: a failed back-end server is removed from the LVS cluster automatically and is added back once it recovers.)

First look at the current LVS cluster. Port 80 on both real servers is healthy:

    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

Now stop one real server, for example Real_Server2:

    [root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx -s stop

After a short while, check the LVS cluster again. Real_Server2 has been removed:

    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0

Finally, bring port 80 on Real_Server2 back up. LVS adds it to the cluster again:

    [root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx
    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

Throughout this test, access to http://www.test1.com and http://www.test2.com is unaffected.

2) Test Keepalived heartbeat-based high availability

By default the VIP is held by LVS_Keepalived_Master:

    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever
Then stop Keepalived on LVS_Keepalived_Master. The VIP moves to LVS_Keepalived_Backup:

    [root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived stop
    Stopping keepalived:                                       [  OK  ]
    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

The system log on LVS_Keepalived_Master records the VIP movement:

    [root@LVS_Keepalived_Master ~]# tail -f /var/log/messages
    .............
    May 8 10:19:36 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: TCP connection to [182.148.15.233]:80 failed.
    May 8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: TCP connection to [182.148.15.233]:80 failed.
    May 8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: Check on service [182.148.15.233]:80 failed after 1 retry.
    May 8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: Removing service [182.148.15.233]:80 from VS [182.148.15.239]:80

    [root@LVS_Keepalived_Backup ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:7c:b8:f0 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.236/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe7c:b8f0/64 scope link
           valid_lft forever preferred_lft forever

Next, start Keepalived on LVS_Keepalived_Master again. The VIP moves back:

    [root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived start
    Starting keepalived:                                       [  OK  ]
    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

The system log on LVS_Keepalived_Master shows the VIP being taken back:

    [root@LVS_Keepalived_Master ~]# tail -f /var/log/messages
    .............
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.148.15.239
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May 8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
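The health-check test in step 1) above compares ipvsadm listings before and after a real server is stopped. That comparison can be scripted by extracting the real servers under one virtual service. This is a sketch only: the function name real_servers_for is hypothetical, and it assumes the ipvsadm -L -n layout shown in this article.

```bash
# Hypothetical helper: read `ipvsadm -L -n` output on stdin and print the
# real servers (address:port) listed under the given virtual service.
real_servers_for() {
    local vs="$1"
    awk -v vs="$vs" '
        # A line starting with TCP or UDP opens a new virtual service
        $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
        # Lines starting with "->" belong to the current virtual service
        in_vs && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
    '
}

# Example (run on the director):
#   ipvsadm -L -n | real_servers_for 182.148.15.239:80
```

Running it before and after stopping nginx on a real server should show the stopped server disappear from the list and reappear once nginx is started again.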

Deploying a high-availability LVS + Keepalived environment in master-master hot-standby mode

Compared with the master-backup setup, the master-master setup differs only in the following:
1) The LVS load-balancing layer needs two VIPs, for example 182.148.15.239 and 182.148.15.235.
2) The back-end real servers must bind both VIPs to the loopback interface lo.
3) keepalived.conf differs from the master-backup version above.

The master-master configuration in detail:

1) Write the LVS startup scripts (on both Real_Server1 and Real_Server2; the script content is identical on both)

Because each real server binds two VIPs to the loopback interface (one on lo:0, the other on lo:1), two startup scripts are needed.

    [root@Real_Server1 ~]# vim /etc/init.d/realserver1

    #!/bin/sh
    VIP=182.148.15.239
    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
        ;;
    esac
    exit 0

    [root@Real_Server1 ~]# vim /etc/init.d/realserver2

    #!/bin/sh
    VIP=182.148.15.235
    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        /sbin/ifconfig lo:1 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:1
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:1 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:1 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
        ;;
    esac
    exit 0

Make the scripts run at boot:

    [root@Real_Server1 ~]# chmod +x /etc/init.d/realserver1
    [root@Real_Server1 ~]# chmod +x /etc/init.d/realserver2
    [root@Real_Server1 ~]# echo "/etc/init.d/realserver1 start" >> /etc/rc.d/rc.local
    [root@Real_Server1 ~]# echo "/etc/init.d/realserver2 start" >> /etc/rc.d/rc.local

Start the scripts:

    [root@Real_Server1 ~]# service realserver1 start
    LVS-DR real server starts successfully.
    [root@Real_Server1 ~]# service realserver2 start
    LVS-DR real server starts successfully.
On Real_Server1, both VIPs are now bound to the loopback interface lo:

    [root@Real_Server1 ~]# ifconfig
    eth0      Link encap:Ethernet  HWaddr 52:54:00:D1:27:75
              inet addr:182.148.15.233  Bcast:182.148.15.255  Mask:255.255.255.224
              inet6 addr: fe80::5054:ff:fed1:2775/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:309741 errors:0 dropped:0 overruns:0 frame:0
              TX packets:27993954 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:37897512 (36.1 MiB)  TX bytes:23438654329 (21.8 GiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

    lo:0      Link encap:Local Loopback
              inet addr:182.148.15.239  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1

    lo:1      Link encap:Local Loopback
              inet addr:182.148.15.235  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1

2) keepalived.conf configuration

keepalived.conf on LVS_Keepalived_Master. First enable IP forwarding:

    [root@LVS_Keepalived_Master ~]# echo "1" > /proc/sys/net/ipv4/ip_forward
    [root@LVS_Keepalived_Master ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Master
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    vrrp_instance VI_2 {
        state BACKUP
        interface eth0
        virtual_router_id 52
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.235
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 182.148.15.235 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

keepalived.conf on LVS_Keepalived_Backup. The roles are mirrored: this node is BACKUP for VI_1 and MASTER for VI_2.

    [root@LVS_Keepalived_Backup ~]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Backup
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    vrrp_instance VI_2 {
        state MASTER
        interface eth0
        virtual_router_id 52
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.235
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 182.148.15.235 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

The remaining verification steps are the same as for the master-backup mode above.
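In the master-master layout each VRID must have the higher priority on a different node, and a copy-paste slip between the two files is easy to miss. A quick way to compare them is to list the VRID and priority of every instance on each node. The helper below is a sketch: the name vrid_priorities is hypothetical, and it assumes virtual_router_id appears before priority inside each vrrp_instance, as it does in the files above.

```bash
# Hypothetical helper: read a keepalived.conf on stdin and print one
# "VRID priority" pair per vrrp_instance, in file order.
vrid_priorities() {
    awk '$1 == "virtual_router_id" { vrid = $2 }
         $1 == "priority"          { print vrid, $2 }'
}

# Example (run on each director and compare the two listings):
#   vrid_priorities < /etc/keepalived/keepalived.conf
```

With the files from this section, the master's listing pairs VRID 51 with priority 100 and VRID 52 with 90, while the backup's listing pairs them the other way round.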