本来想把股票的涨跌抓取出来,用汇通网的股票为例,就找了国际外汇为例。

页面里有xhr请求,并且每个xhr的url请求的 http://api.q.fx678.com/history.php?symbol=USD&limit=288&resolution=5&codeType=8100&st=0.43342772855649625

然后请求里面哟一个limit=288表示288个时间段,汇通网里面将5分钟为一个时间段,所以一天的话有288个时间间隔。

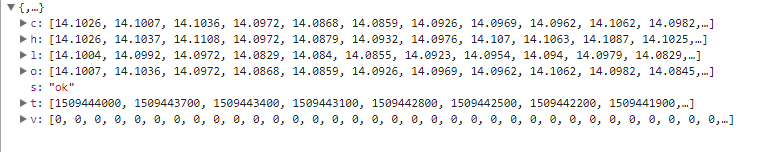

xhr请求中的st参数好像是跟页面的请求的过期与否有关,因为有的股票可以通过该st值获得数据,但是有的股票就不可以。然后此xhr请求可以将从该时间段为止的24小时内的所有股票的变动返回,包括该时间段的开盘,收盘,最高,最低,然后依次来解析。

处理的时候只是将所有整点的数据该股票的最高点解析出来,xhr请求中的时间戳是秒级的,然后将之转换成北京时间,并且将时间进行排序,然后获取正确的其他的并没有进行处理,程序中存储时间和最高点都是用数组存储的,并且改程序是单进程,会更新~

只想说xpath是真心好用,第二次用,解析参数的时候很方便。

#-*-coding:utf-8 -*- import urllib import re import json import urllib2 from lxml import etree import requests import time from Queue import Queue import matplotlib.pyplot as plt URL = 'http://quote.fx678.com/exchange/WH' nation_que = Queue() nation = Queue() high = Queue() start_time = Queue() Chart = [] def __cmp__(self, other): if self.time < other.time: return -1 elif self.time == other.time: return 0 else: return 1 class Share: def __init__(self,time,score): self.time = time self.score = score def __getitem__(self, key): self.item.get(key) def __cmp__(self, other): return cmp(self.time, other.time) def __str__(self): return self.time + " " + str(self.score) def download(url, headers, num_try=2): while num_try >0: num_try -= 1 try: content = requests.get(url, headers=headers) return content.text except urllib2.URLError as e: print 'Download error', e.reason return None def get_type_url(): headers = { 'User_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36', 'Referer': 'http://quote.fx678.com/exchange/WH', 'Cookie': 'io=-voMclEjiizK9nWKALqB; UM_distinctid=15f5938ddc72db-089cf9ba58d9e5-31657c00-fa000-15f5938ddc8b24; Hm_lvt_d25bd1db5bca2537d34deae7edca67d3=1509030420; Hm_lpvt_d25bd1db5bca2537d34deae7edca67d3=1509031023', 'Accept-Language': 'zh-CN,zh;q=0.8', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*' } content = download(URL,headers) html = etree.HTML(content) result = html.xpath('//a[@class="mar_name"]/@href') for each in result: print each st = each.split('/') nation_que.put(st[len(st)-1]) get_precent() def get_precent(): while not nation_que.empty(): ss = nation_que.get(False) print ss url = 'http://api.q.fx678.com/history.php?symbol=' + ss +'&limit=288&resolution=5&codeType=8100&st=0.43342772855649625' print url headers = {'Accept':'application/json, text/javascript, */*; q=0.01', 'Accept-Encoding':'gzip, deflate', 'Accept-Language':'zh-CN,zh;q=0.8', 'Connection':'keep-alive', 'Host':'api.q.fx678.com', 'Origin':'http://quote.fx678.com', 'Referer':'http://quote.fx678.com/symbol/USD', 'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36' } num_try = 2 while num_try >0: num_try -= 1 try: content = requests.get(url, headers=headers) html = json.loads(content.text) st = html['h'] T_time = html['t'] if len(st) > 0 and len(T_time) > 0: nation.put(ss) high.put(st) start_time.put(T_time) break except urllib2.URLError as e: print 'Download error', e.reason nation_que.task_done() draw_pict() def sub_sort(array,array1,low,high): key = array[low] key1 = array1[low] while low < high: while low < high and array[high] >= key: high -= 1 while low < high and array[high] < key: array[low] = array[high] array1[low] = array1[high] low += 1 array[high] = array[low] array1[high] = array1[low] array[low] = key array1[low] = key1 return low def quick_sort(array,array1,low,high): if low < high: key_index = sub_sort(array,array1,low,high) quick_sort(array,array1,low,key_index) quick_sort(array,array1,key_index+1,high) def draw_pict(): while True: Nation = nation.get(False) High = high.get(False) Time = start_time.get(False) T_time = [] High_Rate = [] num = 0 for each,high1 in zip(Time,High): st = time.localtime(float(each)) if st.tm_min == 0: T_time.append(st.tm_hour) High_Rate.append(high1) quick_sort(T_time,High_Rate,0,len(T_time)-1) plt.plot(T_time,High_Rate, marker='*') plt.show() plt.title(Nation) nation.task_done() high.task_done() break if __name__ == '__main__': get_type_url()