代码参考:https://aistudio.baidu.com/aistudio/projectdetail/467668

https://www.cnblogs.com/feifeifeisir/p/10627474.html

本次爬虫主要是通过正则表达式获取想要的信息,以表格的形式存储

参考代码的过程中,加入了自己的一些代码形式,运行过程中有过错误

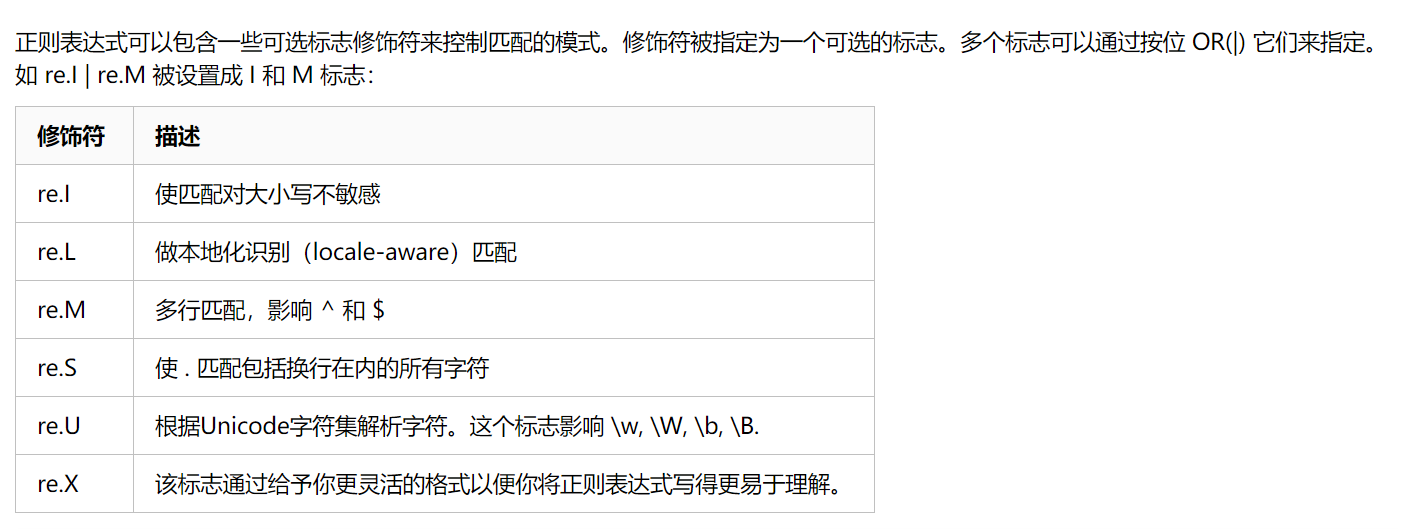

re.S的运用

1 import urllib 2 from urllib import request, error 3 import re 4 from bs4 import BeautifulSoup 5 import xlwt 6 import time 7 8 def askURL(url): 9 headers = { 10 "User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.120 Safari/537.36" 11 } 12 request = urllib.request.Request(url,headers = headers)#发送请求 13 try: 14 response = urllib.request.urlopen(request)#取得响应 15 html= response.read()#获取网页内容 16 # print (html) 17 except urllib.error.URLError as e: 18 if hasattr(e,"code"): 19 print (e.code) 20 if hasattr(e,"reason"): 21 print (e.reason) 22 return html 23 24 25 26 def getData(baseurl): 27 remove = re.compile(r' | |</br>|.*') 28 datalist = [] 29 30 for i in range(0, 10): 31 url = baseurl + str(i * 25) 32 html = askURL(url) 33 soup = BeautifulSoup(html, "html.parser") 34 for item in soup.find_all('div', class_='item'): # 找到每一个影片项 35 data = [] 36 item = str(item) # 转换成字符串 37 # 影片详情链接 38 link = re.findall('<a href="(.*?)">', item)[0] 39 data.append(link) # 添加详情链接 40 imgSrc = re.findall('<img.*src="(.*?)"', item, re.S)[0] 41 data.append(imgSrc) # 添加图片链接 42 titles = re.findall('<span class="title">(.*)</span>', item) 43 # 片名可能只有一个中文名,没有外国名 44 if (len(titles) == 2): 45 ctitle = titles[0] 46 data.append(ctitle) # 添加中文片名 47 otitle = titles[1].replace("/", "") # 去掉无关符号 48 data.append(otitle) # 添加外国片名 49 else: 50 data.append(titles[0]) # 添加中文片名 51 data.append(' ') # 留空 52 53 rating = re.findall('<span class="rating_num" property="v:average">(.*)</span>', item)[0] 54 data.append(rating) # 添加评分 55 judgeNum = re.findall('<span>(d*)人评价</span>', item)[0] 56 data.append(judgeNum) # 添加评论人数 57 inq = re.findall('<span class="inq">(.*)</span>', item) 58 59 # 可能没有概况 60 if len(inq) != 0: 61 inq = inq[0].replace("。", "") # 去掉句号 62 data.append(inq) # 添加概况 63 else: 64 data.append(' ') # 留空 65 bd = re.findall('<p class="">(.*?)</p>', item, re.S)[0] 66 bd = re.sub(remove, "", bd) 67 bd = re.sub('<br(s+)?/?>(s+)?', " ", bd) # 去掉<br > 68 bd = re.sub('/', " ", bd) # 替换/ 69 data.append(bd.strip()) 70 datalist.append(data) 71 72 time.sleep(5) 73 return datalist 74 75 # 将相关数据写入excel表格中 76 def saveData(datalist, savepath): 77 book = xlwt.Workbook(encoding='utf-8', style_compression=0) 78 sheet = book.add_sheet('豆瓣电影Top250', cell_overwrite_ok=True) 79 col = ('电影详情链接', '图片链接', '影片中文名', '影片外国名', '评分', '评价数', '概况', '相关信息') 80 for i in range(0, 8): 81 sheet.write(0, i, col[i]) 82 for i in range(0, 250): 83 data = datalist[i] # 列名 84 for j in range(0, 8): 85 sheet.write(i + 1, j, data[j]) # 数据 86 book.save(savepath) 87 88 89 # savepath = u'/home/cxf/ML_1/豆瓣电影Top250.xlsx' 90 # saveData(datalist,savepath) 91 92 93 def main(): 94 print("爬虫开始了........................") 95 baseurl = 'https://movie.douban.com/top250?start=' 96 datalist = getData(baseurl) 97 savepath = '1豆瓣电影Top250_2.xlsx' 98 saveData(datalist, savepath) 99 100 101 if __name__ == "__main__": 102 main() 103 print("爬取完成,请查看.xlsx文件")