一些概念

安装 mysql

yum安装mysql-server没有可用包问题解决方法:

step 1: wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

step 2: rpm -ivh mysql-community-release-el7-5.noarch.rpm

经过以上两个步骤后再次执行:yum install mysql-server 命令就可以成功安装了。

修改 密码

# 停掉 mysql 服务

systemctl stop mysqld

#修改 mysql 配置

vim /etc/my.cnf

# Disabling symbolic-links is recommended to prevent assorted security risks

skip-grant-tables #添加这句话,这时候登入mysql就不需要密码

symbolic-links=0

# 重新启动 mysql 服务

systemctl start mysqld

mysql -u root -p #敲回车

set password for root@localhost = password('root123'); # 这个会报错误

flush privileges;

set password for root@localhost = password('root123');

flush privileges;

quit;

systemctl stop mysqld.service

修改 配置文件 注释掉 skip-grant 语句

hive 安装步骤

# 去官网拷贝下载地址

wget xxx

tar -xzvf xxx.tar.gz

mv apache-hive-2.3.5-bin /usr/local/

cd /usr/local/apache-hive-2.3.5-bin/conf

需要修改两个 配置文件

cp hive-env.sh.template hive-env.sh

cp hive-default.xml.template hive-site.xml

vim hive-env.sh ,添加 JAVA_HOME , HADOOP_HOME

vim hive-site.xml # 修改 metastore 存储在哪里 (localhost 的 mysql 等)

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root123</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/hive/warhouse</value>

</property>

<property>

<name>hive.exec.scratchdir</name>

<value>/hive/tmp</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/hive/log</value>

</property>

</configuration>

接下来,我们需要去 创建我们的 mysql 数据库服务器 以及数据库实例

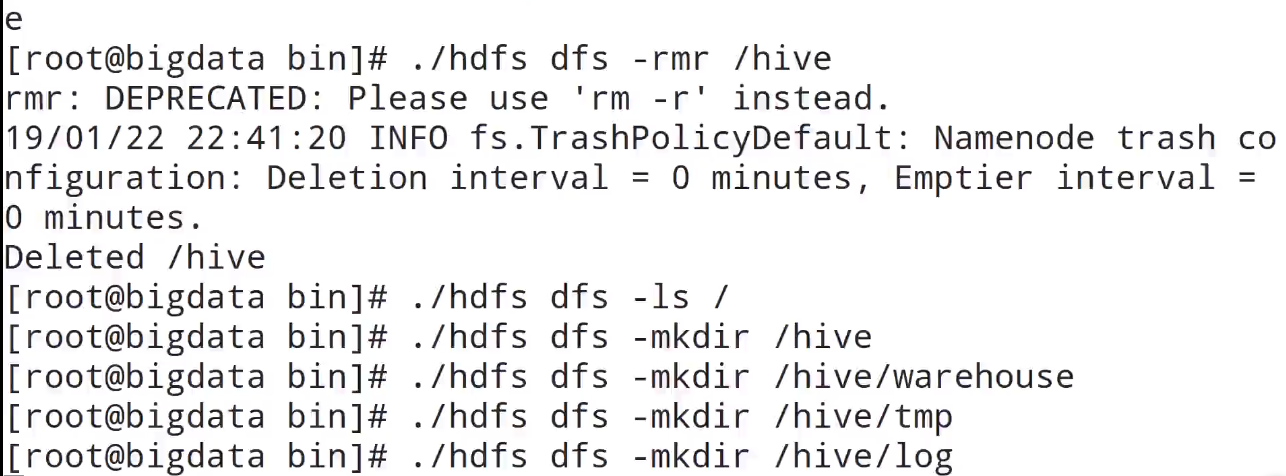

并且,需要在 hdfs 文件系统上为 hive 创建 对应的文件目录 如 下图所示

hdfs dfs -ls /

hdfs dfs -mkdir /hive

hdfs dfs -mkdir /hive/warehouse

hdfs dfs -mkdir /hive/tmp

hdfs dfs -mkdir /hive/log

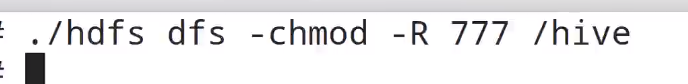

hdfs dfs -chmod -R 777 /hive

./schematool -dbType mysql -initSchema

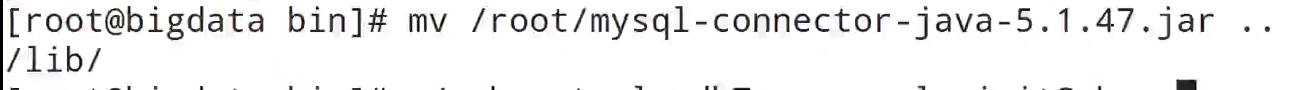

# 提示 缺少 myql 驱动 jar 包

cd /usr/local/apache-hivexxxx/lib

wget http://central.maven.org/maven2/mysql/mysql-connector-java/6.0.6/mysql-connector-java-6.0.6.jar

./schematool -dbType mysql -initSchema

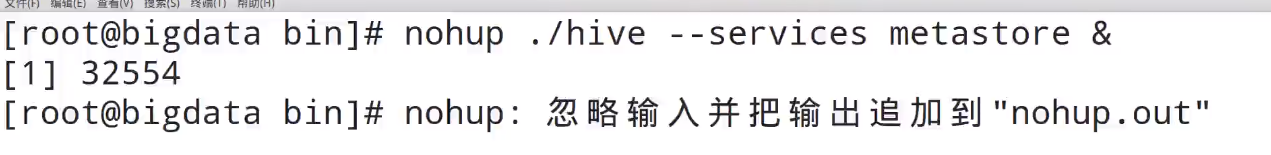

# 以 metastore 方式 启动 hive

nohup ./hive --services metastore &

中间遇到 一些坑 主要是 jdbc 连接字符串的问题 & 进行转义 与符号

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExsit=true&characterEncoding=UTF-8&serverTimezone=UTC</value>

</property>

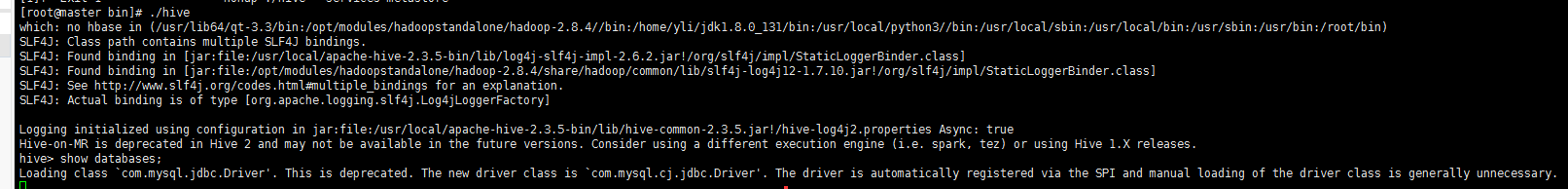

./hive # 进入 交互式的 hive shell

./hive

进入 交互

show databases;

vim testdata.txt

1,tom,music-runing-code,c++:98.0-java:76.0-php:65.0

2,jerry,music-code,c++:93.0-java:70.0-php:55.0

3,john,code,go:87.0-python:93.0

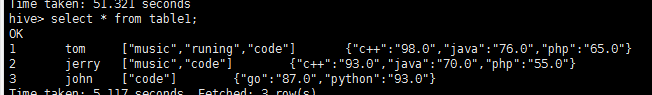

使用 hive 进行导入

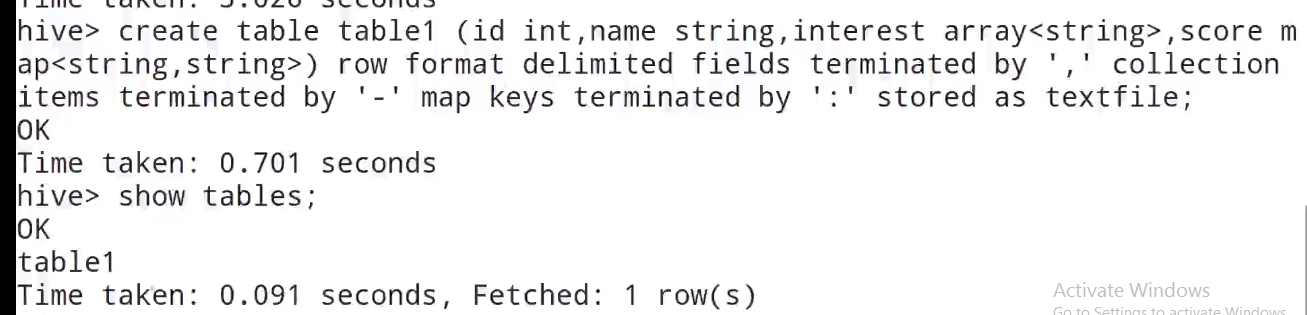

create table table1(id int,name string,interest array

collection items terminated by '-' map keys terminated by ':' stored as textfile;

导入本地文件到 内部表

load data local inpath '/root/testdata.txt' overwrite into table table1;

hdfs dfs -ls /hive/warehouse/table1 # 可以查看到 数据实际上是保存在 了 hdfs 上,元数据信息保存在 mysql 上

外部表

hdfs dfs -mkdir /outertables/

hdfs dfs -copyFromLocal /root/testdata.txt /outertables/

create external table table2(id int,name string,interest array

select * from table2;

desc formatted table2;

加载本地文件到 内部表

加载文件到外部表

内部表在 drop 时候 hdfs 上 /hive/warhouse/ 下的数据 也被干掉了

而 外部表的 则不会被干掉

external 关键字

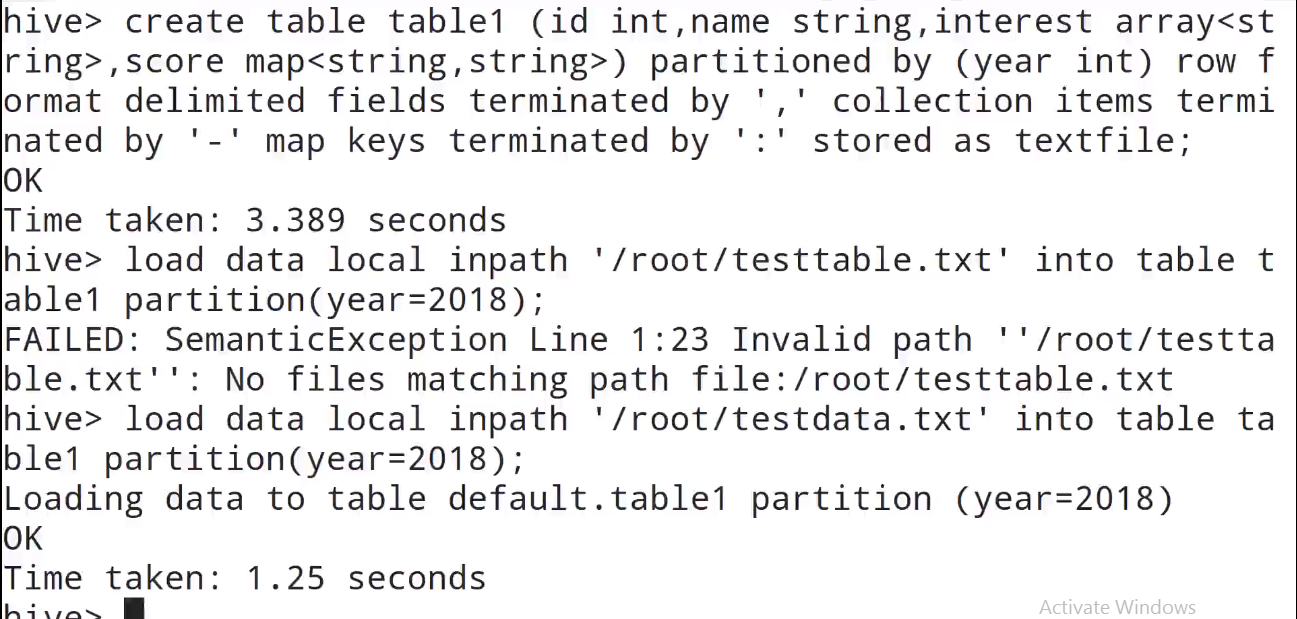

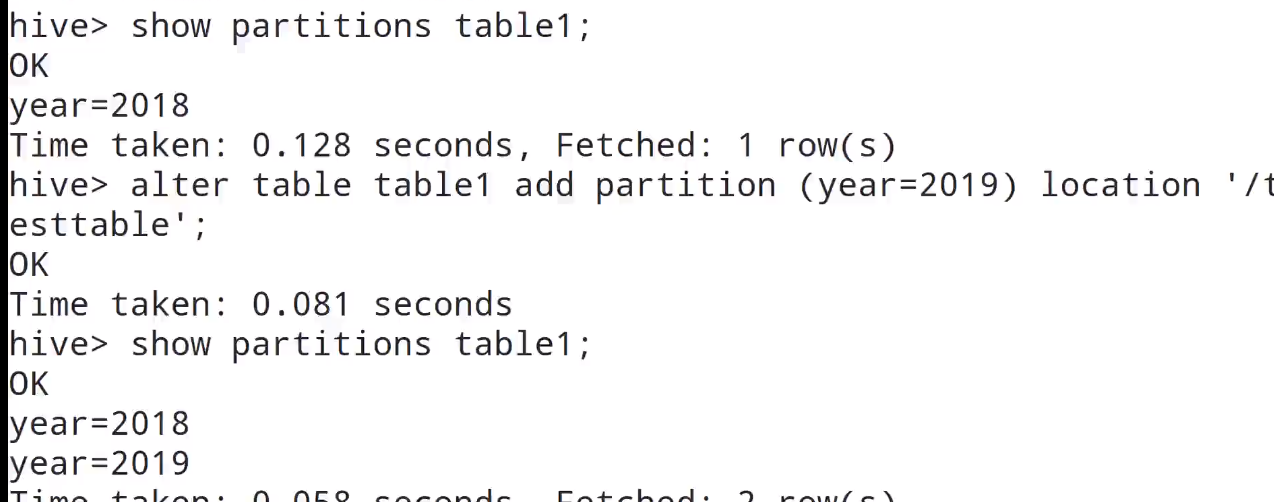

分区表 ,可以提高 查找效率

create table table3(id int, name string, interest array

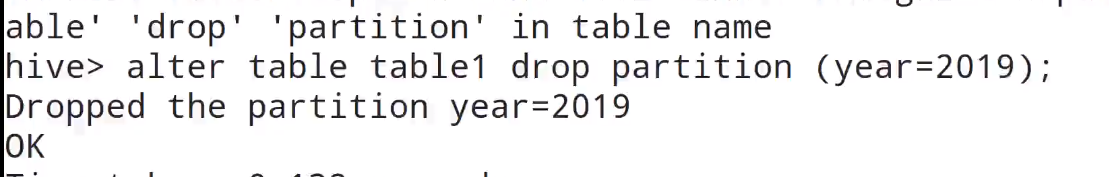

删除分区

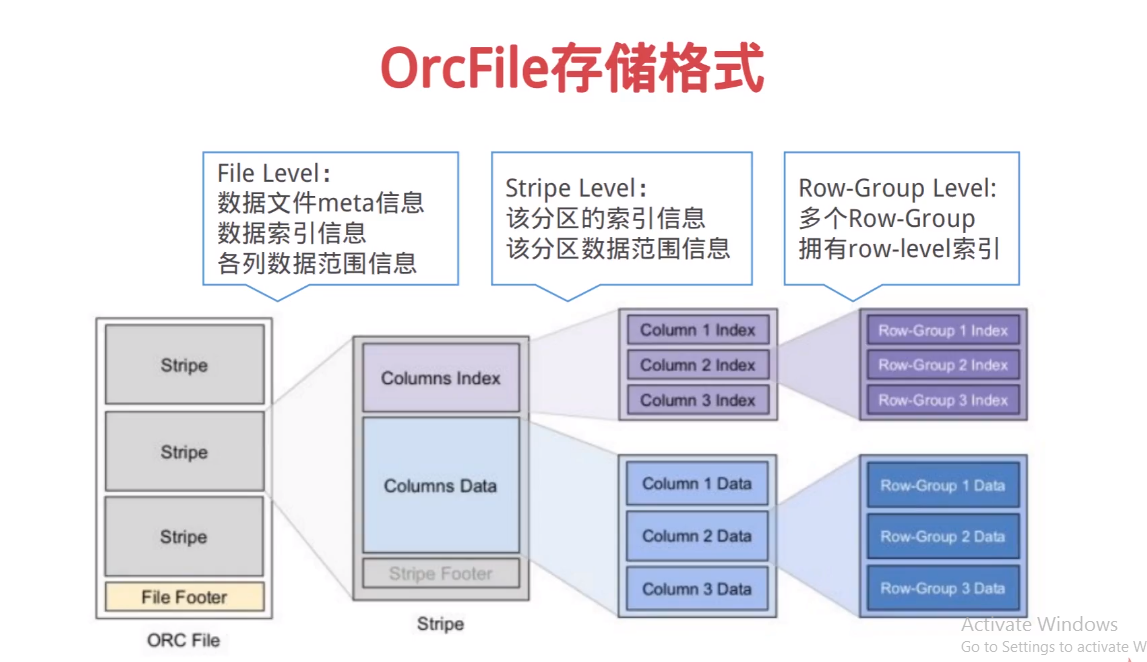

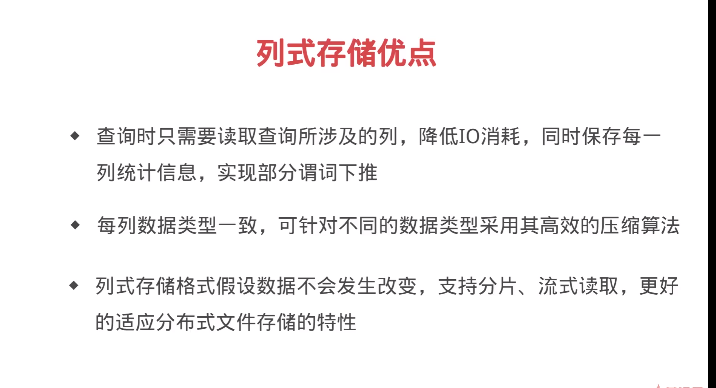

列式存储

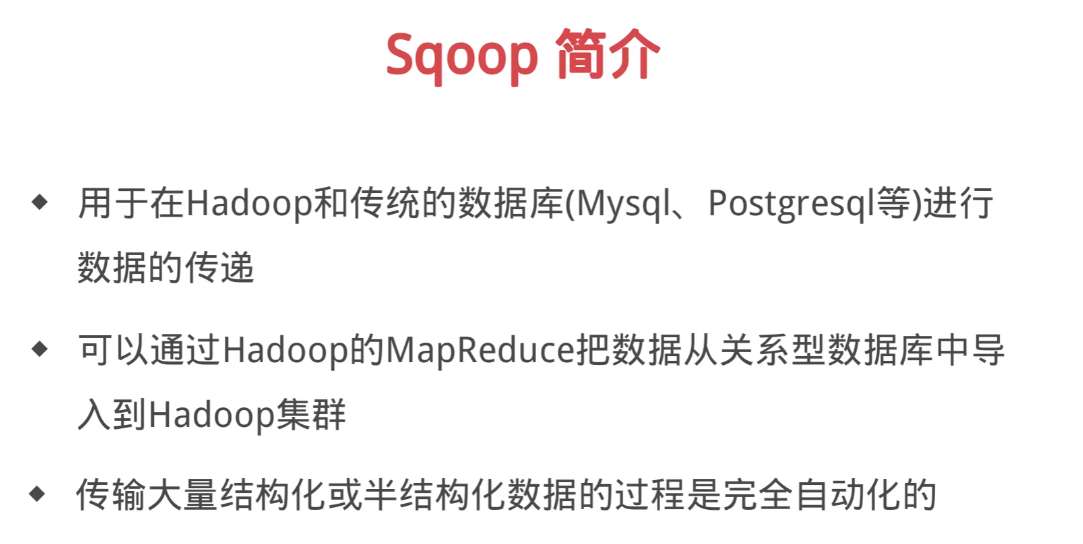

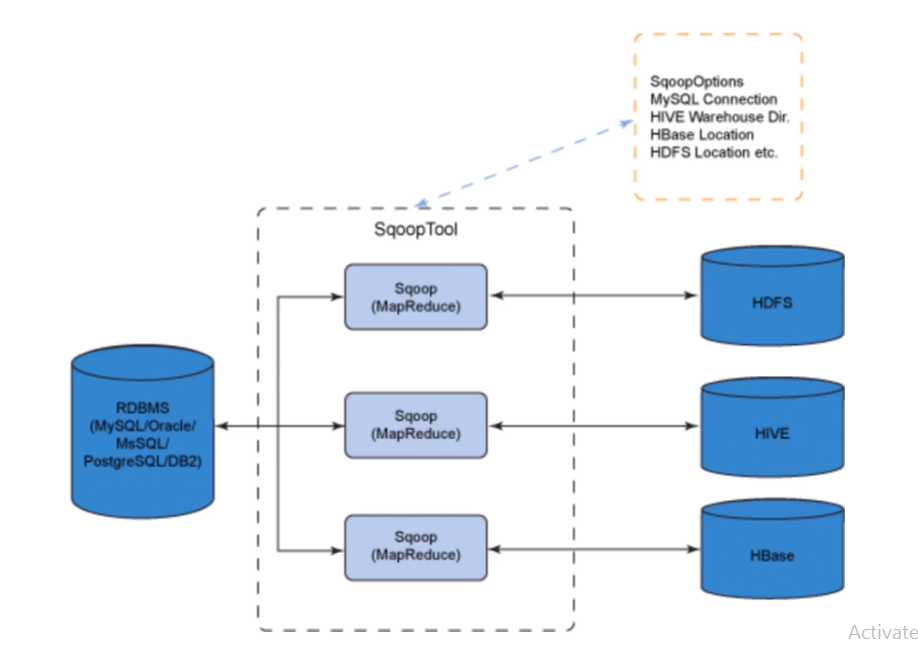

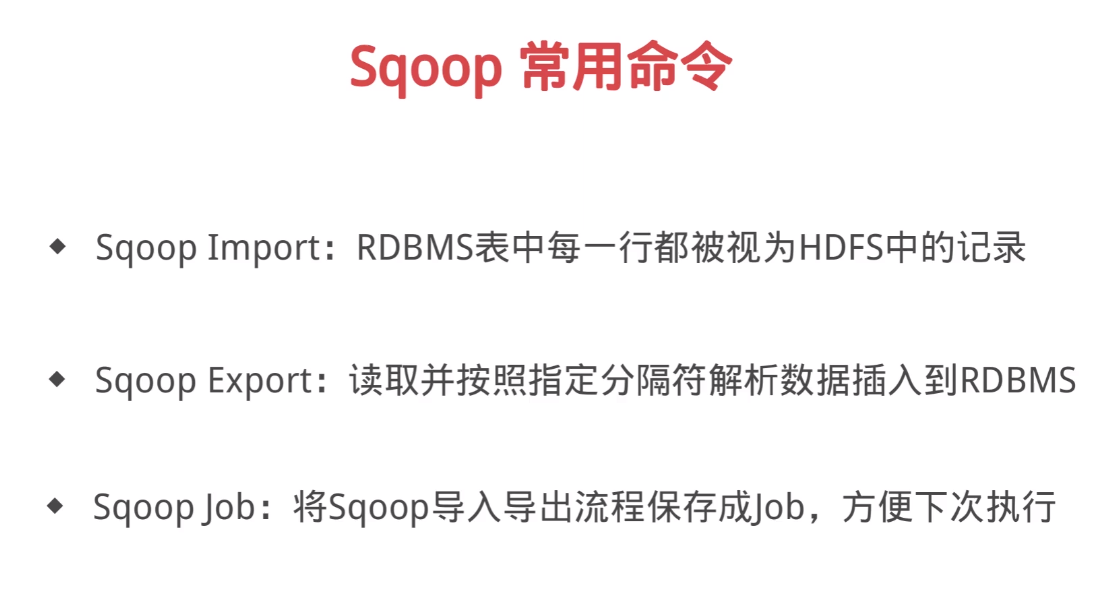

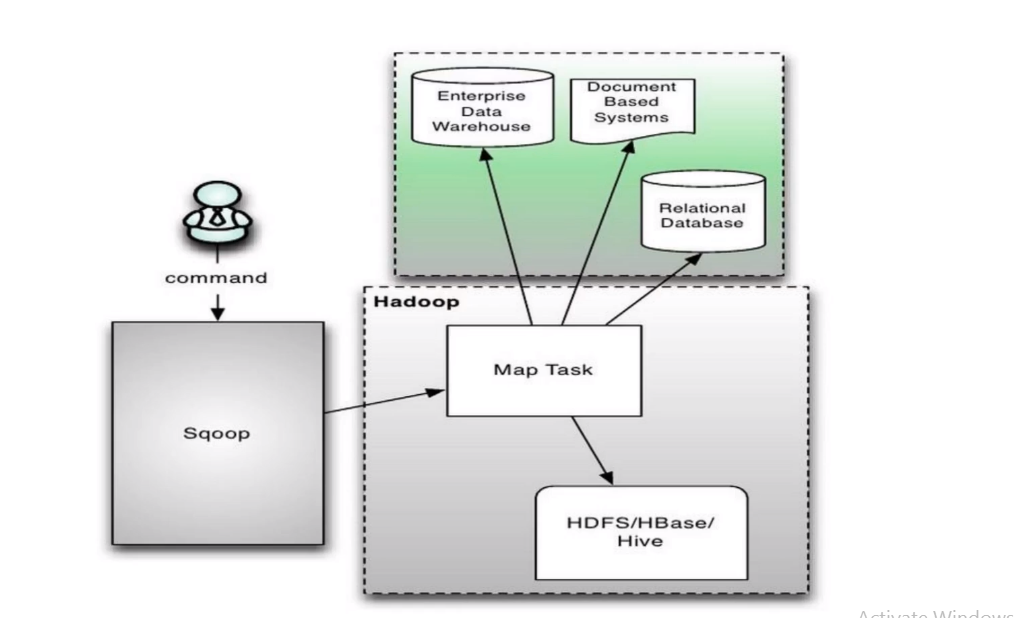

sqoop 可以认为 是 ETL 工具, 数据采集,沟通 传统关系型数据库与hdfs的桥梁 (转化为 MR 操作)

wget https://mirrors.tuna.tsinghua.edu.cn/apache/sqoop/1.4.7/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

tar xzvf xxx

mv xxx /usr/local

cp ../apache-hive-2.3.5-bin/lib/mysql-connector-java-6.0.6.jar ./lib/

./sqoop list-databases --connect jdbc:mysql://localhost:3306?serverTimezone=UTC --username root -password root123

presto 安装

wget https://repo1.maven.org/maven2/com/facebook/presto/presto-cli/0.212/presto-cli-0.212-executable.jar

wget https://repo1.maven.org/maven2/com/facebook/presto/presto-server/0.212/presto-server-0.212.tar.gz

总结

hive sql 映射成为 map reduce

hive bin 目录下常用命令

sqoop 命令详细 导入导出, list 等

presto