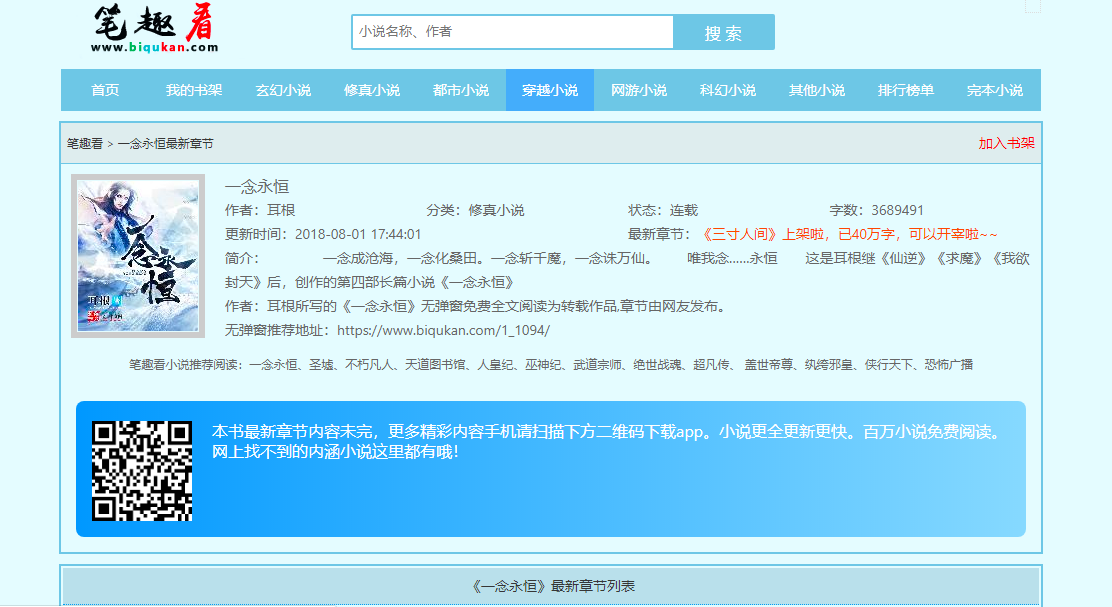

我们首先选定从笔趣看网站爬取这本小说。

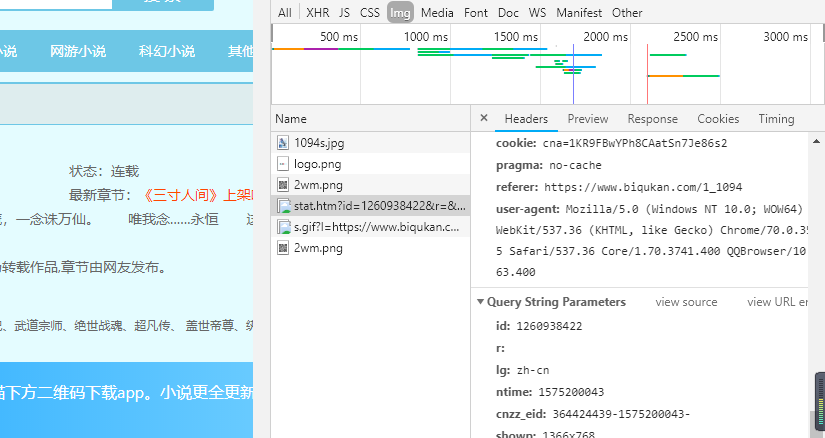

然后开始分析网页构造,这些与以前的分析过程大同小异,就不再多叙述了,只需要找到几个关键的标签和user-agent基本上就可以了。

那么下面,我们直接来看代码。

from urllib import request from bs4 import BeautifulSoup import re import sys if __name__ == "__main__": #创建txt文件 file = open('一念永恒.txt', 'w', encoding='utf-8') #一念永恒小说目录地址 target_url = 'http://www.biqukan.com/1_1094/' head = {} head['User-Agent'] = 'Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19' target_req = request.Request(url = target_url, headers = head) target_response = request.urlopen(target_req) target_html = target_response.read().decode('gbk','ignore') listmain_soup = BeautifulSoup(target_html) #找出div标签中class为listmain的所有子标签 chapters = listmain_soup.find_all('div',class_ = 'listmain') download_soup = BeautifulSoup(str(chapters)) #计算章节个数 numbers = (len(download_soup.dl.contents) - 1) / 2 - 8 index = 1 begin_flag = False for child in download_soup.dl.children: if child != ' ': #找到《一念永恒》正文卷 if child.string == u"《一念永恒》正文卷": begin_flag = True #爬取链接并下载链接内容 if begin_flag == True and child.a != None: download_url = "http://www.biqukan.com" + child.a.get('href') download_req = request.Request(url = download_url, headers = head) download_response = request.urlopen(download_req) download_html = download_response.read().decode('gbk','ignore') download_name = child.string soup_texts = BeautifulSoup(download_html) texts = soup_texts.find_all(id = 'content', class_ = 'showtxt') soup_text = BeautifulSoup(str(texts)) write_flag = True file.write(download_name + ' ') #将爬取内容写入文件 for each in soup_text.div.text.replace('xa0',''): if each == 'h': write_flag = False if write_flag == True and each != ' ': file.write(each) if write_flag == True and each == ' ': file.write(' ') print('正在写入第{0}小节'.format(index)) index+=1 file.write(' ') #打印爬取进度 sys.stdout.write("已下载:%.3f%%" % float(index/numbers) + ' ') sys.stdout.flush() index += 1 file.close()

这个代码可能还存在着一些小问题,但是并不影响我们爬取小说,下面来看看我们的运行结果。