作业一:

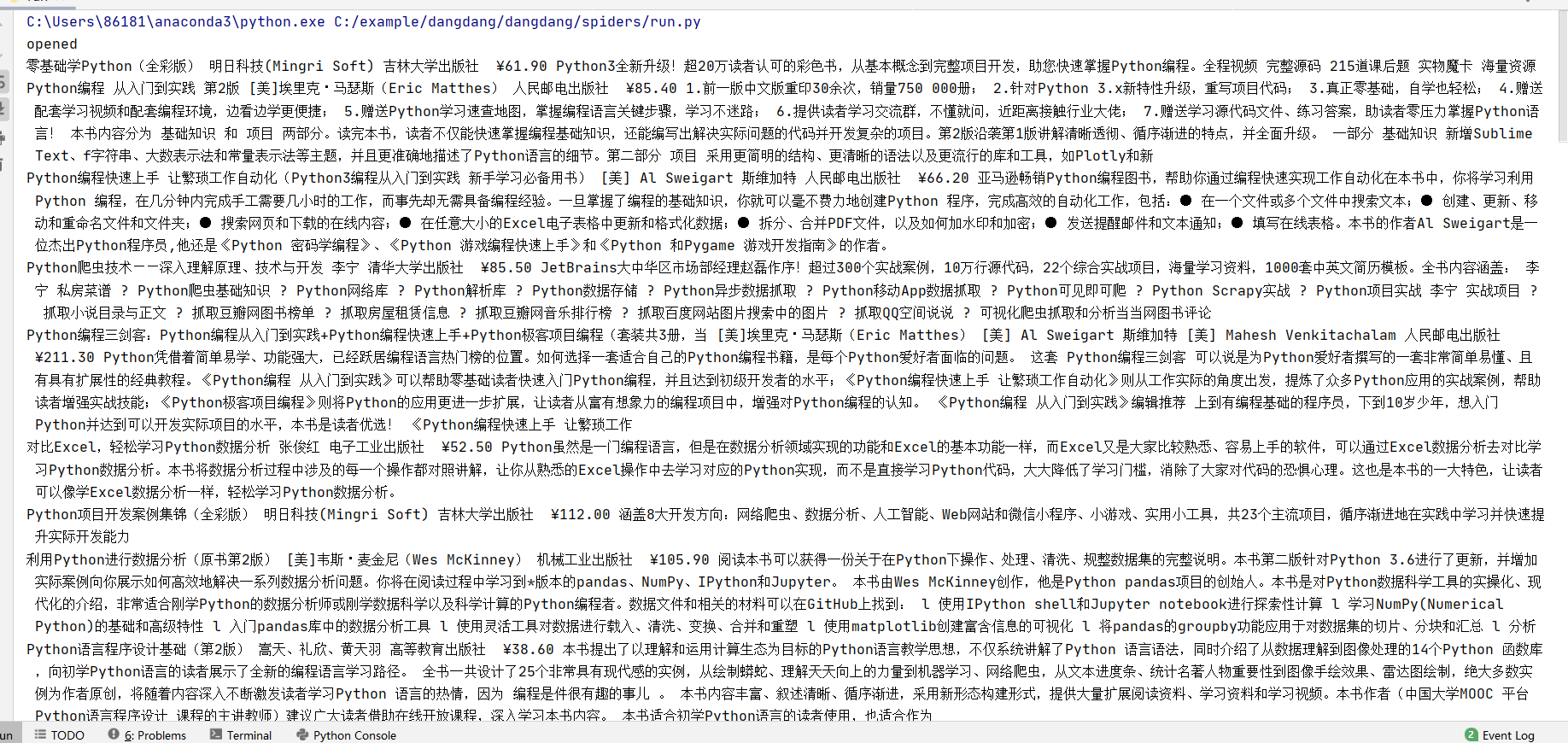

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取当当网站图书数据

候选网站:http://www.dangdang.com/

关键词:学生自由选择

输出信息:MYSQL的输出信息如下

代码

mysql表格结构:

book.py

import scrapy

from ..items import DangdangItem

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

class BookSpider(scrapy.Spider):

name = 'book'

key = "python"

start_url = 'http://search.dangdang.com/'

def start_requests(self):

url = BookSpider.start_url + "?key=" + BookSpider.key+"act=input"

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

try:

dammit = UnicodeDammit(response.body,["utf-8","gdk"])

data =dammit.unicode_markup

selector = scrapy.Selector(text=data)

lis = selector.xpath("//li['@ddt-pit'][starts-with(@class,'line')]")

for li in lis:

title = li.xpath("./a[position()=1]/@title").extract_first()

price = li.xpath("./p[@class='price']/span[@class='search_now_price']/text()").extract_first()

author = li.xpath("./p[@class='search_book_author']/span[position()=1]/a/@title").extract_first()

date = li.xpath("./p[@class='search_book_author']/span[position()=last-1]/text()").extract_first()

publisher =li.xpath("./p[@class='search_book_author']/span[position()=last()]/a/@title").extract_first()

detail = li.xpath("./p[@class='detail']/text()").extract_first()

item = DangdangItem()

item["title"]=title.strip()if title else""

item["author"]=author.strip()if author else""

item["publisher"]=publisher.strip()if publisher else""

item["date"]=date.strip()[1:]if date else""

item["price"]=price.strip()if price else""

item["detail"]=detail.strip()if detail else""

yield item

link = selector.xpath("//div[@class='paging']/ul[@name='Fy']/li[@class='next']/a/@href").extract_first()

if link:

url=response.urljoin(link)

except Exception as err:

print(err)

pass

items.py

import scrapy

class DangdangItem(scrapy.Item):

title = scrapy.Field()

author = scrapy.Field()

date = scrapy.Field()

publisher = scrapy.Field()

detail = scrapy.Field()

price = scrapy.Field()

pipeline.py

import pymysql

class DangdangPipeline(object):

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="20201006Wu", db="book",

charset="utf8") # 链接数据库,db要是自己建的数据库

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from book") # 删除表格的原来内容

self.opened = True # 执行打开数据库

self.count = 0 # 计数

except Exception as error:

print(error)

self.opened = False # 不执行打开数据库

def process_item(self, item, spider):

try:

print(item["title"], item["author"], item["publisher"], item["date"], item["price"], item["detail"])

if self.opened:

self.cursor.execute(

"insert into book(bTitle,bAuthor,bPublisher,bDate,bPrice,bDetail)values(%s,%s,%s,%s,%s,%s)",

(item["title"], item["author"], item["publisher"], item["date"], item["price"], item["detail"]))

self.count +=1

except Exception as e:

print(e)

return item

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

print("爬取了", self.count, "本书")

settings.py

BOT_NAME = 'dangdang'

ROBOTSTXT_OBEY = False

SPIDER_MODULES = ['dangdang.spiders']

NEWSPIDER_MODULE = 'dangdang.spiders'

ITEM_PIPELINES = {

'dangdang.pipelines.DangdangPipeline': 300,

}

run.py

from scrapy import cmdline

cmdline.execute("scrapy crawl book -s LOG_ENABLED=False".split())

心得

基本上都是书上的代码,重要的是如何理解这些操作,后面的作业需要这些基础,代码部分有些注释

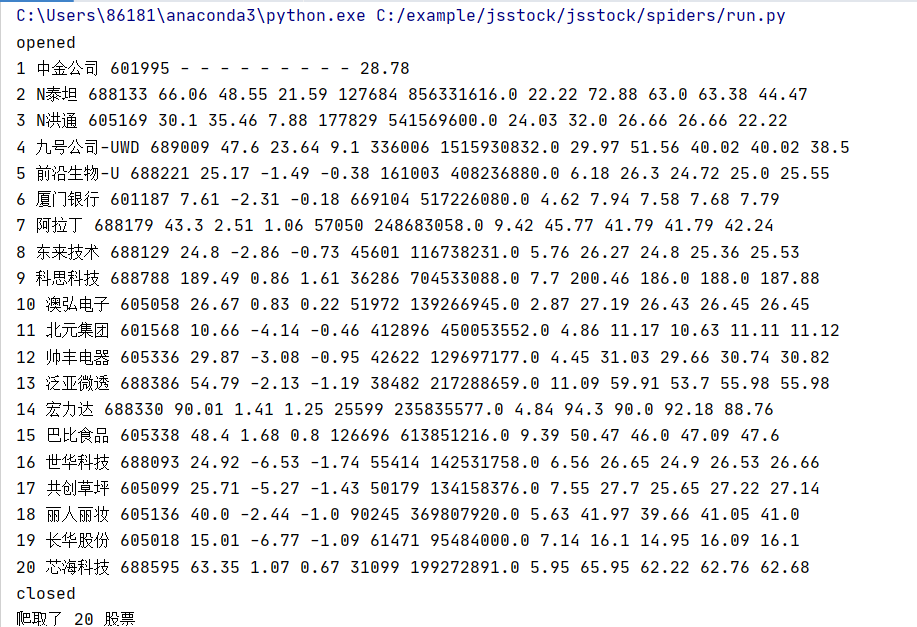

作业二:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取股票相关信息

候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

输出信息:MYSQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头:

代码

mysql表格建立:

stock.py

import scrapy

from ..items import JsstockItem

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

import re

class StockSpider(scrapy.Spider):

name = 'stock'

start_url = 'http://82.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124007929044454484524_1601878281258&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f26&fs=m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1601536578736'

def start_requests(self):

url = StockSpider.start_url

yield scrapy.Request(url=url, callback=self.parse)

# 初始网址由于之前写的比较混乱所以就用page=1来代替不做翻页操作

def parse(self, response):

count = 0

pat = '"diff":[(.*?)]' # 因为之前是用正则表达式

data = re.compile(pat, re.S).findall(response.text) # 获取数据

data = data[0].strip("{").strip("}").split('},{') # 一页股票数据

for i in range(len(data)):

data_one = data[i].replace('"', "") # 相当于一条记录

count += 1

item = JsstockItem() # 获取信息定义为item

stat = data_one.split(',') # 数据所在的位置

# 接下来要做的就是一一对应传参

item['count'] = count

name = stat[13].split(":")[1]

item['name'] = name

num = stat[11].split(":")[1]

item['num'] = num

lastest_pri = stat[1].split(":")[1]

item['lastest_pri'] = lastest_pri

dzf = stat[2].split(":")[1]

item['dzf'] = dzf

dze = stat[3].split(":")[1]

item['dze'] = dze

cjl = stat[4].split(":")[1]

item['cjl'] = cjl

cje = stat[5].split(":")[1]

item['cje'] = cje

zf = stat[6].split(":")[1]

item['zf'] = zf

top = stat[14].split(":")[1]

item['top'] = top

low = stat[15].split(":")[1]

item['low'] = low

today = stat[16].split(":")[1]

item['today'] = today

yestd = stat[17].split(":")[1]

item['yestd'] = yestd

yield item

items.py

import scrapy

class JsstockItem(scrapy.Item):

# 定义变量

count = scrapy.Field()

name = scrapy.Field()

num = scrapy.Field()

lastest_pri = scrapy.Field()

dzf = scrapy.Field()

dze = scrapy.Field()

cjl = scrapy.Field()

cje = scrapy.Field()

zf = scrapy.Field()

top = scrapy.Field()

low = scrapy.Field()

today = scrapy.Field()

yestd = scrapy.Field()

pipeline.py

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

import pymysql

class JsstockPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="20201006Wu", db="jsstock",

charset="utf8") # 链接数据库,db要是自己建的数据库

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from stock") # 删除表格的原来内容

self.opened = True # 执行打开数据库

self.count = 0 # 总计数

except Exception as error:

print(error)

self.opened = False # 不执行打开数据库

def process_item(self, item, spider):

try:

print(item['count'], item['name'], item['num'], item['lastest_pri'], item['dzf'], item['dze'], item['cjl'],

item['cje'], item['zf'], item['top'], item['low'], item['today'], item['yestd'])

if self.opened:

self.cursor.execute(

"insert into stock(count,stockname,num,lastest_pri,dzf, dze, cjl,cje, zf, top,low,today,yestd) values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(item["count"], item["name"], item["num"], item["lastest_pri"], item["dzf"], item["dze"],

item["cjl"],

item["cje"], item["zf"], item["top"], item["low"], item["today"], item["yestd"])

)

self.count += 1

except Exception as e:

print(e)

return item

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

print("爬取了", self.count, "股票")

settings.py

BOT_NAME = 'jsstock'

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'jsstock.pipelines.JsstockPipeline': 300,

}

SPIDER_MODULES = ['jsstock.spiders']

NEWSPIDER_MODULE = 'jsstock.spiders'

run.py

from scrapy import cmdline

cmdline.execute("scrapy crawl stock -s LOG_ENABLED=False".split())

心得

主要是pipeline.py是需要根据上面作业①的理解基础上实现出来,

其他的部分与实验三是可以直接转移的,

后续短暂的调试了一下基本正确的结果就会出来了

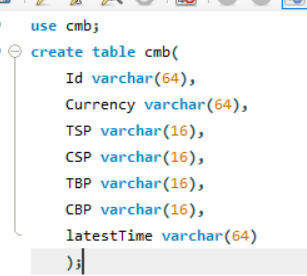

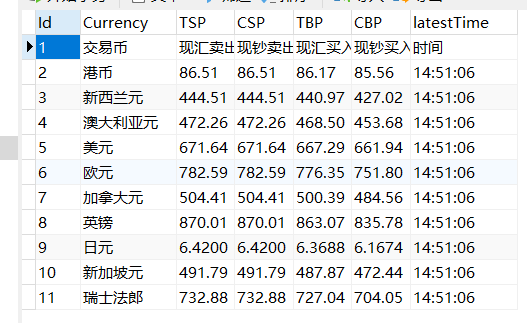

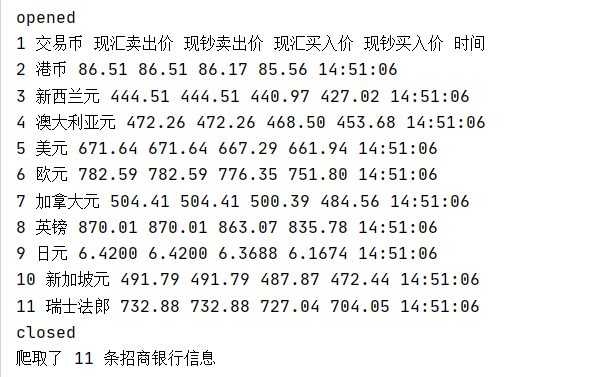

作业三:

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:招商银行网:http://fx.cmbchina.com/hq/

输出信息:MYSQL数据库存储和输出格式

代码

mysql建立表格结构:

cmbbank.py

import scrapy

from ..items import CmbbankItem

from bs4 import BeautifulSoup

from bs4 import UnicodeDammit

class CmbchinaSpider(scrapy.Spider):

name = 'cmbchina'

start_url = 'http://fx.cmbchina.com/hq/'

def start_requests(self):

url = CmbchinaSpider.start_url

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

dammit = UnicodeDammit(response.body, ["utf-8", "gdk"])

data = dammit.unicode_markup

selector = scrapy.Selector(text=data)

count = 1

# 找到要存储的信息

lis = selector.xpath("//div[@id='realRateInfo']/table/tr")

for li in lis:

Id = count

count += 1

Currency = li.xpath("./td[position()=1]/text()").extract_first()

Currency =str(Currency).strip()

TSP = li.xpath("./td[position()=4]/text()").extract_first()

TSP =str(TSP).strip()

CSP = li.xpath("./td[position()=5]/text()").extract_first()

CSP = str(CSP).strip()

TBP = li.xpath("./td[position()=6]/text()").extract_first()

TBP = str(TBP).strip()

CBP = li.xpath("./td[position()=7]/text()").extract_first()

CBP = str(CBP).strip()

Time = li.xpath("./td[position()=8]/text()").extract_first()

Time=str(Time).strip()

item = CmbbankItem()

item["Id"] = Id

item["Currency"] = Currency

item["TSP"] = TSP

item["CSP"] = CSP

item["TBP"] = TBP

item["CBP"] = CBP

item["Time"] = Time

yield item

items.py

import scrapy

class CmbbankItem(scrapy.Item):

Id= scrapy.Field()

Currency= scrapy.Field()

TSP= scrapy.Field()

CSP= scrapy.Field()

TBP= scrapy.Field()

CBP= scrapy.Field()

Time= scrapy.Field()

setting.py

ITEM_PIPELINES = {

'cmbbank.pipelines.CmbbankPipeline': 300,

}

BOT_NAME = 'cmbbank'

ROBOTSTXT_OBEY = False

SPIDER_MODULES = ['cmbbank.spiders']

NEWSPIDER_MODULE = 'cmbbank.spiders'

pipelines.py

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

import pymysql

class CmbbankPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="127.0.0.1", port=3306, user="root", passwd="20201006Wu", db="cmb",

charset="utf8") # 链接数据库,db要是自己建的数据库

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.cursor.execute("delete from cmb") # 删除表格的原来内容

self.opened = True # 执行打开数据库

self.count = 0 # 计数

except Exception as error:

print(error)

self.opened = False # 不执行打开数据库

def process_item(self, item, spider):

try:

print(item["Id"], item["Currency"], item["TSP"], item["CSP"], item["TBP"], item["CBP"],item["Time"])

if self.opened:

self.cursor.execute(

"insert into cmb(Id,Currency,TSP,CSP,TBP,CBP,latestTime)values(%s,%s,%s,%s,%s,%s,%s)",

(item["Id"], item["Currency"], item["TSP"], item["CSP"], item["TBP"], item["CBP"],item["Time"]))

self.count +=1

except Exception as e:

print(e)

return item

def close_spider(self, spider):

if self.opened:

self.con.commit()

self.con.close()

self.opened = False

print("closed")

print("爬取了", self.count, "条招商银行信息")

run.py

from scrapy import cmdline

cmdline.execute("scrapy crawl cmbchina -s LOG_ENABLED=False".split())

心得

最后一个可能花的时间更长一些,写出来很快,但是运行出准确的答案却耗时不少。原因可能如下:

1.xpath的灵活运用可能欠缺,

2.然后网页的html阅读起来是不难,但是自己理解可能与实际的网页还是有所偏差;

3.xpath的寻找的时候多个条件直接显示none type,最后我是通过position一个个限制住,

4.最大的收获可能就是在scrapy的框架进行调试吧!

附上调试的中间图片: