1.使用Docker-compose实现Tomcat+Nginx负载均衡

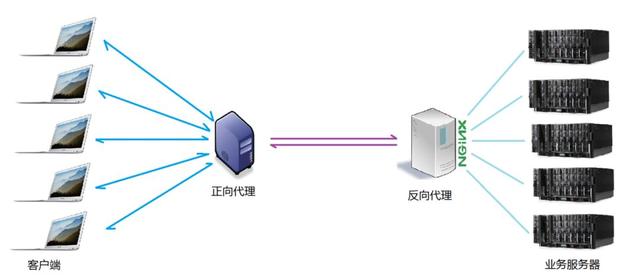

(1)对nginx反向代理的理解

- 我觉得这个讲的挺明白的:nginx和代理说明

- 正反向代理示意图如下

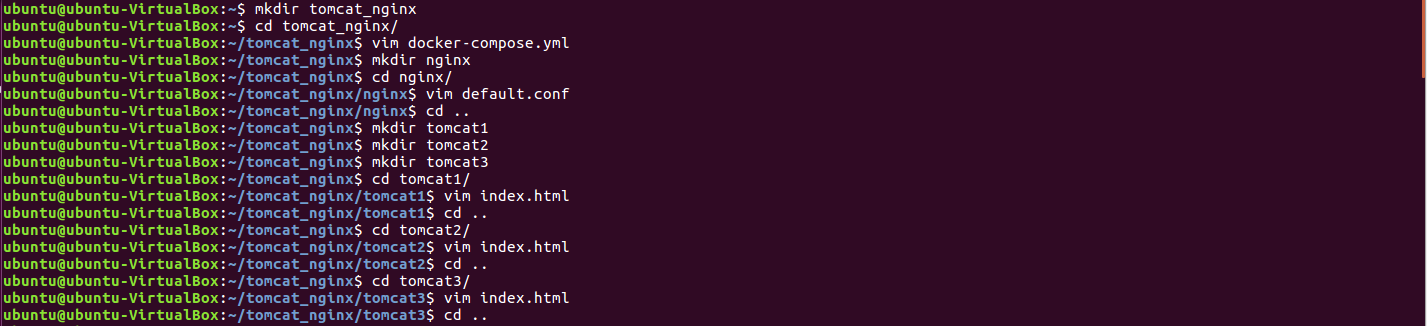

(2)nginx代理tomcat集群,代理2个以上tomcat

-

使用命令建立文件和文件夹

-

最后得到文件树结构

-

default.conf

upstream tomcats { server tomcat1:8080; # 主机名:端口号 server tomcat2:8080; # tomcat默认端口号8080 server tomcat3:8080; # 默认使用轮询策略 } server { listen 80; server_name localhost; location / { proxy_pass http://tomcats; # 请求转向tomcats } } -

tomcat1的html

<h1> hello tomcat1 </h1> -

tomcat2的html

<h1> hello tomcat2 </h1> -

tomcat3的html

<h1> hello tomcat3 </h1> -

docker-compose.yml

-

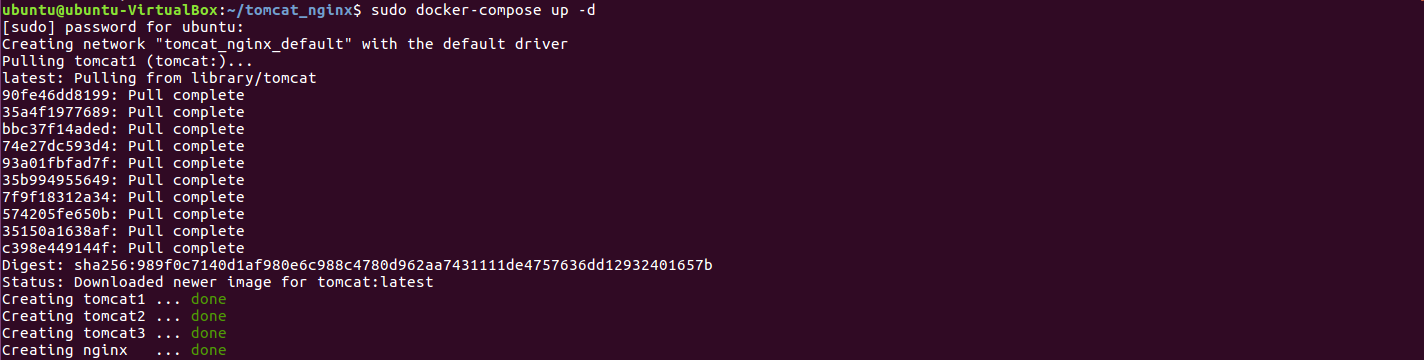

运行命令

sudo docker-compose up -d

-

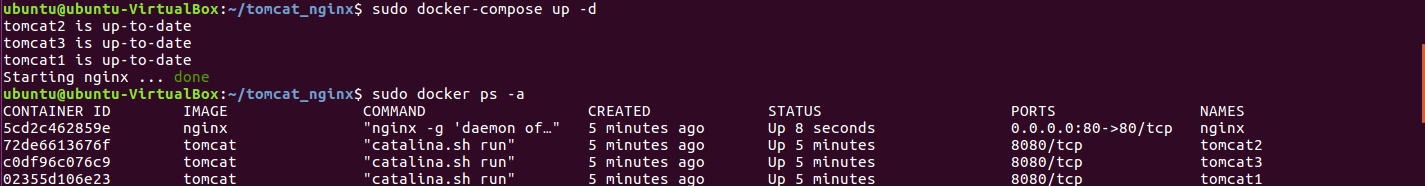

特别地,在用权重均衡时查看容器状态

sudo docker ps -a, 发现nginx运行失败经过检查,原因是nginx的default,conf文件严格遵守语法规则,weight后的等号两边不允许出现空格

version: "3" services: nginx: image: nginx container_name: nginx ports: - "80:80" volumes: - ./nginx/default.conf:/etc/nginx/conf.d/default.conf # 将主机上的tomcat_nginx/nginx/default.conf目录挂在到容器的/etc/nginx/conf.d/default.conf目录 depends_on: - tomcat1 - tomcat2 - tomcat3 tomcat1: image: tomcat container_name: tomcat1 volumes: - ./tomcat1:/usr/local/tomcat/webapps/ROOT # 挂载web目录 tomcat2: image: tomcat container_name: tomcat2 volumes: - ./tomcat2:/usr/local/tomcat/webapps/ROOT tomcat3: image: tomcat container_name: tomcat3 volumes: - ./tomcat3:/usr/local/tomcat/webapps/ROOT

-

(3)了解nginx的负载均衡策略,并至少实现nginx的2种负载均衡策略

-

编写爬虫

import requests url="http://127.0.0.1" for i in range(0,10): reponse=requests.get(url) print(reponse.text) -

轮询策略(默认)

-

权重策略

-

重新编写default.conf文件

#注意不能有空格 upstream tomcats { server tomcat1:8080 weight=2; # 主机名:端口号 server tomcat2:8080 weight=3; # tomcat默认端口号8080 server tomcat3:8080 weight=5; # 使用权重策略 } server { listen 80; server_name localhost; location / { proxy_pass http://tomcats; # 请求转向tomcats } } -

运行爬虫

-

此爬虫难以观察结果,重新编写爬虫

import requests url="http://127.0.0.1" count={} for i in range(0,2000): response=requests.get(url) if response.text in count: count[response.text]+=1; else: count[response.text]=1 for a in count: print(a, count[a])

-

2.使用Docker-compose部署javaweb运行环境

-

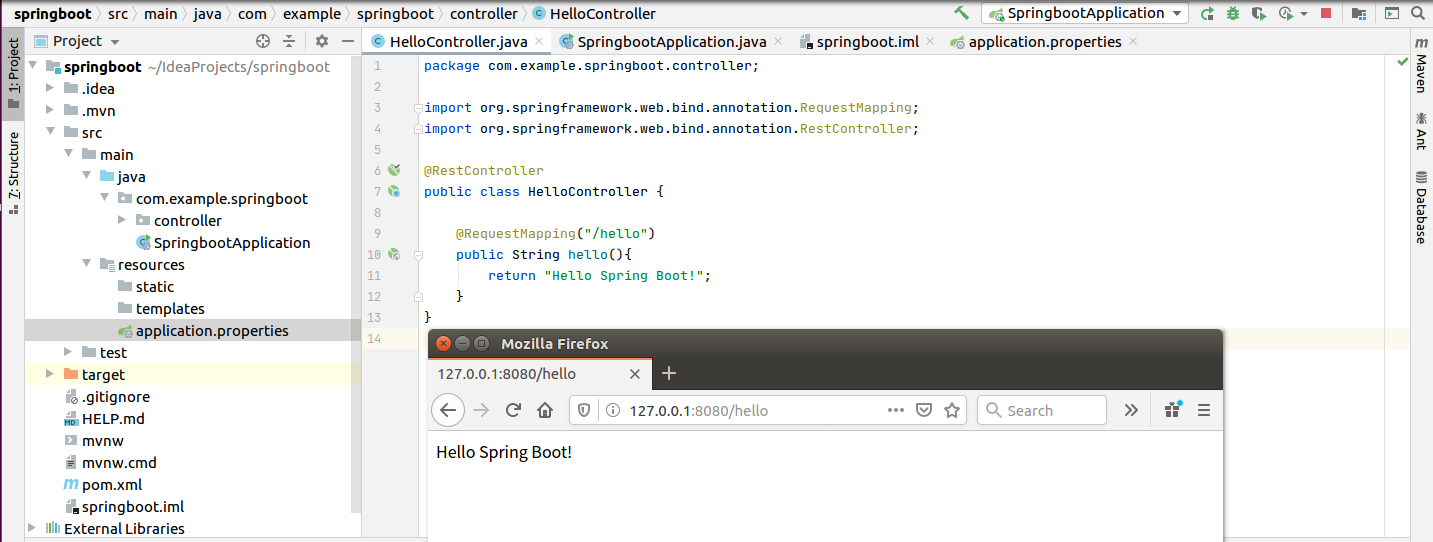

同学推荐用的SpringBoot且成功了,就不踩别的坑了。

-

参考资料

-

IDEA搭建SpringBoot的过程参考, 熟悉IDEA工具

-

关于打包成war的方法,可直接在SpringBoot构建项目的过程中选择war包,也可以参考 使用idea将springboot打包成war包

-

-

测试IDEA的SpringBoot项目是否可用

-

构建的文件树结构如下

-

mysql的Dockerfile

#基础镜像 FROM mysql:5.7 #将所需文件放到容器中 COPY setup.sh /mysql/setup.sh COPY schema.sql /mysql/schema.sql COPY privileges.sql /mysql/privileges.sql #设置容器启动时执行的命令 CMD ["sh", "/mysql/setup.sh"] -

mysql的privileges.sql

grant all privileges on *.* to 'root'@'%' identified by '123456' with grant option; flush privileges; -

mysql的schema.sql

-- 创建数据库 create database `docker_mysql` default character set utf8 collate utf8_general_ci; use docker_mysql; -- 建表 DROP TABLE IF EXISTS user; CREATE TABLE user ( `id` int NOT NULL, `name` varchar(40) DEFAULT NULL, `major` varchar(255) DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1; -- 插入数据 INSERT INTO user (`id`, `name`, `major`) VALUES (031702223,'zzq','computer science'); -

mysql的setup.sh

#!/bin/bash set -e #查看mysql服务的状态,方便调试,这条语句可以删除 echo `service mysql status` echo '1.启动mysql....' #启动mysql service mysql start sleep 3 echo `service mysql status` echo '2.开始导入数据....' #导入数据 mysql < /mysql/schema.sql echo '3.导入数据完毕....' sleep 3 echo `service mysql status` #重新设置mysql密码 echo '4.开始修改密码....' mysql < /mysql/privileges.sql echo '5.修改密码完毕....' #sleep 3 echo `service mysql status` echo `mysql容器启动完毕,且数据导入成功` tail -f /dev/null -

tomcat的Dockerfile

-

FROM tomcat COPY ./wait-for-it.sh /usr/local/tomcat/bin/ RUN chmod +x /usr/local/tomcat/bin/wait-for-it.sh # 判断mysql是否已启动,如果启动就执行 catalina.sh run CMD ["wait-for-it.sh", "cdb:3306", "--", "catalina.sh", "run"]

-

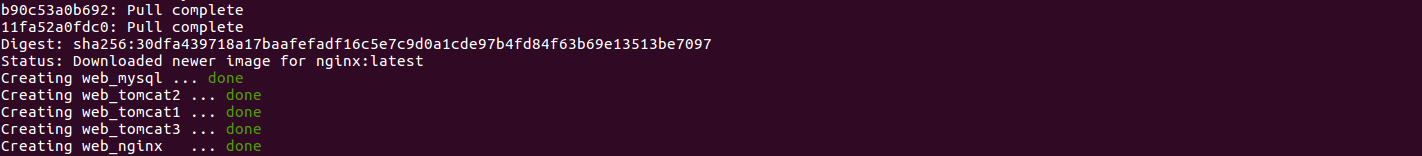

docker-compose.yml

version: '3' services: #tomcat setting tomcat1: container_name: web_tomcat1 build: ./tomcat image: tomcat #volumes path|host path:container path volumes: - ./tomcat:/usr/local/tomcat/webapps #connect to another container links: - mysql depends_on: - mysql restart: always tomcat2: container_name: web_tomcat2 build: ./tomcat image: tomcat #volumes path|host path:container path volumes: - ./tomcat:/usr/local/tomcat/webapps #connect to another container links: - mysql depends_on: - mysql restart: always tomcat3: container_name: web_tomcat3 build: ./tomcat image: tomcat #volumes path|host path:container path volumes: - ./tomcat:/usr/local/tomcat/webapps #connect to another container links: - mysql depends_on: - mysql restart: always #mysql setting mysql: container_name: web_mysql image: mysql:5.7 build: ./mysql ports: - "3306:3306" environment: #Initialize the root password MYSQL_ROOT_PASSWORD: 123456 #nginx setting nginx: container_name: web_nginx image: nginx ports: - 80:2223 volumes: - ./nginx/default.conf:/etc/nginx/conf.d/default.conf restart: always depends_on: - tomcat1 - tomcat2 - tomcat3 -

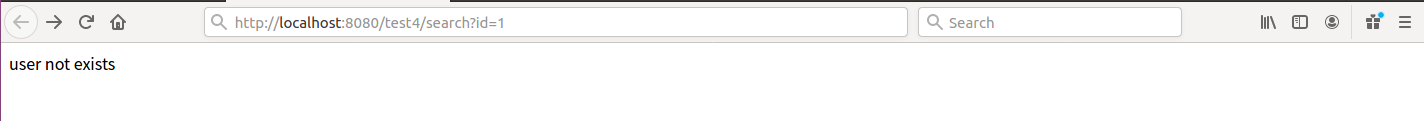

Controller

package com.example.mysql.Controller; import com.example.mysql.Bean.UserBean; import com.example.mysql.Mapper.UserMapper; import org.springframework.beans.factory.annotation.Autowired; import org.springframework.web.bind.annotation.RequestMapping; import org.springframework.web.bind.annotation.RestController; import javax.servlet.http.HttpServletRequest; @RestController @RequestMapping(path = "/test4") public class HelloController { @Autowired private UserMapper userMapper; @RequestMapping(value = "/hello") String hello() { return "hello"; } @RequestMapping(value = "/search") public String search(String id){ UserBean user = userMapper.selectByPrimaryKey(id); if (user == null){ return "user not exists"; } String str = user.getId() + " " + user.getName() + " " + user.getMajor(); return "search success fully!"+ " The User's information is:" + " " + str; } @RequestMapping(value = "/addr") String getPort(HttpServletRequest request) { return "本次服务器地址 " + request.getLocalAddr(); } } -

准备好这些文件后,构建

sudo docker-compose up --build

-

测试接口

-

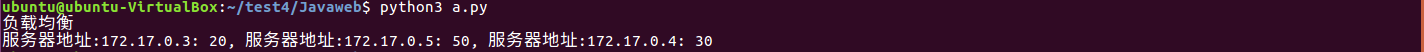

负载均衡

-

编写爬虫方便查看结果

import requests url = 'http://localhost/test/addr' num = {} for i in range(0,100): res = requests.get(url) if res.text in num: num[res.text] += 1 else: num[res.text] = 0 -

其中default配置与上面相同,为 2:3:5

-

3、使用Docker搭建大数据集群环境

-

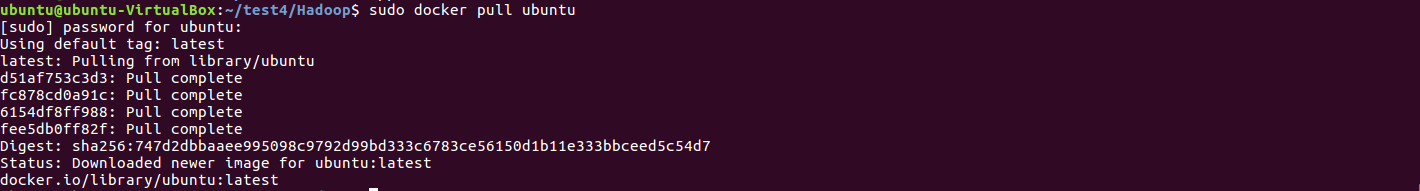

首先准备好需要的ubuntu镜像

-

为了提高下载速度,换源

-

Dockerfile

FROM ubuntu COPY ./sources.list /etc/apt/sources.list -

sources.list

# 默认注释了源码镜像以提高 apt update 速度,如有需要可自行取消注释 deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

-

-

运行容器

sudo docker run -it -v /home/hadoop/build:/root/build --name ubuntu ubuntu -

进入容器后,安装一些工具,以便后续进行

apt-get update apt-get install vim apt-get install ssh -

开启ssh服务,并让他自启

/etc/init.d/ssh start //此为开启 vim ~/.bashrc //打开这个文件,添加上面语句即可自动启动 -

配置ssh

cd ~/.ssh //如果没有这个文件夹,执行一下ssh localhost,再执行这个 ssh-keygen -t rsa //一直按回车即可 cat id_rsa.pub >> authorized_keys -

安装JDK

apt install openjdk-8-jdk vim ~/.bashrc //在文件末尾添加以下两行,配置Java环境变量: export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/ export PATH=$PATH:$JAVA_HOME/bin -

使配置生效,并查看是否安装成功

source ~/.bashrc java -version #查看是否安装成功

-

保存配置好的容器映射为镜像

//可以打开一个新的终端 sudo docker ps //查看容器id sudo docker commit [容器id] 镜像名字 -

接下来安装hadoop,把下载好的包挂载到目录中,下载地址推荐

docker run -it -v /home/hadoop/build:/root/build --name ubuntu-jdk ubuntu/jdk -

解压hadoop并查看版本

//在打开的容器里运行 cd /root/build tar -zxvf hadoop-3.2.1.tar.gz -C /usr/local cd /usr/local/hadoop-3.2.1 ./bin/hadoop version

-

接下来开始配置集群

cd /usr/local/hadoop-3.2.1/etc/hadoop vim hadoop-env.sh #把下面一行代码加入文件 export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/ -

修改core-site.xml文件内容

vim core-site.xml- 可以使用%d来快速清除

- % 是匹配所有行

- d 是删除的意思

<?xml version="1.0" encoding="UTF-8" ?> <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?> <configuration> <property> <name>hadoop.tmp.dir</name> <value>file:/usr/local/hadoop/tmp</value> <description>Abase for other temporary directories.</description> </property> <property> <name>fs.defaultFS</name> <value>hdfs://master:9000</value> </property> </configuration> -

修改hdfs-site.xml文件内容

vim hdfs-site.xml<?xml version="1.0" encoding="UTF-8" ?> <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?> <configuration> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/hadoop/namenode_dir</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/hadoop/datanode_dir</value> </property> <property> <name>dfs.replication</name> <value>3</value> </property> </configuration> -

修改mapred-site.xml文件内容

vim mapred-site.xml<?xml version="1.0" ?> <?xml-stylesheet type="text/xsl" href="configuration.xsl" ?> <configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> <property> <name>yarn.app.mapreduce.am.env</name> <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value> </property> <property> <name>mapreduce.map.env</name> <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value> </property> <property> <name>mapreduce.reduce.env</name> <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.1</value> </property> </configuration> -

修改yarn-site.xml文件内容

vim yarn-site.xml<?xml version="1.0" ?> <configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.resourcemanager.hostname</name> <value>master</value> </property> </configuration> -

完成这些步骤之后,再映射得到镜像

sudo docker commit 容器id ubuntu/hadoop -

这里要修改脚本,因为 使用root配置时会出现报错

-

进入脚本文件存放目录:

cd /usr/local/hadoop-3.2.1/sbin -

为start-dfs.sh和stop-dfs.sh添加以下参数

HDFS_DATANODE_USER=root HADOOP_SECURE_DN_USER=hdfs HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root -

为start-yarn.sh和stop-yarn.sh添加以下参数

YARN_RESOURCEMANAGER_USER=root HADOOP_SECURE_DN_USER=yarn YARN_NODEMANAGER_USER=root

-

-

从三个终端分别为ubuntu/hadoop镜像构建容器

# 第一个终端 sudo docker run -it -h master --name master ubuntu/hadoop # 第二个终端 sudo docker run -it -h slave01 --name slave01 ubuntu/hadoop # 第三个终端 sudo docker run -it -h slave02 --name slave02 ubuntu/hadoop -

在三个终端里分别对host修改ip,

vim /etc/hosts # 根据具体内容修改 172.17.0.4 master 172.17.0.5 slave01 172.17.0.6 slave02

-

在master节点测试ssh

ssh slave01 ssh slave02

-

master上的worker文件内容修改为

vim /usr/local/hadoop-3.2.1/etc/hadoop/workers slave01 slave02 -

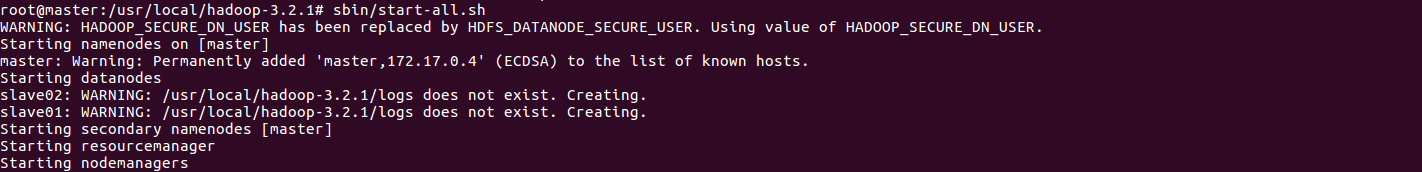

测试hadoop集群

#在master上操作 cd /usr/local/hadoop-3.2.1 bin/hdfs namenode -format #首次启动Hadoop需要格式化 sbin/start-all.sh #启动所有服务 jps #分别查看三个终端

-

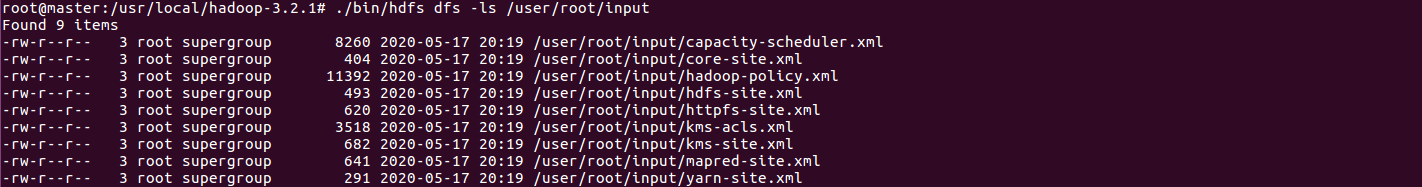

将hadoop内的一些文件放入hdfs上的input文件夹

./bin/hdfs dfs -mkdir -p /user/root/input ./bin/hdfs dfs -put ./etc/hadoop/*.xml /user/root/input ./bin/hdfs dfs -ls /user/root/input

-

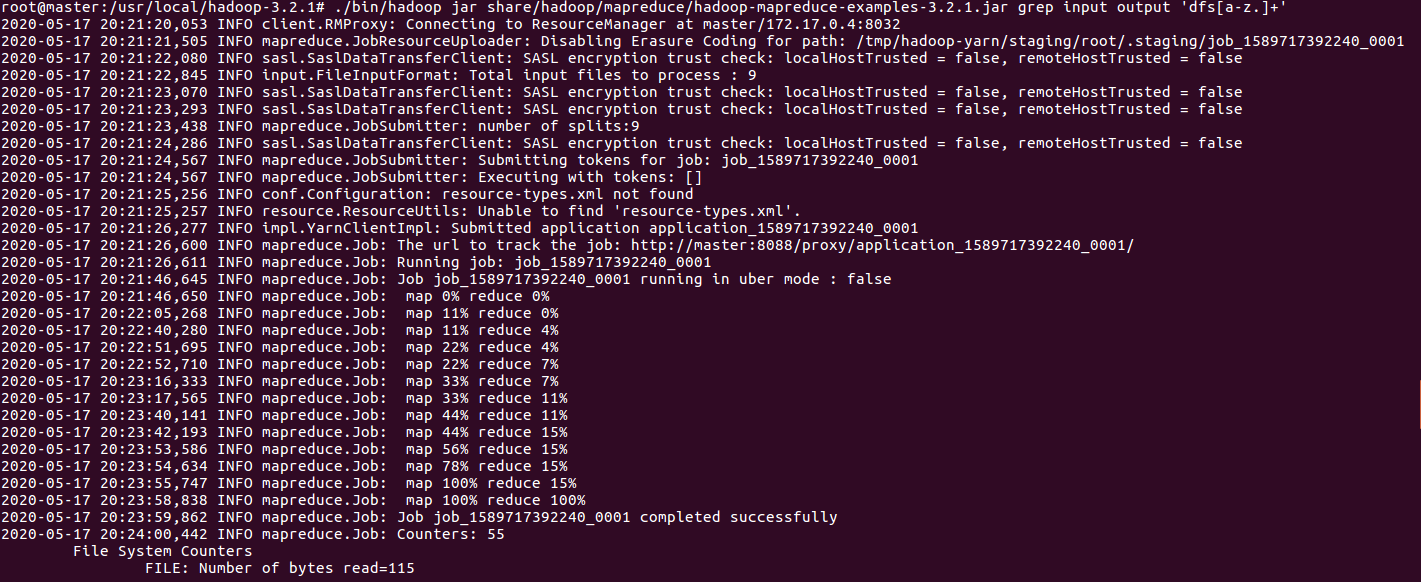

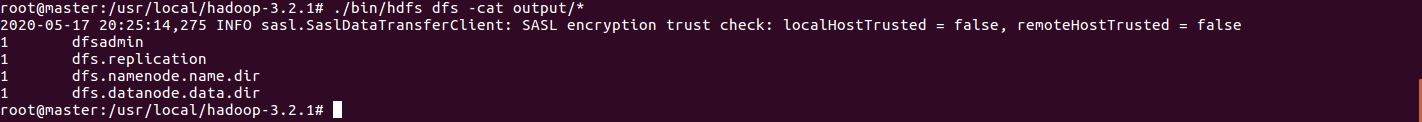

执行指定的应用程序,查看文件内符合正则表达式的字符串

./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar grep input output 'dfs[a-z.]+'

-

输出结果

./bin/hdfs dfs -cat output/*

4.问题解决和总结

问题的解决已分布在各个对应的内容中,就不在重复了。

这次实验内容很多,最难的还是第二个,对javaweb一无所知,在同学推荐下去大概看了下SpringBoot,而且手头的资料很少,就连对IDEA的使用都花了很多时间(网上找的资料,由于版本不一样导致项目难以进行,只能一步一步试下去)。第一个第三个实验相比第二个花得时间就少了很多,通过这次实验学了很多内容,包括反向代理原理、javaweb的简单部署、hadoop的基本了解,还有IDEA工具的使用。

什么时候我才能 变强 变得平凡 啊!,这次实验大概花了一天吧,24个小时。