Without further ado, let's get straight to it!

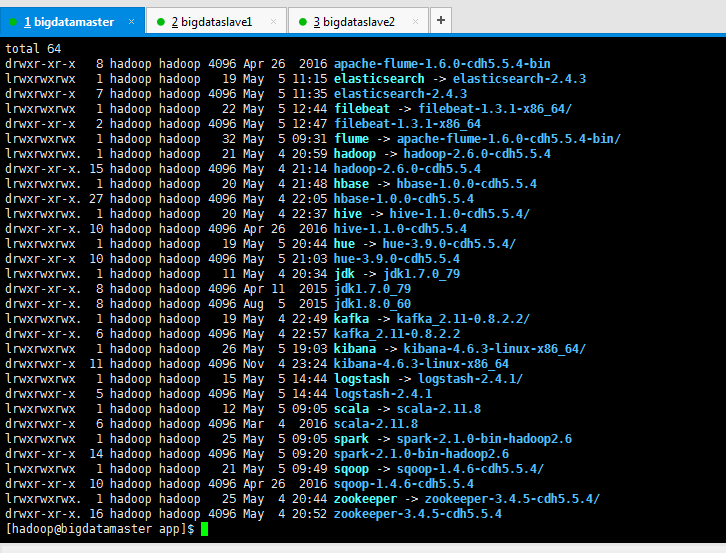

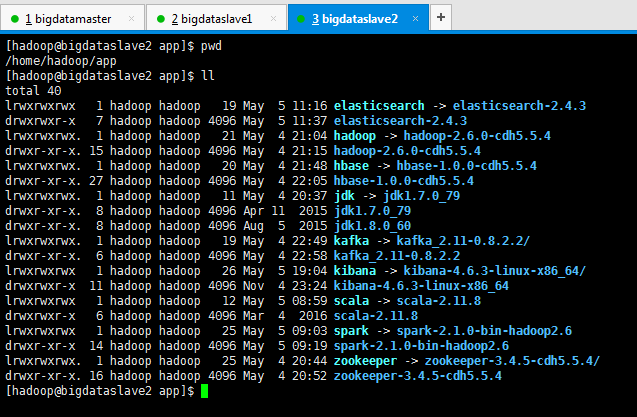

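My cluster consists of bigdatamaster (192.168.80.10), bigdataslave1 (192.168.80.11) and bigdataslave2 (192.168.80.12).

The installation directory is /home/hadoop/app.

The official recommendation is to install Hue on the master node, and I follow it: Hue goes on bigdatamaster.

Hue version: hue-3.9.0-cdh5.5.4

Hue must be compiled before it can be used, and compiling requires Internet access.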

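A word to readers: if your computers are powerful enough, do install Cloudera Manager. After all, it comes from the same family as this CDH build of Hue. I am a graduate student and my laptop has at most 8 GB of RAM, so I can only practice with a manual installation.

This post is written purely for fellow students who, like me, lack high-end hardware. That said, I have already set up both Cloudera Manager and Ambari on our lab cluster.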

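Ambari and Cloudera Manager, the two most popular cluster management tools in the big data field

Installing and deploying a big data cluster with Cloudera on CentOS 6.5 (illustrated, in five major steps) (highly recommended by the author)

Installing and deploying a big data cluster with Ambari on CentOS 6.5 (illustrated, in five major steps) (highly recommended by the author)

Installing and deploying a big data cluster with Ambari on Ubuntu 14.04 (illustrated, in five major steps) (highly recommended by the author)

Installing and deploying a big data cluster with Cloudera on Ubuntu 14.04 (illustrated, in five major steps) (highly recommended by the author) (online or offline)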

This post uses CentOS as its example. The official installation manual is here:

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.5.4/manual.html#_install_hue

On other systems such as Ubuntu, only the dependency packages differ somewhat. Please check the official documentation for those.

1. Download location of hue-3.9.0-cdh5.5.4.tar.gz

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.5.4.tar.gz

2. Install the required dependency packages before installing Hue

ant

asciidoc

cyrus-sasl-devel

cyrus-sasl-gssapi

gcc

gcc-c++

krb5-devel

libtidy (for unit tests only)

libxml2-devel

libxslt-devel

make

mvn (from ``maven`` package or maven3 tarball)

MySQL (optional; I already installed MySQL on bigdatamaster when I installed Hive)

mysql-devel (optional, for the same reason)

openldap-devel

python-devel

sqlite-devel

openssl-devel (for version 7+)

gmp-devel


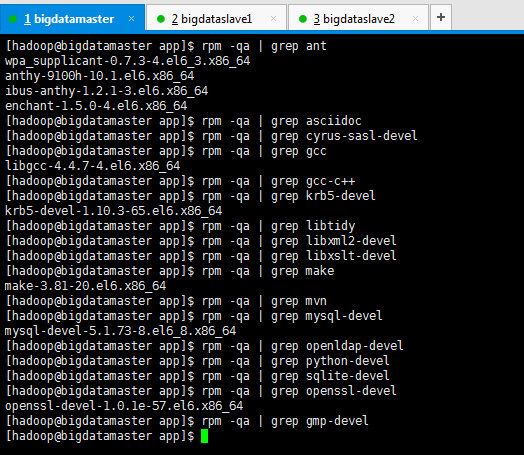

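Check whether any of these packages are already on the system:

rpm -qa | grep package_name

Do not type package_name literally. Substitute each actual package name. Keep in mind that grep matches substrings, so rpm -qa | grep ant also lists every package whose name merely contains "ant". By contrast, rpm -q ant checks for the exact package name.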

[hadoop@bigdatamaster app]$ rpm -qa | grep ant
wpa_supplicant-0.7.3-4.el6_3.x86_64
anthy-9100h-10.1.el6.x86_64
ibus-anthy-1.2.1-3.el6.x86_64
enchant-1.5.0-4.el6.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep asciidoc
[hadoop@bigdatamaster app]$ rpm -qa | grep cyrus-sasl-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep gcc
libgcc-4.4.7-4.el6.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep gcc-c++
[hadoop@bigdatamaster app]$ rpm -qa | grep krb5-devel
krb5-devel-1.10.3-65.el6.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep libtidy
[hadoop@bigdatamaster app]$ rpm -qa | grep libxml2-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep libxslt-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep make
make-3.81-20.el6.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep mvn
[hadoop@bigdatamaster app]$ rpm -qa | grep mysql-devel
mysql-devel-5.1.73-8.el6_8.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep openldap-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep python-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep sqlite-devel
[hadoop@bigdatamaster app]$ rpm -qa | grep openssl-devel
openssl-devel-1.0.1e-57.el6.x86_64
[hadoop@bigdatamaster app]$ rpm -qa | grep gmp-devel
[hadoop@bigdatamaster app]$

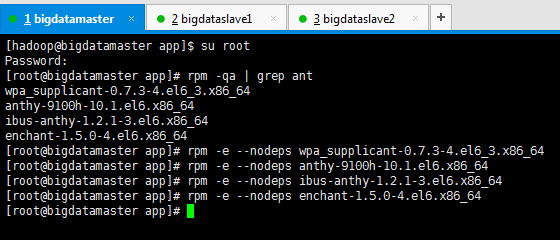
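Because grep matches substrings, checking the output above by eye is error-prone. The following is a minimal Python 3 sketch that compares whole package names from rpm -qa against the dependency list. It is not part of the original procedure; REQUIRED and the function names are my own. It assumes Python 3 is available wherever you paste the output, since CentOS 6.5 itself ships Python 2.6.

```python
# Exact package names from the dependency list above. mysql/mysql-devel are left out,
# as in this post, and so is Maven (the repository added below calls it apache-maven).
REQUIRED = [
    "ant", "asciidoc", "cyrus-sasl-devel", "cyrus-sasl-gssapi", "gcc", "gcc-c++",
    "krb5-devel", "libtidy", "libxml2-devel", "libxslt-devel", "make",
    "openldap-devel", "python-devel", "sqlite-devel", "openssl-devel", "gmp-devel",
]


def package_name(nvra):
    """Drop '-version-release.arch' from one `rpm -qa` line; hyphens inside the name survive."""
    return nvra.strip().rsplit("-", 2)[0]


def missing_packages(rpm_qa_output, required=REQUIRED):
    """Return the required names that are not installed, comparing whole names, not substrings."""
    installed = {package_name(line) for line in rpm_qa_output.splitlines() if line.strip()}
    return [name for name in required if name not in installed]
```

On the master you could pass it live output, for example missing_packages(subprocess.check_output(["rpm", "-qa"]).decode()). For the transcript above it reports ant as missing, even though grep ant printed four lines.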

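At this point, some sources say you should first uninstall these preinstalled packages, otherwise they may cause version conflicts later in the installation.

When installing with yum directly, you may hit dependency version conflicts. In that case, uninstall the installed version and then install again.

Uninstall a preinstalled package. Use this with care: --nodeps skips rpm's dependency check, so it will also remove libraries that other programs still need.

rpm -e --nodeps ***

Querying and removing the preinstalled "ant". Note that none of the four matches below is actually Apache Ant. wpa_supplicant, anthy, ibus-anthy and enchant only contain the letters "ant" in their names, so removing them was not necessary.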

[hadoop@bigdatamaster app]$ su root
Password:
[root@bigdatamaster app]# rpm -qa | grep ant
wpa_supplicant-0.7.3-4.el6_3.x86_64
anthy-9100h-10.1.el6.x86_64
ibus-anthy-1.2.1-3.el6.x86_64
enchant-1.5.0-4.el6.x86_64
[root@bigdatamaster app]# rpm -e --nodeps wpa_supplicant-0.7.3-4.el6_3.x86_64
[root@bigdatamaster app]# rpm -e --nodeps anthy-9100h-10.1.el6.x86_64
[root@bigdatamaster app]# rpm -e --nodeps ibus-anthy-1.2.1-3.el6.x86_64
[root@bigdatamaster app]# rpm -e --nodeps enchant-1.5.0-4.el6.x86_64
[root@bigdatamaster app]#

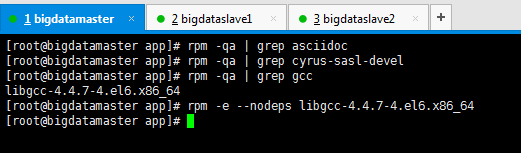

Querying and removing the preinstalled asciidoc, cyrus-sasl-devel and gcc:

[root@bigdatamaster app]# rpm -qa | grep asciidoc
[root@bigdatamaster app]# rpm -qa | grep cyrus-sasl-devel
[root@bigdatamaster app]# rpm -qa | grep gcc
libgcc-4.4.7-4.el6.x86_64
[root@bigdatamaster app]# rpm -e --nodeps libgcc-4.4.7-4.el6.x86_64   (I regretted this as soon as I ran it)
[root@bigdatamaster app]#

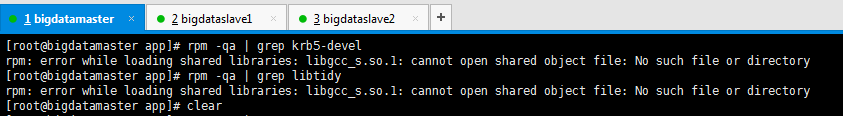

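I won't go through the rest one by one. If you remove packages like this, you may run into the following problem. libgcc provides the shared library libgcc_s.so.1, which rpm itself needs, so once libgcc is removed rpm can no longer start:

[root@bigdatamaster app]# rpm -qa | grep krb5-devel
rpm: error while loading shared libraries: libgcc_s.so.1: cannot open shared object file: No such file or directory
[root@bigdatamaster app]# rpm -qa | grep libtidy
rpm: error while loading shared libraries: libgcc_s.so.1: cannot open shared object file: No such file or directory

Carelessly removing RPMs causes "error while loading shared libraries: libgcc_s.so.1" the next time you run rpm.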

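Solution

First, search for the libgcc_s.so.1 shared library. Sure enough, it was found. Keep in mind that locate reads the database built by updatedb, so it can still list a file that has just been deleted.

[root@bigdatamaster app]# locate libgcc_s.so.1

It is at /lib64/libgcc_s.so.1.

Since mine is still under /lib64, the following post applies:

Causes and fixes for the "error while loading shared libraries: xxx.so.x" error

This is case 1) in that post: if the shared library is installed under /lib or /usr/lib, simply run ldconfig.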

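Honestly, I don't find these resources very helpful.

The simplest fix is this: since we run a cluster anyway, copy libgcc_s-4.4.6-20110824.so.1 from another machine into /lib64, and rpm works normally again. If the libgcc_s.so.1 symlink is gone as well, recreate it so that it points to the copied file.

Fix for "rpm: error while loading shared libraries: libgcc_s.so.1: cannot open shared object file: No such file or directory" (see my own summary post)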
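Because locate may report a stale path, it helps to look at the file system directly. The Python 3 sketch below classifies a path such as /lib64/libgcc_s.so.1 into one of three states: it works, it is a symlink whose target is gone, or it is missing entirely. The function name is hypothetical and not from the original posts.

```python
import os


def library_status(path):
    """Classify a shared-library path as 'ok', 'dangling-symlink' or 'missing'.

    os.path.exists() follows symlinks, while os.path.lexists() only checks the
    link itself, so a link whose target was deleted is reported separately.
    """
    if os.path.exists(path):
        return "ok"
    if os.path.lexists(path):
        return "dangling-symlink"
    return "missing"
```

For example, library_status("/lib64/libgcc_s.so.1") should return "ok" again once the library and its symlink have been restored.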

Add the Maven repository:

wget http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo -O /etc/yum.repos.d/epel-apache-maven.repo

[root@bigdatamaster app]# wget http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo -O /etc/yum.repos.d/epel-apache-maven.repo
--2017-05-05 19:52:01--  http://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo
Resolving repos.fedorapeople.org... 152.19.134.199, 2610:28:3090:3001:5054:ff:fea7:9474
Connecting to repos.fedorapeople.org|152.19.134.199|:80... connected.
HTTP request sent, awaiting response... 302 Found
Location: https://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo [following]
--2017-05-05 19:52:02--  https://repos.fedorapeople.org/repos/dchen/apache-maven/epel-apache-maven.repo
Connecting to repos.fedorapeople.org|152.19.134.199|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 445
Saving to: “/etc/yum.repos.d/epel-apache-maven.repo”
100%[==========>] 445  --.-K/s   in 0s
2017-05-05 19:52:04 (11.8 MB/s) - “/etc/yum.repos.d/epel-apache-maven.repo” saved [445/445]
[root@bigdatamaster app]#

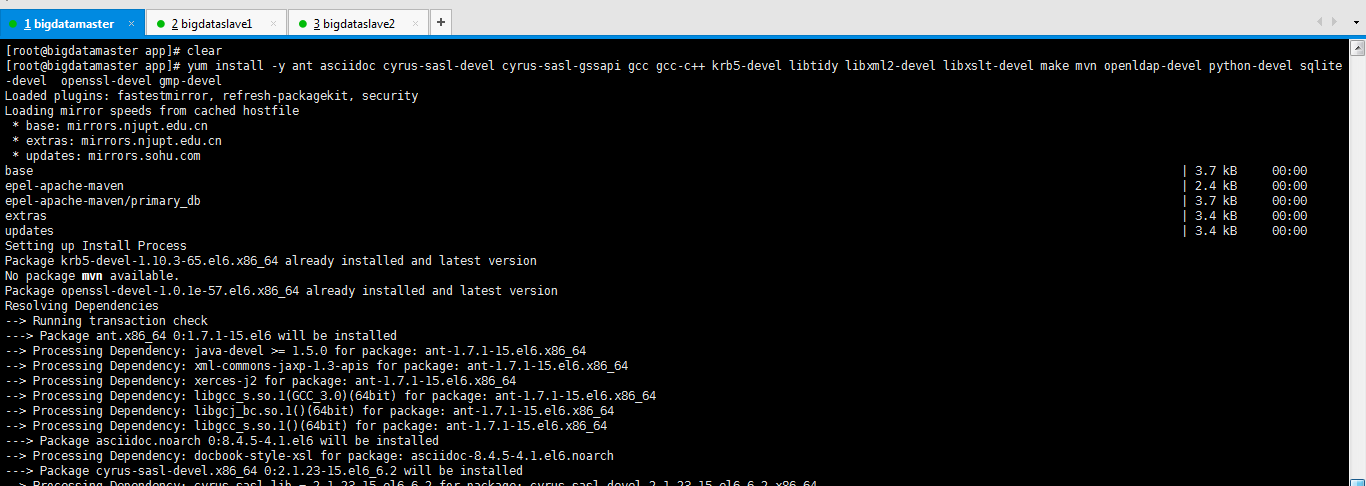

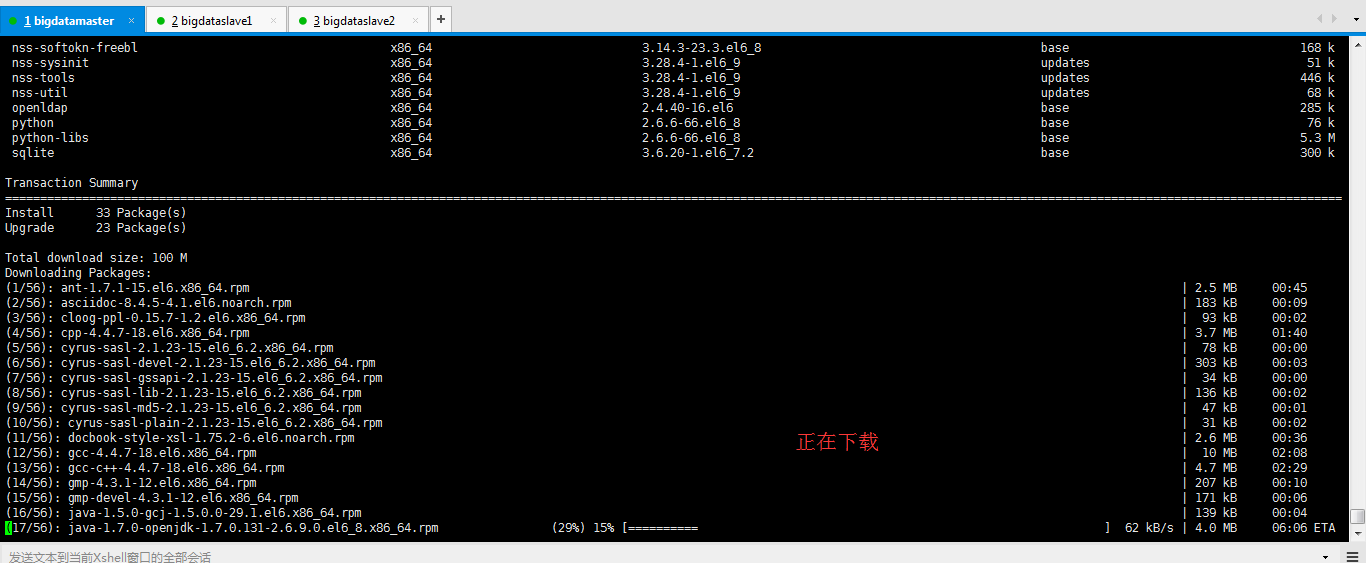

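Install the dependencies. Note that mysql and mysql-devel are not installed here, because I already installed them for Hive. Don't say I didn't warn you: installing them again here can pull in incompatible versions and cause problems. The Maven package in the repository added above is named apache-maven.

yum install -y ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel libtidy libxml2-devel libxslt-devel make apache-maven openldap-devel python-devel sqlite-devel openssl-devel gmp-devel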

或者

下载需要的系统包

yum install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi gcc gcc-c++ krb5-devel ibtidy libxml2-devel libxslt-devel openldap-devel python-devel

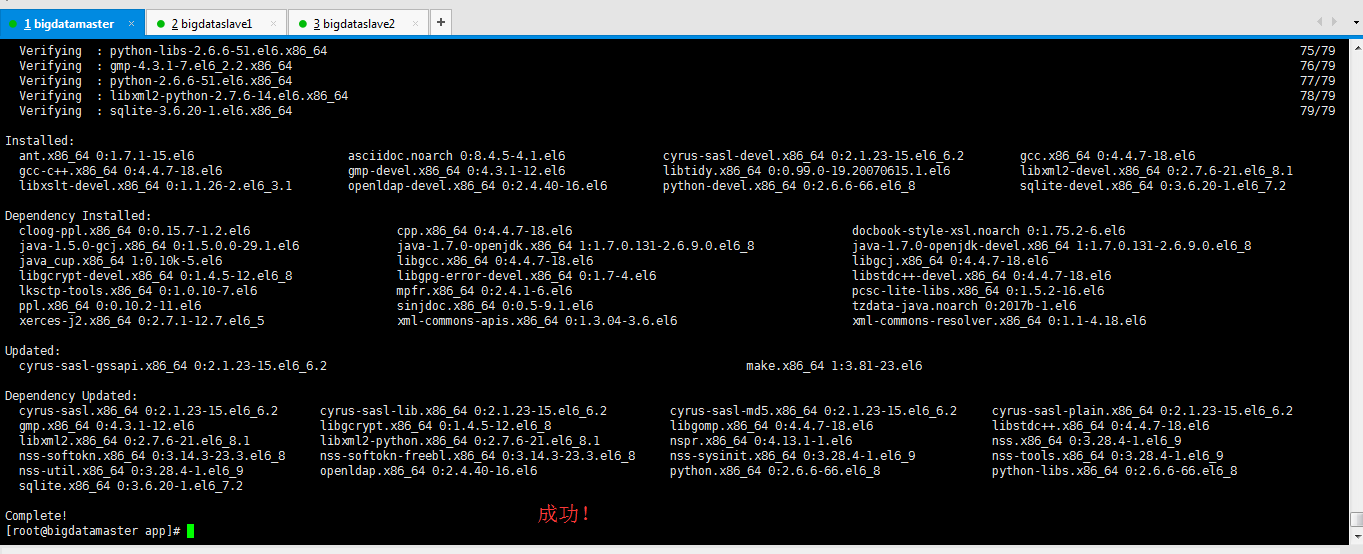

sqlite-devel openssl-devel mysql-devel gmp-devel

Verifying : python-libs-2.6.6-51.el6.x86_64 75/79
Verifying : gmp-4.3.1-7.el6_2.2.x86_64 76/79
Verifying : python-2.6.6-51.el6.x86_64 77/79
Verifying : libxml2-python-2.7.6-14.el6.x86_64 78/79
Verifying : sqlite-3.6.20-1.el6.x86_64 79/79

Installed:
  ant.x86_64 0:1.7.1-15.el6
  asciidoc.noarch 0:8.4.5-4.1.el6
  cyrus-sasl-devel.x86_64 0:2.1.23-15.el6_6.2
  gcc.x86_64 0:4.4.7-18.el6
  gcc-c++.x86_64 0:4.4.7-18.el6
  gmp-devel.x86_64 0:4.3.1-12.el6
  libtidy.x86_64 0:0.99.0-19.20070615.1.el6
  libxml2-devel.x86_64 0:2.7.6-21.el6_8.1
  libxslt-devel.x86_64 0:1.1.26-2.el6_3.1
  openldap-devel.x86_64 0:2.4.40-16.el6
  python-devel.x86_64 0:2.6.6-66.el6_8
  sqlite-devel.x86_64 0:3.6.20-1.el6_7.2

Dependency Installed:
  cloog-ppl.x86_64 0:0.15.7-1.2.el6
  cpp.x86_64 0:4.4.7-18.el6
  docbook-style-xsl.noarch 0:1.75.2-6.el6
  java-1.5.0-gcj.x86_64 0:1.5.0.0-29.1.el6
  java-1.7.0-openjdk.x86_64 1:1.7.0.131-2.6.9.0.el6_8
  java-1.7.0-openjdk-devel.x86_64 1:1.7.0.131-2.6.9.0.el6_8
  java_cup.x86_64 1:0.10k-5.el6
  libgcc.x86_64 0:4.4.7-18.el6
  libgcj.x86_64 0:4.4.7-18.el6
  libgcrypt-devel.x86_64 0:1.4.5-12.el6_8
  libgpg-error-devel.x86_64 0:1.7-4.el6
  libstdc++-devel.x86_64 0:4.4.7-18.el6
  lksctp-tools.x86_64 0:1.0.10-7.el6
  mpfr.x86_64 0:2.4.1-6.el6
  pcsc-lite-libs.x86_64 0:1.5.2-16.el6
  ppl.x86_64 0:0.10.2-11.el6
  sinjdoc.x86_64 0:0.5-9.1.el6
  tzdata-java.noarch 0:2017b-1.el6
  xerces-j2.x86_64 0:2.7.1-12.7.el6_5
  xml-commons-apis.x86_64 0:1.3.04-3.6.el6
  xml-commons-resolver.x86_64 0:1.1-4.18.el6

Updated:
  cyrus-sasl-gssapi.x86_64 0:2.1.23-15.el6_6.2
  make.x86_64 1:3.81-23.el6

Dependency Updated:
  cyrus-sasl.x86_64 0:2.1.23-15.el6_6.2
  cyrus-sasl-lib.x86_64 0:2.1.23-15.el6_6.2
  cyrus-sasl-md5.x86_64 0:2.1.23-15.el6_6.2
  cyrus-sasl-plain.x86_64 0:2.1.23-15.el6_6.2
  gmp.x86_64 0:4.3.1-12.el6
  libgcrypt.x86_64 0:1.4.5-12.el6_8
  libgomp.x86_64 0:4.4.7-18.el6
  libstdc++.x86_64 0:4.4.7-18.el6
  libxml2.x86_64 0:2.7.6-21.el6_8.1
  libxml2-python.x86_64 0:2.7.6-21.el6_8.1
  nspr.x86_64 0:4.13.1-1.el6
  nss.x86_64 0:3.28.4-1.el6_9
  nss-softokn.x86_64 0:3.14.3-23.3.el6_8
  nss-softokn-freebl.x86_64 0:3.14.3-23.3.el6_8
  nss-sysinit.x86_64 0:3.28.4-1.el6_9
  nss-tools.x86_64 0:3.28.4-1.el6_9
  nss-util.x86_64 0:3.28.4-1.el6_9
  openldap.x86_64 0:2.4.40-16.el6
  python.x86_64 0:2.6.6-66.el6_8
  python-libs.x86_64 0:2.6.6-66.el6_8
  sqlite.x86_64 0:3.6.20-1.el6_7.2

Complete!
[root@bigdatamaster app]#

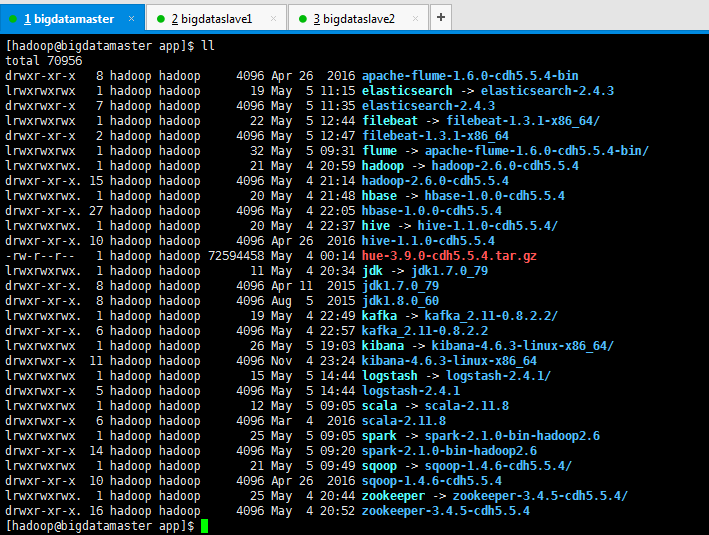

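Upload hue-3.9.0-cdh5.5.4.tar.gz. I downloaded it beforehand and then uploaded it.

Alternatively, you can download the source (Hue 3.9) and compile it yourself, although compilation takes quite a while. Note that git clone URL branch-3.9 only names the target directory branch-3.9. To actually check out that branch, assuming the repository still has a branch called branch-3.9, pass -b:

git clone -b branch-3.9 https://github.com/cloudera/hue.git branch-3.9
cd branch-3.9
make apps

After compiling, you can optionally install it:

make install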

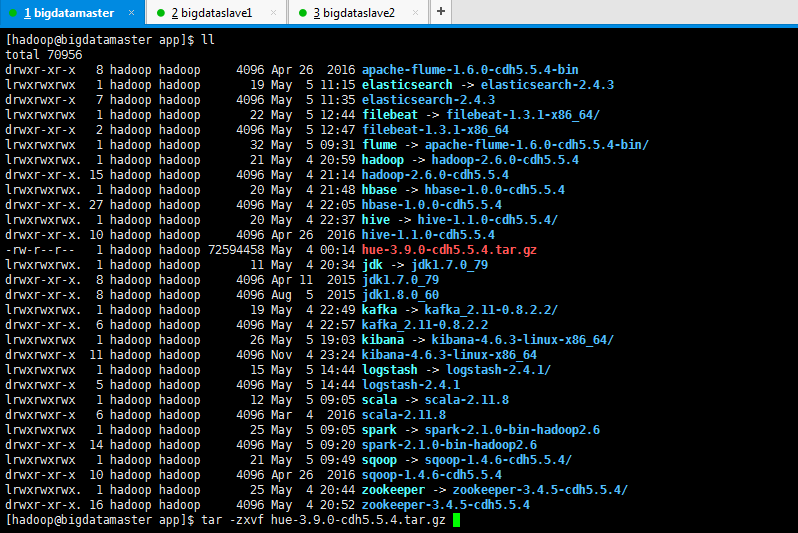

I chose to upload it:

[hadoop@bigdatamaster app]$ pwd
/home/hadoop/app
[hadoop@bigdatamaster app]$ ll
total 60
drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin
lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3
drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3
lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/
drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64
lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/
lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4
drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4
lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4
drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4
lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/
drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4
lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79
drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60
lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/
drwxr-xr-x. 6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2
lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/
drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 23:24 kibana-4.6.3-linux-x86_64
lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/
drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1
lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8
drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8
lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6
drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6
lrwxrwxrwx 1 hadoop hadoop 21 May 5 09:49 sqoop -> sqoop-1.4.6-cdh5.5.4/
drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4
lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/
drwxr-xr-x. 16 hadoop hadoop 4096 May 4 20:52 zookeeper-3.4.5-cdh5.5.4
[hadoop@bigdatamaster app]$ rz
[hadoop@bigdatamaster app]$ ll
total 70956
...
-rw-r--r-- 1 hadoop hadoop 72594458 May 4 00:14 hue-3.9.0-cdh5.5.4.tar.gz
...
[hadoop@bigdatamaster app]$

解压

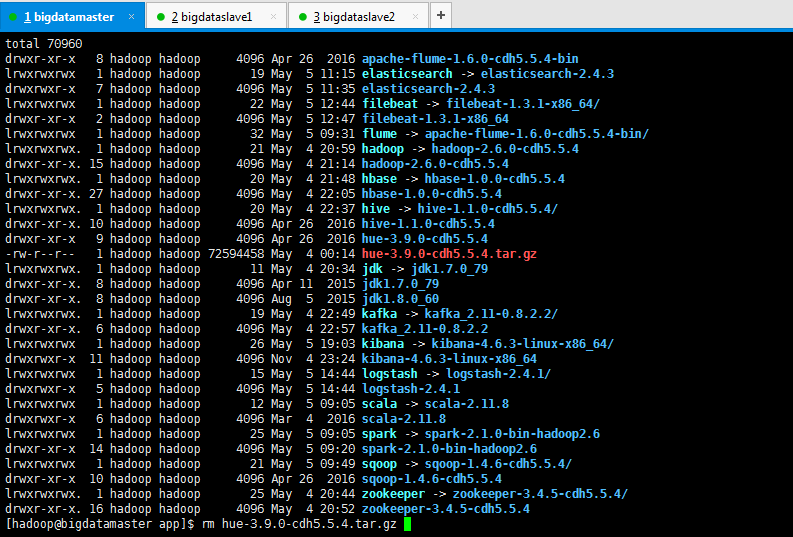

[hadoop@bigdatamaster app]$ ll total 70956 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 -rw-r--r-- 1 hadoop hadoop 72594458 May 4 00:14 hue-3.9.0-cdh5.5.4.tar.gz lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 23:24 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 21 May 5 09:49 sqoop -> sqoop-1.4.6-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 lrwxrwxrwx. 
1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 16 hadoop hadoop 4096 May 4 20:52 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$ tar -zxvf hue-3.9.0-cdh5.5.4.tar.gz

total 70960 drwxr-xr-x 8 hadoop hadoop 4096 Apr 26 2016 apache-flume-1.6.0-cdh5.5.4-bin lrwxrwxrwx 1 hadoop hadoop 19 May 5 11:15 elasticsearch -> elasticsearch-2.4.3 drwxrwxr-x 7 hadoop hadoop 4096 May 5 11:35 elasticsearch-2.4.3 lrwxrwxrwx 1 hadoop hadoop 22 May 5 12:44 filebeat -> filebeat-1.3.1-x86_64/ drwxr-xr-x 2 hadoop hadoop 4096 May 5 12:47 filebeat-1.3.1-x86_64 lrwxrwxrwx 1 hadoop hadoop 32 May 5 09:31 flume -> apache-flume-1.6.0-cdh5.5.4-bin/ lrwxrwxrwx. 1 hadoop hadoop 21 May 4 20:59 hadoop -> hadoop-2.6.0-cdh5.5.4 drwxr-xr-x. 15 hadoop hadoop 4096 May 4 21:14 hadoop-2.6.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 21:48 hbase -> hbase-1.0.0-cdh5.5.4 drwxr-xr-x. 27 hadoop hadoop 4096 May 4 22:05 hbase-1.0.0-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 20 May 4 22:37 hive -> hive-1.1.0-cdh5.5.4/ drwxr-xr-x. 10 hadoop hadoop 4096 Apr 26 2016 hive-1.1.0-cdh5.5.4 drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 hue-3.9.0-cdh5.5.4 -rw-r--r-- 1 hadoop hadoop 72594458 May 4 00:14 hue-3.9.0-cdh5.5.4.tar.gz lrwxrwxrwx. 1 hadoop hadoop 11 May 4 20:34 jdk -> jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Apr 11 2015 jdk1.7.0_79 drwxr-xr-x. 8 hadoop hadoop 4096 Aug 5 2015 jdk1.8.0_60 lrwxrwxrwx. 1 hadoop hadoop 19 May 4 22:49 kafka -> kafka_2.11-0.8.2.2/ drwxr-xr-x. 
6 hadoop hadoop 4096 May 4 22:57 kafka_2.11-0.8.2.2 lrwxrwxrwx 1 hadoop hadoop 26 May 5 19:03 kibana -> kibana-4.6.3-linux-x86_64/ drwxrwxr-x 11 hadoop hadoop 4096 Nov 4 23:24 kibana-4.6.3-linux-x86_64 lrwxrwxrwx 1 hadoop hadoop 15 May 5 14:44 logstash -> logstash-2.4.1/ drwxrwxr-x 5 hadoop hadoop 4096 May 5 14:44 logstash-2.4.1 lrwxrwxrwx 1 hadoop hadoop 12 May 5 09:05 scala -> scala-2.11.8 drwxrwxr-x 6 hadoop hadoop 4096 Mar 4 2016 scala-2.11.8 lrwxrwxrwx 1 hadoop hadoop 25 May 5 09:05 spark -> spark-2.1.0-bin-hadoop2.6 drwxr-xr-x 14 hadoop hadoop 4096 May 5 09:20 spark-2.1.0-bin-hadoop2.6 lrwxrwxrwx 1 hadoop hadoop 21 May 5 09:49 sqoop -> sqoop-1.4.6-cdh5.5.4/ drwxr-xr-x 10 hadoop hadoop 4096 Apr 26 2016 sqoop-1.4.6-cdh5.5.4 lrwxrwxrwx. 1 hadoop hadoop 25 May 4 20:44 zookeeper -> zookeeper-3.4.5-cdh5.5.4/ drwxr-xr-x. 16 hadoop hadoop 4096 May 4 20:52 zookeeper-3.4.5-cdh5.5.4 [hadoop@bigdatamaster app]$ rm hue-3.9.0-cdh5.5.4.tar.gz

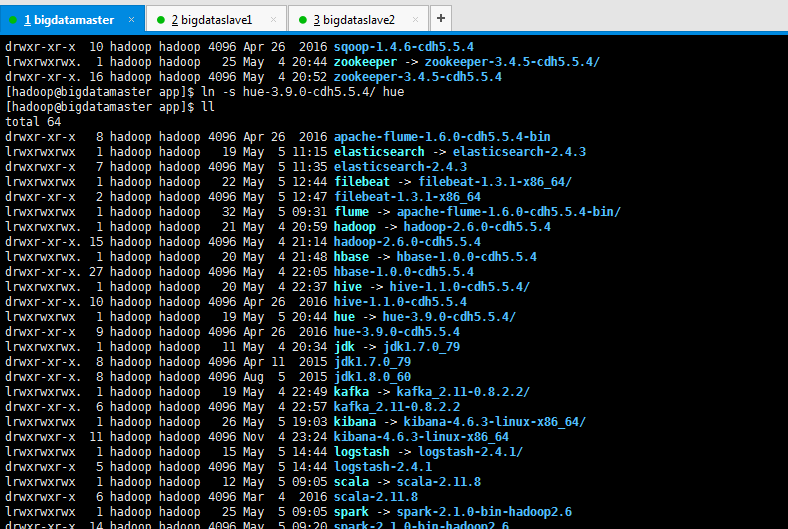

Create a symbolic link:

[hadoop@bigdatamaster app]$ ln -s hue-3.9.0-cdh5.5.4/ hue
[hadoop@bigdatamaster app]$ ll
total 64
...
lrwxrwxrwx 1 hadoop hadoop 19 May 5 20:44 hue -> hue-3.9.0-cdh5.5.4/
drwxr-xr-x 9 hadoop hadoop 4096 Apr 26 2016 hue-3.9.0-cdh5.5.4
...
[hadoop@bigdatamaster app]$

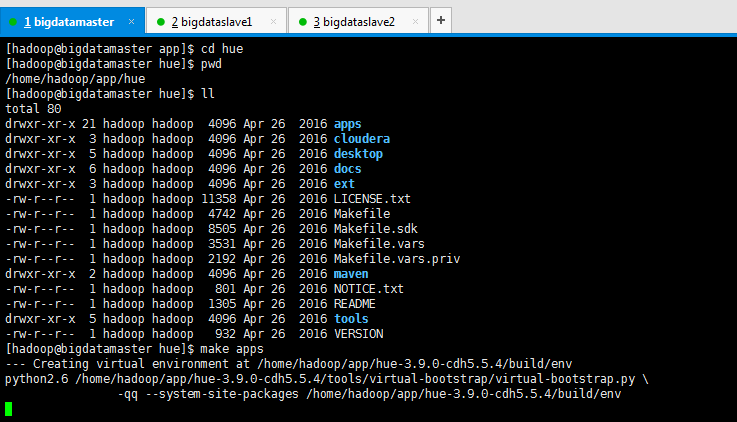

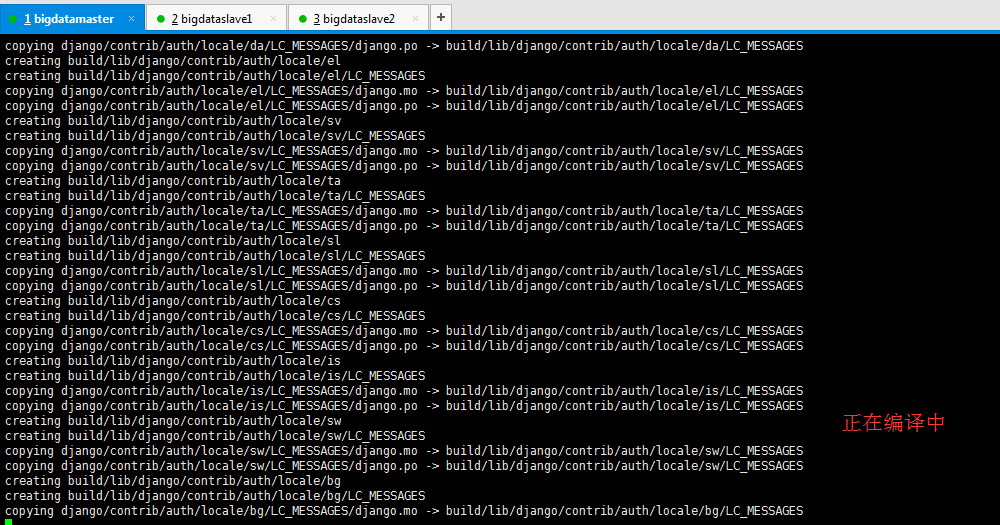

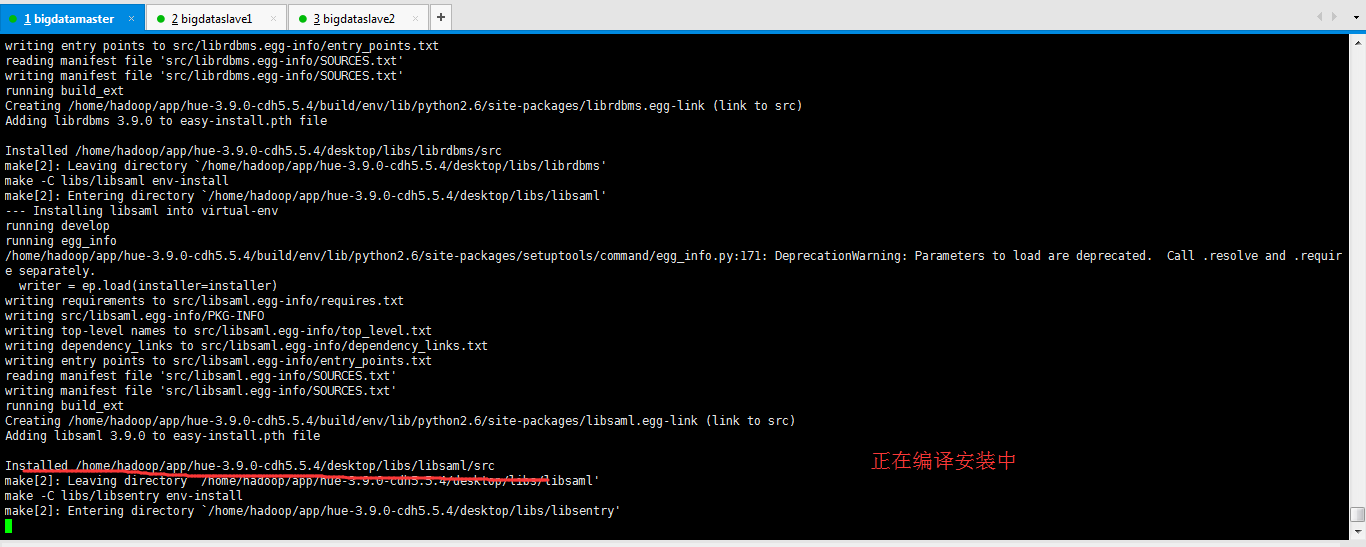

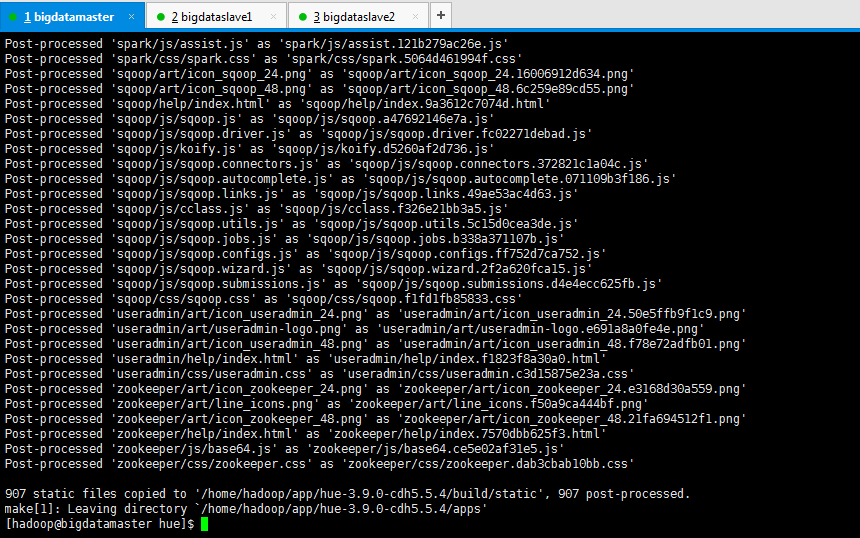

Go into the Hue installation directory (/home/hadoop/app/hue) and compile:

make apps

This takes a few minutes, depending on your network speed.
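Once make apps finishes, a quick sanity check is whether the launchers used later in this post were produced. The following is a Python 3 sketch. The list of expected paths is my own assumption: it is based on the build/env/bin/supervisor command used below and on build/env/bin/hue, the management command that a Hue build normally creates.

```python
import os

# Executables that later steps rely on, relative to the Hue directory (assumed list).
EXPECTED = ["build/env/bin/supervisor", "build/env/bin/hue"]


def missing_build_outputs(hue_home, expected=EXPECTED):
    """Return the expected executables under hue_home that are absent or not executable."""
    missing = []
    for rel in expected:
        path = os.path.join(hue_home, rel)
        if not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(rel)
    return missing
```

After a successful build, missing_build_outputs("/home/hadoop/app/hue") should return an empty list.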

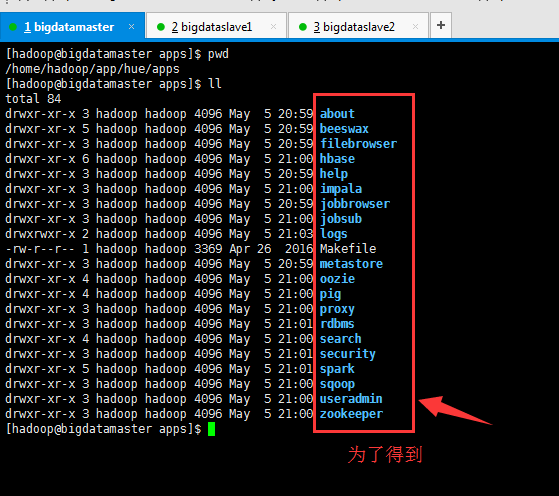

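Why is make apps needed? It builds Hue's desktop and apps into a local Python environment under build/env. Among other things, that environment provides build/env/bin/supervisor, which is used to start Hue later on.

First, make sure you are clear about the layout of my three-node cluster. If you aren't, you'll regret it later!

(I made the configuration table below for your convenience. The values depend on your own machines, so change them accordingly.)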

| Hue配置段 | Hue配置项 | Hue配置值 | 说明 |

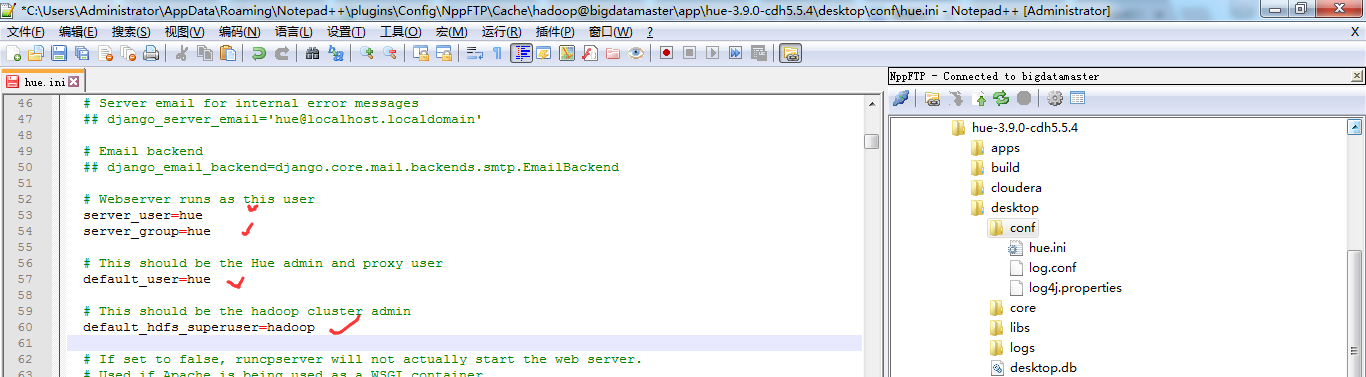

| desktop | default_hdfs_superuser | hadoop | HDFS管理用户 |

| desktop | http_host | 192.168.80.10 | Hue Web Server所在主机/IP |

| desktop | http_port | 8000 | Hue Web Server服务端口 |

| desktop | server_user | hue | 运行Hue Web Server的进程用户 |

| desktop | server_group | hue | 运行Hue Web Server的进程用户组 |

| desktop | default_user | hue | Hue管理员 |

| desktop | default_hdfs_superuser | hadoop |

更改为你的hadoop用户,网上有些资料写为什么将 修改 文件desktop/libs/hadoop/src/hadoop/fs/webhdfs.py 中的 DEFAULT_HDFS_SUPERUSER = ‘hdfs’ 更改为你的hadoop用户。 我的这里是hadoop 修改默认的hdfs访问用户 修改hue.ini中的配置 default_hdfs_superuser=hdfs 改为 default_hdfs_superuser=root (注意,这里别人的用户是root) |

| hadoop/hdfs_clusters | fs_defaultfs | hdfs://bigdatamaster:9000 | 对应core-site.xml配置项fs.defaultFS |

| hadoop/hdfs_clusters | hadoop_conf_dir | /home/hadoop/app/hadoop/etc/hadoop/conf | Hadoop配置文件目录 |

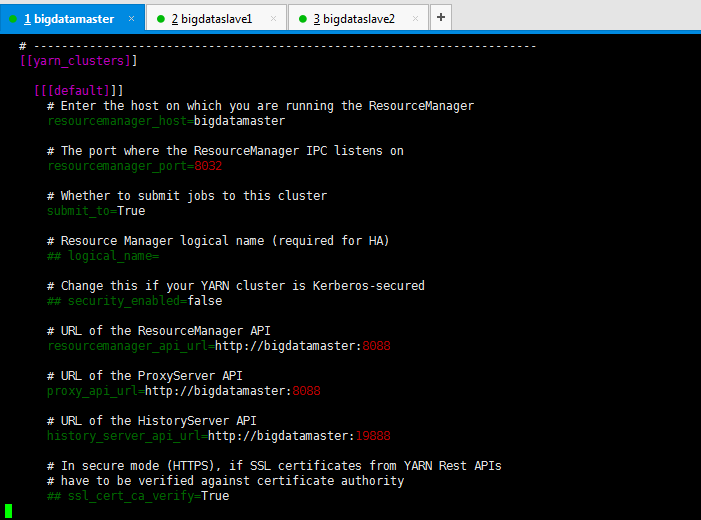

| hadoop/yarn_clusters | resourcemanager_host | bigdatamaster | 对应yarn-site.xml配置项yarn.resourcemanager.hostname |

| hadoop/yarn_clusters | resourcemanager_port | 8032 | ResourceManager服务端口号 |

| hadoop/yarn_clusters | resourcemanager_api_url | http://bigdatamaster:23188 | 对应于yarn-site.xml配置项yarn.resourcemanager.webapp.address(我这里是为了避免跟spark那边的端口冲突,当然你也可以改为其他的端口) |

| hadoop/yarn_clusters | proxy_api_url | http://bigdatamaster:8888 | 对应yarn-site.xml配置项yarn.web-proxy.address |

| hadoop/yarn_clusters | history_server_api_url | http://bigdatamaster:19888 |

对应mapred-site.xml配置项mapreduce.jobhistory.webapp.address |

zookeeper

|

host_ports

|

bigdatamaster:2181,bigdataslave1:2181,bigdataslave2:2181 |

zookeeper集群管理 |

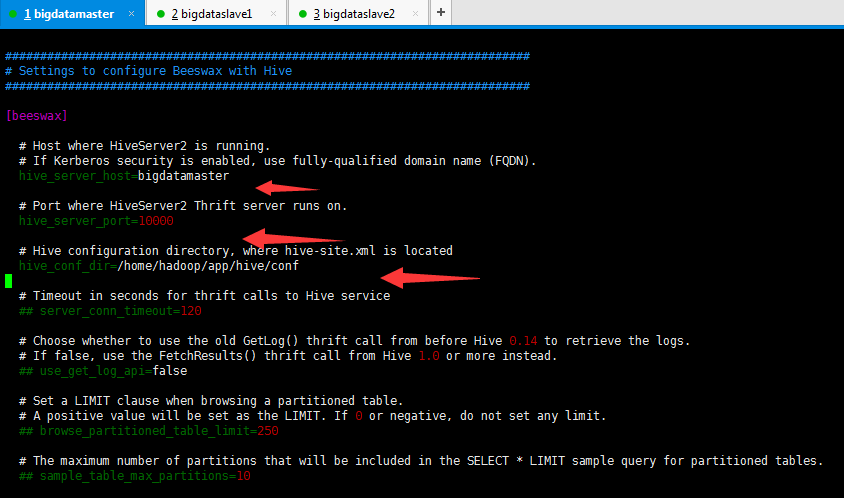

| beeswax | hive_server_host | bigdatamaster | Hive所在节点主机名/IP |

| beeswax | hive_server_port | 10000 | HiveServer2服务端口号 |

|

beeswax |

hive_conf_dir |

home/hadoop/app/hive/conf |

Hive配置文件目录 |

Hue only needs to be installed on bigdatamaster (192.168.80.10)!
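The slash in the table's section column (hadoop/hdfs_clusters) stands for nesting. hue.ini writes each deeper level with one more pair of brackets, and in the stock hue.ini the cluster settings sit one level deeper again, under [[[default]]]. The following Python 3 sketch renders such rows into that layout, which makes it easier to see where each table entry belongs. The function and its row format are hypothetical, for illustration only.

```python
def render_hue_ini(rows):
    """Render (section_path, key, value) rows as hue.ini-style nested sections.

    section_path is a tuple such as ("hadoop", "hdfs_clusters", "default"); a section
    at depth n gets n brackets on each side, e.g. [[[default]]]. A section's keys are
    written before its subsections, because a key line belongs to the nearest
    section header above it.
    """
    tree = {"keys": [], "children": {}}  # plain dicts keep insertion order (Python 3.7+)
    for path, key, value in rows:
        node = tree
        for name in path:
            node = node["children"].setdefault(name, {"keys": [], "children": {}})
        node["keys"].append((key, value))

    lines = []

    def emit(node, depth):
        for key, value in node["keys"]:
            lines.append("  " * depth + "%s=%s" % (key, value))
        for name, child in node["children"].items():
            lines.append("  " * depth + "[" * (depth + 1) + name + "]" * (depth + 1))
            emit(child, depth + 1)

    emit(tree, 0)
    return "\n".join(lines) + "\n"
```

For example, render_hue_ini([(("hadoop", "hdfs_clusters", "default"), "fs_defaultfs", "hdfs://bigdatamaster:9000")]) yields [hadoop], then [[hdfs_clusters]], then [[[default]]], with the key indented underneath.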

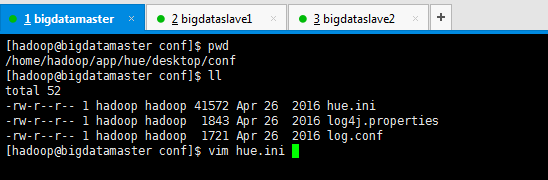

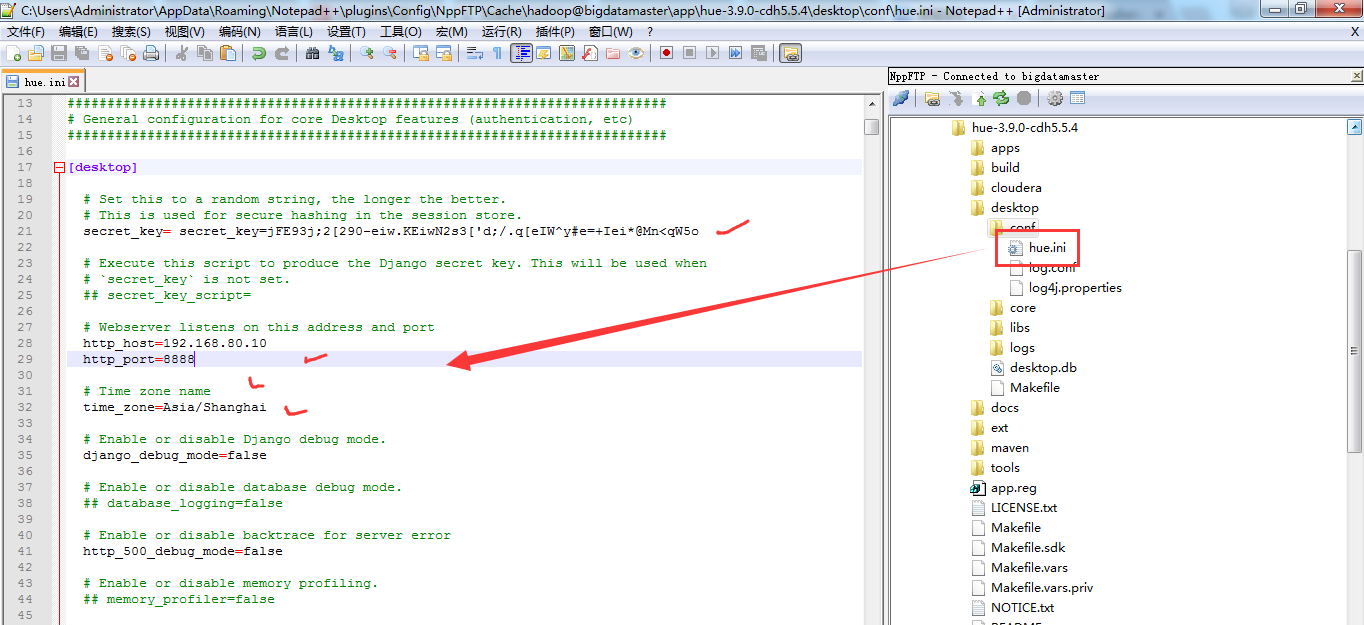

Configure Hue. This is the key step, so be very careful.

$HUE_HOME/desktop/conf/hue.ini

[hadoop@bigdatamaster conf]$ pwd
/home/hadoop/app/hue/desktop/conf
[hadoop@bigdatamaster conf]$ ll
total 52
-rw-r--r-- 1 hadoop hadoop 41572 Apr 26 2016 hue.ini
-rw-r--r-- 1 hadoop hadoop 1843 Apr 26 2016 log4j.properties
-rw-r--r-- 1 hadoop hadoop 1721 Apr 26 2016 log.conf
[hadoop@bigdatamaster conf]$ vim hue.ini

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.5.4/manual.html#_install_hue

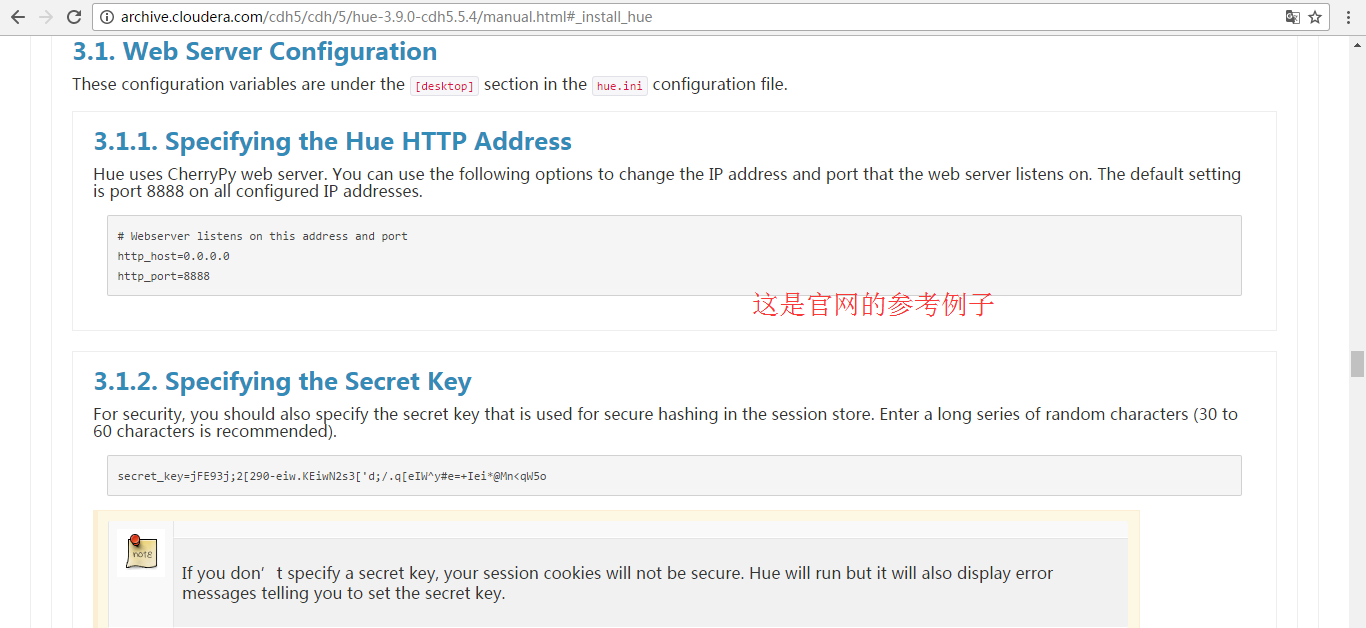

The [desktop] section is configured as follows:

[desktop]
secret_key=jFE93j;2[290-eiw.KEiwN2s3['d;/.q[eIW^y#e=+Iei*@Mn<qW5o
# Hue web server address and port
http_host=192.168.80.10
http_port=8888
time_zone=Asia/Shanghai
# Webserver runs as this user
server_user=hue
server_group=hue
# This should be the Hue admin and proxy user
default_user=hue
# This should be the hadoop cluster admin
default_hdfs_superuser=hadoop

Note: you can also leave these settings at their defaults.

http://archive.cloudera.com/cdh5/cdh/5/hue-3.9.0-cdh5.5.4/manual.html#_install_hue
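The secret_key above has been published in this post, so it is safer to generate your own long random string. Below is a minimal Python 3.6+ sketch using the standard secrets module. The helper name is mine, and you would run it on any machine with Python 3.6 or later, not with CentOS 6.5's Python 2.6.

```python
import secrets


def make_secret_key(nbytes=48):
    """Return a random string for hue.ini's [desktop] secret_key.

    token_urlsafe() only emits A-Z, a-z, 0-9, '-' and '_', so the value contains
    no '#', ';' or quote characters that an INI parser might treat specially.
    """
    return secrets.token_urlsafe(nbytes)
```

Paste the result as secret_key=... into the [desktop] section. With the default of 48 random bytes, the key is 64 characters long.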

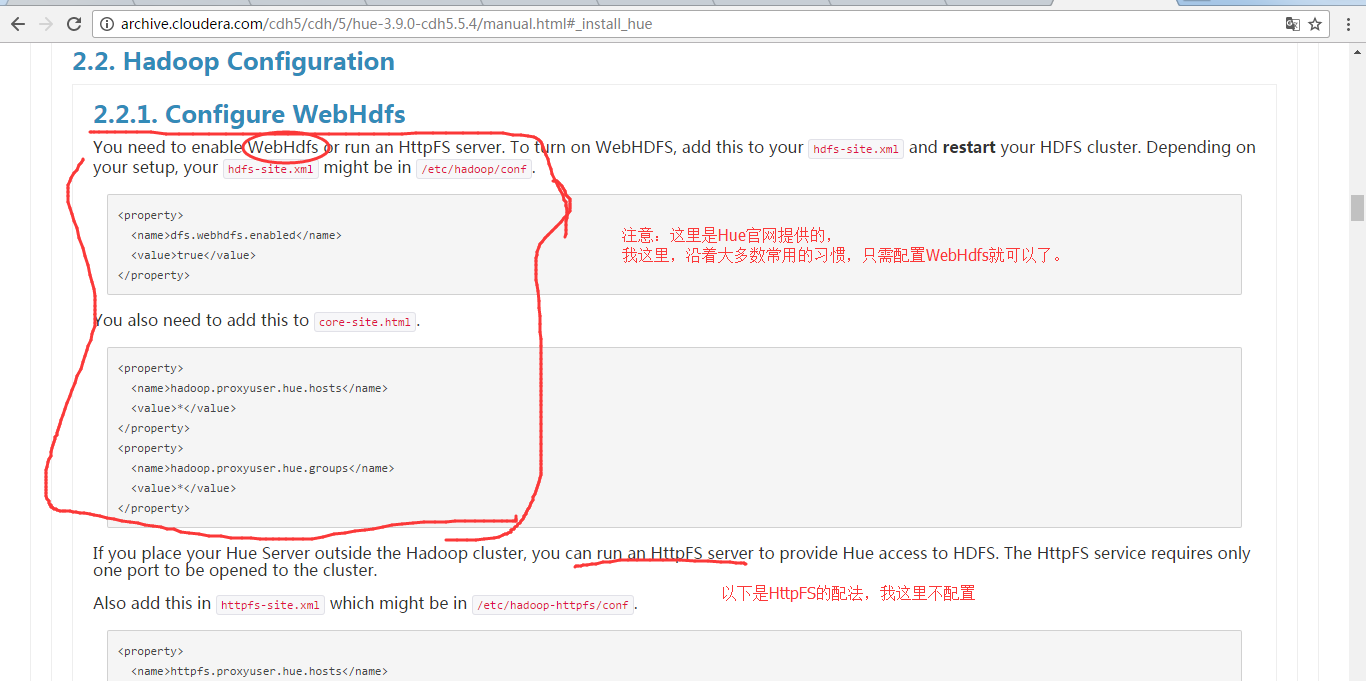

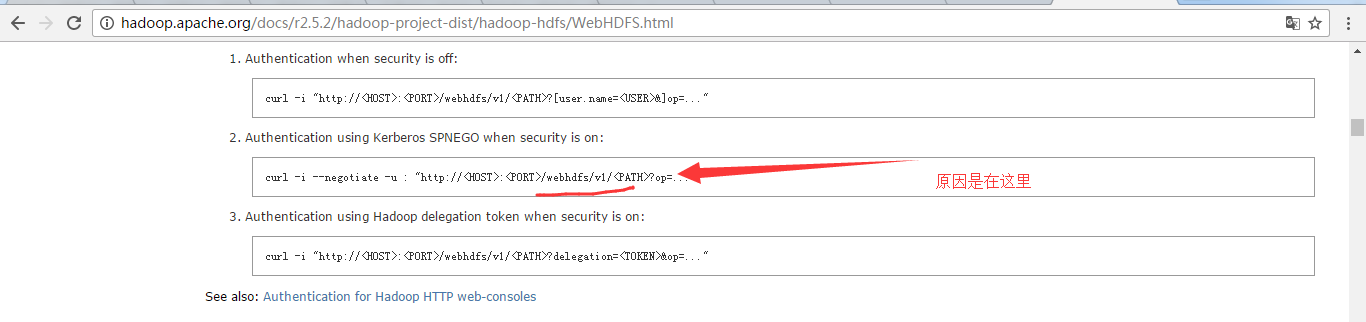

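The [hadoop] section is configured as follows. The official manual offers either WebHdfs or HttpFS: WebHdfs is normally used on a non-HA cluster, while an HA cluster additionally requires HttpFS.

In the post below, I give the detailed configuration and the reasons behind it:

Detailed explanation of the hdfs_clusters module in Hue's hue.ini (illustrated) (including HA clusters)

Let's continue. This post is based on a non-HA three-node cluster made up of bigdatamaster, bigdataslave1 and bigdataslave2, so I use WebHdfs.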

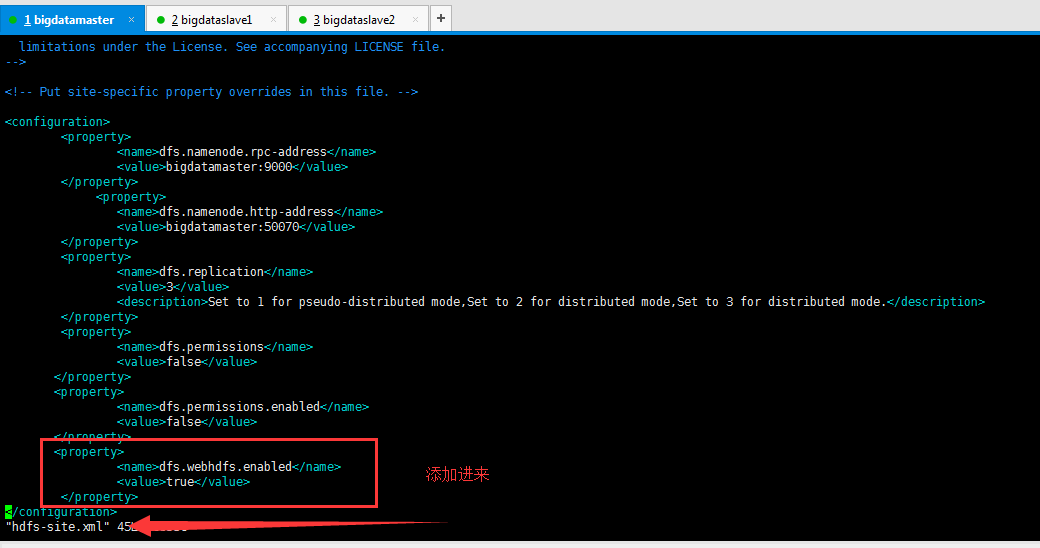

Do the same on bigdataslave1 and bigdataslave2; I won't repeat the steps.

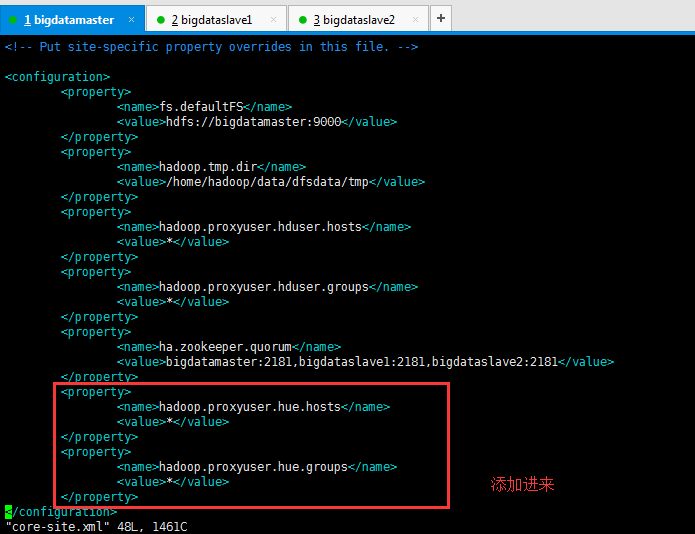

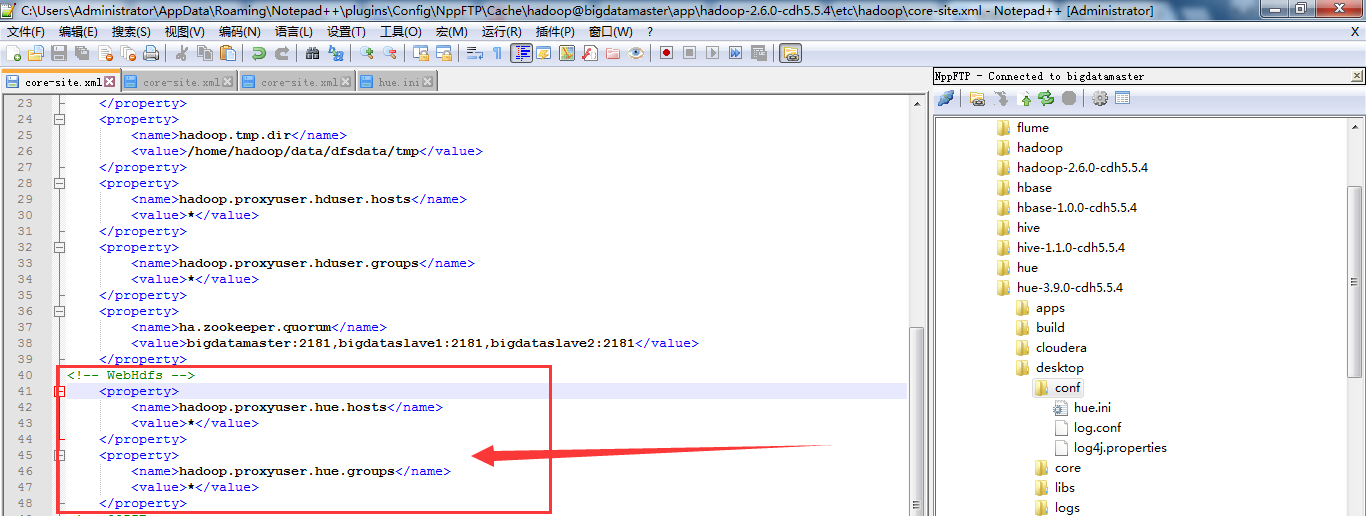

After modifying hdfs-site.xml on all three machines, modify core-site.xml.

Again, do the same on bigdataslave1 and bigdataslave2.
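According to the Hue manual, WebHDFS access needs two kinds of settings. In hdfs-site.xml, dfs.webhdfs.enabled must be set to true. In core-site.xml, the hue user needs proxy-user rights through hadoop.proxyuser.hue.hosts and hadoop.proxyuser.hue.groups. The following Python 3 sketch reads both files and lists whatever is missing. The function names are hypothetical; change hue_user if Hue runs under a different proxy user.

```python
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Map <name> to <value> for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name", "").strip(): (p.findtext("value") or "").strip()
            for p in root.iter("property")}


def webhdfs_problems(hdfs_site, core_site, hue_user="hue"):
    """List the WebHDFS / proxy-user settings that are missing or wrong."""
    problems = []
    hdfs = read_properties(hdfs_site)
    core = read_properties(core_site)
    # Require an explicit true value, as the Hue manual asks for it to be set.
    if hdfs.get("dfs.webhdfs.enabled", "").lower() != "true":
        problems.append("hdfs-site.xml: dfs.webhdfs.enabled is not true")
    for suffix in ("hosts", "groups"):
        key = "hadoop.proxyuser.%s.%s" % (hue_user, suffix)
        if not core.get(key):
            problems.append("core-site.xml: %s is not set" % key)
    return problems
```

Pass the text of each node's hdfs-site.xml and core-site.xml. An empty list means both files contain the settings.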

The [hadoop] module:

[hadoop]
  # Configuration for HDFS NameNode
  # ------------------------------------------------------------------------
  [[hdfs_clusters]]
    # HA support by using HttpFs
    [[[default]]]
      # Enter the filesystem uri
      fs_defaultfs=hdfs://bigdatamaster:9000
      # NameNode logical name.
      ## logical_name=
      # Use WebHdfs/HttpFs as the communication mechanism.
      # Domain should be the NameNode or HttpFs host.
      # Default port is 14000 for HttpFs.
      webhdfs_url=http://bigdatamaster:50070/webhdfs/v1
      # Change this if your HDFS cluster is Kerberos-secured
      ## security_enabled=false
      # In secure mode (HTTPS), if SSL certificates from YARN Rest APIs
      # have to be verified against certificate authority
      ## ssl_cert_ca_verify=True
      # Directory of the Hadoop configuration
      hadoop_conf_dir=/home/hadoop/app/hadoop/etc/hadoop/conf

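Note that my value is fs_defaultfs=hdfs://bigdatamaster:9000. Configure it for your own machines, and be clear about why: don't just copy what other blogs do.

Some posts use values such as fs_defaultfs=hdfs://mycluster or fs_defaultfs=hdfs://master:8020. That is simply how those authors' machines are configured.

In short, the value must match the fs.defaultFS property in your own core-site.xml.

Here, bigdatamaster is the hostname of the machine on which I install Hue, and 192.168.80.10 is its static IP.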
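The rule "fs_defaultfs must equal fs.defaultFS" is easy to check mechanically. Below is a Python 3 sketch with a deliberately minimal reader for hue.ini's nested [..]/[[..]]/[[[..]]] sections; all names are mine. It ignores quoting, inline comments and multi-line values, which Hue's real parser handles, so treat it only as a sanity check.

```python
import re
import xml.etree.ElementTree as ET

# [name], [[name]], [[[name]]] ...: the number of brackets gives the nesting depth.
SECTION_RE = re.compile(r"^(\[+)([^\[\]]+)(\]+)$")


def parse_hue_ini(text):
    """Return {("hadoop", "hdfs_clusters", "default"): {"fs_defaultfs": ...}, ...}."""
    sections, path = {}, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # also skips '## key=' placeholders
            continue
        m = SECTION_RE.match(line)
        if m and len(m.group(1)) == len(m.group(3)):
            depth = len(m.group(1))
            path = path[:depth - 1] + [m.group(2).strip()]
            sections.setdefault(tuple(path), {})
        elif "=" in line and path:
            key, value = line.split("=", 1)
            sections[tuple(path)][key.strip()] = value.strip()
    return sections


def core_site_value(xml_text, name):
    """Value of one <property> in core-site.xml, or None if it is absent."""
    for prop in ET.fromstring(xml_text).iter("property"):
        if prop.findtext("name", "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def fs_defaultfs_matches(hue_ini_text, core_site_text):
    """Return (matches, hue.ini value, core-site.xml value)."""
    hue = parse_hue_ini(hue_ini_text).get(("hadoop", "hdfs_clusters", "default"), {}).get("fs_defaultfs")
    hadoop = core_site_value(core_site_text, "fs.defaultFS")
    return hue is not None and hue == hadoop, hue, hadoop
```

With my files, fs_defaultfs_matches(hue_ini, core_site) should return (True, 'hdfs://bigdatamaster:9000', 'hdfs://bigdatamaster:9000').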

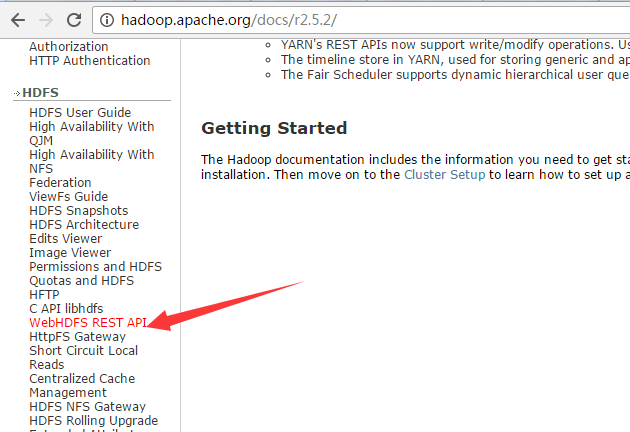

So why is it configured this way? You should understand the reason:

http://hadoop.apache.org/docs/r2.5.2/

The [[yarn_clusters]] section:

  [[yarn_clusters]]
    [[[default]]]
      # Enter the host on which you are running the ResourceManager
      resourcemanager_host=192.168.80.10
      # The port where the ResourceManager IPC listens on
      resourcemanager_port=8032
      # Whether to submit jobs to this cluster
      submit_to=True
      # Resource Manager logical name (required for HA)
      ## logical_name=
      # Change this if your YARN cluster is Kerberos-secured
      ## security_enabled=false
      # URL of the ResourceManager API
      resourcemanager_api_url=http://192.168.80.10:8088
      # URL of the ProxyServer API
      proxy_api_url=http://192.168.80.10:8088
      # URL of the HistoryServer API
      history_server_api_url=http://192.168.80.10:19888

To go further, see my post:

Detailed explanation of the yarn_clusters module in Hue's hue.ini (illustrated) (including HA clusters)
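To confirm that the URLs in [[yarn_clusters]] really point at running services, you can query two info endpoints: /ws/v1/cluster/info from the ResourceManager REST API and /ws/v1/history/info from the MapReduce JobHistory REST API. The following is a Python 3 sketch; the helper names are hypothetical.

```python
import json
from urllib.request import Request, urlopen


def rest_endpoints(resourcemanager_api_url, history_server_api_url):
    """Info endpoints of the YARN ResourceManager and MapReduce JobHistory REST APIs."""
    return {
        "resourcemanager": resourcemanager_api_url.rstrip("/") + "/ws/v1/cluster/info",
        "historyserver": history_server_api_url.rstrip("/") + "/ws/v1/history/info",
    }


def probe(url, timeout=5):
    """GET url asking for JSON; raises on network/HTTP errors, else returns the parsed body."""
    req = Request(url, headers={"Accept": "application/json"})
    with urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For the values above, loop over rest_endpoints("http://192.168.80.10:8088", "http://192.168.80.10:19888").items() and call probe(url) for each entry. A healthy ResourceManager should answer with a clusterInfo object.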

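The [zookeeper] section. In the stock hue.ini, host_ports sits under [[clusters]] and [[[default]]]:

[zookeeper]
  [[clusters]]
    [[[default]]]
      host_ports=bigdatamaster:2181,bigdataslave1:2181,bigdataslave2:2181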
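Before pointing Hue at the ensemble, you can check each ZooKeeper server with the ruok four-letter command; a healthy server replies imok. Below is a Python 3 sketch, with names of my own choosing. Newer ZooKeeper releases can restrict four-letter commands through 4lw.commands.whitelist, whereas the 3.4.5 release used here should answer them by default.

```python
import socket


def parse_host_ports(value):
    """Split hue.ini's host_ports (host:port,host:port,...) into (host, port) pairs."""
    pairs = []
    for item in value.split(","):
        item = item.strip()
        if item:
            host, _, port = item.rpartition(":")
            pairs.append((host, int(port)))
    return pairs


def ruok(host, port, timeout=3.0):
    """Send ZooKeeper's 'ruok' command; True if the server answers 'imok'."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"ruok")
        return sock.recv(16) == b"imok"
```

For example, loop over parse_host_ports("bigdatamaster:2181,bigdataslave1:2181,bigdataslave2:2181") and call ruok(host, port) for each pair.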

The [beeswax] (Hive) section:

[beeswax]
  # Host where HiveServer2 is running.
  # If Kerberos security is enabled, use fully-qualified domain name (FQDN).
  hive_server_host=bigdatamaster
  # Port where HiveServer2 Thrift server runs on.
  hive_server_port=10000
  # Hive configuration directory, where hive-site.xml is located
  hive_conf_dir=/home/hadoop/app/hive/conf

My Hive is installed on bigdatamaster. Be sure to configure this according to your own machines!

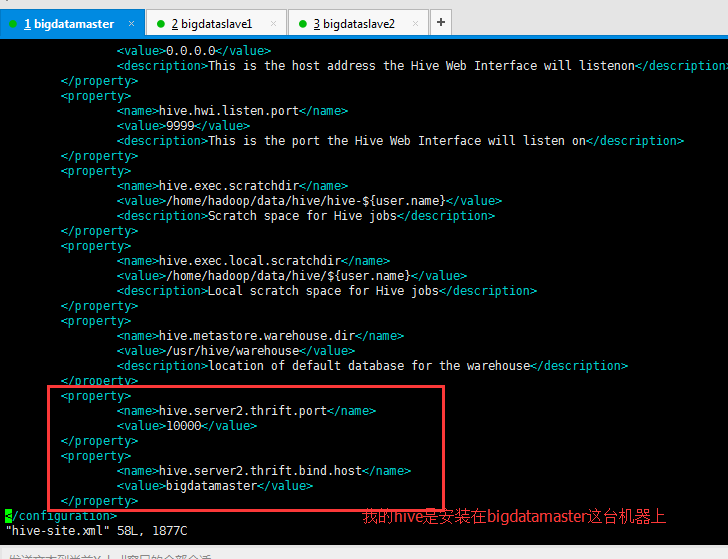

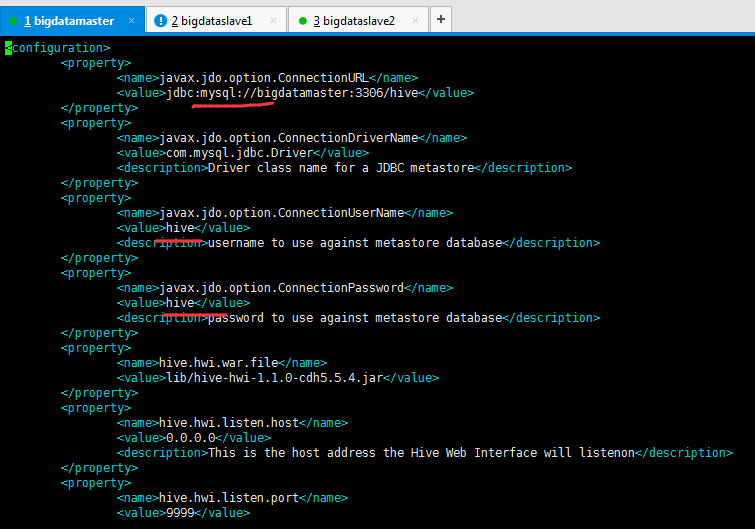

<property>
  <name>hive.server2.thrift.port</name>
  <value>10000</value>
</property>
<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>bigdatamaster</value>
</property>

In addition, set the hive.server2.thrift.port and hive.server2.thrift.bind.host properties in hive-site.xml as shown above. My Hive is installed on bigdatamaster.
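Beeswax only works if HiveServer2 is actually listening on hive_server_host:hive_server_port, so start it first, for example with hive --service hiveserver2. Below is a Python 3 sketch of a plain TCP check; the function name is hypothetical. It only proves that something accepts connections on the port, not that the listener is HiveServer2.

```python
import socket


def port_open(host, port, timeout=3.0):
    """True if a TCP connection to host:port succeeds, i.e. something is listening."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, unknown host, ...
        return False
```

For my setup, port_open("bigdatamaster", 10000) should return True once HiveServer2 is up.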

For more depth, see:

Detailed explanation of the hive and beeswax modules in Hue's hue.ini (illustrated) (including HA clusters)

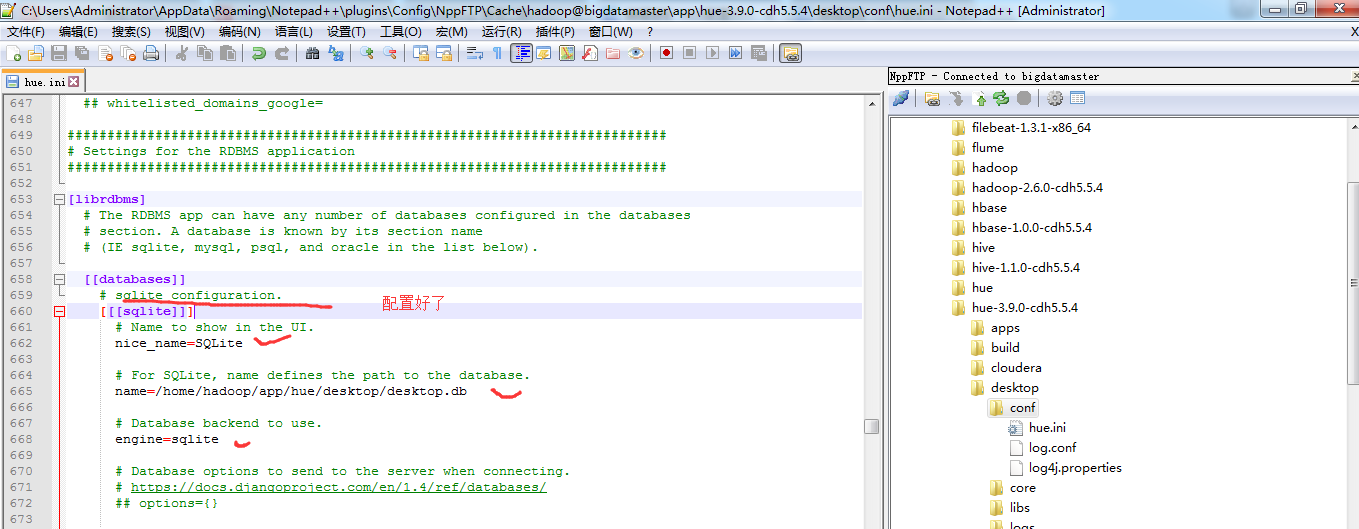

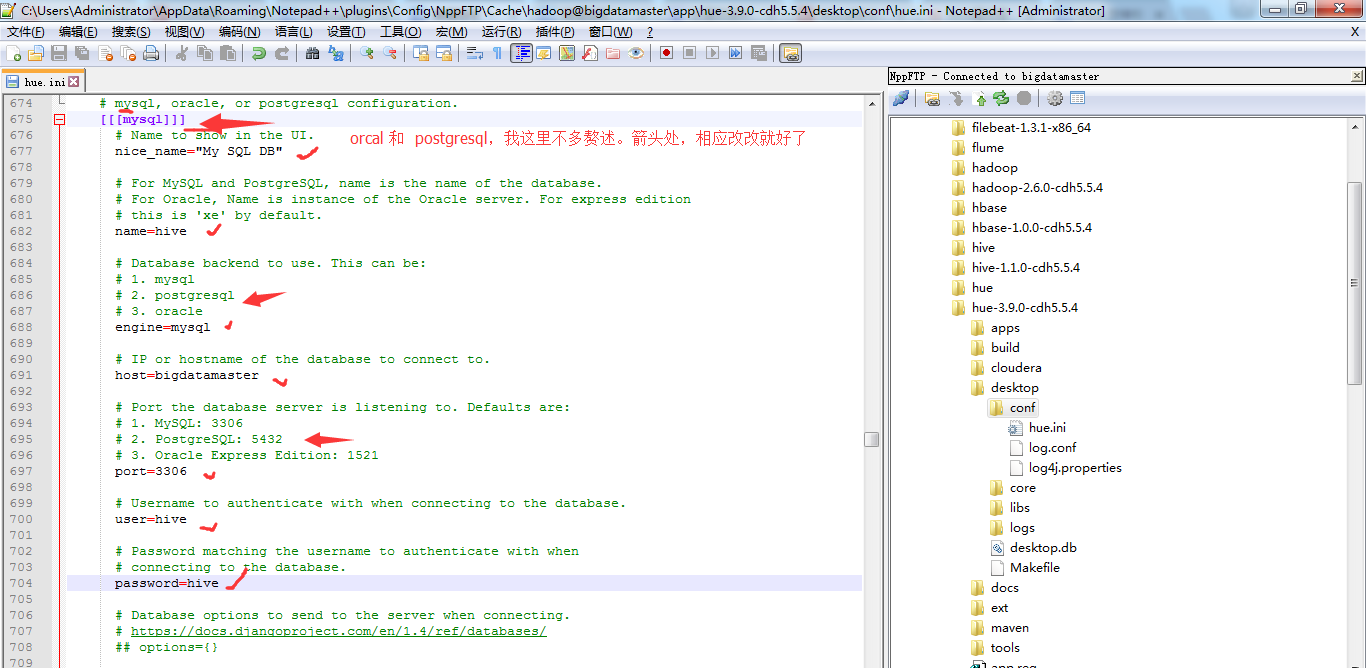

The database module

Detailed explanation of the database module in Hue's hue.ini (covering sqlite, mysql, psql and oracle) (illustrated) (including HA clusters)

###########################################################################
# Settings for the RDBMS application
###########################################################################
[librdbms]
  # The RDBMS app can have any number of databases configured in the databases
  # section. A database is known by its section name
  # (IE sqlite, mysql, psql, and oracle in the list below).
  [[databases]]
    # sqlite configuration.
    [[[sqlite]]]
      # Name to show in the UI.
      nice_name=SQLite
      # For SQLite, name defines the path to the database.
      name=/home/hadoop/app/hue/desktop/desktop.db
      # Database backend to use.
      engine=sqlite

hive> show databases;
OK
default
hive
Time taken: 0.074 seconds, Fetched: 2 row(s)
hive>

    # mysql, oracle, or postgresql configuration.
    [[[mysql]]]
      # Name to show in the UI.
      nice_name="My SQL DB"
      # For MySQL and PostgreSQL, name is the name of the database.
      # For Oracle, Name is instance of the Oracle server. For express edition
      # this is 'xe' by default.
      name=hive
      # Database backend to use. This can be:
      # 1. mysql
      # 2. postgresql
      # 3. oracle
      engine=mysql
      # IP or hostname of the database to connect to.
      host=bigdatamaster
      # Port the database server is listening to. Defaults are:
      # 1. MySQL: 3306
      # 2. PostgreSQL: 5432
      # 3. Oracle Express Edition: 1521
      port=3306
      # Username to authenticate with when connecting to the database.
      user=hive
      # Password matching the username to authenticate with when
      # connecting to the database.
      password=hive
      # Database options to send to the server when connecting.
      # https://docs.djangoproject.com/en/1.4/ref/databases/
      ## options={}

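Configuring the pig module

For details, see:

Detailed explanation of the pig module in Hue's hue.ini (illustrated) (including HA clusters)

Configuring the zookeeper module

For details, see:

Detailed explanation of the zookeeper module in Hue's hue.ini (illustrated) (including HA clusters)

Configuring the spark module

For details, see:

Detailed explanation of the Spark module in Hue's hue.ini (illustrated) (including HA clusters)

Configuring the impala module

For details, see:

Detailed explanation of the impala module in Hue's hue.ini (illustrated) (including HA clusters)

Configuring the liboozie and oozie modules

For details, see:

Detailed explanation of the liboozie and oozie modules in Hue's hue.ini (illustrated) (including HA clusters)

Configuring the sqoop module

For details, see:

Detailed explanation of the sqoop module in Hue's hue.ini (illustrated) (including HA clusters)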

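Configuring the hbase module (I have run into a problem here for now)

1. Configure HBase

Hue reads HBase data through Thrift. HBase's Thrift server is not running by default, so you have to start it manually, for example with hbase-daemon.sh start thrift from HBase's bin directory.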

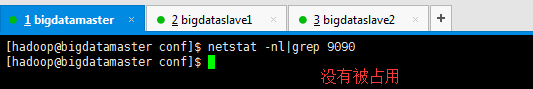

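The Thrift server uses port 9090 by default. Check whether that port is already in use:

[hadoop@bigdatamaster conf]$ netstat -nl|grep 9090
[hadoop@bigdatamaster conf]$

No output means nothing is listening on 9090 yet. It is best to keep the default port here.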
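The netstat check can also be done from Python by trying to bind the port yourself: if the bind fails, something else already holds it. Below is a Python 3 sketch; the helper name is hypothetical. Binding a port below 1024 as a non-root user also fails, so such ports would be reported as busy.

```python
import socket


def port_free(port, host="0.0.0.0"):
    """True if this process can bind host:port, i.e. no other socket holds it."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
        return True
    except OSError:  # EADDRINUSE, or EACCES for privileged ports
        return False
    finally:
        sock.close()
```

On bigdatamaster, port_free(9090) should return True before the HBase Thrift server starts and False afterwards.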

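My HBase configuration still has an error at this point. See:

Detailed explanation of the hbase module in Hue's hue.ini (illustrated) (including HA clusters)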

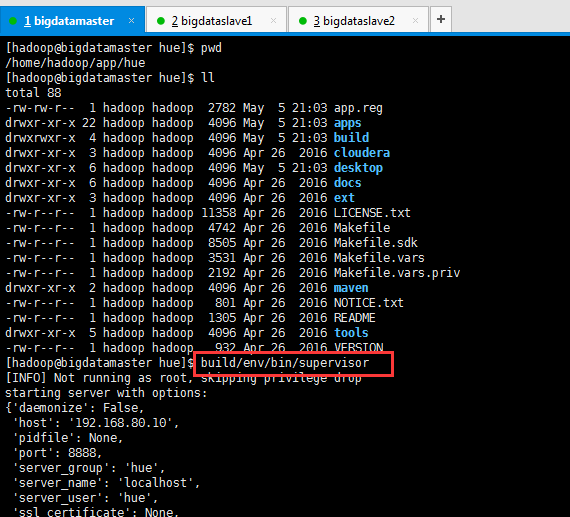

Hue的启动

也就是说,你Hue配置文件里面配置了什么,进程都要先提前启动。

build/env/bin/supervisor

[hadoop@bigdatamaster hue]$ pwd /home/hadoop/app/hue [hadoop@bigdatamaster hue]$ ll total 92 -rw-rw-r-- 1 hadoop hadoop 2782 May 5 21:03 app.reg drwxr-xr-x 22 hadoop hadoop 4096 May 5 21:03 apps drwxrwxr-x 4 hadoop hadoop 4096 May 5 21:03 build drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 cloudera drwxr-xr-x 6 hadoop hadoop 4096 May 7 10:13 desktop drwxr-xr-x 6 hadoop hadoop 4096 Apr 26 2016 docs drwxr-xr-x 3 hadoop hadoop 4096 Apr 26 2016 ext -rw-r--r-- 1 hadoop hadoop 11358 Apr 26 2016 LICENSE.txt drwxrwxr-x 2 hadoop hadoop 4096 May 7 09:27 logs -rw-r--r-- 1 hadoop hadoop 4742 Apr 26 2016 Makefile -rw-r--r-- 1 hadoop hadoop 8505 Apr 26 2016 Makefile.sdk -rw-r--r-- 1 hadoop hadoop 3531 Apr 26 2016 Makefile.vars -rw-r--r-- 1 hadoop hadoop 2192 Apr 26 2016 Makefile.vars.priv drwxr-xr-x 2 hadoop hadoop 4096 Apr 26 2016 maven -rw-r--r-- 1 hadoop hadoop 801 Apr 26 2016 NOTICE.txt -rw-r--r-- 1 hadoop hadoop 1305 Apr 26 2016 README drwxr-xr-x 5 hadoop hadoop 4096 Apr 26 2016 tools -rw-r--r-- 1 hadoop hadoop 932 Apr 26 2016 VERSION [hadoop@bigdatamaster hue]$ build/env/bin/supervisor

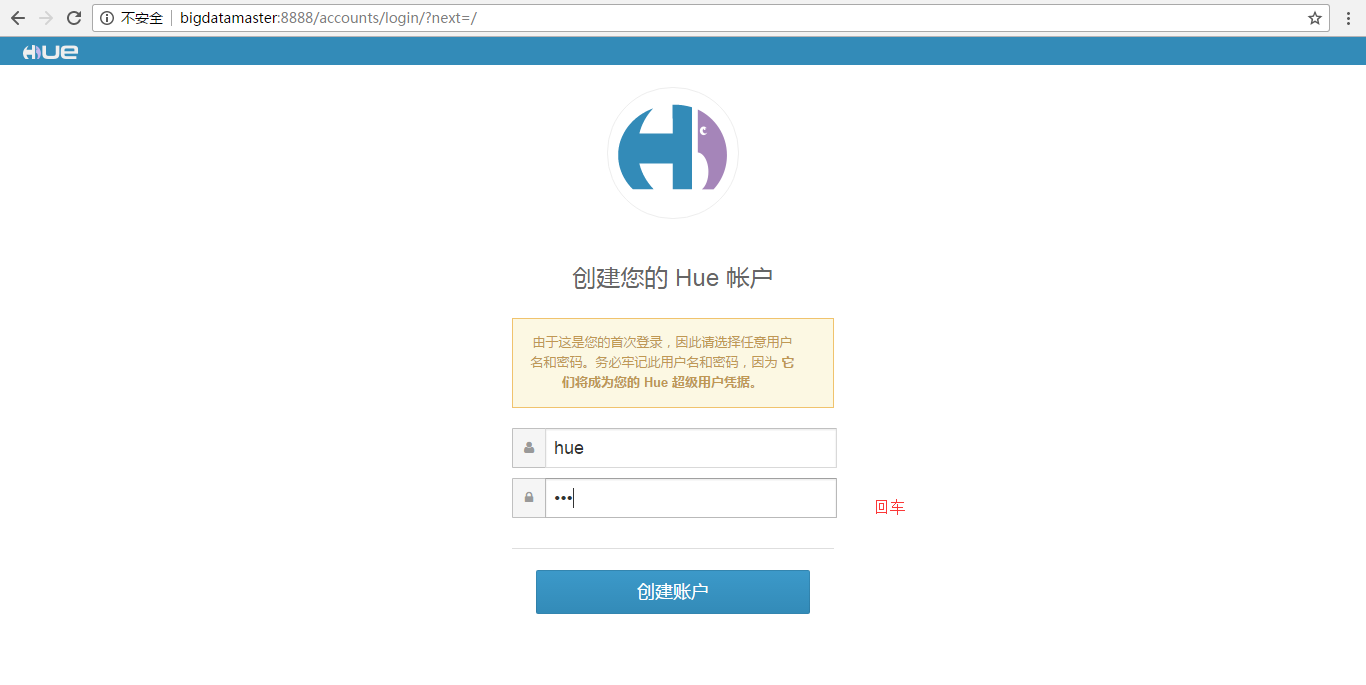

[hadoop@bigdatamaster hue]$ build/env/bin/supervisor [INFO] Not running as root, skipping privilege drop starting server with options: {'daemonize': False, 'host': '192.168.80.10', 'pidfile': None, 'port': 8888, 'server_group': 'hue', 'server_name': 'localhost', 'server_user': 'hue', 'ssl_certificate': None, 'ssl_certificate_chain': None, 'ssl_cipher_list': 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA', 'ssl_private_key': None, 'threads': 40, 'workdir': None} /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env/lib/python2.6/site-packages/django_axes-1.4.0-py2.6.egg/axes/decorators.py:210: DeprecationWarning: The use of AUTH_PROFILE_MODULE to define user profiles has been deprecated. profile = user.get_profile()

Then open http://bigdatamaster:8888 in a browser.
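As a scripted alternative to opening the page in a browser, you can request the Hue URL and look at the HTTP status. Below is a Python 3 sketch; the function name is mine. urlopen follows redirects, so a redirect to Hue's login page should still end in a 2xx status.

```python
from urllib.error import HTTPError
from urllib.request import urlopen


def http_status(url, timeout=5):
    """HTTP status of url after redirects; HTTP errors are returned as codes, not raised.

    Network-level failures (connection refused, DNS errors) still raise URLError.
    """
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.getcode()
    except HTTPError as err:
        return err.code
```

For example, http_status("http://bigdatamaster:8888/") should return 200 once the supervisor has started the web server.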

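I won't go into further detail here.

If you run into problems after starting Hue, see my post:

A practical summary of fixes for feature problems after installing Hue (recommended by the author)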

You are also welcome to follow my personal blogs:

http://www.cnblogs.com/zlslch/, http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html
