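A note before we start: you may need to run the program several times, because the connection sometimes times out. Just retry and it will eventually go through.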

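The difference between `delete.deleteColumn` and `delete.deleteColumns`:

`deleteColumn(family, qualifier)` deletes only the latest-timestamp version of one column (family:qualifier) in a column family.

`deleteColumns(family, qualifier)` deletes all timestamp versions of that column.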
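To make the difference concrete, here is a small toy model in plain Java. It is not the HBase client API: `ColumnVersionsDemo`, `VersionedColumn` and its methods are hypothetical names used only for illustration. The model keeps every version it is given, so removing only the newest version exposes the previous one. A real column family configured with `VERSIONS => '1'` (as `test_table` is below) may no longer hold an older version to expose.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/**
 * Toy in-memory model of one column (family:qualifier); NOT the HBase client API.
 * It only mimics the version semantics of deleteColumn vs deleteColumns.
 */
public class ColumnVersionsDemo {

    /** All versions of one column, ordered newest timestamp first. */
    static final class VersionedColumn {
        private final NavigableMap<Long, String> versions =
                new TreeMap<Long, String>(Comparator.<Long>reverseOrder());

        void put(long timestamp, String value) {
            versions.put(timestamp, value);
        }

        /** Like deleteColumn(family, qualifier): drop only the newest version. */
        void deleteLatestVersion() {
            versions.pollFirstEntry(); // returns null (no-op) when empty
        }

        /** Like deleteColumns(family, qualifier): drop every version. */
        void deleteAllVersions() {
            versions.clear();
        }

        /** What a default read returns: the newest remaining value, or null. */
        String latest() {
            Map.Entry<Long, String> newest = versions.firstEntry();
            return newest == null ? null : newest.getValue();
        }

        int versionCount() {
            return versions.size();
        }
    }

    public static void main(String[] args) {
        VersionedColumn single = new VersionedColumn();
        single.put(1L, "Andy0");
        single.put(2L, "Andy1");
        single.deleteLatestVersion();
        System.out.println(single.latest()); // prints Andy0: the older version is visible again

        VersionedColumn all = new VersionedColumn();
        all.put(1L, "Andy0");
        all.put(2L, "Andy1");
        all.deleteAllVersions();
        System.out.println(all.latest());    // prints null: no versions left
    }
}
```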

```
hbase(main):020:0> desc 'test_table'
Table test_table is ENABLED
test_table
COLUMN FAMILIES DESCRIPTION
{NAME => 'f', DATA_BLOCK_ENCODING => 'NONE', BLOOMFILTER => 'ROW', REPLICATION_SCOPE => '0', VERSIONS => '1', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => 'FOREVER', KEEP_DELETED_CELLS => 'FALSE', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}
1 row(s) in 0.2190 seconds

hbase(main):021:0> scan 'test_table'
ROW COLUMN+CELL
row_01 column=f:col, timestamp=1478102698687, value=maizi
row_01 column=f:name, timestamp=1478104345828, value=Andy
row_02 column=f:name, timestamp=1478104477628, value=Andy2
row_03 column=f:name, timestamp=1478104823358, value=Andy3
3 row(s) in 0.2270 seconds

hbase(main):022:0> scan 'test_table'
ROW COLUMN+CELL
row_01 column=f:col, timestamp=1478102698687, value=maizi
row_01 column=f:name, timestamp=1478104345828, value=Andy
row_02 column=f:name, timestamp=1478104477628, value=Andy2
row_03 column=f:name, timestamp=1478104823358, value=Andy3
3 row(s) in 0.1480 seconds

hbase(main):023:0> scan 'test_table',{VERSIONS=>3}
ROW COLUMN+CELL
row_01 column=f:col, timestamp=1478102698687, value=maizi
row_01 column=f:name, timestamp=1478104345828, value=Andy
row_02 column=f:name, timestamp=1478104477628, value=Andy2
row_03 column=f:name, timestamp=1478104823358, value=Andy3
3 row(s) in 0.1670 seconds

hbase(main):024:0>
```
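The `desc` output shows `VERSIONS => '1'` for family `f`, which is why `scan 'test_table',{VERSIONS=>3}` still returns a single value per cell. The toy model below (hypothetical class `VersionCapDemo`, not HBase code) sketches that rule, assuming a read returns the newest versions up to the smaller of the requested count and the family's limit.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

/**
 * Toy model (not the HBase API): a read returns at most
 * min(requested versions, family VERSIONS) values, newest first.
 */
public class VersionCapDemo {

    static final class Family {
        private final int maxVersions; // plays the role of VERSIONS in desc
        private final NavigableMap<Long, String> cell =
                new TreeMap<Long, String>(Comparator.<Long>reverseOrder());

        Family(int maxVersions) {
            this.maxVersions = maxVersions;
        }

        void put(long timestamp, String value) {
            cell.put(timestamp, value);
        }

        /** Newest-first values, limited by both the request and the family setting. */
        List<String> read(int requestedVersions) {
            int limit = Math.min(requestedVersions, maxVersions);
            List<String> out = new ArrayList<>();
            for (String value : cell.values()) {
                if (out.size() >= limit) {
                    break;
                }
                out.add(value);
            }
            return out;
        }
    }

    public static void main(String[] args) {
        Family f = new Family(1);             // like VERSIONS => '1' on family f
        f.put(1478123917775L, "Andy0");       // timestamp of row_04 in a later scan
        f.put(1478124000000L, "Andy1");       // hypothetical later timestamp
        System.out.println(f.read(3));        // prints [Andy1], like {VERSIONS=>3}
    }
}
```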

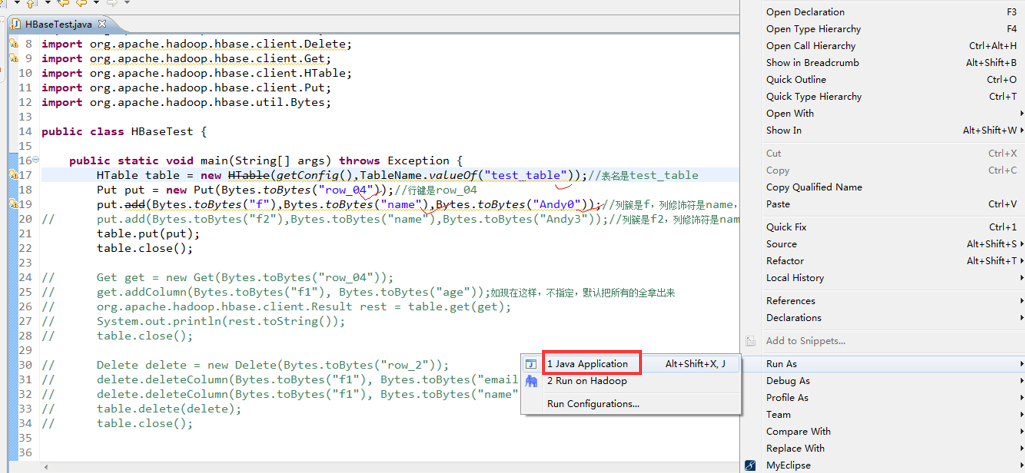

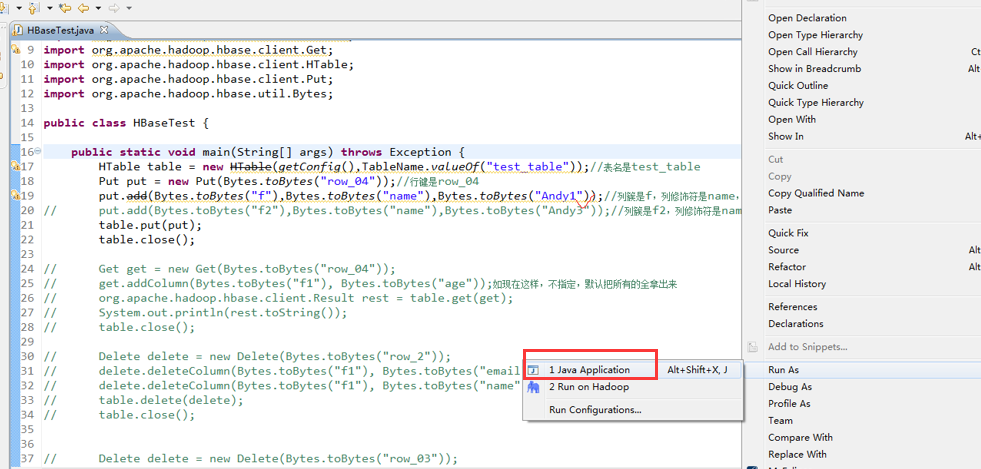

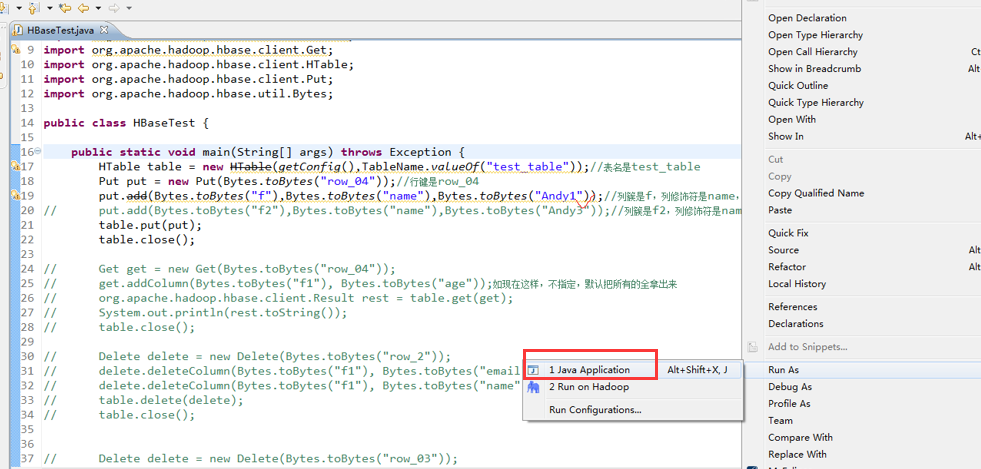

```java
package zhouls.bigdata.HbaseProject.Test1;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {
    public static void main(String[] args) throws Exception {
        HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy0")); // column family f, qualifier name, value Andy0
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // column family f2, qualifier name, value Andy3
        table.put(put);
        table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // leave this commented out, as now, and no column is specified: all columns are returned by default
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_03"));
        // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));  // deletes only the latest version of f:name
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // deletes all versions of f:name
        // table.delete(delete);
        // table.close();
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads hbase-default.xml and hbase-site.xml
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}
```
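The commented-out `Get` above notes that without `get.addColumn(...)` the whole row is returned. A plain-Java toy model of that selection rule (hypothetical class `GetSelectionDemo`, not the HBase API), using `row_01` from the scan output:

```java
import java.util.Map;
import java.util.TreeMap;

/**
 * Toy model (not the HBase API) of column selection in a get:
 * no column selected returns the whole row; a selected column returns only itself.
 */
public class GetSelectionDemo {

    /** Returns every column when selectedColumn is null, otherwise only that column (if present). */
    static Map<String, String> get(Map<String, String> row, String selectedColumn) {
        if (selectedColumn == null) {
            return new TreeMap<>(row); // nothing specified: take everything
        }
        Map<String, String> out = new TreeMap<>();
        if (row.containsKey(selectedColumn)) {
            out.put(selectedColumn, row.get(selectedColumn));
        }
        return out;
    }

    public static void main(String[] args) {
        // row_01 from the scan output: f:col=maizi, f:name=Andy
        Map<String, String> row01 = new TreeMap<>();
        row01.put("f:col", "maizi");
        row01.put("f:name", "Andy");

        System.out.println(get(row01, null));     // prints {f:col=maizi, f:name=Andy}
        System.out.println(get(row01, "f:name")); // prints {f:name=Andy}
    }
}
```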

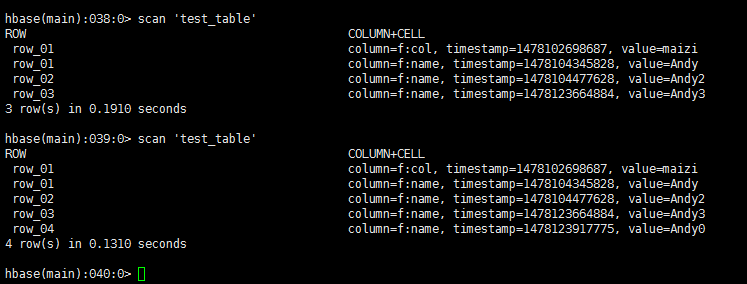

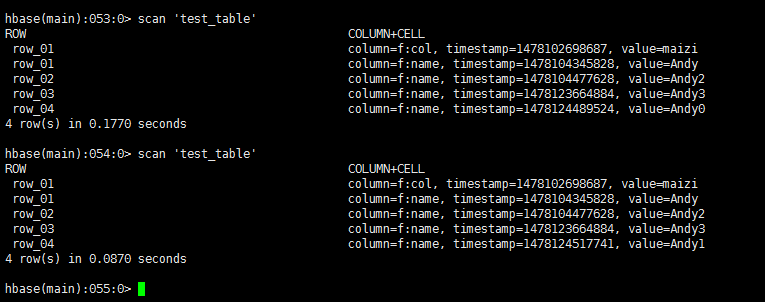

```
hbase(main):038:0> scan 'test_table'
ROW COLUMN+CELL
row_01 column=f:col, timestamp=1478102698687, value=maizi
row_01 column=f:name, timestamp=1478104345828, value=Andy
row_02 column=f:name, timestamp=1478104477628, value=Andy2
row_03 column=f:name, timestamp=1478123664884, value=Andy3
3 row(s) in 0.1910 seconds

hbase(main):039:0> scan 'test_table'
ROW COLUMN+CELL
row_01 column=f:col, timestamp=1478102698687, value=maizi
row_01 column=f:name, timestamp=1478104345828, value=Andy
row_02 column=f:name, timestamp=1478104477628, value=Andy2
row_03 column=f:name, timestamp=1478123664884, value=Andy3
row_04 column=f:name, timestamp=1478123917775, value=Andy0
4 row(s) in 0.1310 seconds
```
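In the second scan the new `row_04` appears after `row_03` because a scan returns rows sorted by row key, compared byte by byte as unsigned values. The plain-Java sketch below (hypothetical class `RowKeyOrderDemo`, not HBase code) reproduces that ordering, and also shows why zero-padded keys like `row_01`, `row_02` sort in numeric order while unpadded keys such as the hypothetical `row_2` and `row_10` would not.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

/** Toy sketch (not HBase code) of the row order seen in scan output. */
public class RowKeyOrderDemo {

    /** Unsigned, byte-by-byte lexicographic comparison of two byte arrays. */
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff; // treat each byte as 0..255
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length; // a shorter prefix sorts first
    }

    /** Sorts string row keys the way a scan would return them. */
    static List<String> sortRowKeys(List<String> keys) {
        TreeMap<byte[], String> sorted = new TreeMap<byte[], String>(RowKeyOrderDemo::compareBytes);
        for (String key : keys) {
            sorted.put(key.getBytes(StandardCharsets.UTF_8), key);
        }
        return new ArrayList<>(sorted.values());
    }

    public static void main(String[] args) {
        System.out.println(sortRowKeys(Arrays.asList("row_04", "row_01", "row_03", "row_02")));
        // prints [row_01, row_02, row_03, row_04]
        System.out.println(sortRowKeys(Arrays.asList("row_2", "row_10"))); // hypothetical unpadded keys
        // prints [row_10, row_2]
    }
}
```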

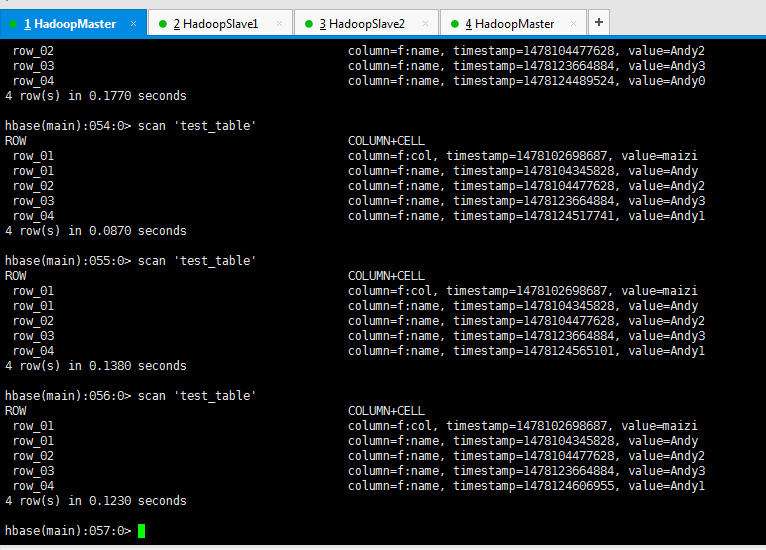

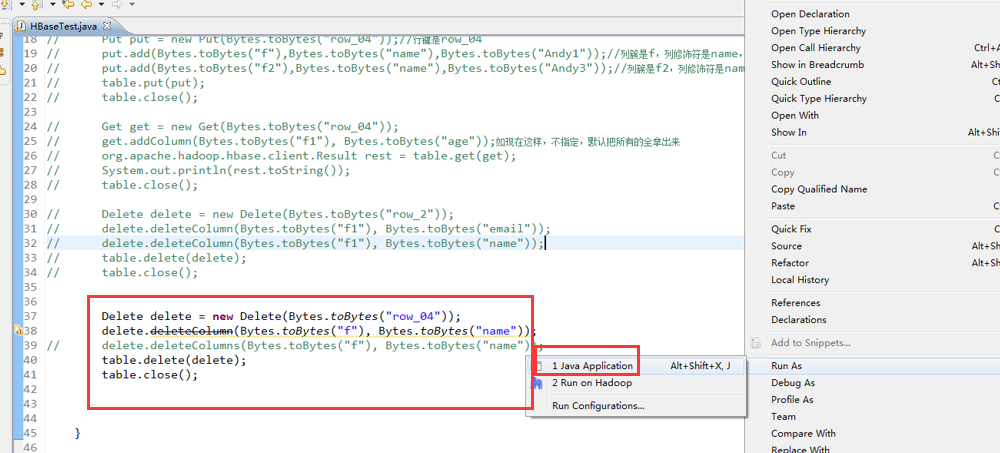

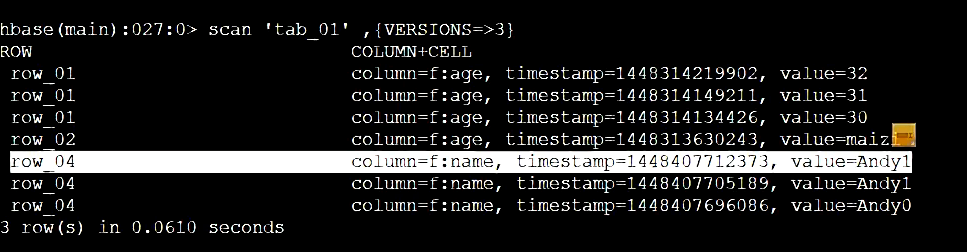


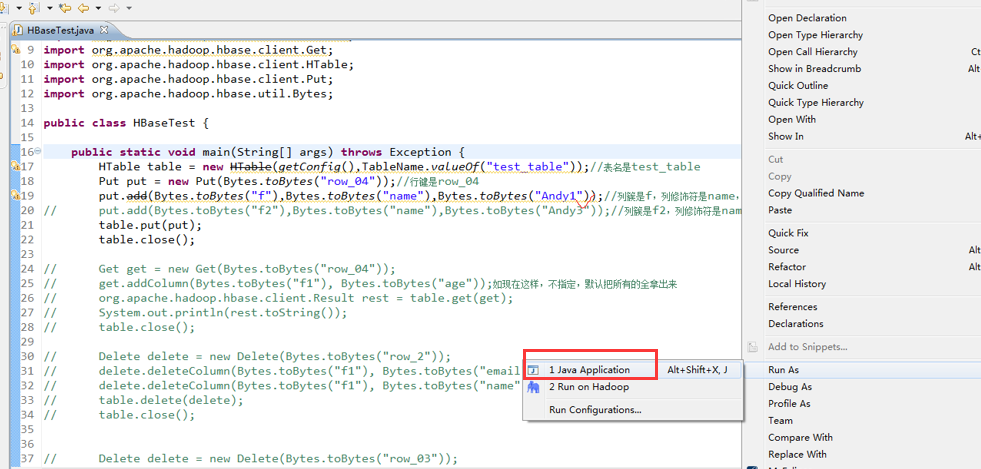

```java
package zhouls.bigdata.HbaseProject.Test1;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {
    public static void main(String[] args) throws Exception {
        HTable table = new HTable(getConfig(), TableName.valueOf("test_table")); // table name: test_table
        Put put = new Put(Bytes.toBytes("row_04")); // row key: row_04
        put.add(Bytes.toBytes("f"), Bytes.toBytes("name"), Bytes.toBytes("Andy1")); // column family f, qualifier name, value Andy1
        // put.add(Bytes.toBytes("f2"), Bytes.toBytes("name"), Bytes.toBytes("Andy3")); // column family f2, qualifier name, value Andy3
        table.put(put);
        table.close();

        // Get get = new Get(Bytes.toBytes("row_04"));
        // get.addColumn(Bytes.toBytes("f1"), Bytes.toBytes("age")); // leave this commented out, as now, and no column is specified: all columns are returned by default
        // Result rest = table.get(get);
        // System.out.println(rest.toString());
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_2"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("email"));
        // delete.deleteColumn(Bytes.toBytes("f1"), Bytes.toBytes("name"));
        // table.delete(delete);
        // table.close();

        // Delete delete = new Delete(Bytes.toBytes("row_03"));
        // delete.deleteColumn(Bytes.toBytes("f"), Bytes.toBytes("name"));  // deletes only the latest version of f:name
        // delete.deleteColumns(Bytes.toBytes("f"), Bytes.toBytes("name")); // deletes all versions of f:name
        // table.delete(delete);
        // table.close();
    }

    public static Configuration getConfig() {
        // HBaseConfiguration.create() also loads hbase-default.xml and hbase-site.xml
        Configuration configuration = HBaseConfiguration.create();
        // configuration.set("hbase.rootdir", "hdfs://HadoopMaster:9000/hbase");
        configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");
        return configuration;
    }
}
```

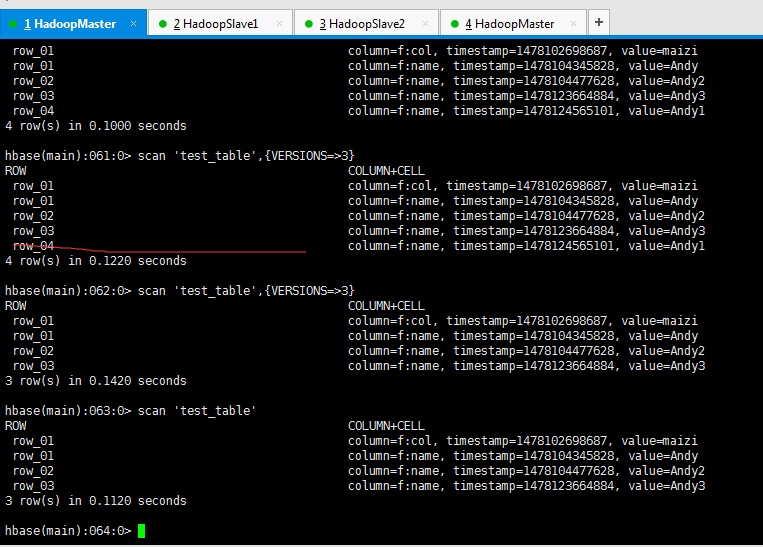
