- 首先创建好我们得项目

--scrapy startproject projectname - 然后在创建你的爬虫启动文件

--scrapy genspider spidername

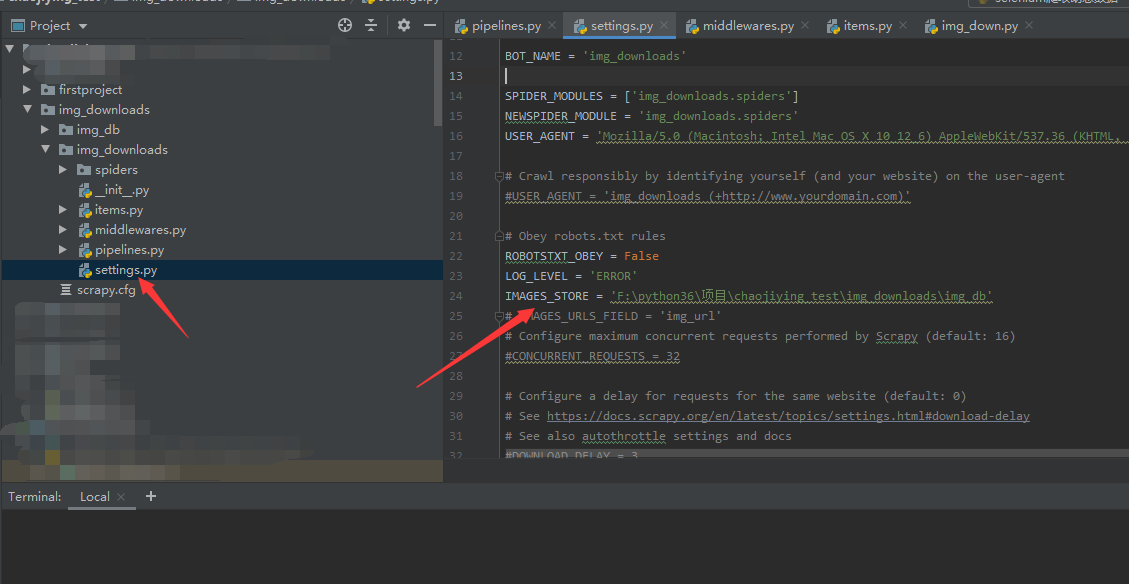

然后进入我们得settings文件下配置我们得携带参数

USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.106 Safari/537.36' ROBOTSTXT_OBEY = False LOG_LEVEL = 'ERROR'

我们也要创建一个存储图片得路径

我们先写启动文件spiders中得代码(不解释这些模块得用法,过于基础)

import scrapy

import requests

from img_downloads.items import ImgDownloadsItem

class ImgDownSpider(scrapy.Spider):

name = 'img_down'

# allowed_domains = ['http://pic.netbian.com/4kdongman/']

start_urls = ['http://pic.netbian.com/new/index.html']

url_model = 'http://pic.netbian.com/new/index_%s.html'

page_num = 2

def parse(self, response):

detail_list = response.xpath('//*[@id="main"]/div[3]/ul/li/a/@href').extract()

for detail in detail_list:

detail_url = 'http://pic.netbian.com' + detail

item = ImgDownloadsItem()

# print(detail_url)

yield scrapy.Request(detail_url, callback=self.parse_detail, meta={'item': item})

if self.page_num < 3:

new_url = self.url_model % self.page_num

print(new_url)

self.page_num += 1

yield scrapy.Request(new_url, callback=self.parse)

def parse_detail(self, response):

upload_img_url_list = response.xpath('//*[@id="img"]/img/@src').extract()

upload_img_name = response.xpath('//*[@id="img"]/img/@title').extract_first()

item = response.meta['item']

for upload_img_url in upload_img_url_list:

# print(upload_img_url)

img_url = 'http://pic.netbian.com' + upload_img_url

# print(img_url)

item['img_src'] = img_url

item['img_name'] = upload_img_name+'.jpg'

# print(upload_img_name)

yield item

然后进入我们得管道文件

from scrapy import Request

from scrapy.pipelines.images import ImagesPipeline

import scrapy

class ImgDownloadsPipeline(ImagesPipeline):

def get_media_requests(self, item, info):

yield scrapy.Request(url=item['img_src'], meta={'item': item})

# 返回图片名称即可

def file_path(self,request,response=None,info=None):

item = request.meta['item']

img_name = item['img_name']

print(img_name,'下载成功!!!')

return img_name

def item_completed(self,results,item,info):

return item

最后回到settings中吧管道配置打开

ITEM_PIPELINES = { 'img_downloads.pipelines.ImgDownloadsPipeline': 300, }