1. Steps to build a MediaPipe AAR

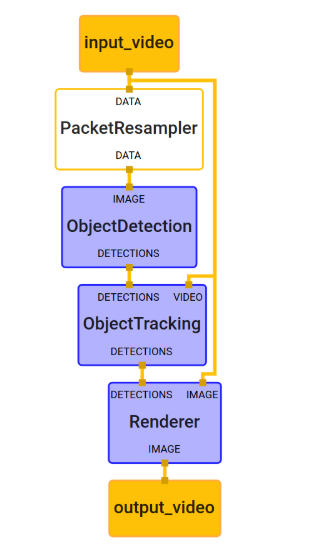

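MediaPipe is a framework for building cross-platform, multimodal applied ML pipelines. It covers fast ML inference, classical computer vision, and media processing such as video decoding. The example graph shown here is a MediaPipe graph for object detection and tracking. It consists of four compute nodes: a PacketResampler calculator; the previously released ObjectDetection subgraph; an ObjectTracking subgraph that wraps the BoxTracking subgraph; and a Renderer subgraph that draws the visualization.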

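The ObjectDetection subgraph runs only on request, for example at an arbitrary frame rate or when triggered by a specific signal. More specifically, in this example the PacketResampler temporally subsamples the video frames to 0.5 fps before they are passed to ObjectDetection. You can set a different frame rate through the PacketResampler options. Between detections, the ObjectTracking subgraph follows the detected boxes, which is why recognition jitters less and object IDs can be maintained across frames.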
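
To make the subsampling step concrete, here is a minimal Java sketch of time-based frame subsampling. It is not MediaPipe's PacketResampler implementation: the class name FrameSubsampler and the method selectTimestamps are hypothetical, and the selection rule (keep a frame once at least one output period has passed since the last kept frame) is a simplifying assumption.

```java
import java.util.ArrayList;
import java.util.List;

final class FrameSubsampler {
    // Returns the timestamps (in microseconds) of the frames that would be
    // forwarded when subsampling to targetFps. Hypothetical helper, not a MediaPipe API.
    static List<Long> selectTimestamps(long[] frameTimestampsUs, double targetFps) {
        long periodUs = Math.round(1_000_000.0 / targetFps);
        List<Long> kept = new ArrayList<>();
        long nextDueUs = Long.MIN_VALUE; // the first frame is always kept
        for (long ts : frameTimestampsUs) {
            if (ts >= nextDueUs) {
                kept.add(ts);
                nextDueUs = ts + periodUs;
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Five seconds of 30 fps input, subsampled to 0.5 fps (one frame every 2 s).
        long[] input = new long[150];
        for (int i = 0; i < input.length; i++) {
            input[i] = i * 1_000_000L / 30;
        }
        System.out.println(selectTimestamps(input, 0.5)); // prints [0, 2000000, 4000000]
    }
}
```

With 0.5 fps, only 3 of the 150 input frames reach the detector in this sketch; every other frame is left to the tracker.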

1.1. Install the MediaPipe framework

See the guide to setting up the MediaPipe environment on Ubuntu (https://www.cnblogs.com/zhongzhaoxie/p/13359340.html)

1.2. Build the MediaPipe AAR package

Create the build file that MediaPipe uses to generate the Android AAR, with the following commands.

cd mediapipe/examples/android/src/java/com/google/mediapipe/apps/

mkdir buid_aar && cd buid_aar

vim BUILD

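The contents of the BUILD file are shown below. name is the name of the generated AAR, and calculators lists the model and calculators to use. Other models and their calculators can be found under mediapipe/graphs/, which holds the models MediaPipe supports. The hand_tracking directory contains the model used here; to see which calculators it offers, look at the cc_library targets in that directory's BUILD file. Because we are deploying to Android, choose the mobile calculators. This tutorial uses mobile_calculators, which detects the keypoints of a single hand; to detect multiple hands, change it to multi_hand_mobile_calculators.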

load("//mediapipe/java/com/google/mediapipe:mediapipe_aar.bzl", "mediapipe_aar")

mediapipe_aar(

name = "mediapipe_hand_tracking",

calculators = ["//mediapipe/graphs/hand_tracking:mobile_calculators"],

)

Return to the mediapipe root directory and run the following commands to build the Android AAR. On success, the file bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar/mediapipe_hand_tracking.aar is generated.

chmod -R 755 mediapipe/

bazel build -c opt --host_crosstool_top=@bazel_tools//tools/cpp:toolchain --fat_apk_cpu=arm64-v8a,armeabi-v7a //mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar:mediapipe_hand_tracking

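Run the following command to build the MediaPipe binary graph. The target again comes from the BUILD file in the graph directory mentioned above: the path stays the same and only the part after the path changes. This time, look for the name of the mediapipe_binary_graph rule that matches the model you use. The target below likewise detects the keypoints of a single hand; for multiple hands, use multi_hand_tracking_mobile_gpu_binary_graph. On success, bazel-bin/mediapipe/graphs/hand_tracking/hand_tracking_mobile_gpu.binarypb is generated.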

bazel build -c opt mediapipe/graphs/hand_tracking:hand_tracking_mobile_gpu_binary_graph

2. Steps to use the MediaPipe AAR in Android Studio

(1) Create an empty project named TestMediaPipe in Android Studio.

(2) Copy the AAR built in the previous step into app/libs/. The file is at the following path under the mediapipe root directory:

bazel-bin/mediapipe/examples/android/src/java/com/google/mediapipe/apps/buid_aar/mediapipe_hand_tracking.aar

(3) Copy the following files into app/src/main/assets/.

bazel-bin/mediapipe/graphs/hand_tracking/hand_tracking_mobile_gpu.binarypb

mediapipe/models/handedness.txt

mediapipe/models/hand_landmark.tflite

mediapipe/models/palm_detection.tflite

mediapipe/models/palm_detection_labelmap.txt
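
A missing asset usually only surfaces at runtime, when the graph fails to load, so it can be worth checking the assets directory before building the APK. The following is a minimal sketch meant to run on the development machine, not on the device. The class name AssetChecker and the method missingAssets are hypothetical; the file names are the five listed above.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;

final class AssetChecker {
    // The five files this tutorial copies into app/src/main/assets/.
    static final List<String> REQUIRED = Arrays.asList(
        "hand_tracking_mobile_gpu.binarypb",
        "handedness.txt",
        "hand_landmark.tflite",
        "palm_detection.tflite",
        "palm_detection_labelmap.txt");

    // Returns the required file names that are absent from presentNames.
    static List<String> missingAssets(Collection<String> presentNames) {
        List<String> missing = new ArrayList<>(REQUIRED);
        missing.removeAll(presentNames);
        return missing;
    }

    public static void main(String[] args) throws IOException {
        // Default path assumes the command is run from the Android project root.
        Path dir = Paths.get(args.length > 0 ? args[0] : "app/src/main/assets");
        List<String> present = new ArrayList<>();
        if (Files.isDirectory(dir)) {
            try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir)) {
                for (Path p : stream) {
                    present.add(p.getFileName().toString());
                }
            }
        }
        List<String> missing = missingAssets(present);
        System.out.println(missing.isEmpty() ? "All assets present" : "Missing: " + missing);
    }
}
```

Run it with the assets directory as the only argument, or with no argument from the project root.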

(4) Download the OpenCV SDK from the address below and unzip it, then copy the arm64-v8a and armeabi-v7a directories from OpenCV-android-sdk/sdk/native/libs/ into app/src/main/jniLibs/ in the Android project. (https://github.com/opencv/opencv/releases/download/3.4.3/opencv-3.4.3-android-sdk.zip)

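(5) Add the following dependencies to app/build.gradle. Besides the new libraries, add '*.aar' to the fileTree include list on the first line; otherwise the project will not compile. The project must also be set to use Java 1.8.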

dependencies {

implementation fileTree(dir: "libs", include: ["*.jar", '*.aar'])

implementation 'androidx.appcompat:appcompat:1.1.0'

implementation 'androidx.constraintlayout:constraintlayout:1.1.3'

testImplementation 'junit:junit:4.13'

androidTestImplementation 'androidx.test.ext:junit:1.1.1'

androidTestImplementation 'androidx.test.espresso:espresso-core:3.2.0'

// MediaPipe deps

implementation 'com.google.flogger:flogger:0.3.1'

implementation 'com.google.flogger:flogger-system-backend:0.3.1'

implementation 'com.google.code.findbugs:jsr305:3.0.2'

implementation 'com.google.guava:guava:27.0.1-android'

implementation 'com.google.protobuf:protobuf-java:3.11.4'

// CameraX core library

implementation "androidx.camera:camera-core:1.0.0-alpha06"

implementation "androidx.camera:camera-camera2:1.0.0-alpha06"

}

// Add inside the android { } block

compileOptions {

targetCompatibility = 1.8

sourceCompatibility = 1.8

}

(6) Add the camera permission to the AndroidManifest.xml configuration file.

<!-- For using the camera -->

<uses-permission android:name="android.permission.CAMERA" />

<uses-feature android:name="android.hardware.camera" />

<uses-feature android:name="android.hardware.camera.autofocus" />

<!-- For MediaPipe -->

<uses-feature android:glEsVersion="0x00020000" android:required="true" />

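(7) Update the layout and the activity code, activity_main.xml and MainActivity.java, as follows. activity_main.xml comes first. Its structure is simple: a FrameLayout wrapping a TextView, inside a root LinearLayout. The TextView is visible only if the camera fails to start; normally the FrameLayout shows the video from the camera.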

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent">

<FrameLayout

android:id="@+id/preview_display_layout"

android:layout_width="match_parent"

android:layout_height="match_parent">

<TextView

android:id="@+id/no_camera_access_view"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:gravity="center"

android:text="Failed to connect to the camera" />

</FrameLayout>

</LinearLayout>

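MainActivity.java follows. For the output stream names of a model, check the corresponding Java code under mediapipe/examples/android/src/java/com/google/mediapipe/apps/. For example, the output stream for multiple hands is named multi_hand_landmarks.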

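// Imports used by this activity; the package declaration of your own project goes above them.

import android.graphics.SurfaceTexture;

import android.os.Bundle;

import android.util.Log;

import android.util.Size;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

import android.view.View;

import android.view.ViewGroup;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import com.google.mediapipe.components.CameraHelper;

import com.google.mediapipe.components.CameraXPreviewHelper;

import com.google.mediapipe.components.ExternalTextureConverter;

import com.google.mediapipe.components.FrameProcessor;

import com.google.mediapipe.components.PermissionHelper;

import com.google.mediapipe.formats.proto.LandmarkProto.NormalizedLandmark;

import com.google.mediapipe.formats.proto.LandmarkProto.NormalizedLandmarkList;

import com.google.mediapipe.framework.AndroidAssetUtil;

import com.google.mediapipe.framework.PacketGetter;

import com.google.mediapipe.glutil.EglManager;

import com.google.protobuf.InvalidProtocolBufferException;

public class MainActivity extends AppCompatActivity {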

private static final String TAG = "MainActivity";

// Asset file name and graph stream names

private static final String BINARY_GRAPH_NAME = "hand_tracking_mobile_gpu.binarypb";

private static final String INPUT_VIDEO_STREAM_NAME = "input_video";

private static final String OUTPUT_VIDEO_STREAM_NAME = "output_video";

private static final String OUTPUT_HAND_PRESENCE_STREAM_NAME = "hand_presence";

private static final String OUTPUT_LANDMARKS_STREAM_NAME = "hand_landmarks";

private SurfaceTexture previewFrameTexture;

private SurfaceView previewDisplayView;

private EglManager eglManager;

private FrameProcessor processor;

private ExternalTextureConverter converter;

private CameraXPreviewHelper cameraHelper;

private boolean handPresence;

// Which camera to use

private static final boolean USE_FRONT_CAMERA = false;

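// OpenGL assumes the image origin is at the bottom-left corner, whereas MediaPipe generally assumes it is at the top-left, so frames are flipped vertically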

private static final boolean FLIP_FRAMES_VERTICALLY = true;

// Load the native libraries

static {

System.loadLibrary("mediapipe_jni");

System.loadLibrary("opencv_java3");

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

previewDisplayView = new SurfaceView(this);

setupPreviewDisplayView();

// Request the camera permission

PermissionHelper.checkAndRequestCameraPermissions(this);

// Initialize the asset manager so that MediaPipe can access the app's assets

AndroidAssetUtil.initializeNativeAssetManager(this);

eglManager = new EglManager(null);

// Create a frame processor by loading the binary graph

processor = new FrameProcessor(this,

eglManager.getNativeContext(),

BINARY_GRAPH_NAME,

INPUT_VIDEO_STREAM_NAME,

OUTPUT_VIDEO_STREAM_NAME);

processor.getVideoSurfaceOutput().setFlipY(FLIP_FRAMES_VERTICALLY);

// Receive the model output that says whether a hand was detected

processor.addPacketCallback(

OUTPUT_HAND_PRESENCE_STREAM_NAME,

(packet) -> {

handPresence = PacketGetter.getBool(packet);

if (!handPresence) {

Log.d(TAG, "[TS:" + packet.getTimestamp() + "] Hand presence is false, no hands detected.");

}

});

// Receive the model output with the hand landmarks

processor.addPacketCallback(

OUTPUT_LANDMARKS_STREAM_NAME,

(packet) -> {

byte[] landmarksRaw = PacketGetter.getProtoBytes(packet);

try {

NormalizedLandmarkList landmarks = NormalizedLandmarkList.parseFrom(landmarksRaw);

if (landmarks == null || !handPresence) {

Log.d(TAG, "[TS:" + packet.getTimestamp() + "] No hand landmarks.");

return;

}

// The landmarks are only meaningful when a hand was detected, hence the check above

Log.d(TAG,

"[TS:" + packet.getTimestamp()

+ "] #Landmarks for hand: "

+ landmarks.getLandmarkCount());

Log.d(TAG, getLandmarksDebugString(landmarks));

} catch (InvalidProtocolBufferException e) {

Log.e(TAG, "Couldn't parse hand landmarks - " + e);

}

});

}

@Override

protected void onResume() {

super.onResume();

converter = new ExternalTextureConverter(eglManager.getContext());

converter.setFlipY(FLIP_FRAMES_VERTICALLY);

converter.setConsumer(processor);

if (PermissionHelper.cameraPermissionsGranted(this)) {

startCamera();

}

}

@Override

protected void onPause() {

super.onPause();

converter.close();

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

PermissionHelper.onRequestPermissionsResult(requestCode, permissions, grantResults);

}

// Compute the preview size (here simply the view size)

protected Size computeViewSize(int width, int height) {

return new Size(width, height);

}

protected void onPreviewDisplaySurfaceChanged(SurfaceHolder holder, int format, int width, int height) {

// Set the preview size

Size viewSize = computeViewSize(width, height);

Size displaySize = cameraHelper.computeDisplaySizeFromViewSize(viewSize);

// Swap width and height if the camera image is rotated

boolean isCameraRotated = cameraHelper.isCameraRotated();

converter.setSurfaceTextureAndAttachToGLContext(

previewFrameTexture,

isCameraRotated ? displaySize.getHeight() : displaySize.getWidth(),

isCameraRotated ? displaySize.getWidth() : displaySize.getHeight());

}

private void setupPreviewDisplayView() {

previewDisplayView.setVisibility(View.GONE);

ViewGroup viewGroup = findViewById(R.id.preview_display_layout);

viewGroup.addView(previewDisplayView);

previewDisplayView

.getHolder()

.addCallback(

new SurfaceHolder.Callback() {

@Override

public void surfaceCreated(SurfaceHolder holder) {

processor.getVideoSurfaceOutput().setSurface(holder.getSurface());

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

onPreviewDisplaySurfaceChanged(holder, format, width, height);

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

processor.getVideoSurfaceOutput().setSurface(null);

}

});

}

// Called once the camera has started

protected void onCameraStarted(SurfaceTexture surfaceTexture) {

// Show the preview

previewFrameTexture = surfaceTexture;

previewDisplayView.setVisibility(View.VISIBLE);

}

// Target camera resolution; null leaves the choice to the camera helper

protected Size cameraTargetResolution() {

return null;

}

// Start the camera

public void startCamera() {

cameraHelper = new CameraXPreviewHelper();

cameraHelper.setOnCameraStartedListener(this::onCameraStarted);

CameraHelper.CameraFacing cameraFacing =

USE_FRONT_CAMERA ? CameraHelper.CameraFacing.FRONT : CameraHelper.CameraFacing.BACK;

cameraHelper.startCamera(this, cameraFacing, null, cameraTargetResolution());

}

// Format the landmarks as a debug string

private static String getLandmarksDebugString(NormalizedLandmarkList landmarks) {

int landmarkIndex = 0;

StringBuilder landmarksString = new StringBuilder();

for (NormalizedLandmark landmark : landmarks.getLandmarkList()) {

landmarksString.append(" Landmark[").append(landmarkIndex).append("]: (").append(landmark.getX()).append(", ").append(landmark.getY()).append(", ").append(landmark.getZ()).append(")\n");

++landmarkIndex;

}

return landmarksString.toString();

}

}
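
The x and y values logged by getLandmarksDebugString are normalized to the image width and height, so they have to be scaled before you can draw them on a frame yourself. The sketch below shows that conversion under a few assumptions: the class name LandmarkPixels and the method toPixel are hypothetical, plain floats stand in for NormalizedLandmark so the code runs without MediaPipe, and the 640x480 frame size is only an example.

```java
final class LandmarkPixels {
    // Converts a normalized (x, y) pair to pixel coordinates, clamped to the
    // image bounds because a landmark can fall slightly outside [0, 1].
    static int[] toPixel(float normX, float normY, int imageWidth, int imageHeight) {
        int px = Math.round(normX * imageWidth);
        int py = Math.round(normY * imageHeight);
        px = Math.max(0, Math.min(imageWidth - 1, px));
        py = Math.max(0, Math.min(imageHeight - 1, py));
        return new int[] {px, py};
    }

    public static void main(String[] args) {
        // In the activity, the inputs would be landmark.getX() and landmark.getY().
        int[] p = toPixel(0.5f, 0.25f, 640, 480);
        System.out.println(p[0] + ", " + p[1]); // prints "320, 120"
    }
}
```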

The result is shown below:
