1. Deploying ssd-caffe

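The machine is an old laptop bought five and a half years ago. It has no usable GPU: there is one, but it is an AMD card, so CUDA cannot be installed. The CPU-only version of Caffe had already been installed on it.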

Create a new directory, then follow the steps found online:

- sudo git clone https://github.com/weiliu89/caffe.git

- cd caffe

- sudo git checkout ssd (if it prints the branch it switched to, the checkout is correct)

- sudo cp <previously deployed caffe directory>/Makefile.config Makefile.config (the first time, I tried editing Makefile.config by hand instead, and the build failed)

- sudo make all -j4

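- sudo make runtest -j4 (a separate make test should have come between steps 5 and 6; I misread the line, and make runtest ended up building and running the tests in one go)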

- sudo make pycaffe -j4

- cd python

- python

- import caffe

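So the CPU version of SSD is easy to set up on a machine where Caffe is already deployed. Windows is another story: Microsoft's Caffe deployed successfully with Win10 + CUDA 8.0 + VS2013 + Python 2.7 + MATLAB 2014, but building ssd-caffe failed in various ways that I never resolved.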

2. Trying ssd_detect.py

The script lives in caffe/examples/ssd; change into that directory.

Running python ssd_detect.py fails with an import caffe error.

Edit the path specified inside ssd_detect.py:

sys.path.insert(0, './../../python')
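The path './../../python' is resolved against the current working directory, so that edit only helps when the script is launched from caffe/examples/ssd. A minimal sketch that resolves the path from the script's own location instead, assuming the script stays exactly two levels below the Caffe root (the helper name caffe_python_dir is my own, not part of Caffe):

```python
import os


def caffe_python_dir(script_path):
    """Return <caffe root>/python for a script at <caffe root>/examples/ssd/<name>.py.

    Assumes the script sits exactly two directories below the Caffe root.
    """
    script_dir = os.path.dirname(os.path.abspath(script_path))
    return os.path.normpath(os.path.join(script_dir, "..", "..", "python"))


# Usage inside ssd_detect.py, before "import caffe":
#   import sys
#   sys.path.insert(0, caffe_python_dir(__file__))
```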

The error then changes to: cannot use GPU in CPU only caffe

So change the GPU-related code to:

#caffe.set_device(gpu_id)

caffe.set_mode_cpu()
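To keep the same script usable on a machine that does have a CUDA build, the mode can be chosen from a parameter instead of editing the code each time. The sketch below is minimal and assumes that gpu_id=None means "CPU only", which is my own convention. caffe.set_mode_cpu(), caffe.set_device() and caffe.set_mode_gpu() are the pycaffe calls involved here. The module is passed in as an argument so the function can be tried without Caffe installed:

```python
def configure_caffe_mode(caffe_module, gpu_id=None):
    """Switch pycaffe to CPU mode, or to GPU mode on device gpu_id.

    gpu_id=None selects CPU mode, which is what a CPU_ONLY build needs.
    """
    if gpu_id is None:
        caffe_module.set_mode_cpu()
        return "cpu"
    caffe_module.set_device(gpu_id)
    caffe_module.set_mode_gpu()
    return "gpu"


# Usage with the real module:
#   import caffe
#   configure_caffe_mode(caffe)            # CPU-only laptop
#   configure_caffe_mode(caffe, gpu_id=0)  # machine with a CUDA build
```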

Now the error becomes /modules/VGGNet/...

Following http://blog.csdn.net/u013738531/article/details/56678247, download the model and data from the cloud-drive link provided there.

sudo gedit ./models/VGGNet/VOC0712Plus/SSD_300x300/deploy.prototxt

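Edit it as that post shows. It then complains that test_name_size.txt cannot be found: no training had been done yet, so the data and file lists had never been generated.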

-----------------2018.02.02 On a new server, built following step 1---------------------------------

After downloading the author's model and placing it under /models/, simply run python examples/ssd/ssd_detect.py from the caffe directory. Nothing needs to be changed.

3. Generating the data

sudo ./data/VOC0712/create_list.sh

This throws all kinds of errors, mostly because the paths are wrong.

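Extract the downloaded data (the three archives: VOC2007 trainval, VOC2007 test, and VOC2012) into the data directory of this SSD version of Caffe (caffe/data). Extraction creates a directory named VOCdevkit.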
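Most of the create_list.sh errors came from wrong paths, so it can save time to check the extracted layout first. The sketch below is minimal. It assumes each year folder inside VOCdevkit contains Annotations, JPEGImages and ImageSets/Main, which is the standard VOC layout as far as I know. The function name is my own:

```python
import os

# Sub-directories expected inside each VOCdevkit/<year> folder (assumed layout).
VOC_SUBDIRS = ("Annotations", "JPEGImages", os.path.join("ImageSets", "Main"))


def missing_voc_dirs(root_dir, years=("VOC2007", "VOC2012")):
    """Return the expected VOC sub-directories that do not exist under root_dir."""
    missing = []
    for year in years:
        for sub in VOC_SUBDIRS:
            path = os.path.join(root_dir, year, sub)
            if not os.path.isdir(path):
                missing.append(path)
    return missing


# Usage from the Caffe root (a relative root_dir is resolved from the current directory):
#   print(missing_voc_dirs("./data/VOCdevkit"))  # an empty list means the layout looks right
```

A relative root_dir such as ./data/VOCdevkit is resolved from wherever the shell script is started. That is presumably why create_list.sh has to be run from the Caffe root.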

sudo gedit ./data/VOC0712/create_list.sh

Set root_dir=./data/VOCdevkit

Then, from the root of this SSD version of Caffe, run sudo ./data/VOC0712/create_list.sh

test_name_size.txt is now generated successfully.

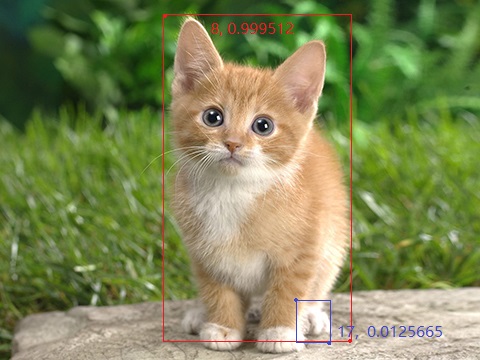

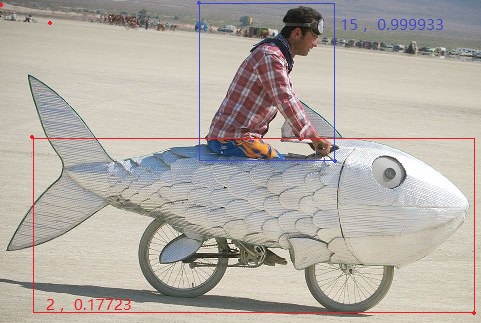
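test_name_size.txt is the file that the edited deploy.prototxt could not find in section 2. From my reading of create_list.sh, each line holds an image name followed by its height and width. Treat that column order as an assumption and check it against your own file. Below is a small parser sketch; the function name is my own:

```python
def read_name_size(lines):
    """Parse 'name height width' lines into {name: (height, width)}.

    The column order is an assumption about the file written by create_list.sh.
    """
    sizes = {}
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        name, height, width = parts[0], int(parts[1]), int(parts[2])
        sizes[name] = (height, width)
    return sizes


# Usage (path assumed to be where create_list.sh wrote the file):
#   with open("data/VOC0712/test_name_size.txt") as f:
#       sizes = read_name_size(f)
#   print(len(sizes))  # number of test images listed
```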

4. Testing one (or several) images with ssd_detect.bin

Still in the root of this SSD version of Caffe:

song@song-Lenovo-G470:/home/ssd/caffe$ sudo ./build/examples/ssd/ssd_detect.bin ./models/VGGNet/VOC0712Plus/SSD_300x300/deploy.prototxt ./models/VGGNet/VOC0712Plus/SSD_300x300/VGG_VOC0712Plus_SSD_300x300_iter_240000.caffemodel ./examples/images/test.txt

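It fails with an error saying num_test_image does not match, so once again run

sudo gedit ./models/VGGNet/VOC0712Plus/SSD_300x300/deploy.prototxt

and adjust that value; then it works. ./examples/images/test.txt was created by hand beforehand and contains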

examples/images/cat.jpg

examples/images/fish-bike.jpg
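My guess, which I have not verified, is that the num_test_image complaint means the value in deploy.prototxt did not agree with the number of images actually fed in. As far as I can tell, the released file is sized for the full VOC test set. I'm not certain which check fired. Counting the non-empty lines of the hand-written list gives the number to compare against. A minimal sketch, with a function name of my own:

```python
def count_listed_images(lines):
    """Count non-empty lines in an image list such as examples/images/test.txt."""
    return sum(1 for line in lines if line.strip())


# Usage:
#   with open("examples/images/test.txt") as f:
#       print(count_listed_images(f))  # compare with num_test_image in deploy.prototxt
```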

The old laptop's fan whirred for a while (maybe a minute?), and then it finished.

Results:

examples/images/cat.jpg 8 0.999512 166 17 350 342

examples/images/cat.jpg 17 0.0125665 297 301 333 346

examples/images/cat.jpg 17 0.010942 11 304 91 356

examples/images/fish-bike.jpg 1 0.10214 1 5 49 23

examples/images/fish-bike.jpg 1 0.0192221 13 8 36 23

examples/images/fish-bike.jpg 1 0.0136562 453 43 477 58

examples/images/fish-bike.jpg 1 0.0128765 22 133 464 315

examples/images/fish-bike.jpg 1 0.0121259 16 4 42 18

examples/images/fish-bike.jpg 1 0.0109429 237 110 315 160

examples/images/fish-bike.jpg 1 0.0106769 0 -10 65 22

examples/images/fish-bike.jpg 1 0.0101953 447 43 466 56

examples/images/fish-bike.jpg 2 0.17723 34 136 474 313

examples/images/fish-bike.jpg 2 0.0162963 92 260 235 305

examples/images/fish-bike.jpg 2 0.010479 48 243 175 293

examples/images/fish-bike.jpg 4 0.0434637 22 133 464 315

examples/images/fish-bike.jpg 7 0.0490763 454 46 480 60

examples/images/fish-bike.jpg 7 0.0285298 434 42 448 55

examples/images/fish-bike.jpg 7 0.0273768 426 43 440 55

examples/images/fish-bike.jpg 7 0.0238768 219 21 235 34

examples/images/fish-bike.jpg 7 0.0224352 197 18 209 34

examples/images/fish-bike.jpg 7 0.0222405 203 19 215 33

examples/images/fish-bike.jpg 7 0.0203195 434 46 463 59

examples/images/fish-bike.jpg 7 0.0202004 427 46 446 58

examples/images/fish-bike.jpg 7 0.0190711 221 25 233 35

examples/images/fish-bike.jpg 7 0.0188878 206 24 219 34

examples/images/fish-bike.jpg 7 0.0186781 233 22 254 35

examples/images/fish-bike.jpg 7 0.0181446 222 28 239 38

examples/images/fish-bike.jpg 7 0.0176258 413 42 423 53

examples/images/fish-bike.jpg 7 0.0167608 442 43 474 60

examples/images/fish-bike.jpg 7 0.0162482 429 50 439 57

examples/images/fish-bike.jpg 7 0.0162143 209 28 227 38

examples/images/fish-bike.jpg 7 0.0160853 438 50 452 58

examples/images/fish-bike.jpg 7 0.0156584 403 41 414 52

examples/images/fish-bike.jpg 7 0.0150175 212 18 224 31

examples/images/fish-bike.jpg 7 0.0148612 419 46 433 56

examples/images/fish-bike.jpg 7 0.0145784 450 49 471 59

examples/images/fish-bike.jpg 7 0.0138213 427 47 435 55

examples/images/fish-bike.jpg 7 0.0134302 229 18 242 30

examples/images/fish-bike.jpg 7 0.0133286 438 46 448 56

examples/images/fish-bike.jpg 7 0.0128786 441 54 461 62

examples/images/fish-bike.jpg 7 0.0128691 393 41 403 51

examples/images/fish-bike.jpg 7 0.0126478 416 50 425 57

examples/images/fish-bike.jpg 7 0.0118132 199 28 216 38

examples/images/fish-bike.jpg 7 0.0118091 407 46 419 55

examples/images/fish-bike.jpg 7 0.0116668 236 29 255 40

examples/images/fish-bike.jpg 7 0.011606 404 50 412 57

examples/images/fish-bike.jpg 7 0.0115945 431 54 445 62

examples/images/fish-bike.jpg 7 0.0114097 188 24 198 33

examples/images/fish-bike.jpg 7 0.0113932 402 47 408 55

examples/images/fish-bike.jpg 7 0.0113243 416 41 429 54

examples/images/fish-bike.jpg 7 0.0113237 413 47 420 55

examples/images/fish-bike.jpg 7 0.0105125 402 38 411 50

examples/images/fish-bike.jpg 7 0.0104816 447 43 466 56

examples/images/fish-bike.jpg 9 0.0131654 293 255 373 312

examples/images/fish-bike.jpg 12 0.017695 259 110 334 161

examples/images/fish-bike.jpg 12 0.014104 291 126 335 158

examples/images/fish-bike.jpg 14 0.0288007 27 130 459 314

examples/images/fish-bike.jpg 15 0.999933 200 3 336 162

examples/images/fish-bike.jpg 15 0.0887778 267 5 330 70

examples/images/fish-bike.jpg 15 0.079791 182 40 347 271

examples/images/fish-bike.jpg 15 0.073601 238 119 315 158

examples/images/fish-bike.jpg 15 0.0449383 232 131 288 161

examples/images/fish-bike.jpg 15 0.0401796 196 17 207 32

examples/images/fish-bike.jpg 15 0.0395075 177 16 188 31

examples/images/fish-bike.jpg 15 0.0321272 144 13 153 30

examples/images/fish-bike.jpg 15 0.0313811 187 18 195 30

examples/images/fish-bike.jpg 15 0.0285742 211 103 286 161

examples/images/fish-bike.jpg 15 0.027668 201 17 213 31

examples/images/fish-bike.jpg 15 0.0267013 400 40 412 53

examples/images/fish-bike.jpg 15 0.0264357 390 40 400 52

examples/images/fish-bike.jpg 15 0.0260199 262 98 336 159

examples/images/fish-bike.jpg 15 0.0260001 156 16 165 31

examples/images/fish-bike.jpg 15 0.0259439 278 9 313 60

examples/images/fish-bike.jpg 15 0.0258899 387 39 396 48

examples/images/fish-bike.jpg 15 0.0257002 166 16 177 32

examples/images/fish-bike.jpg 15 0.0232971 162 15 172 30

examples/images/fish-bike.jpg 15 0.0230115 400 39 409 48

examples/images/fish-bike.jpg 15 0.0229916 133 12 143 30

examples/images/fish-bike.jpg 15 0.0229652 298 17 322 58

examples/images/fish-bike.jpg 15 0.0227231 189 16 198 27

examples/images/fish-bike.jpg 15 0.0222746 148 13 157 31

examples/images/fish-bike.jpg 15 0.021702 336 39 345 49

examples/images/fish-bike.jpg 15 0.0216578 373 38 381 46

examples/images/fish-bike.jpg 15 0.0212417 407 41 419 54

examples/images/fish-bike.jpg 15 0.0212224 357 38 364 45

examples/images/fish-bike.jpg 15 0.0212188 191 17 202 32

examples/images/fish-bike.jpg 15 0.0208593 378 39 386 51

examples/images/fish-bike.jpg 15 0.0207649 151 15 163 30

examples/images/fish-bike.jpg 15 0.0207481 412 39 420 51

examples/images/fish-bike.jpg 15 0.0203485 381 39 389 50

examples/images/fish-bike.jpg 15 0.0201077 333 36 342 46

examples/images/fish-bike.jpg 15 0.0199127 255 113 294 155

examples/images/fish-bike.jpg 15 0.0196864 394 39 405 53

examples/images/fish-bike.jpg 15 0.019666 336 36 344 44

examples/images/fish-bike.jpg 15 0.0193763 326 31 336 43

examples/images/fish-bike.jpg 15 0.0192419 273 7 329 39

examples/images/fish-bike.jpg 15 0.01892 351 38 357 47

examples/images/fish-bike.jpg 15 0.0185818 330 35 338 47

examples/images/fish-bike.jpg 15 0.0183361 349 39 355 49

examples/images/fish-bike.jpg 15 0.0181116 204 15 213 22

examples/images/fish-bike.jpg 15 0.0178212 255 20 320 91

examples/images/fish-bike.jpg 15 0.0174843 375 40 383 50

examples/images/fish-bike.jpg 15 0.0172716 254 23 273 38

examples/images/fish-bike.jpg 15 0.0172101 193 14 203 23

examples/images/fish-bike.jpg 15 0.0171742 221 21 231 31

examples/images/fish-bike.jpg 15 0.0169892 174 13 185 23

examples/images/fish-bike.jpg 15 0.0165922 343 37 350 45

examples/images/fish-bike.jpg 15 0.0162931 242 20 259 36

examples/images/fish-bike.jpg 15 0.0161449 197 15 205 24

examples/images/fish-bike.jpg 15 0.0161009 274 27 304 82

examples/images/fish-bike.jpg 15 0.0159696 368 38 374 48

examples/images/fish-bike.jpg 15 0.0158297 312 30 331 47

examples/images/fish-bike.jpg 15 0.0156176 185 14 192 22

examples/images/fish-bike.jpg 15 0.01529 119 11 132 29

examples/images/fish-bike.jpg 15 0.0151928 212 18 224 31

examples/images/fish-bike.jpg 15 0.0150705 416 41 429 54

examples/images/fish-bike.jpg 15 0.014883 342 31 351 39

examples/images/fish-bike.jpg 15 0.014865 207 17 217 26

examples/images/fish-bike.jpg 15 0.0147874 215 130 265 159

examples/images/fish-bike.jpg 15 0.0146961 419 40 428 49

examples/images/fish-bike.jpg 15 0.0146003 168 13 177 22

examples/images/fish-bike.jpg 15 0.0143927 364 40 371 48

examples/images/fish-bike.jpg 15 0.0142413 156 13 164 22

examples/images/fish-bike.jpg 15 0.0139997 358 32 366 39

examples/images/fish-bike.jpg 15 0.0137995 284 27 317 86

examples/images/fish-bike.jpg 15 0.0137608 168 22 176 31

examples/images/fish-bike.jpg 15 0.0137498 232 22 245 32

examples/images/fish-bike.jpg 15 0.0137089 262 19 289 42

examples/images/fish-bike.jpg 15 0.0136703 222 15 230 23

examples/images/fish-bike.jpg 15 0.0136547 242 26 265 42

examples/images/fish-bike.jpg 15 0.0134454 217 15 226 22

examples/images/fish-bike.jpg 15 0.0130665 236 122 267 155

examples/images/fish-bike.jpg 15 0.0128749 402 47 408 55

examples/images/fish-bike.jpg 15 0.0128543 142 11 152 23

examples/images/fish-bike.jpg 15 0.012737 255 26 299 50

examples/images/fish-bike.jpg 15 0.0127311 248 25 279 49

examples/images/fish-bike.jpg 15 0.0126138 301 20 328 42

examples/images/fish-bike.jpg 15 0.0126017 324 34 334 49

examples/images/fish-bike.jpg 15 0.0125893 285 2 349 51

examples/images/fish-bike.jpg 15 0.0124293 355 39 363 48

examples/images/fish-bike.jpg 15 0.0123481 291 126 335 158

examples/images/fish-bike.jpg 15 0.0121919 311 36 333 54

examples/images/fish-bike.jpg 15 0.0121331 225 18 234 26

examples/images/fish-bike.jpg 15 0.0121244 185 21 193 31

examples/images/fish-bike.jpg 15 0.0120606 423 43 436 55

examples/images/fish-bike.jpg 15 0.0120263 313 18 333 35

examples/images/fish-bike.jpg 15 0.0118891 2 5 16 22

examples/images/fish-bike.jpg 15 0.0118119 121 17 130 28

examples/images/fish-bike.jpg 15 0.0118004 257 26 269 37

examples/images/fish-bike.jpg 15 0.0117509 50 102 431 313

examples/images/fish-bike.jpg 15 0.011688 291 3 312 22

examples/images/fish-bike.jpg 15 0.0113686 332 32 344 42

examples/images/fish-bike.jpg 15 0.0113332 380 38 386 44

examples/images/fish-bike.jpg 15 0.0112034 386 34 393 39

examples/images/fish-bike.jpg 15 0.0112005 413 47 420 55

examples/images/fish-bike.jpg 15 0.0111634 305 30 326 55

examples/images/fish-bike.jpg 15 0.0111193 367 37 373 43

examples/images/fish-bike.jpg 15 0.0110268 373 33 380 39

examples/images/fish-bike.jpg 15 0.0109819 393 39 400 45

examples/images/fish-bike.jpg 15 0.0109263 271 32 315 58

examples/images/fish-bike.jpg 15 0.0109096 230 22 297 87

examples/images/fish-bike.jpg 15 0.0108865 399 35 406 40

examples/images/fish-bike.jpg 15 0.0108715 146 22 152 31

examples/images/fish-bike.jpg 15 0.0105892 329 39 342 51

examples/images/fish-bike.jpg 15 0.010463 251 34 302 60

examples/images/fish-bike.jpg 15 0.0103912 229 18 242 30

examples/images/fish-bike.jpg 15 0.0102471 273 16 296 32

examples/images/fish-bike.jpg 15 0.010216 250 105 280 148

examples/images/fish-bike.jpg 15 0.0100929 233 16 242 23

examples/images/fish-bike.jpg 15 0.010052 242 24 253 33

examples/images/fish-bike.jpg 16 0.0153302 297 254 386 312

examples/images/fish-bike.jpg 19 0.0166149 27 130 459 314

I picked the high-probability detections and drew them by hand:

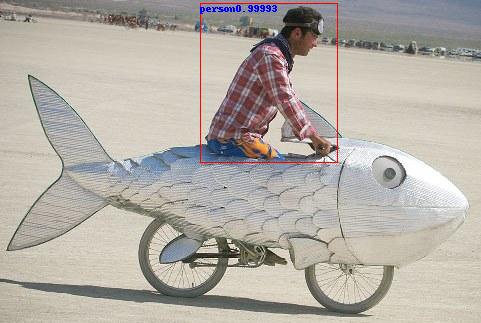
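Each line of the .bin output reads: image path, label index, score, then xmin ymin xmax ymax in pixels. With the standard 21-entry PASCAL VOC label map, 8 is cat, 15 is person, 2 is bicycle and 7 is car. Instead of picking the high-probability lines by eye, they can be filtered by a score threshold. Below is a minimal sketch; the 0.5 threshold and the helper names are my own choices:

```python
from collections import namedtuple

# PASCAL VOC label map: index 0 is background, 1..20 are the object classes.
VOC_LABELS = ("background", "aeroplane", "bicycle", "bird", "boat", "bottle",
              "bus", "car", "cat", "chair", "cow", "diningtable", "dog",
              "horse", "motorbike", "person", "pottedplant", "sheep", "sofa",
              "train", "tvmonitor")

Detection = namedtuple("Detection", "image label score xmin ymin xmax ymax")


def parse_detection(line):
    """Parse 'image label score xmin ymin xmax ymax' from ssd_detect.bin output."""
    image, label, score, xmin, ymin, xmax, ymax = line.split()
    return Detection(image, int(label), float(score),
                     int(xmin), int(ymin), int(xmax), int(ymax))


def confident_detections(lines, threshold=0.5):
    """Keep detections scoring at least threshold, paired with their class name."""
    kept = []
    for line in lines:
        if not line.strip():
            continue  # the pasted output has blank lines between entries
        det = parse_detection(line)
        if det.score >= threshold:
            kept.append((VOC_LABELS[det.label], det))
    return kept
```

With the default threshold, only the cat in cat.jpg and the person in fish-bike.jpg survive. The best bicycle score is only 0.17723.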

Running sudo python ssd_detect.py again now works too, and its output is a bit nicer than the .bin version's:

/usr/local/lib/python2.7/dist-packages/skimage/transform/_warps.py:84: UserWarning: The default mode, 'constant', will be changed to 'reflect' in skimage 0.15.

warn("The default mode, 'constant', will be changed to 'reflect' in "

[[0.4162176, 0.010038316, 0.70032334, 0.50228781, 15, 0.99993026, u'person']]

481 323

[0.4162176, 0.010038316, 0.70032334, 0.50228781, 15, 0.99993026, u'person']

[200, 3, 337, 162]

[200, 3] person

No more clumsily drawing boxes by hand either: detect_result.jpg is saved in the caffe directory.
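The python script prints the box normalized to [0, 1] in the order [xmin, ymin, xmax, ymax, label, score, name]. It then prints the image width and height (481 323), and finally the box in pixels. The conversion is just a multiply-and-round. The sketch below reproduces the person box; the helper name is my own. 0.70032334 × 481 ≈ 336.86, which rounds to 337, while the .bin listing has 336 for the same box. That suggests the C++ tool truncates instead of rounding, although this is an inference from the two outputs and I have not checked it in the source:

```python
def to_pixel_box(box, width, height):
    """Scale a normalized [xmin, ymin, xmax, ymax] box to rounded pixel coordinates."""
    xmin, ymin, xmax, ymax = box
    return [int(round(xmin * width)), int(round(ymin * height)),
            int(round(xmax * width)), int(round(ymax * height))]


person = [0.4162176, 0.010038316, 0.70032334, 0.50228781]
print(to_pixel_box(person, 481, 323))  # [200, 3, 337, 162]
```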

5. Training on VOC2007 data

In create_data.sh, set data_root_dir to the absolute path of your data. For errors when running it, see http://blog.csdn.net/lanyuelvyun/article/details/73628152.

(It is the import caffe problem again. Add the Python path with export PYTHONPATH=/home/ssd/caffe/python:$PYTHONPATH. This change is temporary and has to be redone after every restart.)

Use echo $PYTHONPATH to check the Python path variable.
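When import caffe fails, it helps to check whether the Caffe python directory is actually on the search path. Below is a minimal sketch; the function names are my own. An export typed in a terminal only lasts for that shell session. Adding the same export line to ~/.bashrc is the usual way to make it persist:

```python
import os
import sys


def caffe_on_path(caffe_root, search_path=None):
    """True if <caffe_root>/python is one of the entries in search_path (default: sys.path)."""
    if search_path is None:
        search_path = sys.path
    target = os.path.join(os.path.abspath(caffe_root), "python")
    # Empty entries (meaning "current directory") are skipped.
    return any(p and os.path.abspath(p) == target for p in search_path)


def pythonpath_entries(environ=None):
    """Split PYTHONPATH into its non-empty entries (':'-separated on Linux)."""
    environ = os.environ if environ is None else environ
    return [p for p in environ.get("PYTHONPATH", "").split(os.pathsep) if p]


# Usage:
#   print(caffe_on_path("/home/ssd/caffe"))
#   print(pythonpath_entries())
```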

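From the ssd/caffe directory, run python ./examples/ssd/ssd_pascal.py

All kinds of errors appeared. Several .cpp files had to be modified, and then Caffe had to be rebuilt.

The ones I vaguely remember: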

math_functions.cpp:250] Check failed: a <= b <0 vs -1.19209e-007>

Fixed following http://www.mamicode.com/info-detail-1869191.html: comment out CHECK_LE(a, b) in math_functions.cpp and rebuild. That worked.
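The number in that message, 1.19209e-07, is 2^-23, the single-precision machine epsilon. So b fell below a = 0 only by float rounding, for example when 1 minus a width came out a hair above 1. In Caffe, caffe_rng_uniform(n, a, b, r) starts with CHECK_LE(a, b), which is presumably the check at line 250. Instead of deleting the check altogether, another option is to tolerate only rounding-sized violations. The Python sketch below illustrates that idea; it is not the fix from the linked post, and the tolerance of a few epsilons is my own choice:

```python
import random

FLT_EPSILON = 2.0 ** -23  # float32 machine epsilon, about 1.19209e-07


def safe_uniform(a, b, tol=4 * FLT_EPSILON, rng=random):
    """Draw from U(a, b), treating b < a by at most tol as the point range [a, a]."""
    if b < a:
        if a - b > tol:
            raise ValueError("invalid range: a=%r > b=%r" % (a, b))
        b = a  # rounding noise: collapse the range instead of aborting
    return rng.uniform(a, b)
```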

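One was especially troublesome, probably a data problem. It looked roughly like this (I think it was 2 vs. 0 at first and became 12 after I changed something):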

Check failed: mdb_status == 0 (12 vs. 0) Cannot allocate memory

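Some of the solutions I found online said the LMDB data was bad, so I changed create_data.sh to generate LevelDB data for training instead. That threw an error as well.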

I deleted everything from VOC2012 and reran create_data.sh to generate a trainval set from VOC2007 only.

Following http://blog.csdn.net/apsvvfb/article/details/50885335, I made various changes to the db_lmdb.cpp source under /src/caffe/util, for example:

MDB_CHECK(mdb_env_set_mapsize(mdb_env, 1024UL << 20)); // among various others; I tried 1 GB and 2 GB here, and neither worked

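In the end I reverted to the original new_size and rebuilt. (I didn't understand what it means. Does it allocate based on the machine's current state?)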
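A few notes on this one. Status 12 is the Linux errno ENOMEM, whose message is exactly "Cannot allocate memory". The earlier 2 would be ENOENT, "No such file or directory". As for new_size: as far as I know, in upstream BVLC Caffe's db_lmdb.cpp, a write that runs into MDB_MAP_FULL calls DoubleMapSize(). There, new_size is simply the current map size times two; it does not look at the machine's free memory. Below is a toy Python model of that grow-on-full strategy. The classes are hypothetical stand-ins, not the LMDB API:

```python
class MapFullError(Exception):
    """Plays the role of LMDB's MDB_MAP_FULL in this toy model."""


class ToyStore(object):
    """Key/value store with a fixed byte budget, standing in for an LMDB map."""

    def __init__(self, map_size):
        self.map_size = map_size
        self.data = {}

    def used(self):
        return sum(len(k) + len(v) for k, v in self.data.items())

    def put_batch(self, batch):
        needed = sum(len(k) + len(v) for k, v in batch.items())
        if self.used() + needed > self.map_size:
            raise MapFullError()
        self.data.update(batch)


def commit_with_doubling(store, batch):
    """Retry a batch, doubling map_size on each MapFullError; return the final size."""
    while True:
        try:
            store.put_batch(batch)
            return store.map_size
        except MapFullError:
            # new_size = current size * 2; max() guards against a zero start size.
            store.map_size = max(1, store.map_size * 2)
```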

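After all that trial and error, as far as I remember, the last error from python ./examples/ssd/ssd_pascal.py that night was about a snapshot. It seemed to say that the data recorded when the previous training run was interrupted did not exist. It was too late, so I went to bed and left it.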
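My guess, which I have not verified, is that ssd_pascal.py had its resume_training option enabled and picked the highest-numbered .solverstate in the snapshot directory. That snapshot would have been incomplete because the earlier run was killed. Caffe writes each snapshot as <prefix>_iter_<N>.solverstate plus <prefix>_iter_<N>.caffemodel, so one defensive approach is to resume only from an iteration that has both files. Below is a sketch of that selection over a directory listing. The function name and the both-files rule are my own:

```python
import re


def latest_complete_snapshot(filenames, prefix):
    """Return the highest N for which both <prefix>_iter_N.solverstate and
    <prefix>_iter_N.caffemodel appear in filenames, or None if there is none."""
    pattern = re.compile(re.escape(prefix) + r"_iter_(\d+)\.(solverstate|caffemodel)$")
    seen = {}
    for name in filenames:
        m = pattern.match(name)
        if m:
            seen.setdefault(int(m.group(1)), set()).add(m.group(2))
    complete = [it for it, kinds in seen.items()
                if kinds == {"solverstate", "caffemodel"}]
    return max(complete) if complete else None


# Usage (snapshot_dir and prefix are placeholders for your own values):
#   import os
#   latest_complete_snapshot(os.listdir(snapshot_dir), prefix)
```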

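A day later, when I reran it this afternoon, it actually seemed to be training, and the little laptop's fan started whirring again. Console output: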

I1023 13:20:48.597777 2746 net.cpp:150] Setting up conv7_2_mbox_conf

I1023 13:20:48.597807 2746 net.cpp:157] Top shape: 8 126 5 5 (25200)

I1023 13:20:48.597818 2746 net.cpp:165] Memory required for data: 1997809664

I1023 13:20:48.597837 2746 layer_factory.hpp:77] Creating layer conv7_2_mbox_conf_perm

I1023 13:20:48.597854 2746 net.cpp:100] Creating Layer conv7_2_mbox_conf_perm

I1023 13:20:48.597867 2746 net.cpp:434] conv7_2_mbox_conf_perm <- conv7_2_mbox_conf

I1023 13:20:48.597882 2746 net.cpp:408] conv7_2_mbox_conf_perm -> conv7_2_mbox_conf_perm

I1023 13:20:48.597908 2746 net.cpp:150] Setting up conv7_2_mbox_conf_perm

I1023 13:20:48.597926 2746 net.cpp:157] Top shape: 8 5 5 126 (25200)

I1023 13:20:48.597936 2746 net.cpp:165] Memory required for data: 1997910464

I1023 13:20:48.597947 2746 layer_factory.hpp:77] Creating layer conv7_2_mbox_conf_flat

I1023 13:20:48.597970 2746 net.cpp:100] Creating Layer conv7_2_mbox_conf_flat

I1023 13:20:48.597985 2746 net.cpp:434] conv7_2_mbox_conf_flat <- conv7_2_mbox_conf_perm

I1023 13:20:48.598001 2746 net.cpp:408] conv7_2_mbox_conf_flat -> conv7_2_mbox_conf_flat

I1023 13:20:48.598022 2746 net.cpp:150] Setting up conv7_2_mbox_conf_flat

I1023 13:20:48.598038 2746 net.cpp:157] Top shape: 8 3150 (25200)

I1023 13:20:48.598048 2746 net.cpp:165] Memory required for data: 1998011264

I1023 13:20:48.598059 2746 layer_factory.hpp:77] Creating layer conv7_2_mbox_priorbox

I1023 13:20:48.598074 2746 net.cpp:100] Creating Layer conv7_2_mbox_priorbox

I1023 13:20:48.598088 2746 net.cpp:434] conv7_2_mbox_priorbox <- conv7_2_conv7_2_relu_0_split_3

I1023 13:20:48.598101 2746 net.cpp:434] conv7_2_mbox_priorbox <- data_data_0_split_4

I1023 13:20:48.598117 2746 net.cpp:408] conv7_2_mbox_priorbox -> conv7_2_mbox_priorbox

I1023 13:20:48.598140 2746 net.cpp:150] Setting up conv7_2_mbox_priorbox

I1023 13:20:48.598156 2746 net.cpp:157] Top shape: 1 2 600 (1200)

I1023 13:20:48.598168 2746 net.cpp:165] Memory required for data: 1998016064

I1023 13:20:48.598181 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_loc

I1023 13:20:48.598199 2746 net.cpp:100] Creating Layer conv8_2_mbox_loc

I1023 13:20:48.598213 2746 net.cpp:434] conv8_2_mbox_loc <- conv8_2_conv8_2_relu_0_split_1

I1023 13:20:48.598233 2746 net.cpp:408] conv8_2_mbox_loc -> conv8_2_mbox_loc

I1023 13:20:48.599309 2746 net.cpp:150] Setting up conv8_2_mbox_loc

I1023 13:20:48.599329 2746 net.cpp:157] Top shape: 8 16 3 3 (1152)

I1023 13:20:48.599342 2746 net.cpp:165] Memory required for data: 1998020672

I1023 13:20:48.599380 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_loc_perm

I1023 13:20:48.599397 2746 net.cpp:100] Creating Layer conv8_2_mbox_loc_perm

I1023 13:20:48.599411 2746 net.cpp:434] conv8_2_mbox_loc_perm <- conv8_2_mbox_loc

I1023 13:20:48.599428 2746 net.cpp:408] conv8_2_mbox_loc_perm -> conv8_2_mbox_loc_perm

I1023 13:20:48.599457 2746 net.cpp:150] Setting up conv8_2_mbox_loc_perm

I1023 13:20:48.599473 2746 net.cpp:157] Top shape: 8 3 3 16 (1152)

I1023 13:20:48.599485 2746 net.cpp:165] Memory required for data: 1998025280

I1023 13:20:48.599496 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_loc_flat

I1023 13:20:48.599529 2746 net.cpp:100] Creating Layer conv8_2_mbox_loc_flat

I1023 13:20:48.599541 2746 net.cpp:434] conv8_2_mbox_loc_flat <- conv8_2_mbox_loc_perm

I1023 13:20:48.599556 2746 net.cpp:408] conv8_2_mbox_loc_flat -> conv8_2_mbox_loc_flat

I1023 13:20:48.599575 2746 net.cpp:150] Setting up conv8_2_mbox_loc_flat

I1023 13:20:48.599591 2746 net.cpp:157] Top shape: 8 144 (1152)

I1023 13:20:48.599603 2746 net.cpp:165] Memory required for data: 1998029888

I1023 13:20:48.599614 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_conf

I1023 13:20:48.599638 2746 net.cpp:100] Creating Layer conv8_2_mbox_conf

I1023 13:20:48.599653 2746 net.cpp:434] conv8_2_mbox_conf <- conv8_2_conv8_2_relu_0_split_2

I1023 13:20:48.599671 2746 net.cpp:408] conv8_2_mbox_conf -> conv8_2_mbox_conf

I1023 13:20:48.605247 2746 net.cpp:150] Setting up conv8_2_mbox_conf

I1023 13:20:48.605273 2746 net.cpp:157] Top shape: 8 84 3 3 (6048)

I1023 13:20:48.605283 2746 net.cpp:165] Memory required for data: 1998054080

I1023 13:20:48.605300 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_conf_perm

I1023 13:20:48.605319 2746 net.cpp:100] Creating Layer conv8_2_mbox_conf_perm

I1023 13:20:48.605334 2746 net.cpp:434] conv8_2_mbox_conf_perm <- conv8_2_mbox_conf

I1023 13:20:48.605348 2746 net.cpp:408] conv8_2_mbox_conf_perm -> conv8_2_mbox_conf_perm

I1023 13:20:48.605379 2746 net.cpp:150] Setting up conv8_2_mbox_conf_perm

I1023 13:20:48.605396 2746 net.cpp:157] Top shape: 8 3 3 84 (6048)

I1023 13:20:48.605408 2746 net.cpp:165] Memory required for data: 1998078272

I1023 13:20:48.605419 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_conf_flat

I1023 13:20:48.605433 2746 net.cpp:100] Creating Layer conv8_2_mbox_conf_flat

I1023 13:20:48.605446 2746 net.cpp:434] conv8_2_mbox_conf_flat <- conv8_2_mbox_conf_perm

I1023 13:20:48.605461 2746 net.cpp:408] conv8_2_mbox_conf_flat -> conv8_2_mbox_conf_flat

I1023 13:20:48.605480 2746 net.cpp:150] Setting up conv8_2_mbox_conf_flat

I1023 13:20:48.605496 2746 net.cpp:157] Top shape: 8 756 (6048)

I1023 13:20:48.605509 2746 net.cpp:165] Memory required for data: 1998102464

I1023 13:20:48.605520 2746 layer_factory.hpp:77] Creating layer conv8_2_mbox_priorbox

I1023 13:20:48.605535 2746 net.cpp:100] Creating Layer conv8_2_mbox_priorbox

I1023 13:20:48.605548 2746 net.cpp:434] conv8_2_mbox_priorbox <- conv8_2_conv8_2_relu_0_split_3

I1023 13:20:48.605561 2746 net.cpp:434] conv8_2_mbox_priorbox <- data_data_0_split_5

I1023 13:20:48.605579 2746 net.cpp:408] conv8_2_mbox_priorbox -> conv8_2_mbox_priorbox

I1023 13:20:48.605602 2746 net.cpp:150] Setting up conv8_2_mbox_priorbox

I1023 13:20:48.605618 2746 net.cpp:157] Top shape: 1 2 144 (288)

I1023 13:20:48.605630 2746 net.cpp:165] Memory required for data: 1998103616

I1023 13:20:48.605641 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_loc

I1023 13:20:48.605664 2746 net.cpp:100] Creating Layer conv9_2_mbox_loc

I1023 13:20:48.605677 2746 net.cpp:434] conv9_2_mbox_loc <- conv9_2_conv9_2_relu_0_split_0

I1023 13:20:48.605693 2746 net.cpp:408] conv9_2_mbox_loc -> conv9_2_mbox_loc

I1023 13:20:48.606783 2746 net.cpp:150] Setting up conv9_2_mbox_loc

I1023 13:20:48.606804 2746 net.cpp:157] Top shape: 8 16 1 1 (128)

I1023 13:20:48.606817 2746 net.cpp:165] Memory required for data: 1998104128

I1023 13:20:48.606833 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_loc_perm

I1023 13:20:48.606850 2746 net.cpp:100] Creating Layer conv9_2_mbox_loc_perm

I1023 13:20:48.606863 2746 net.cpp:434] conv9_2_mbox_loc_perm <- conv9_2_mbox_loc

I1023 13:20:48.606881 2746 net.cpp:408] conv9_2_mbox_loc_perm -> conv9_2_mbox_loc_perm

I1023 13:20:48.606907 2746 net.cpp:150] Setting up conv9_2_mbox_loc_perm

I1023 13:20:48.606925 2746 net.cpp:157] Top shape: 8 1 1 16 (128)

I1023 13:20:48.606936 2746 net.cpp:165] Memory required for data: 1998104640

I1023 13:20:48.606947 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_loc_flat

I1023 13:20:48.606961 2746 net.cpp:100] Creating Layer conv9_2_mbox_loc_flat

I1023 13:20:48.606973 2746 net.cpp:434] conv9_2_mbox_loc_flat <- conv9_2_mbox_loc_perm

I1023 13:20:48.607007 2746 net.cpp:408] conv9_2_mbox_loc_flat -> conv9_2_mbox_loc_flat

I1023 13:20:48.607028 2746 net.cpp:150] Setting up conv9_2_mbox_loc_flat

I1023 13:20:48.607044 2746 net.cpp:157] Top shape: 8 16 (128)

I1023 13:20:48.607056 2746 net.cpp:165] Memory required for data: 1998105152

I1023 13:20:48.607067 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_conf

I1023 13:20:48.607089 2746 net.cpp:100] Creating Layer conv9_2_mbox_conf

I1023 13:20:48.607102 2746 net.cpp:434] conv9_2_mbox_conf <- conv9_2_conv9_2_relu_0_split_1

I1023 13:20:48.607123 2746 net.cpp:408] conv9_2_mbox_conf -> conv9_2_mbox_conf

I1023 13:20:48.613224 2746 net.cpp:150] Setting up conv9_2_mbox_conf

I1023 13:20:48.613458 2746 net.cpp:157] Top shape: 8 84 1 1 (672)

I1023 13:20:48.613528 2746 net.cpp:165] Memory required for data: 1998107840

I1023 13:20:48.613605 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_conf_perm

I1023 13:20:48.613675 2746 net.cpp:100] Creating Layer conv9_2_mbox_conf_perm

I1023 13:20:48.613711 2746 net.cpp:434] conv9_2_mbox_conf_perm <- conv9_2_mbox_conf

I1023 13:20:48.613756 2746 net.cpp:408] conv9_2_mbox_conf_perm -> conv9_2_mbox_conf_perm

I1023 13:20:48.613818 2746 net.cpp:150] Setting up conv9_2_mbox_conf_perm

I1023 13:20:48.613857 2746 net.cpp:157] Top shape: 8 1 1 84 (672)

I1023 13:20:48.613888 2746 net.cpp:165] Memory required for data: 1998110528

I1023 13:20:48.613919 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_conf_flat

I1023 13:20:48.613955 2746 net.cpp:100] Creating Layer conv9_2_mbox_conf_flat

I1023 13:20:48.613986 2746 net.cpp:434] conv9_2_mbox_conf_flat <- conv9_2_mbox_conf_perm

I1023 13:20:48.614022 2746 net.cpp:408] conv9_2_mbox_conf_flat -> conv9_2_mbox_conf_flat

I1023 13:20:48.614068 2746 net.cpp:150] Setting up conv9_2_mbox_conf_flat

I1023 13:20:48.614104 2746 net.cpp:157] Top shape: 8 84 (672)

I1023 13:20:48.614135 2746 net.cpp:165] Memory required for data: 1998113216

I1023 13:20:48.614168 2746 layer_factory.hpp:77] Creating layer conv9_2_mbox_priorbox

I1023 13:20:48.614207 2746 net.cpp:100] Creating Layer conv9_2_mbox_priorbox

I1023 13:20:48.614243 2746 net.cpp:434] conv9_2_mbox_priorbox <- conv9_2_conv9_2_relu_0_split_2

I1023 13:20:48.614276 2746 net.cpp:434] conv9_2_mbox_priorbox <- data_data_0_split_6

I1023 13:20:48.614320 2746 net.cpp:408] conv9_2_mbox_priorbox -> conv9_2_mbox_priorbox

I1023 13:20:48.614364 2746 net.cpp:150] Setting up conv9_2_mbox_priorbox

I1023 13:20:48.614401 2746 net.cpp:157] Top shape: 1 2 16 (32)

I1023 13:20:48.614431 2746 net.cpp:165] Memory required for data: 1998113344

I1023 13:20:48.614462 2746 layer_factory.hpp:77] Creating layer mbox_loc

I1023 13:20:48.614503 2746 net.cpp:100] Creating Layer mbox_loc

I1023 13:20:48.614537 2746 net.cpp:434] mbox_loc <- conv4_3_norm_mbox_loc_flat

I1023 13:20:48.614570 2746 net.cpp:434] mbox_loc <- fc7_mbox_loc_flat

I1023 13:20:48.614605 2746 net.cpp:434] mbox_loc <- conv6_2_mbox_loc_flat

I1023 13:20:48.614640 2746 net.cpp:434] mbox_loc <- conv7_2_mbox_loc_flat

I1023 13:20:48.614672 2746 net.cpp:434] mbox_loc <- conv8_2_mbox_loc_flat

I1023 13:20:48.614711 2746 net.cpp:434] mbox_loc <- conv9_2_mbox_loc_flat

I1023 13:20:48.614761 2746 net.cpp:408] mbox_loc -> mbox_loc

I1023 13:20:48.614820 2746 net.cpp:150] Setting up mbox_loc

I1023 13:20:48.614881 2746 net.cpp:157] Top shape: 8 34928 (279424)

I1023 13:20:48.614918 2746 net.cpp:165] Memory required for data: 1999231040

I1023 13:20:48.614958 2746 layer_factory.hpp:77] Creating layer mbox_conf

I1023 13:20:48.615005 2746 net.cpp:100] Creating Layer mbox_conf

I1023 13:20:48.615046 2746 net.cpp:434] mbox_conf <- conv4_3_norm_mbox_conf_flat

I1023 13:20:48.615084 2746 net.cpp:434] mbox_conf <- fc7_mbox_conf_flat

I1023 13:20:48.615118 2746 net.cpp:434] mbox_conf <- conv6_2_mbox_conf_flat

I1023 13:20:48.615152 2746 net.cpp:434] mbox_conf <- conv7_2_mbox_conf_flat

I1023 13:20:48.615185 2746 net.cpp:434] mbox_conf <- conv8_2_mbox_conf_flat

I1023 13:20:48.615231 2746 net.cpp:434] mbox_conf <- conv9_2_mbox_conf_flat

I1023 13:20:48.615284 2746 net.cpp:408] mbox_conf -> mbox_conf

I1023 13:20:48.615327 2746 net.cpp:150] Setting up mbox_conf

I1023 13:20:48.615363 2746 net.cpp:157] Top shape: 8 183372 (1466976)

I1023 13:20:48.615393 2746 net.cpp:165] Memory required for data: 2005098944

I1023 13:20:48.615424 2746 layer_factory.hpp:77] Creating layer mbox_priorbox

I1023 13:20:48.615460 2746 net.cpp:100] Creating Layer mbox_priorbox

I1023 13:20:48.615491 2746 net.cpp:434] mbox_priorbox <- conv4_3_norm_mbox_priorbox

I1023 13:20:48.615525 2746 net.cpp:434] mbox_priorbox <- fc7_mbox_priorbox

I1023 13:20:48.615557 2746 net.cpp:434] mbox_priorbox <- conv6_2_mbox_priorbox

I1023 13:20:48.615592 2746 net.cpp:434] mbox_priorbox <- conv7_2_mbox_priorbox

I1023 13:20:48.615623 2746 net.cpp:434] mbox_priorbox <- conv8_2_mbox_priorbox

I1023 13:20:48.615656 2746 net.cpp:434] mbox_priorbox <- conv9_2_mbox_priorbox

I1023 13:20:48.615690 2746 net.cpp:408] mbox_priorbox -> mbox_priorbox

I1023 13:20:48.615731 2746 net.cpp:150] Setting up mbox_priorbox

I1023 13:20:48.615766 2746 net.cpp:157] Top shape: 1 2 34928 (69856)

I1023 13:20:48.615795 2746 net.cpp:165] Memory required for data: 2005378368

I1023 13:20:48.615828 2746 layer_factory.hpp:77] Creating layer mbox_conf_reshape

I1023 13:20:48.615869 2746 net.cpp:100] Creating Layer mbox_conf_reshape

I1023 13:20:48.615902 2746 net.cpp:434] mbox_conf_reshape <- mbox_conf

I1023 13:20:48.615942 2746 net.cpp:408] mbox_conf_reshape -> mbox_conf_reshape

I1023 13:20:48.616000 2746 net.cpp:150] Setting up mbox_conf_reshape

I1023 13:20:48.616037 2746 net.cpp:157] Top shape: 8 8732 21 (1466976)

I1023 13:20:48.616067 2746 net.cpp:165] Memory required for data: 2011246272

I1023 13:20:48.616097 2746 layer_factory.hpp:77] Creating layer mbox_conf_softmax

I1023 13:20:48.616132 2746 net.cpp:100] Creating Layer mbox_conf_softmax

I1023 13:20:48.616163 2746 net.cpp:434] mbox_conf_softmax <- mbox_conf_reshape

I1023 13:20:48.616201 2746 net.cpp:408] mbox_conf_softmax -> mbox_conf_softmax

I1023 13:20:48.616256 2746 net.cpp:150] Setting up mbox_conf_softmax

I1023 13:20:48.616297 2746 net.cpp:157] Top shape: 8 8732 21 (1466976)

I1023 13:20:48.616333 2746 net.cpp:165] Memory required for data: 2017114176

I1023 13:20:48.616394 2746 layer_factory.hpp:77] Creating layer mbox_conf_flatten

I1023 13:20:48.616442 2746 net.cpp:100] Creating Layer mbox_conf_flatten

I1023 13:20:48.616477 2746 net.cpp:434] mbox_conf_flatten <- mbox_conf_softmax

I1023 13:20:48.616516 2746 net.cpp:408] mbox_conf_flatten -> mbox_conf_flatten

I1023 13:20:48.616564 2746 net.cpp:150] Setting up mbox_conf_flatten

I1023 13:20:48.616603 2746 net.cpp:157] Top shape: 8 183372 (1466976)

I1023 13:20:48.616634 2746 net.cpp:165] Memory required for data: 2022982080

I1023 13:20:48.616668 2746 layer_factory.hpp:77] Creating layer detection_out

I1023 13:20:48.616753 2746 net.cpp:100] Creating Layer detection_out

I1023 13:20:48.616796 2746 net.cpp:434] detection_out <- mbox_loc

I1023 13:20:48.616833 2746 net.cpp:434] detection_out <- mbox_conf_flatten

I1023 13:20:48.616868 2746 net.cpp:434] detection_out <- mbox_priorbox

I1023 13:20:48.616914 2746 net.cpp:408] detection_out -> detection_out

I1023 13:20:48.679901 2746 net.cpp:150] Setting up detection_out

I1023 13:20:48.679949 2746 net.cpp:157] Top shape: 1 1 1 7 (7)

I1023 13:20:48.680019 2746 net.cpp:165] Memory required for data: 2022982108

I1023 13:20:48.680063 2746 layer_factory.hpp:77] Creating layer detection_eval

I1023 13:20:48.680110 2746 net.cpp:100] Creating Layer detection_eval

I1023 13:20:48.680150 2746 net.cpp:434] detection_eval <- detection_out

I1023 13:20:48.680187 2746 net.cpp:434] detection_eval <- label

I1023 13:20:48.680227 2746 net.cpp:408] detection_eval -> detection_eval

I1023 13:20:48.683207 2746 net.cpp:150] Setting up detection_eval

I1023 13:20:48.683255 2746 net.cpp:157] Top shape: 1 1 21 5 (105)

I1023 13:20:48.683290 2746 net.cpp:165] Memory required for data: 2022982528

I1023 13:20:48.683334 2746 net.cpp:228] detection_eval does not need backward computation.

I1023 13:20:48.683385 2746 net.cpp:228] detection_out does not need backward computation.

I1023 13:20:48.683421 2746 net.cpp:228] mbox_conf_flatten does not need backward computation.

I1023 13:20:48.683455 2746 net.cpp:228] mbox_conf_softmax does not need backward computation.

I1023 13:20:48.683488 2746 net.cpp:228] mbox_conf_reshape does not need backward computation.

I1023 13:20:48.683521 2746 net.cpp:228] mbox_priorbox does not need backward computation.

I1023 13:20:48.683557 2746 net.cpp:228] mbox_conf does not need backward computation.

I1023 13:20:48.683594 2746 net.cpp:228] mbox_loc does not need backward computation.

I1023 13:20:48.683630 2746 net.cpp:228] conv9_2_mbox_priorbox does not need backward computation.

I1023 13:20:48.683665 2746 net.cpp:228] conv9_2_mbox_conf_flat does not need backward computation.

I1023 13:20:48.683698 2746 net.cpp:228] conv9_2_mbox_conf_perm does not need backward computation.

I1023 13:20:48.683732 2746 net.cpp:228] conv9_2_mbox_conf does not need backward computation.

I1023 13:20:48.683765 2746 net.cpp:228] conv9_2_mbox_loc_flat does not need backward computation.

I1023 13:20:48.683799 2746 net.cpp:228] conv9_2_mbox_loc_perm does not need backward computation.

I1023 13:20:48.683832 2746 net.cpp:228] conv9_2_mbox_loc does not need backward computation.

I1023 13:20:48.683866 2746 net.cpp:228] conv8_2_mbox_priorbox does not need backward computation.

I1023 13:20:48.683900 2746 net.cpp:228] conv8_2_mbox_conf_flat does not need backward computation.

I1023 13:20:48.683934 2746 net.cpp:228] conv8_2_mbox_conf_perm does not need backward computation.

I1023 13:20:48.683969 2746 net.cpp:228] conv8_2_mbox_conf does not need backward computation.

I1023 13:20:48.684001 2746 net.cpp:228] conv8_2_mbox_loc_flat does not need backward computation.

I1023 13:20:48.684034 2746 net.cpp:228] conv8_2_mbox_loc_perm does not need backward computation.

I1023 13:20:48.684068 2746 net.cpp:228] conv8_2_mbox_loc does not need backward computation.

I1023 13:20:48.684103 2746 net.cpp:228] conv7_2_mbox_priorbox does not need backward computation.

I1023 13:20:48.684136 2746 net.cpp:228] conv7_2_mbox_conf_flat does not need backward computation.

I1023 13:20:48.684170 2746 net.cpp:228] conv7_2_mbox_conf_perm does not need backward computation.

I1023 13:20:48.684203 2746 net.cpp:228] conv7_2_mbox_conf does not need backward computation.

I1023 13:20:48.684237 2746 net.cpp:228] conv7_2_mbox_loc_flat does not need backward computation.

I1023 13:20:48.684269 2746 net.cpp:228] conv7_2_mbox_loc_perm does not need backward computation.

I1023 13:20:48.684303 2746 net.cpp:228] conv7_2_mbox_loc does not need backward computation.

I1023 13:20:48.684336 2746 net.cpp:228] conv6_2_mbox_priorbox does not need backward computation.

I1023 13:20:48.684391 2746 net.cpp:228] conv6_2_mbox_conf_flat does not need backward computation.

I1023 13:20:48.684427 2746 net.cpp:228] conv6_2_mbox_conf_perm does not need backward computation.

I1023 13:20:48.684463 2746 net.cpp:228] conv6_2_mbox_conf does not need backward computation.

I1023 13:20:48.684496 2746 net.cpp:228] conv6_2_mbox_loc_flat does not need backward computation.

I1023 13:20:48.684530 2746 net.cpp:228] conv6_2_mbox_loc_perm does not need backward computation.

I1023 13:20:48.684562 2746 net.cpp:228] conv6_2_mbox_loc does not need backward computation.

I1023 13:20:48.684594 2746 net.cpp:228] fc7_mbox_priorbox does not need backward computation.

I1023 13:20:48.684628 2746 net.cpp:228] fc7_mbox_conf_flat does not need backward computation.

I1023 13:20:48.684659 2746 net.cpp:228] fc7_mbox_conf_perm does not need backward computation.

I1023 13:20:48.684690 2746 net.cpp:228] fc7_mbox_conf does not need backward computation.

I1023 13:20:48.684723 2746 net.cpp:228] fc7_mbox_loc_flat does not need backward computation.

I1023 13:20:48.684754 2746 net.cpp:228] fc7_mbox_loc_perm does not need backward computation.

I1023 13:20:48.684787 2746 net.cpp:228] fc7_mbox_loc does not need backward computation.

I1023 13:20:48.684836 2746 net.cpp:228] conv4_3_norm_mbox_priorbox does not need backward computation.

I1023 13:20:48.684871 2746 net.cpp:228] conv4_3_norm_mbox_conf_flat does not need backward computation.

I1023 13:20:48.684903 2746 net.cpp:228] conv4_3_norm_mbox_conf_perm does not need backward computation.

I1023 13:20:48.684936 2746 net.cpp:228] conv4_3_norm_mbox_conf does not need backward computation.

I1023 13:20:48.684967 2746 net.cpp:228] conv4_3_norm_mbox_loc_flat does not need backward computation.

I1023 13:20:48.684998 2746 net.cpp:228] conv4_3_norm_mbox_loc_perm does not need backward computation.

I1023 13:20:48.685030 2746 net.cpp:228] conv4_3_norm_mbox_loc does not need backward computation.

I1023 13:20:48.685063 2746 net.cpp:228] conv4_3_norm_conv4_3_norm_0_split does not need backward computation.

I1023 13:20:48.685096 2746 net.cpp:228] conv4_3_norm does not need backward computation.

I1023 13:20:48.685128 2746 net.cpp:228] conv9_2_conv9_2_relu_0_split does not need backward computation.

I1023 13:20:48.685161 2746 net.cpp:228] conv9_2_relu does not need backward computation.

I1023 13:20:48.685192 2746 net.cpp:228] conv9_2 does not need backward computation.

I1023 13:20:48.685223 2746 net.cpp:228] conv9_1_relu does not need backward computation.

I1023 13:20:48.685253 2746 net.cpp:228] conv9_1 does not need backward computation.

I1023 13:20:48.685284 2746 net.cpp:228] conv8_2_conv8_2_relu_0_split does not need backward computation.

I1023 13:20:48.685317 2746 net.cpp:228] conv8_2_relu does not need backward computation.

I1023 13:20:48.685348 2746 net.cpp:228] conv8_2 does not need backward computation.

I1023 13:20:48.685379 2746 net.cpp:228] conv8_1_relu does not need backward computation.

I1023 13:20:48.685410 2746 net.cpp:228] conv8_1 does not need backward computation.

I1023 13:20:48.685441 2746 net.cpp:228] conv7_2_conv7_2_relu_0_split does not need backward computation.

I1023 13:20:48.685472 2746 net.cpp:228] conv7_2_relu does not need backward computation.

I1023 13:20:48.685503 2746 net.cpp:228] conv7_2 does not need backward computation.

I1023 13:20:48.685534 2746 net.cpp:228] conv7_1_relu does not need backward computation.

I1023 13:20:48.685565 2746 net.cpp:228] conv7_1 does not need backward computation.

I1023 13:20:48.685598 2746 net.cpp:228] conv6_2_conv6_2_relu_0_split does not need backward computation.

I1023 13:20:48.685631 2746 net.cpp:228] conv6_2_relu does not need backward computation.

I1023 13:20:48.685660 2746 net.cpp:228] conv6_2 does not need backward computation.

I1023 13:20:48.685691 2746 net.cpp:228] conv6_1_relu does not need backward computation.

I1023 13:20:48.685722 2746 net.cpp:228] conv6_1 does not need backward computation.

I1023 13:20:48.685756 2746 net.cpp:228] fc7_relu7_0_split does not need backward computation.

I1023 13:20:48.685787 2746 net.cpp:228] relu7 does not need backward computation.

I1023 13:20:48.685818 2746 net.cpp:228] fc7 does not need backward computation.

I1023 13:20:48.685849 2746 net.cpp:228] relu6 does not need backward computation.

I1023 13:20:48.685883 2746 net.cpp:228] fc6 does not need backward computation.

I1023 13:20:48.685914 2746 net.cpp:228] pool5 does not need backward computation.

I1023 13:20:48.685945 2746 net.cpp:228] relu5_3 does not need backward computation.
279 I1023 13:20:48.685976 2746 net.cpp:228] conv5_3 does not need backward computation. 280 I1023 13:20:48.686007 2746 net.cpp:228] relu5_2 does not need backward computation. 281 I1023 13:20:48.686038 2746 net.cpp:228] conv5_2 does not need backward computation. 282 I1023 13:20:48.686069 2746 net.cpp:228] relu5_1 does not need backward computation. 283 I1023 13:20:48.686100 2746 net.cpp:228] conv5_1 does not need backward computation. 284 I1023 13:20:48.686131 2746 net.cpp:228] pool4 does not need backward computation. 285 I1023 13:20:48.686162 2746 net.cpp:228] conv4_3_relu4_3_0_split does not need backward computation. 286 I1023 13:20:48.686194 2746 net.cpp:228] relu4_3 does not need backward computation. 287 I1023 13:20:48.686233 2746 net.cpp:228] conv4_3 does not need backward computation. 288 I1023 13:20:48.686275 2746 net.cpp:228] relu4_2 does not need backward computation. 289 I1023 13:20:48.686306 2746 net.cpp:228] conv4_2 does not need backward computation. 290 I1023 13:20:48.686337 2746 net.cpp:228] relu4_1 does not need backward computation. 291 I1023 13:20:48.686367 2746 net.cpp:228] conv4_1 does not need backward computation. 292 I1023 13:20:48.686398 2746 net.cpp:228] pool3 does not need backward computation. 293 I1023 13:20:48.686429 2746 net.cpp:228] relu3_3 does not need backward computation. 294 I1023 13:20:48.686460 2746 net.cpp:228] conv3_3 does not need backward computation. 295 I1023 13:20:48.686491 2746 net.cpp:228] relu3_2 does not need backward computation. 296 I1023 13:20:48.686522 2746 net.cpp:228] conv3_2 does not need backward computation. 297 I1023 13:20:48.686553 2746 net.cpp:228] relu3_1 does not need backward computation. 298 I1023 13:20:48.686583 2746 net.cpp:228] conv3_1 does not need backward computation. 299 I1023 13:20:48.686614 2746 net.cpp:228] pool2 does not need backward computation. 300 I1023 13:20:48.686645 2746 net.cpp:228] relu2_2 does not need backward computation. 
301 I1023 13:20:48.686676 2746 net.cpp:228] conv2_2 does not need backward computation. 302 I1023 13:20:48.686705 2746 net.cpp:228] relu2_1 does not need backward computation. 303 I1023 13:20:48.686736 2746 net.cpp:228] conv2_1 does not need backward computation. 304 I1023 13:20:48.686767 2746 net.cpp:228] pool1 does not need backward computation. 305 I1023 13:20:48.686799 2746 net.cpp:228] relu1_2 does not need backward computation. 306 I1023 13:20:48.686828 2746 net.cpp:228] conv1_2 does not need backward computation. 307 I1023 13:20:48.686859 2746 net.cpp:228] relu1_1 does not need backward computation. 308 I1023 13:20:48.686889 2746 net.cpp:228] conv1_1 does not need backward computation. 309 I1023 13:20:48.686923 2746 net.cpp:228] data_data_0_split does not need backward computation. 310 I1023 13:20:48.686954 2746 net.cpp:228] data does not need backward computation. 311 I1023 13:20:48.686983 2746 net.cpp:270] This network produces output detection_eval 312 I1023 13:20:48.687135 2746 net.cpp:283] Network initialization done. 313 I1023 13:20:48.687716 2746 solver.cpp:75] Solver scaffolding done. 314 I1023 13:20:48.688022 2746 caffe.cpp:155] Finetuning from models/VGGNet/VGG_ILSVRC_16_layers_fc_reduced.caffemodel 315 I1023 13:20:49.964323 2746 upgrade_proto.cpp:67] Attempting to upgrade input file specified using deprecated input fields: models/VGGNet/VGG_ILSVRC_16_layers_fc_reduced.caffemodel 316 I1023 13:20:49.964531 2746 upgrade_proto.cpp:70] Successfully upgraded file specified using deprecated input fields. 317 W1023 13:20:49.964645 2746 upgrade_proto.cpp:72] Note that future Caffe releases will only support input layers and not input fields. 
318 I1023 13:20:50.016149 2746 net.cpp:761] Ignoring source layer drop6 319 I1023 13:20:50.017864 2746 net.cpp:761] Ignoring source layer drop7 320 I1023 13:20:50.017911 2746 net.cpp:761] Ignoring source layer fc8 321 I1023 13:20:50.017922 2746 net.cpp:761] Ignoring source layer prob 322 I1023 13:20:50.366048 2746 upgrade_proto.cpp:67] Attempting to upgrade input file specified using deprecated input fields: models/VGGNet/VGG_ILSVRC_16_layers_fc_reduced.caffemodel 323 I1023 13:20:50.366091 2746 upgrade_proto.cpp:70] Successfully upgraded file specified using deprecated input fields. 324 W1023 13:20:50.366102 2746 upgrade_proto.cpp:72] Note that future Caffe releases will only support input layers and not input fields. 325 I1023 13:20:50.399529 2746 net.cpp:761] Ignoring source layer drop6 326 I1023 13:20:50.402957 2746 net.cpp:761] Ignoring source layer drop7 327 I1023 13:20:50.403225 2746 net.cpp:761] Ignoring source layer fc8 328 I1023 13:20:50.403296 2746 net.cpp:761] Ignoring source layer prob 329 I1023 13:20:50.404901 2746 caffe.cpp:251] Starting Optimization 330 I1023 13:20:50.404959 2746 solver.cpp:294] Solving VGG_VOC0712_SSD_300x300_train 331 I1023 13:20:50.404994 2746 solver.cpp:295] Learning Rate Policy: multistep 332 I1023 13:20:50.461501 2746 blocking_queue.cpp:50] Data layer prefetch queue empty 333
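The "Finetuning from ... Ignoring source layer ..." lines show how the SSD net is initialised from the reduced VGG16 model: weights are copied layer by layer, matched on layer name, and every source layer with no same-named layer in the target (drop6, drop7, fc8, prob) is reported and skipped. As far as I can tell, the block appears twice because the weights are copied into both the train net and the test net. Below is a minimal pure-Python sketch of that name-matching rule; it is not the pycaffe API, and the layer dictionaries and shapes are illustrative stand-ins, not read from the real model files.

```python
from typing import Dict, List, Tuple

Shape = Tuple[int, ...]
Layers = Dict[str, List[Shape]]  # layer name -> shapes of its parameter blobs


def copy_by_name(source: Layers, target: Layers) -> Tuple[List[str], List[str]]:
    """Simulate name-based weight copying; return (copied, ignored) layer names."""
    copied: List[str] = []
    ignored: List[str] = []
    for name, shapes in source.items():
        if name not in target:
            # No layer of this name in the target net: skipped, like the
            # "Ignoring source layer <name>" messages in the log.
            ignored.append(name)
            continue
        if target[name] != shapes:
            # Same name but different blob shapes cannot be copied.
            raise ValueError(f"{name}: source {shapes} != target {target[name]}")
        copied.append(name)
    # Target layers absent from the source (e.g. the new SSD layers)
    # simply keep their own initialisation.
    return copied, ignored


# Hypothetical, illustrative shapes only.
vgg_reduced: Layers = {
    "conv1_1": [(64, 3, 3, 3), (64,)],
    "fc6": [(1024, 512, 3, 3), (1024,)],
    "drop6": [],
    "fc7": [(1024, 1024, 1, 1), (1024,)],
    "drop7": [],
    "fc8": [(1000, 1024), (1000,)],
    "prob": [],
}
ssd_net: Layers = {
    "conv1_1": [(64, 3, 3, 3), (64,)],
    "fc6": [(1024, 512, 3, 3), (1024,)],
    "fc7": [(1024, 1024, 1, 1), (1024,)],
    "conv6_1": [(256, 1024, 1, 1), (256,)],
}

copied, ignored = copy_by_name(vgg_reduced, ssd_net)
print("copied:", copied)
print("ignored:", ignored)
```

With these toy inputs the ignored list comes out as drop6, drop7, fc8, prob, the same four names the log reports.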

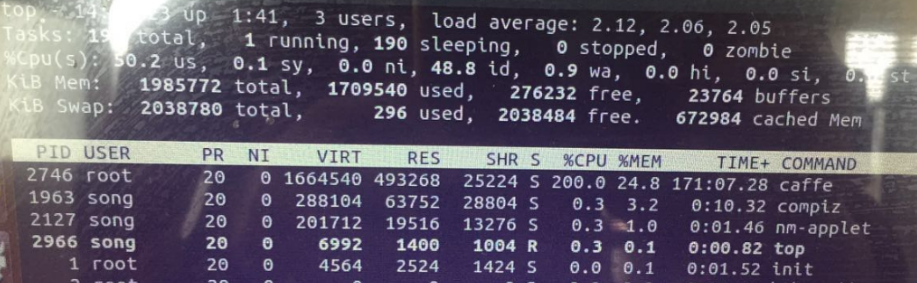

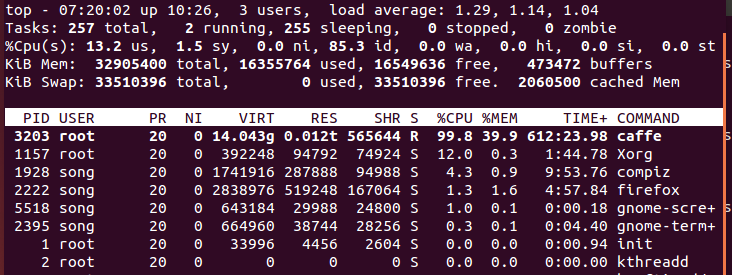

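I checked with top. The student laptop I bought five years ago, while writing my graduation thesis, really is an honest piece of hardware: a Lenovo G470 with a Core i3 (4 logical cores) and 2 GB of RAM.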

It then stayed in this state for the whole afternoon and into the evening. That did not seem normal, so I looked at the ssd_pascal.py code:

```python
# Directory which stores the job script and log file.
job_dir = "jobs/VGGNet/VOC0712/{}".format(job_name)
```

The .log file in that directory also stops at the same point, with nothing after it:

```
I1023 13:20:50.404901 2746 caffe.cpp:251] Starting Optimization
I1023 13:20:50.404959 2746 solver.cpp:294] Solving VGG_VOC0712_SSD_300x300_train
I1023 13:20:50.404994 2746 solver.cpp:295] Learning Rate Policy: multistep
I1023 13:20:50.461501 2746 blocking_queue.cpp:50] Data layer prefetch queue empty
```
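Rather than eyeballing the log, one can check how long ago it was last written. A minimal sketch, assuming the job name is SSD_300x300 (so the directory would be jobs/VGGNet/VOC0712/SSD_300x300; adjust to your own job_name): it picks the newest .log file in job_dir and reports its idle time and last few lines, using only the standard library.

```python
import os
import time
from collections import deque
from typing import List, Optional, Tuple


def newest_log(job_dir: str) -> Optional[str]:
    """Path of the most recently modified *.log file in job_dir, or None."""
    logs = [os.path.join(job_dir, name)
            for name in os.listdir(job_dir) if name.endswith(".log")]
    return max(logs, key=os.path.getmtime) if logs else None


def log_status(path: str, n: int = 4) -> Tuple[float, List[str]]:
    """Seconds since the log was last written, plus its last n lines."""
    idle = time.time() - os.path.getmtime(path)
    with open(path, errors="replace") as f:
        # A deque with maxlen keeps only the final n lines while streaming the file.
        tail = [line.rstrip("\n") for line in deque(f, maxlen=n)]
    return idle, tail


if __name__ == "__main__":
    job_dir = "jobs/VGGNet/VOC0712/SSD_300x300"  # assumed job_name
    log = newest_log(job_dir) if os.path.isdir(job_dir) else None
    if log is None:
        print("no .log file found in", job_dir)
    else:
        idle, tail = log_status(log)
        print(f"{log}: last written {idle / 60:.1f} min ago")
        print("\n".join(tail))
```

An idle time of hours, with "Data layer prefetch queue empty" as the last line, matches what I saw here.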

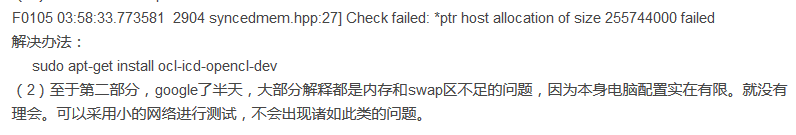

I had run ssd_pascal_ori.py once before, and its log was:

```
I1021 22:33:31.553313 4519 solver.cpp:294] Solving VGG_VOC0712_SSD_300x300_orig_train
I1021 22:33:31.553562 4519 solver.cpp:295] Learning Rate Policy: multistep
F1021 22:36:47.937511 4519 syncedmem.hpp:25] Check failed: *ptr host allocation of size 40934400 failed
*** Check failure stack trace: ***
    @ 0xb723eefc (unknown)
    @ 0xb723ee13 (unknown)
    @ 0xb723e85f (unknown)
    @ 0xb72418b0 (unknown)
    @ 0xb7640e5d caffe::SyncedMemory::mutable_cpu_data()
    @ 0xb746c763 caffe::Blob<>::mutable_cpu_diff()
    @ 0xb74df5d8 caffe::PoolingLayer<>::Backward_cpu()
    @ 0xb75c5a9c caffe::Net<>::BackwardFromTo()
    @ 0xb75c5bc6 caffe::Net<>::Backward()
    @ 0xb745ebbc caffe::Solver<>::Step()
    @ 0xb745f395 caffe::Solver<>::Solve()
    @ 0x804fc5d train()
    @ 0x804cfd0 main
    @ 0xb6e70a83 (unknown)
    @ 0x804d8e3 (unknown)
```

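The explanations I found by searching basically all say the machine is not powerful enough: the host (CPU-side) memory allocation failed. For example: http://blog.csdn.net/genius_zz/article/details/54348232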
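A back-of-the-envelope estimate shows why 2 GB is tight. Every Caffe blob holds float32 data plus a same-sized diff used in backpropagation (the stack trace fails exactly in `mutable_cpu_diff()` during the backward pass). Assuming a training batch of 32 images at 300x300 (my understanding of the ssd_pascal.py default, so treat it as an assumption), the outputs of VGG16's first two convolutions alone, 64 channels each with the spatial size kept by padding, are already larger than the RAM:

```python
MiB = 1024 ** 2
GiB = 1024 ** 3


def blob_bytes(n: int, c: int, h: int, w: int, bytes_per_elem: int = 4) -> int:
    """Bytes for one float32 N x C x H x W array (data or diff, not both)."""
    return n * c * h * w * bytes_per_elem


batch = 32  # assumed training batch size
# conv1_1 and conv1_2 each output 64 channels at 300x300.
one_top = blob_bytes(batch, 64, 300, 300)
# Two such outputs, each with a data array and a diff array.
first_two_layers = 2 * 2 * one_top

print(f"one conv1 output blob: {one_top / MiB:.1f} MiB")
print(f"conv1_1 + conv1_2, data + diff: {first_two_layers / GiB:.2f} GiB")
print(f"allocation that failed in the log: {40934400 / MiB:.1f} MiB")
```

This prints about 703 MiB per blob and 2.75 GiB for the two layers. It ignores the weights, all the other layers, Caffe's scratch buffers and the test net, so the real footprint is larger; the point is only the order of magnitude. It also suggests that by the time the ~39 MiB allocation in the log failed, memory was already exhausted.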

So the hardware is simply not up to it. Running Caffe on a CPU may be fine for tinkering, but for real experiments and training you need a GPU.

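In short, if you really want to build something with CNNs, rather than just study the source code, a GPU-equipped machine + Linux + Caffe is the sensible setup.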

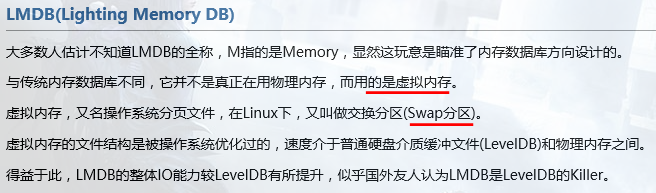

A turning point:

Reading http://www.cnblogs.com/neopenx/p/5269852.html gave me an idea: since training falls back on virtual memory, could I simply enlarge the swap partition?

I followed this guide: http://www.cnblogs.com/ericsun/archive/2013/08/17/3263739.html

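Before, the machine had 2 GB of physical RAM + 2 GB of swap. After enlarging it to 2 GB + 3 GB, training did start, but the good times did not last: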

I1025 12:11:56.203306 2755 caffe.cpp:251] Starting Optimization

I1025 12:11:56.203392 2755 solver.cpp:294] Solving VGG_VOC0712_SSD_300x300_train

I1025 12:11:56.203433 2755 solver.cpp:295] Learning Rate Policy: multistep

I1025 12:13:11.956351 2755 solver.cpp:243] Iteration 0, loss = 29.3416

I1025 12:13:12.201416 2755 solver.cpp:259] Train net output #0: mbox_loss = 29.3416 (* 1 = 29.3416 loss)

I1025 12:13:12.268118 2755 sgd_solver.cpp:138] Iteration 0, lr = 0.001

I1025 12:13:14.233126 2755 blocking_queue.cpp:50] Data layer prefetch queue empty

With 2 GB + 6 GB and with 2 GB + 8 GB, exactly the same thing happened.
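When trying different swap sizes, it is worth confirming that the kernel actually sees the new swap before starting a long training run. A minimal sketch that parses /proc/meminfo (Linux only; its values are in kB, i.e. KiB) and summarises RAM and swap in GiB:

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}, values in kB."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def summary(info: Dict[str, int]) -> str:
    kib_per_gib = 1024 * 1024
    return (f"RAM {info['MemTotal'] / kib_per_gib:.1f} GiB, "
            f"swap {info['SwapTotal'] / kib_per_gib:.1f} GiB "
            f"({info['SwapFree'] / kib_per_gib:.1f} GiB free)")


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        print(summary(parse_meminfo(f.read())))
```

One possible reason extra swap made no difference, which is an inference I have not verified: the addresses in the earlier stack trace (0x0804..., 0xb7...) look like a 32-bit build, and a 32-bit process on Linux can address only about 3 GB, however much swap there is. `uname -m` shows whether the system is i686 or x86_64.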

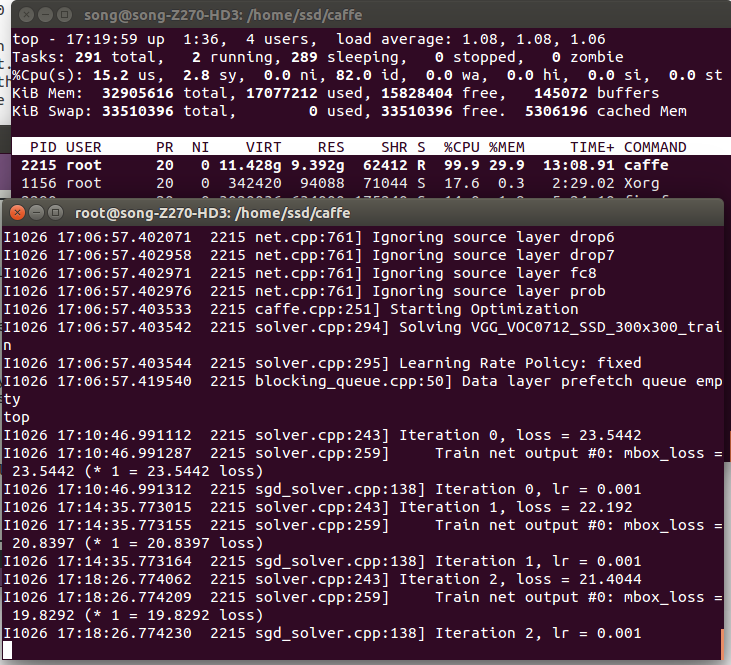

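Postscript: I installed Ubuntu on a desktop machine, the same version as on the little laptop (14.04), so once the dependency packages were downloaded I could basically copy the old directory over and compile it directly. A test run of CIFAR-10 trained fine.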

Addendum: SSD on VOC2007:

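The "Data layer prefetch queue empty" message showed up here too, but training does run, albeit very slowly: CPU at 100% and memory usage climbing steadily. After a whole night the old laptop was still stuck where it was, while the new desktop got this far: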

I1026 21:09:32.796375 3203 caffe.cpp:251] Starting Optimization

I1026 21:09:32.796382 3203 solver.cpp:294] Solving VGG_VOC0712_SSD_300x300_train

I1026 21:09:32.796386 3203 solver.cpp:295] Learning Rate Policy: fixed

I1026 21:09:32.813050 3203 blocking_queue.cpp:50] Data layer prefetch queue empty

I1026 21:13:19.556558 3203 solver.cpp:243] Iteration 0, loss = 22.509

I1026 21:13:19.556725 3203 solver.cpp:259] Train net output #0: mbox_loss = 22.509 (* 1 = 22.509 loss)

I1026 21:13:19.556732 3203 sgd_solver.cpp:138] Iteration 0, lr = 0.001

I1026 21:17:03.379603 3203 solver.cpp:243] Iteration 1, loss = 21.7313

I1026 21:17:03.379711 3203 solver.cpp:259] Train net output #0: mbox_loss = 20.9537 (* 1 = 20.9537 loss)

I1026 21:17:03.379731 3203 sgd_solver.cpp:138] Iteration 1, lr = 0.001

I1026 21:20:49.064519 3203 solver.cpp:243] Iteration 2, loss = 20.8767

I1026 21:20:49.064656 3203 solver.cpp:259] Train net output #0: mbox_loss = 19.1674 (* 1 = 19.1674 loss)

I1026 21:20:49.064663 3203 sgd_solver.cpp:138] Iteration 2, lr = 0.001

I1026 21:24:33.324839 3203 solver.cpp:243] Iteration 3, loss = 20.0986

I1026 21:24:33.324995 3203 solver.cpp:259] Train net output #0: mbox_loss = 17.7645 (* 1 = 17.7645 loss)

I1026 21:24:33.325002 3203 sgd_solver.cpp:138] Iteration 3, lr = 0.001

I1026 21:28:18.298053 3203 solver.cpp:243] Iteration 4, loss = 19.3101

I1026 21:28:18.298166 3203 solver.cpp:259] Train net output #0: mbox_loss = 16.1561 (* 1 = 16.1561 loss)

I1026 21:28:18.298176 3203 sgd_solver.cpp:138] Iteration 4, lr = 0.001

I1026 21:32:03.106290 3203 solver.cpp:243] Iteration 5, loss = 18.8092

I1026 21:32:03.106421 3203 solver.cpp:259] Train net output #0: mbox_loss = 16.3044 (* 1 = 16.3044 loss)

I1026 21:32:03.106428 3203 sgd_solver.cpp:138] Iteration 5, lr = 0.001

I1026 21:35:47.668949 3203 solver.cpp:243] Iteration 6, loss = 18.3751

I1026 21:35:47.669019 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.7708 (* 1 = 15.7708 loss)

I1026 21:35:47.669026 3203 sgd_solver.cpp:138] Iteration 6, lr = 0.001

I1026 21:39:33.073357 3203 solver.cpp:243] Iteration 7, loss = 18.1015

I1026 21:39:33.073503 3203 solver.cpp:259] Train net output #0: mbox_loss = 16.1863 (* 1 = 16.1863 loss)

I1026 21:39:33.073511 3203 sgd_solver.cpp:138] Iteration 7, lr = 0.001

I1026 21:43:16.992905 3203 solver.cpp:243] Iteration 8, loss = 17.9009

I1026 21:43:16.992954 3203 solver.cpp:259] Train net output #0: mbox_loss = 16.2956 (* 1 = 16.2956 loss)

I1026 21:43:16.992960 3203 sgd_solver.cpp:138] Iteration 8, lr = 0.001

I1026 21:47:02.906033 3203 solver.cpp:243] Iteration 9, loss = 17.7352

I1026 21:47:02.906191 3203 solver.cpp:259] Train net output #0: mbox_loss = 16.2439 (* 1 = 16.2439 loss)

I1026 21:47:02.906199 3203 sgd_solver.cpp:138] Iteration 9, lr = 0.001

I1026 21:47:02.986310 3203 solver.cpp:433] Iteration 10, Testing net (#0)

I1026 21:47:02.986764 3203 net.cpp:693] Ignoring source layer mbox_loss

I1027 01:07:59.728220 3203 solver.cpp:546] Test net output #0: detection_eval = 8.94167e-05

I1027 01:11:44.542400 3203 solver.cpp:243] Iteration 10, loss = 17.0653

I1027 01:11:44.542552 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.8105 (* 1 = 15.8105 loss)

I1027 01:11:44.542562 3203 sgd_solver.cpp:138] Iteration 10, lr = 0.001

I1027 01:15:28.281282 3203 solver.cpp:243] Iteration 11, loss = 16.5182

I1027 01:15:28.281368 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.4822 (* 1 = 15.4822 loss)

I1027 01:15:28.281375 3203 sgd_solver.cpp:138] Iteration 11, lr = 0.001

I1027 01:19:13.447082 3203 solver.cpp:243] Iteration 12, loss = 16.1136

I1027 01:19:13.447216 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.1213 (* 1 = 15.1213 loss)

I1027 01:19:13.447227 3203 sgd_solver.cpp:138] Iteration 12, lr = 0.001

I1027 01:22:56.734020 3203 solver.cpp:243] Iteration 13, loss = 15.9099

I1027 01:22:56.734146 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.7285 (* 1 = 15.7285 loss)

I1027 01:22:56.734169 3203 sgd_solver.cpp:138] Iteration 13, lr = 0.001

I1027 01:26:41.813959 3203 solver.cpp:243] Iteration 14, loss = 15.8432

I1027 01:26:41.814071 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.489 (* 1 = 15.489 loss)

I1027 01:26:41.814079 3203 sgd_solver.cpp:138] Iteration 14, lr = 0.001

I1027 01:30:25.233520 3203 solver.cpp:243] Iteration 15, loss = 15.7216

I1027 01:30:25.233619 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.0885 (* 1 = 15.0885 loss)

I1027 01:30:25.233626 3203 sgd_solver.cpp:138] Iteration 15, lr = 0.001

I1027 01:34:09.895038 3203 solver.cpp:243] Iteration 16, loss = 15.666

I1027 01:34:09.895109 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.2142 (* 1 = 15.2142 loss)

I1027 01:34:09.895117 3203 sgd_solver.cpp:138] Iteration 16, lr = 0.001

I1027 01:37:53.745384 3203 solver.cpp:243] Iteration 17, loss = 15.528

I1027 01:37:53.745455 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.8065 (* 1 = 14.8065 loss)

I1027 01:37:53.745461 3203 sgd_solver.cpp:138] Iteration 17, lr = 0.001

I1027 01:41:38.118726 3203 solver.cpp:243] Iteration 18, loss = 15.4515

I1027 01:41:38.118793 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.5301 (* 1 = 15.5301 loss)

I1027 01:41:38.118799 3203 sgd_solver.cpp:138] Iteration 18, lr = 0.001

I1027 01:45:22.254400 3203 solver.cpp:243] Iteration 19, loss = 15.3034

I1027 01:45:22.254480 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.7633 (* 1 = 14.7633 loss)

I1027 01:45:22.254487 3203 sgd_solver.cpp:138] Iteration 19, lr = 0.001

I1027 01:45:22.331688 3203 solver.cpp:433] Iteration 20, Testing net (#0)

I1027 01:45:22.331750 3203 net.cpp:693] Ignoring source layer mbox_loss

W1027 05:06:09.821015 3203 solver.cpp:524] Missing true_pos for label: 1

W1027 05:06:09.821113 3203 solver.cpp:524] Missing true_pos for label: 2

W1027 05:06:09.821122 3203 solver.cpp:524] Missing true_pos for label: 3

W1027 05:06:09.821125 3203 solver.cpp:524] Missing true_pos for label: 4

W1027 05:06:09.821130 3203 solver.cpp:524] Missing true_pos for label: 5

W1027 05:06:09.821131 3203 solver.cpp:524] Missing true_pos for label: 6

W1027 05:06:09.881377 3203 solver.cpp:524] Missing true_pos for label: 8

W1027 05:06:09.881528 3203 solver.cpp:524] Missing true_pos for label: 10

W1027 05:06:09.881533 3203 solver.cpp:524] Missing true_pos for label: 11

W1027 05:06:09.881536 3203 solver.cpp:524] Missing true_pos for label: 12

W1027 05:06:09.881539 3203 solver.cpp:524] Missing true_pos for label: 13

W1027 05:06:09.881542 3203 solver.cpp:524] Missing true_pos for label: 14

W1027 05:06:09.965651 3203 solver.cpp:524] Missing true_pos for label: 17

W1027 05:06:09.965673 3203 solver.cpp:524] Missing true_pos for label: 19

W1027 05:06:09.965677 3203 solver.cpp:524] Missing true_pos for label: 20

I1027 05:06:09.965680 3203 solver.cpp:546] Test net output #0: detection_eval = 0.00013241

I1027 05:09:53.990563 3203 solver.cpp:243] Iteration 20, loss = 15.2165

I1027 05:09:53.990700 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.9418 (* 1 = 14.9418 loss)

I1027 05:09:53.990710 3203 sgd_solver.cpp:138] Iteration 20, lr = 0.001

I1027 05:13:38.261768 3203 solver.cpp:243] Iteration 21, loss = 15.1906

I1027 05:13:38.261819 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.2229 (* 1 = 15.2229 loss)

I1027 05:13:38.261828 3203 sgd_solver.cpp:138] Iteration 21, lr = 0.001

I1027 05:17:22.776798 3203 solver.cpp:243] Iteration 22, loss = 15.1411

I1027 05:17:22.776938 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.6261 (* 1 = 14.6261 loss)

I1027 05:17:22.776948 3203 sgd_solver.cpp:138] Iteration 22, lr = 0.001

I1027 05:21:06.807641 3203 solver.cpp:243] Iteration 23, loss = 15.092

I1027 05:21:06.807750 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.2372 (* 1 = 15.2372 loss)

I1027 05:21:06.807759 3203 sgd_solver.cpp:138] Iteration 23, lr = 0.001

I1027 05:24:51.765369 3203 solver.cpp:243] Iteration 24, loss = 15.0714

I1027 05:24:51.765485 3203 solver.cpp:259] Train net output #0: mbox_loss = 15.2836 (* 1 = 15.2836 loss)

I1027 05:24:51.765493 3203 sgd_solver.cpp:138] Iteration 24, lr = 0.001

I1027 05:28:35.644573 3203 solver.cpp:243] Iteration 25, loss = 15.0133

I1027 05:28:35.644652 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.5068 (* 1 = 14.5068 loss)

I1027 05:28:35.644659 3203 sgd_solver.cpp:138] Iteration 25, lr = 0.001

I1027 05:32:20.744153 3203 solver.cpp:243] Iteration 26, loss = 14.9701

I1027 05:32:20.744256 3203 solver.cpp:259] Train net output #0: mbox_loss = 14.7832 (* 1 = 14.7832 loss)

I1027 05:32:20.744263 3203 sgd_solver.cpp:138] Iteration 26, lr = 0.001

I1027 05:36:04.126538 3203 solver.cpp:243] Iteration 27, loss = 14.7926

I1027 05:36:04.126641 3203 solver.cpp:259] Train net output #0: mbox_loss = 13.0314 (* 1 = 13.0314 loss)

I1027 05:36:04.126659 3203 sgd_solver.cpp:138] Iteration 27, lr = 0.001

I1027 05:39:49.326182 3203 solver.cpp:243] Iteration 28, loss = 14.6038

I1027 05:39:49.326277 3203 solver.cpp:259] Train net output #0: mbox_loss = 13.6422 (* 1 = 13.6422 loss)

I1027 05:39:49.326295 3203 sgd_solver.cpp:138] Iteration 28, lr = 0.001

I1027 05:43:32.725708 3203 solver.cpp:243] Iteration 29, loss = 14.4194

I1027 05:43:32.725805 3203 solver.cpp:259] Train net output #0: mbox_loss = 12.9187 (* 1 = 12.9187 loss)

I1027 05:43:32.725812 3203 sgd_solver.cpp:138] Iteration 29, lr = 0.001

I1027 05:43:32.803719 3203 solver.cpp:433] Iteration 30, Testing net (#0)

I1027 05:43:32.803767 3203 net.cpp:693] Ignoring source layer mbox_loss
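A note on reading these lines: the value in `Iteration N, loss = ...` is not the loss of iteration N alone. The solver reports a running mean over the last `average_loss` iterations, while `Train net output #0: mbox_loss` is the current iteration's value. The numbers above fit a window of 10; for example, the iteration 1 value 21.7313 is the mean of 22.509 and 20.9537. The window size is inferred from the log, not read from the generated solver file. A sketch that reproduces the reported values from the per-iteration mbox_loss:

```python
from collections import deque
from typing import Deque, Iterable, List


def smoothed_losses(losses: Iterable[float], window: int = 10) -> List[float]:
    """Running mean over the last `window` losses (window size inferred from the log)."""
    recent: Deque[float] = deque(maxlen=window)
    out: List[float] = []
    for loss in losses:
        recent.append(loss)
        out.append(sum(recent) / len(recent))
    return out


# Per-iteration mbox_loss for iterations 0-10, copied from the desktop log above.
mbox_loss = [22.509, 20.9537, 19.1674, 17.7645, 16.1561, 16.3044,
             15.7708, 16.1863, 16.2956, 16.2439, 15.8105]

for i, value in enumerate(smoothed_losses(mbox_loss)):
    print(f"Iteration {i}, loss = {value:.4f}")
```

So a smooth downward curve in the `loss =` lines partly reflects the averaging; the raw mbox_loss values are noisier.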

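Then I happened to notice that the desktop has a 1050 with 4 GB of video memory. Awkward. CUDA isn't installed yet, though, because NVIDIA's website has been down for maintenance since last night...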
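The glog timestamps in the desktop log above put numbers on how slow CPU training is. A sketch that parses the `I1026 21:13:19.556558` prefix and computes the average time per training iteration and the length of the first test pass. glog writes month and day but no year, so the year 2017 is an assumption (it only matters for runs that cross New Year or Feb 29). The max_iter of 120000 is also an assumption about ssd_pascal.py, used only for a rough projection.

```python
from datetime import datetime


def glog_time(line: str, year: int = 2017) -> datetime:
    """Timestamp of a glog line such as 'I1026 21:13:19.556558 3203 solver.cpp:243] ...'."""
    severity_and_date, clock = line.split()[:2]
    # Drop the severity letter (I/W/E/F) and prepend the assumed year.
    return datetime.strptime(f"{year}{severity_and_date[1:]} {clock}",
                             "%Y%m%d %H:%M:%S.%f")


iter0 = glog_time("I1026 21:13:19.556558 3203 solver.cpp:243] Iteration 0, loss = 22.509")
iter9 = glog_time("I1026 21:47:02.906033 3203 solver.cpp:243] Iteration 9, loss = 17.7352")
test_start = glog_time("I1026 21:47:02.986310 3203 solver.cpp:433] Iteration 10, Testing net (#0)")
test_end = glog_time("I1027 01:07:59.728220 3203 solver.cpp:546] Test net output #0: detection_eval = 8.94167e-05")

per_iter = (iter9 - iter0).total_seconds() / 9  # 9 intervals between iterations 0 and 9
test_pass = test_end - test_start
max_iter = 120000  # assumed value, not taken from this log
days = max_iter * per_iter / 86400

print(f"{per_iter:.0f} s per training iteration")
print(f"first test pass: {test_pass}")
print(f"{max_iter} iterations at that rate: about {days:.0f} days, excluding tests")
```

That comes to roughly 225 s per iteration and about 3 h 21 min per test pass, i.e. on the order of 300 days for a full run on this CPU, which is why the GPU matters.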