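Deduplication rules

In a spider, passing dont_filter=True to a Request bypasses deduplication. Scrapy deduplicates requests by default, so how does it do that internally?

    from scrapy.dupefilters import RFPDupeFilter

(In Scrapy versions before 1.0 the module was named scrapy.dupefilter.)

When the filter is created, Scrapy first calls from_settings, which reads the DUPEFILTER_DEBUG setting, and then runs __init__, which creates the set self.fingerprints = set(). After that, request_seen is called for each request that reaches the scheduler. This means we can write our own deduplication rule simply by subclassing BaseDupeFilter.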

    class RFPDupeFilter(BaseDupeFilter):
        """Request Fingerprint duplicates filter"""

        def __init__(self, path=None, debug=False):
            self.file = None
            self.fingerprints = set()
            self.logdupes = True
            self.debug = debug
            self.logger = logging.getLogger(__name__)
            if path:
                self.file = open(os.path.join(path, 'requests.seen'), 'a+')
                self.file.seek(0)
                self.fingerprints.update(x.rstrip() for x in self.file)

        @classmethod
        def from_settings(cls, settings):
            debug = settings.getbool('DUPEFILTER_DEBUG')
            return cls(job_dir(settings), debug)

        def request_seen(self, request):
            fp = self.request_fingerprint(request)
            if fp in self.fingerprints:
                return True
            self.fingerprints.add(fp)
            if self.file:
                self.file.write(fp + os.linesep)

        def request_fingerprint(self, request):
            return request_fingerprint(request)

        def close(self, reason):
            if self.file:
                self.file.close()

        def log(self, request, spider):
            if self.debug:
                msg = "Filtered duplicate request: %(request)s"
                self.logger.debug(msg, {'request': request}, extra={'spider': spider})
            elif self.logdupes:
                msg = ("Filtered duplicate request: %(request)s"
                       " - no more duplicates will be shown"
                       " (see DUPEFILTER_DEBUG to show all duplicates)")
                self.logger.debug(msg, {'request': request}, extra={'spider': spider})
                self.logdupes = False

            spider.crawler.stats.inc_value('dupefilter/filtered', spider=spider)
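The core idea of the class above, a set of fingerprints that is checked and then updated, can be sketched without Scrapy. This is only a minimal illustration: simple_fingerprint and SetDupeFilter are hypothetical names, and hashing just the method and URL is a simplifying assumption (Scrapy's own request_fingerprint takes more of the request into account).

```python
import hashlib


def simple_fingerprint(method, url):
    """Hash the method and URL into a fixed-length hex string (simplified)."""
    data = ("%s %s" % (method.upper(), url)).encode("utf-8")
    return hashlib.sha1(data).hexdigest()


class SetDupeFilter:
    """Keeps fingerprints in a set, like self.fingerprints in RFPDupeFilter."""

    def __init__(self):
        self.fingerprints = set()

    def request_seen(self, method, url):
        fp = simple_fingerprint(method, url)
        if fp in self.fingerprints:
            return True   # duplicate: the caller should drop the request
        self.fingerprints.add(fp)
        return False      # first time this request is seen


seen_filter = SetDupeFilter()
first = seen_filter.request_seen("GET", "http://example.com/a")
second = seen_filter.request_seen("get", "http://example.com/a")
other = seen_filter.request_seen("GET", "http://example.com/b")
print(first, second, other)
```

The second call is reported as seen because the method is normalized before hashing, so both calls produce the same fingerprint.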

Scrapy uses scrapy.dupefilters.RFPDupeFilter for deduplication by default. The related settings are:

    DUPEFILTER_CLASS = 'scrapy.dupefilters.RFPDupeFilter'
    DUPEFILTER_DEBUG = False
    JOBDIR = "directory for the log of visited requests, e.g. /root/"  # final path: /root/requests.seen

A custom deduplication rule backed by a Redis set:

    import redis
    from scrapy.dupefilters import BaseDupeFilter
    from scrapy.utils.request import request_fingerprint


    class Myfilter(BaseDupeFilter):
        def __init__(self, key):
            self.conn = None
            self.key = key

        @classmethod
        def from_settings(cls, settings):
            key = settings.get('DUP_REDIS_KEY')
            return cls(key)

        def open(self):
            self.conn = redis.Redis(host='127.0.0.1', port=6379)

        def request_seen(self, request):
            fp = request_fingerprint(request)
            # SADD returns 0 when the member is already in the set
            ret = self.conn.sadd(self.key, fp)
            return ret == 0

Note: Scrapy's request_fingerprint hashes each Request (it is a hash, not encryption) into a fixed-length string, which is convenient to store.

Once the custom rule is written, enabling it only takes a change in the settings file:

In settings.py, set DUPEFILTER_CLASS = 'import path of the custom dedup class'.

So deduplication is decided by two factors: the dont_filter argument of the Request and the dupefilter class. How are the two combined? That is decided by the scheduler's enqueue_request method.

    # scrapy/core/scheduler.py
    class Scheduler(object):

        def enqueue_request(self, request):
            if not request.dont_filter and self.df.request_seen(request):
                self.df.log(request, self.spider)
                return False
            dqok = self._dqpush(request)
            if dqok:
                self.stats.inc_value('scheduler/enqueued/disk', spider=self.spider)
            else:
                self._mqpush(request)
                self.stats.inc_value('scheduler/enqueued/memory', spider=self.spider)
            self.stats.inc_value('scheduler/enqueued', spider=self.spider)
            return True
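The condition at the top of enqueue_request can be read as a small truth table. The sketch below is only an illustration; should_enqueue is a hypothetical helper that takes the two factors as plain booleans instead of a real Request and dupefilter.

```python
def should_enqueue(dont_filter, already_seen):
    """Mirror of: if not request.dont_filter and self.df.request_seen(request)."""
    if not dont_filter and already_seen:
        return False  # filtered out as a duplicate
    return True       # pushed to the disk or memory queue


# Evaluate all four combinations of the two factors.
decisions = {
    (dont_filter, already_seen): should_enqueue(dont_filter, already_seen)
    for dont_filter in (False, True)
    for already_seen in (False, True)
}
for key, value in sorted(decisions.items()):
    print(key, "->", value)
```

Only one combination rejects the request: dont_filter is False and the request has been seen before. Because `and` short-circuits, the real method does not even call request_seen when dont_filter is True.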

Scheduler

1. Use a queue (breadth-first)

2. Use a stack (depth-first)

3. Use a priority queue (for example a Redis sorted set)
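The three strategies differ only in which pending request is taken out next. A minimal in-memory sketch follows; the URL names are made up, and heapq stands in for the Redis sorted set mentioned above, which is an assumption made to keep the example self-contained.

```python
import heapq
from collections import deque

urls = ["a", "b", "c"]

# 1. Queue (FIFO): the oldest request leaves first, giving breadth-first order.
fifo = deque(urls)
fifo_order = [fifo.popleft() for _ in range(len(urls))]

# 2. Stack (LIFO): the newest request leaves first, giving depth-first order.
lifo = list(urls)
lifo_order = [lifo.pop() for _ in range(len(urls))]

# 3. Priority queue: the highest priority leaves first. heapq is a min-heap,
#    so the priority is negated; the counter keeps insertion order on ties.
heap = []
for counter, (priority, url) in enumerate([(0, "a"), (5, "b"), (1, "c")]):
    heapq.heappush(heap, (-priority, counter, url))
priority_order = [heapq.heappop(heap)[2] for _ in range(3)]

print(fifo_order, lifo_order, priority_order)
```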

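Downloader middleware

On its way to the downloader, a Request passes through a chain of middlewares. When the request goes out, it passes through the process_request method of every downloader middleware; when the download finishes and the response comes back, it passes through their process_response methods. What are these middlewares for?

Purpose: to apply the same pre-download or post-download processing to every Request.

We can write our own middleware to add request headers on the way out, or to read cookies on the way back.

A custom downloader middleware only takes effect after it is registered in settings.py.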

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    DOWNLOADER_MIDDLEWARES = {
        'myspider.middlewares.MyspiderDownloaderMiddleware': 543,
    }

If you want to change the URL at download time, you can do it in process_request, although this is rarely done:

    class MyspiderDownloaderMiddleware(object):

        def process_request(self, request, spider):
            request._set_url('the new url')
            return None

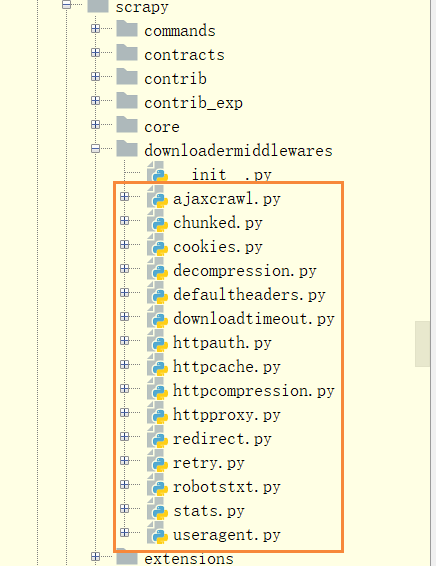

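We can add request headers or cookies in a middleware, but Scrapy already ships a lot of this, so we only need to use it; a custom middleware should add only what Scrapy lacks. Which downloader middlewares does Scrapy provide?

For example, the User-Agent header we usually send is handled in useragent.py, and redirect.py handles redirects: when a request is redirected, Scrapy follows the redirect for us.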

    class BaseRedirectMiddleware(object):

        enabled_setting = 'REDIRECT_ENABLED'

        def __init__(self, settings):
            if not settings.getbool(self.enabled_setting):
                raise NotConfigured

            self.max_redirect_times = settings.getint('REDIRECT_MAX_TIMES')
            self.priority_adjust = settings.getint('REDIRECT_PRIORITY_ADJUST')

        @classmethod
        def from_crawler(cls, crawler):
            return cls(crawler.settings)

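In settings.py we can cap the number of redirects that are followed with REDIRECT_MAX_TIMES, or turn redirect handling off entirely with REDIRECT_ENABLED, as the code above shows.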

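The downloader middlewares also handle cookies for us.

When the cookie middleware is instantiated it creates a defaultdict. A defaultdict is built from a factory: when a missing key is accessed, the factory is called and its result is stored as the value. For example, after ret = defaultdict(list) and s = ret[1], ret contains the key 1 with the value [].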
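The defaultdict behaviour described above is easy to check directly. In this small sketch a plain dict stands in for the middleware's CookieJar class, which is an assumption made to keep the example free of Scrapy imports; the key names are made up.

```python
from collections import defaultdict

ret = defaultdict(list)
s = ret[1]        # key 1 is missing, so list() is called and stored under it
s.append("x")     # s is the very list stored in ret

# One "jar" per key, created on first access (dict stands in for CookieJar).
jars = defaultdict(dict)
jars["user_a"]["session"] = "abc"
default_jar = jars[None]   # the key used when meta carries no "cookiejar"

print(dict(ret), dict(jars))
```

Accessing a key is enough to create its entry, which is why the middleware never has to check whether a jar already exists.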

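When a request comes in, the middleware reads the cookiejar value from the Request's meta, cookiejarkey = request.meta.get("cookiejar"), and uses it as the key into the dictionary created at instantiation: jar = self.jars[cookiejarkey]. At this point self.jars = {cookiejarkey: CookieJar object}. The cookies stored in that CookieJar are then attached to the outgoing request.

After the download completes, the middleware extracts the cookies from the response with jar.extract_cookies(response, request) and adds them to the same CookieJar.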

    class CookiesMiddleware(object):
        """This middleware enables working with sites that need cookies"""

        def __init__(self, debug=False):
            self.jars = defaultdict(CookieJar)
            self.debug = debug

        @classmethod
        def from_crawler(cls, crawler):
            if not crawler.settings.getbool('COOKIES_ENABLED'):
                raise NotConfigured
            return cls(crawler.settings.getbool('COOKIES_DEBUG'))

        def process_request(self, request, spider):
            if request.meta.get('dont_merge_cookies', False):
                return

            cookiejarkey = request.meta.get("cookiejar")
            jar = self.jars[cookiejarkey]
            cookies = self._get_request_cookies(jar, request)
            for cookie in cookies:
                jar.set_cookie_if_ok(cookie, request)

            # set Cookie header
            request.headers.pop('Cookie', None)
            jar.add_cookie_header(request)
            self._debug_cookie(request, spider)

        def process_response(self, request, response, spider):
            if request.meta.get('dont_merge_cookies', False):
                return response

            # extract cookies from Set-Cookie and drop invalid/expired cookies
            cookiejarkey = request.meta.get("cookiejar")
            jar = self.jars[cookiejarkey]
            jar.extract_cookies(response, request)
            self._debug_set_cookie(response, spider)

            return response

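So when sending a request we can pass meta={"cookiejar": any_value}, and the next request only needs to carry the same meta to reuse those cookies. To avoid carrying the current cookies, pass a different key, for example meta={"cookiejar": another_value}. Later requests can then choose whichever jar they need.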

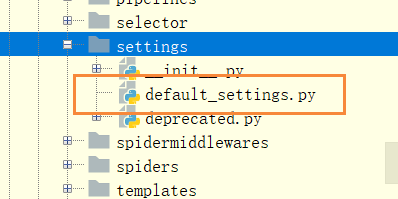

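Scrapy provides many built-in middlewares. A custom middleware has to be registered in the settings file, yet the built-in ones do not appear there, because they are configured in Scrapy's default settings file.

Open that default settings file to see the built-in middlewares and their priority numbers: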

    DOWNLOADER_MIDDLEWARES_BASE = {
        # Engine side
        'scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware': 100,
        'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware': 300,
        'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware': 350,
        'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': 400,
        'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 500,
        'scrapy.downloadermiddlewares.retry.RetryMiddleware': 550,
        'scrapy.downloadermiddlewares.ajaxcrawl.AjaxCrawlMiddleware': 560,
        'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware': 580,
        'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 590,
        'scrapy.downloadermiddlewares.redirect.RedirectMiddleware': 600,
        'scrapy.downloadermiddlewares.cookies.CookiesMiddleware': 700,
        'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
        'scrapy.downloadermiddlewares.stats.DownloaderStats': 850,
        'scrapy.downloadermiddlewares.httpcache.HttpCacheMiddleware': 900,
        # Downloader side
    }

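So when a custom middleware does the same job as a built-in one, its number must be larger than the built-in's if it works on the request, and smaller if it works on the response. Requests pass through the middlewares in ascending order and responses in descending order, so otherwise the built-in middleware runs after ours, may overwrite what we set, and our change has no effect.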
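The ordering rule can be simulated with plain dictionaries. This is only a sketch under stated assumptions: the three middleware names and their numbers are hypothetical, and "the last writer wins" stands in for a built-in middleware overwriting a value set by a custom one.

```python
# Hypothetical middlewares and their priority numbers.
priorities = {"builtin": 500, "custom": 543, "late_builtin": 700}

# process_request runs in ascending order of the number ...
request_order = sorted(priorities, key=priorities.get)
# ... and process_response runs in the reverse (descending) order.
response_order = request_order[::-1]

# Both "builtin" and "custom" write the same value; the one that runs
# later on the request path decides the final result.
header = None
for name in request_order:
    if name in ("builtin", "custom"):
        header = name

print(request_order, response_order, header)
```

With 543 the custom middleware runs after the built-in one on the request path, so its value survives; with a number below 500 the built-in value would be written last instead.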

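These middleware methods also have return values. If process_request returns None, the remaining middlewares continue to run. If it returns a Response, the remaining process_request methods are skipped and the process_response methods of all middlewares run (all of them, unlike Django's middleware). How do we get a Response to return? We can fabricate one: fetch some other URL ourselves, for example with the requests module, and wrap the result in a Response from scrapy.http, or simply instantiate a Response object directly. process_request can also return a Request, which abandons the current request and sends the returned Request to the scheduler, or it can raise an exception.

On the way back, process_response must return something: normally the Response, but it may also return a Request or raise an exception.

A proxy can also be set in a downloader middleware.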

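Spider middleware

Item and Request objects yielded by the spider pass through the process_spider_output method of each spider middleware on their way to the engine, which dispatches them. After a download completes, the response passes through the process_spider_input method of each spider middleware before it reaches the spider.

Return values: process_spider_input must return None or raise an exception; process_spider_output must return an iterable of Request or Item objects.

As before, a custom spider middleware has to be registered in the settings file.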

    # Enable or disable spider middlewares
    # See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
    SPIDER_MIDDLEWARES = {
        'myspider.middlewares.MyspiderSpiderMiddleware': 543,
    }

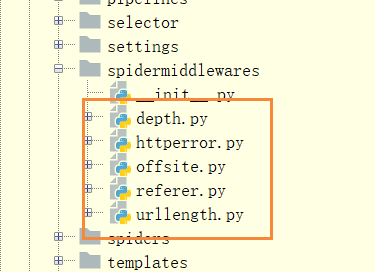

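So what are spider middlewares for? How are the crawl depth limit (the DEPTH_LIMIT setting) and request priority implemented? They are implemented by built-in spider middlewares.

Some of the spider middlewares built into Scrapy:

The depth limit and the priority adjustment both live in depth.py.

When the depth middleware is loaded, from_crawler is called first and reads several settings: DEPTH_LIMIT (maximum crawl depth), DEPTH_PRIORITY (priority adjustment per level) and DEPTH_STATS_VERBOSE (whether to count the requests at each depth). Then process_spider_output checks whether depth has been set; if not, it sets depth = 0 in the meta of the current response. Each level then adds one, depth = response.meta['depth'] + 1, which is how the depth is tracked and limited.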

    class DepthMiddleware(object):

        def __init__(self, maxdepth, stats=None, verbose_stats=False, prio=1):
            self.maxdepth = maxdepth
            self.stats = stats
            self.verbose_stats = verbose_stats
            self.prio = prio

        @classmethod
        def from_crawler(cls, crawler):
            settings = crawler.settings
            maxdepth = settings.getint('DEPTH_LIMIT')
            verbose = settings.getbool('DEPTH_STATS_VERBOSE')
            prio = settings.getint('DEPTH_PRIORITY')
            return cls(maxdepth, crawler.stats, verbose, prio)

        def process_spider_output(self, response, result, spider):
            def _filter(request):
                if isinstance(request, Request):
                    depth = response.meta['depth'] + 1
                    request.meta['depth'] = depth
                    if self.prio:
                        request.priority -= depth * self.prio
                    if self.maxdepth and depth > self.maxdepth:
                        logger.debug(
                            "Ignoring link (depth > %(maxdepth)d): %(requrl)s ",
                            {'maxdepth': self.maxdepth, 'requrl': request.url},
                            extra={'spider': spider}
                        )
                        return False
                    elif self.stats:
                        if self.verbose_stats:
                            self.stats.inc_value('request_depth_count/%s' % depth,
                                                 spider=spider)
                        self.stats.max_value('request_depth_max', depth,
                                             spider=spider)
                return True

            # base case (depth=0)
            if self.stats and 'depth' not in response.meta:
                response.meta['depth'] = 0
                if self.verbose_stats:
                    self.stats.inc_value('request_depth_count/0', spider=spider)

            return (r for r in result or () if _filter(r))

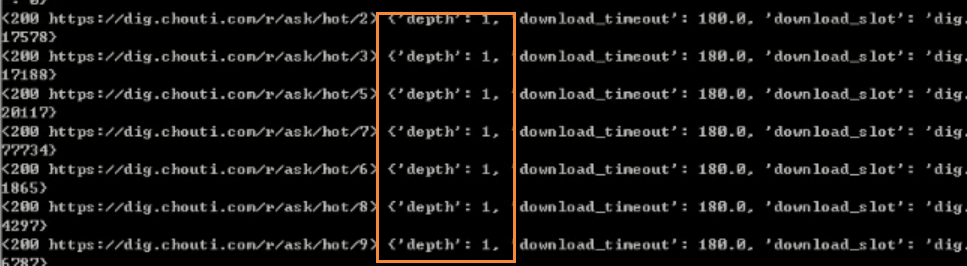

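Note: response.request is the Request object that produced the current response.

response.meta is equivalent to response.request.meta, so it gives access to the meta of the Request behind the current response.

Even when we pass no meta ourselves, Scrapy fills in some keys by default, such as the download latency of the current page.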

{'download_timeout': 180.0, 'download_slot': 'dig.chouti.com', 'download_latency': 0.5455923080444336}

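Meanwhile, the priority of a request is its own priority minus depth times DEPTH_PRIORITY: request.priority -= depth * self.prio

If DEPTH_PRIORITY is set to 1, the priority decreases with depth (0, -1, -2, ...).

If DEPTH_PRIORITY is set to -1, the priority increases with depth (0, 1, 2, ...).

So the sign of this setting controls the crawl order: with a positive value deeper requests get lower priority and shallow pages are handled first (breadth-first), and with a negative value deeper requests get higher priority (depth-first).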
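The priority arithmetic above can be checked with a few lines. adjusted_priority is a hypothetical helper that applies the same formula as request.priority -= depth * self.prio, assuming every request starts with priority 0.

```python
def adjusted_priority(base_priority, depth, depth_priority):
    """Same formula as the middleware: priority - depth * DEPTH_PRIORITY."""
    return base_priority - depth * depth_priority


# DEPTH_PRIORITY = 1: deeper requests get lower priority.
positive = [adjusted_priority(0, depth, 1) for depth in range(4)]
# DEPTH_PRIORITY = -1: deeper requests get higher priority.
negative = [adjusted_priority(0, depth, -1) for depth in range(4)]

print(positive)
print(negative)
```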

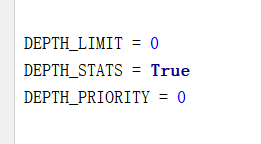

Default values of DEPTH_LIMIT and DEPTH_PRIORITY in Scrapy (both default to 0)

Default spider middleware configuration in Scrapy:

    SPIDER_MIDDLEWARES_BASE = {
        # Engine side
        'scrapy.spidermiddlewares.httperror.HttpErrorMiddleware': 50,
        'scrapy.spidermiddlewares.offsite.OffsiteMiddleware': 500,
        'scrapy.spidermiddlewares.referer.RefererMiddleware': 700,
        'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware': 800,
        'scrapy.spidermiddlewares.depth.DepthMiddleware': 900,
        # Spider side
    }

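Note: Scrapy follows the open/closed principle closely (the source is closed to modification, the configuration is open to extension).

Custom commands

There are two ways to define your own way of launching spiders.

To run a single spider, a plain Python script (.py file) is enough; Scrapy supports this out of the box.

Running a single spider from a script: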

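    import sys
    from scrapy.cmdline import execute

    if __name__ == '__main__':
        # Option 1: hard-code the spider name, then run `python <script>` in a
        # terminal from the script's directory.
        # execute(["scrapy", "crawl", "chouti", "--nolog"])

        # Option 2: read the spider name from sys.argv. sys.argv is a list whose
        # first element is the script path, followed by the arguments passed on
        # the command line, e.g. `python <script> chouti`.
        # print(sys.argv)
        execute(['scrapy', 'crawl', sys.argv[1], '--nolog'])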

To run several spiders at the same time, we need a custom command.

Steps to create a custom command:

- Create a directory with any name next to spiders, for example commands

- Create crawlall.py inside it. The file name is the command name: whatever the .py file is called becomes the name of the custom command.

crawlall.py

    from scrapy.commands import ScrapyCommand
    from scrapy.utils.project import get_project_settings


    class Command(ScrapyCommand):
        requires_project = True

        def syntax(self):
            return '[options]'

        def short_desc(self):
            # Description of the command, shown by `scrapy --help`
            return 'Runs all of the spiders'

        def run(self, args, opts):
            # Entry point of the custom command; add any conditions you need here.
            # Run every spider in the project.
            spider_list = self.crawler_process.spiders.list()
            for name in spider_list:
                self.crawler_process.crawl(name, **opts.__dict__)
            self.crawler_process.start()

- Add COMMANDS_MODULE = 'project_name.directory_name' to settings.py

- Run the command from the project directory: scrapy crawlall

自定制扩展

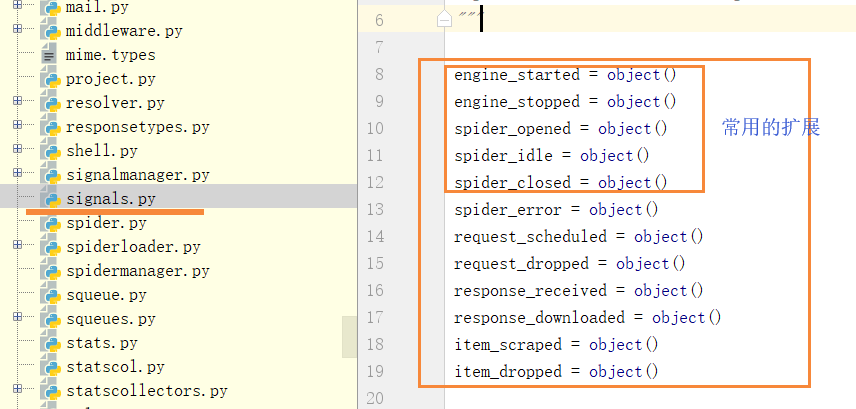

自定义扩展是利用信号在指定位置注册指定操作

自定义扩展是基于scrapy中的信号的

    from scrapy import signals


    class MyExtension(object):
        def __init__(self):
            pass

        @classmethod
        def from_crawler(cls, crawler):
            # Parameters can be read from the settings file:
            # val = crawler.settings.getint('MMMM')
            # ext = cls(val)
            ext = cls()

            # Run func_open when the spider is opened (spider_opened)
            crawler.signals.connect(ext.func_open, signal=signals.spider_opened)
            # Run func_close when the spider is closed (spider_closed)
            crawler.signals.connect(ext.func_close, signal=signals.spider_closed)

            return ext

        def func_open(self, spider):
            print('open')

        def func_close(self, spider):
            print('close')

As before, a custom extension only takes effect after it is registered in the settings file:

    # Enable or disable extensions
    # See https://doc.scrapy.org/en/latest/topics/extensions.html
    EXTENSIONS = {
        # 'scrapy.extensions.telnet.TelnetConsole': None,
        'xxx.xxx.xxxx': 500,
    }

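A custom extension hooks into points that Scrapy defines. Which extension points does Scrapy provide?

Explanation: engine_started and engine_stopped mark the start and stop of the engine, the very beginning and end of the whole crawl.

spider_opened and spider_closed mark the start and end of a spider.

spider_idle means the spider is idle; spider_error means the spider raised an error.

request_scheduled fires when the scheduler schedules a request; request_dropped fires when a request is dropped.

response_received means a response has been received; response_downloaded means the download has finished.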

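Proxies

There are three ways to set a proxy:

Based on environment variables (adds the proxy to every request in the current process)

This relies on os.environ from the os module. print(os.environ) shows the variables shared within the current process, and a proxy is configured by setting a key and a value on it.

Set the environment variables before the crawler starts.

In the launch script:

    import os

    os.environ['http_proxy'] = 'http://xxx.com'    # placeholder: proxy for http
    os.environ['https_proxy'] = 'https://xxx.com'  # placeholder: proxy for https

Or in the start_requests method:

    def start_requests(self):
        import os
        os.environ['http_proxy'] = 'http://xxx.com'
        yield Request(url='xxx')

Based on the Request's meta argument (adds the proxy to a single request)

Pass meta={'proxy': 'http://xxx.com'} when creating the Request.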

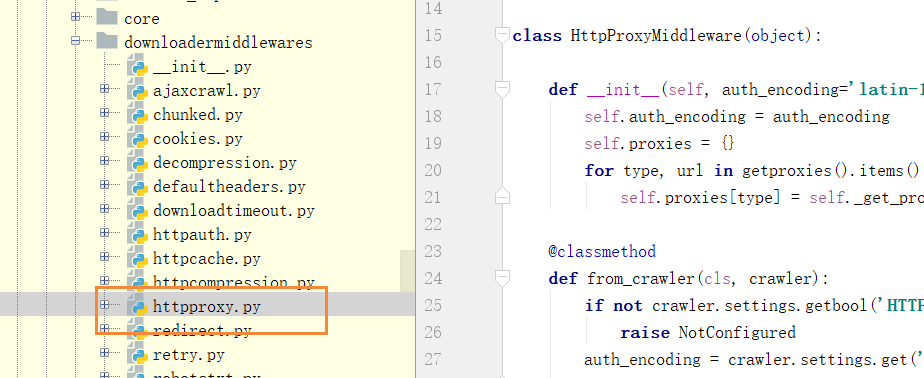

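Based on a downloader middleware

How is it used? First look at how the source implements it.

When HttpProxyMiddleware is loaded, from_crawler runs first and checks the HTTPPROXY_ENABLED setting.

This setting says whether proxy support is enabled. On instantiation the middleware then creates an empty dictionary, self.proxies = {}, and loops over getproxies(). What is getproxies?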

    class HttpProxyMiddleware(object):

        def __init__(self, auth_encoding='latin-1'):
            self.auth_encoding = auth_encoding
            self.proxies = {}
            for type, url in getproxies().items():
                self.proxies[type] = self._get_proxy(url, type)

getproxies = getproxies_environment, that is, on most platforms getproxies is just another name for this function from the standard library:

    def getproxies_environment():
        proxies = {}
        # First pass: accept any variable ending in _proxy, in any letter case
        for name, value in os.environ.items():
            name = name.lower()
            if value and name[-6:] == '_proxy':
                proxies[name[:-6]] = value
        if 'REQUEST_METHOD' in os.environ:
            proxies.pop('http', None)
        # Second pass: lowercase variable names take precedence
        for name, value in os.environ.items():
            if name[-6:] == '_proxy':
                name = name.lower()
                if value:
                    proxies[name[:-6]] = value
                else:
                    proxies.pop(name[:-6], None)
        return proxies

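This function loops over the environment variables, picks the keys that end in _proxy, slices that suffix off, and stores the result in the proxies dictionary of the middleware instance. For example, if we set os.environ["http_proxy"] = 'http://xxx.com', the resulting dictionary is {"http": "http://xxx.com"}. So this is one way to add a proxy, but the environment variable must be in place from the very beginning: set it in start_requests, or before the script starts. Because the middleware reads the environment once, in __init__, setting it in the launch script before Scrapy starts is the safer choice.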
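The suffix slicing described above can be tried on an ordinary dictionary instead of the real environment. This is a simplified sketch: proxies_from_env is a hypothetical helper that performs only the first pass of the function shown earlier, and the proxy addresses are made up.

```python
def proxies_from_env(environ):
    """Collect non-empty '<scheme>_proxy' entries and key them by scheme."""
    proxies = {}
    for name, value in environ.items():
        name = name.lower()
        if value and name.endswith("_proxy"):
            proxies[name[:-6]] = value   # strip the 6-character '_proxy' suffix
    return proxies


fake_env = {
    "http_proxy": "http://proxy.example:8080",
    "HTTPS_PROXY": "http://proxy.example:8443",
    "ftp_proxy": "",        # empty values are ignored
    "PATH": "/usr/bin",     # unrelated variables are ignored
}
result = proxies_from_env(fake_env)
print(result)
```

Passing the mapping in as an argument keeps the example independent of the machine's real environment variables.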

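When process_request runs, it first looks for 'proxy' in the Request's meta. If it is there, that proxy is used; if not, the middleware looks in self.proxies, whose values come from the environment variables.

So the meta argument of a Request takes precedence over the environment variables.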

    def process_request(self, request, spider):
        # ignore if proxy is already set
        if 'proxy' in request.meta:
            if request.meta['proxy'] is None:
                return
            # extract credentials if present
            creds, proxy_url = self._get_proxy(request.meta['proxy'], '')
            request.meta['proxy'] = proxy_url
            if creds and not request.headers.get('Proxy-Authorization'):
                request.headers['Proxy-Authorization'] = b'Basic ' + creds
            return
        elif not self.proxies:
            return

        parsed = urlparse_cached(request)
        scheme = parsed.scheme

        if scheme in self.proxies:
            self._set_proxy(request, scheme)

    class HttpProxyMiddleware(object):

        def __init__(self, auth_encoding='latin-1'):
            self.auth_encoding = auth_encoding
            self.proxies = {}
            for type, url in getproxies().items():
                self.proxies[type] = self._get_proxy(url, type)

        @classmethod
        def from_crawler(cls, crawler):
            if not crawler.settings.getbool('HTTPPROXY_ENABLED'):
                raise NotConfigured
            auth_encoding = crawler.settings.get('HTTPPROXY_AUTH_ENCODING')
            return cls(auth_encoding)

        def _basic_auth_header(self, username, password):
            user_pass = to_bytes(
                '%s:%s' % (unquote(username), unquote(password)),
                encoding=self.auth_encoding)
            return base64.b64encode(user_pass).strip()

        def _get_proxy(self, url, orig_type):
            proxy_type, user, password, hostport = _parse_proxy(url)
            proxy_url = urlunparse((proxy_type or orig_type, hostport, '', '', '', ''))

            if user:
                creds = self._basic_auth_header(user, password)
            else:
                creds = None

            return creds, proxy_url

        def process_request(self, request, spider):
            # ignore if proxy is already set
            if 'proxy' in request.meta:
                if request.meta['proxy'] is None:
                    return
                # extract credentials if present
                creds, proxy_url = self._get_proxy(request.meta['proxy'], '')
                request.meta['proxy'] = proxy_url
                if creds and not request.headers.get('Proxy-Authorization'):
                    request.headers['Proxy-Authorization'] = b'Basic ' + creds
                return
            elif not self.proxies:
                return

            parsed = urlparse_cached(request)
            scheme = parsed.scheme

            # 'no_proxy' is only supported by http schemes
            if scheme in ('http', 'https') and proxy_bypass(parsed.hostname):
                return

            if scheme in self.proxies:
                self._set_proxy(request, scheme)

        def _set_proxy(self, request, scheme):
            creds, proxy = self.proxies[scheme]
            request.meta['proxy'] = proxy
            if creds:
                request.headers['Proxy-Authorization'] = b'Basic ' + creds

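Summary: setting the proxy through the Request's meta or through environment variables suits small download volumes. With a large volume, the same one or few proxies are used so often that they are easily blocked.

So for a large number of requests we need the third way: a custom downloader middleware that picks a random proxy from the whole pool for each request. The traffic then shows no regular pattern and is less likely to be blocked.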

    import base64
    import random


    class ProxyMiddleware(object):

        def process_request(self, request, spider):
            PROXIES = [
                {'ip_port': '111.11.228.75:80', 'user_pass': ''},
                {'ip_port': '120.198.243.22:80', 'user_pass': ''},
                {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
                {'ip_port': '101.71.27.120:80', 'user_pass': ''},
                {'ip_port': '122.96.59.104:80', 'user_pass': ''},
                {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
            ]
            # Pick a different proxy at random for every request
            proxy = random.choice(PROXIES)
            request.meta['proxy'] = "http://%s" % proxy['ip_port']
            # Add credentials only when 'user_pass' ("user:password") is not empty
            if proxy['user_pass']:
                encoded_user_pass = base64.b64encode(proxy['user_pass'].encode('utf8'))
                request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass

After writing it, register the middleware under DOWNLOADER_MIDDLEWARES in settings.py.

Walkthrough of a Scrapy settings.py file

    # -*- coding: utf-8 -*-

    # Scrapy settings for step8_king project
    #
    # For simplicity, this file contains only settings considered important or
    # commonly used. You can find more settings consulting the documentation:
    #
    #     http://doc.scrapy.org/en/latest/topics/settings.html
    #     http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
    #     http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

    # 1. Bot (project) name
    BOT_NAME = 'step8_king'

    # 2. Modules where the spiders live
    SPIDER_MODULES = ['step8_king.spiders']
    NEWSPIDER_MODULE = 'step8_king.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    # 3. User-Agent request header sent by the client
    # USER_AGENT = 'step8_king (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    # 4. Whether to obey the site's robots.txt
    # ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    # 5. Number of concurrent requests
    # CONCURRENT_REQUESTS = 4

    # Configure a delay for requests for the same website (default: 0)
    # See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    # 6. Download delay in seconds
    # DOWNLOAD_DELAY = 2

    # The download delay setting will honor only one of:
    # 7. Concurrent requests per domain; the download delay is also applied per domain
    # CONCURRENT_REQUESTS_PER_DOMAIN = 2
    # Concurrent requests per IP; if set, CONCURRENT_REQUESTS_PER_DOMAIN is ignored
    # and the download delay is applied per IP
    # CONCURRENT_REQUESTS_PER_IP = 3

    # Disable cookies (enabled by default)
    # 8. Whether cookies are supported; cookies are managed through cookiejar
    # COOKIES_ENABLED = True
    # COOKIES_DEBUG = True

    # Disable Telnet Console (enabled by default)
    # 9. Telnet is used to inspect and control the running crawler:
    #    connect with `telnet ip port`, then issue commands
    # TELNETCONSOLE_ENABLED = True
    # TELNETCONSOLE_HOST = '127.0.0.1'
    # TELNETCONSOLE_PORT = [6023,]

    # 10. Default request headers
    # Override the default request headers:
    # DEFAULT_REQUEST_HEADERS = {
    #     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    #     'Accept-Language': 'en',
    # }

    # Configure item pipelines
    # See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
    # 11. Pipelines that process the scraped items
    # ITEM_PIPELINES = {
    #     'step8_king.pipelines.JsonPipeline': 700,
    #     'step8_king.pipelines.FilePipeline': 500,
    # }

    # 12. Custom extensions, invoked through signals
    # Enable or disable extensions
    # See http://scrapy.readthedocs.org/en/latest/topics/extensions.html
    # EXTENSIONS = {
    #     # 'step8_king.extensions.MyExtension': 500,
    # }

    # 13. Maximum crawl depth; the current depth is available in meta; 0 means no limit
    # DEPTH_LIMIT = 3

    # 14. Crawl order: 0 means depth-first, LIFO (default); 1 means breadth-first, FIFO

    # Last in, first out: depth-first
    # DEPTH_PRIORITY = 0
    # SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleLifoDiskQueue'
    # SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.LifoMemoryQueue'
    # First in, first out: breadth-first
    # DEPTH_PRIORITY = 1
    # SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleFifoDiskQueue'
    # SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.FifoMemoryQueue'

    # 15. Scheduler
    # SCHEDULER = 'scrapy.core.scheduler.Scheduler'
    # from scrapy.core.scheduler import Scheduler

    # 16. URL deduplication
    # DUPEFILTER_CLASS = 'step8_king.duplication.RepeatUrl'

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See http://doc.scrapy.org/en/latest/topics/autothrottle.html

    """
    17. AutoThrottle algorithm
        from scrapy.extensions.throttle import AutoThrottle

        How the delay is adjusted:
        1. Read the minimum delay, DOWNLOAD_DELAY
        2. Read the maximum delay, AUTOTHROTTLE_MAX_DELAY
        3. Set the initial download delay, AUTOTHROTTLE_START_DELAY
        4. When a download finishes, take its latency, i.e. the time between
           opening the connection and receiving the response headers
        5. Combine it with AUTOTHROTTLE_TARGET_CONCURRENCY:
            target_delay = latency / self.target_concurrency
            new_delay = (slot.delay + target_delay) / 2.0  # slot.delay is the previous delay
            new_delay = max(target_delay, new_delay)
            new_delay = min(max(self.mindelay, new_delay), self.maxdelay)
            slot.delay = new_delay
    """

    # Enable AutoThrottle
    # AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    # AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    # AUTOTHROTTLE_MAX_DELAY = 10
    # The average number of requests Scrapy should be sending in parallel to each remote server
    # AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

    # Enable showing throttling stats for every response received:
    # AUTOTHROTTLE_DEBUG = True

    # Enable and configure HTTP caching (disabled by default)
    # See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

    """
    18. HTTP cache
        Caches requests that were sent and their responses for later reuse
        from scrapy.downloadermiddlewares.httpcache import HttpCacheMiddleware
        from scrapy.extensions.httpcache import DummyPolicy
        from scrapy.extensions.httpcache import FilesystemCacheStorage
    """
    # Whether the cache is enabled
    # HTTPCACHE_ENABLED = True

    # Cache policy: cache every request; later requests are served from the cache
    # HTTPCACHE_POLICY = "scrapy.extensions.httpcache.DummyPolicy"
    # Cache policy: follow HTTP headers such as Cache-Control and Last-Modified
    # HTTPCACHE_POLICY = "scrapy.extensions.httpcache.RFC2616Policy"

    # Cache expiration time in seconds
    # HTTPCACHE_EXPIRATION_SECS = 0

    # Cache directory
    # HTTPCACHE_DIR = 'httpcache'

    # HTTP status codes that are not cached
    # HTTPCACHE_IGNORE_HTTP_CODES = []

    # Cache storage backend
    # HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

    """
    19. Proxies, configured through environment variables
        from scrapy.downloadermiddlewares.httpproxy import HttpProxyMiddleware

        Option 1: use the default middleware
            os.environ
            {
                http_proxy:http://root:woshiniba@192.168.11.11:9999/
                https_proxy:http://192.168.11.11:9999/
            }
        Option 2: use a custom downloader middleware

        def to_bytes(text, encoding=None, errors='strict'):
            if isinstance(text, bytes):
                return text
            if not isinstance(text, six.string_types):
                raise TypeError('to_bytes must receive a unicode, str or bytes '
                                'object, got %s' % type(text).__name__)
            if encoding is None:
                encoding = 'utf-8'
            return text.encode(encoding, errors)

        class ProxyMiddleware(object):
            def process_request(self, request, spider):
                PROXIES = [
                    {'ip_port': '111.11.228.75:80', 'user_pass': ''},
                    {'ip_port': '120.198.243.22:80', 'user_pass': ''},
                    {'ip_port': '111.8.60.9:8123', 'user_pass': ''},
                    {'ip_port': '101.71.27.120:80', 'user_pass': ''},
                    {'ip_port': '122.96.59.104:80', 'user_pass': ''},
                    {'ip_port': '122.224.249.122:8088', 'user_pass': ''},
                ]
                proxy = random.choice(PROXIES)
                request.meta['proxy'] = "http://%s" % proxy['ip_port']
                if proxy['user_pass']:
                    encoded_user_pass = base64.b64encode(to_bytes(proxy['user_pass']))
                    request.headers['Proxy-Authorization'] = b'Basic ' + encoded_user_pass
                    print("**************ProxyMiddleware have pass************" + proxy['ip_port'])
                else:
                    print("**************ProxyMiddleware no pass************" + proxy['ip_port'])

        DOWNLOADER_MIDDLEWARES = {
           'step8_king.middlewares.ProxyMiddleware': 500,
        }
    """

    """
    20. HTTPS
        There are two cases when crawling over HTTPS:
        1. The site uses a trusted certificate (supported by default)
            DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
            DOWNLOADER_CLIENTCONTEXTFACTORY = "scrapy.core.downloader.contextfactory.ScrapyClientContextFactory"

        2. The site uses a custom certificate
            DOWNLOADER_HTTPCLIENTFACTORY = "scrapy.core.downloader.webclient.ScrapyHTTPClientFactory"
            DOWNLOADER_CLIENTCONTEXTFACTORY = "step8_king.https.MySSLFactory"

            # https.py
            from scrapy.core.downloader.contextfactory import ScrapyClientContextFactory
            from twisted.internet.ssl import (optionsForClientTLS, CertificateOptions, PrivateCertificate)

            class MySSLFactory(ScrapyClientContextFactory):
                def getCertificateOptions(self):
                    from OpenSSL import crypto
                    v1 = crypto.load_privatekey(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.key.unsecure', mode='r').read())
                    v2 = crypto.load_certificate(crypto.FILETYPE_PEM, open('/Users/wupeiqi/client.pem', mode='r').read())
                    return CertificateOptions(
                        privateKey=v1,   # PKey object
                        certificate=v2,  # X509 object
                        verify=False,
                        method=getattr(self, 'method', getattr(self, '_ssl_method', None))
                    )
        Other:
            Related classes
                scrapy.core.downloader.handlers.http.HttpDownloadHandler
                scrapy.core.downloader.webclient.ScrapyHTTPClientFactory
                scrapy.core.downloader.contextfactory.ScrapyClientContextFactory
            Related settings
                DOWNLOADER_HTTPCLIENTFACTORY
                DOWNLOADER_CLIENTCONTEXTFACTORY
    """

    """
    21. Spider middleware
        class SpiderMiddleware(object):

            def process_spider_input(self, response, spider):
                '''
                Called after the download completes, before the response is handed to parse
                :param response:
                :param spider:
                :return:
                '''
                pass

            def process_spider_output(self, response, result, spider):
                '''
                Called with the results the spider returns
                :param response:
                :param result:
                :param spider:
                :return: must return an iterable of Request or Item objects
                '''
                return result

            def process_spider_exception(self, response, exception, spider):
                '''
                Called when an exception is raised
                :param response:
                :param exception:
                :param spider:
                :return: None to let the following middlewares handle the exception;
                         or an iterable of Request or Item objects, passed on to the
                         scheduler or the pipelines
                '''
                return None

            def process_start_requests(self, start_requests, spider):
                '''
                Called when the spider starts
                :param start_requests:
                :param spider:
                :return: an iterable of Request objects
                '''
                return start_requests

        Built-in spider middlewares (paths from Scrapy versions before 1.0;
        later versions use scrapy.spidermiddlewares.*):
            'scrapy.contrib.spidermiddleware.httperror.HttpErrorMiddleware': 50,
            'scrapy.contrib.spidermiddleware.offsite.OffsiteMiddleware': 500,
            'scrapy.contrib.spidermiddleware.referer.RefererMiddleware': 700,
            'scrapy.contrib.spidermiddleware.urllength.UrlLengthMiddleware': 800,
            'scrapy.contrib.spidermiddleware.depth.DepthMiddleware': 900,
    """
    # from scrapy.spidermiddlewares.referer import RefererMiddleware
    # Enable or disable spider middlewares
    # See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html
    SPIDER_MIDDLEWARES = {
        # 'step8_king.middlewares.SpiderMiddleware': 543,
    }

    """
    22. Downloader middleware
        class DownMiddleware1(object):
            def process_request(self, request, spider):
                '''
                Called for each request that needs downloading, through the
                process_request of every downloader middleware
                :param request:
                :param spider:
                :return:
                    None: continue with the following middlewares and download
                    Response: stop calling process_request, start calling process_response
                    Request: stop the middleware chain and send the Request back to the scheduler
                    raise IgnoreRequest: stop calling process_request, start calling process_exception
                '''
                pass

            def process_response(self, request, response, spider):
                '''
                Called on the way back, after the download completes
                :param request:
                :param response:
                :param spider:
                :return:
                    Response: passed on to the process_response of the other middlewares
                    Request: stop the middleware chain; the request is rescheduled for download
                    raise IgnoreRequest: Request.errback is called
                '''
                print('response1')
                return response

            def process_exception(self, request, exception, spider):
                '''
                Called when a download handler or a process_request() (downloader
                middleware) raises an exception
                :param request:
                :param exception:
                :param spider:
                :return:
                    None: let the following middlewares handle the exception
                    Response: stop calling the remaining process_exception methods
                    Request: stop the middleware chain; the request is rescheduled for download
                '''
                return None

        Default downloader middlewares (paths and numbers from Scrapy versions
        before 1.0; later versions use scrapy.downloadermiddlewares.*):
        {
            'scrapy.contrib.downloadermiddleware.robotstxt.RobotsTxtMiddleware': 100,
            'scrapy.contrib.downloadermiddleware.httpauth.HttpAuthMiddleware': 300,
            'scrapy.contrib.downloadermiddleware.downloadtimeout.DownloadTimeoutMiddleware': 350,
            'scrapy.contrib.downloadermiddleware.useragent.UserAgentMiddleware': 400,
            'scrapy.contrib.downloadermiddleware.retry.RetryMiddleware': 500,
            'scrapy.contrib.downloadermiddleware.defaultheaders.DefaultHeadersMiddleware': 550,
            'scrapy.contrib.downloadermiddleware.redirect.MetaRefreshMiddleware': 580,
            'scrapy.contrib.downloadermiddleware.httpcompression.HttpCompressionMiddleware': 590,
            'scrapy.contrib.downloadermiddleware.redirect.RedirectMiddleware': 600,
            'scrapy.contrib.downloadermiddleware.cookies.CookiesMiddleware': 700,
            'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 750,
            'scrapy.contrib.downloadermiddleware.chunked.ChunkedTransferMiddleware': 830,
            'scrapy.contrib.downloadermiddleware.stats.DownloaderStats': 850,
            'scrapy.contrib.downloadermiddleware.httpcache.HttpCacheMiddleware': 900,
        }
    """
    # from scrapy.downloadermiddlewares.httpauth import HttpAuthMiddleware
    # Enable or disable downloader middlewares
    # See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html
    # DOWNLOADER_MIDDLEWARES = {
    #     'step8_king.middlewares.DownMiddleware1': 100,
    #     'step8_king.middlewares.DownMiddleware2': 500,
    # }
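The delay update listed under item 17 of the settings file can be tried in isolation. next_delay is a hypothetical helper that applies only the four formula lines quoted there; the sample numbers are made up, and the real extension may apply further conditions not covered by this sketch.

```python
def next_delay(current_delay, latency, target_concurrency, min_delay, max_delay):
    """One step of the delay adjustment quoted in item 17."""
    target_delay = latency / target_concurrency
    # Move halfway from the previous delay towards the target ...
    new_delay = (current_delay + target_delay) / 2.0
    # ... but never go below the target delay itself.
    new_delay = max(target_delay, new_delay)
    # Finally clamp the result into [min_delay, max_delay].
    return min(max(min_delay, new_delay), max_delay)


slowing_down = next_delay(5.0, 1.0, 1.0, 0.0, 10.0)   # server answers quickly
speeding_up = next_delay(0.5, 2.0, 1.0, 0.0, 10.0)    # server answers slowly
clamped = next_delay(20.0, 30.0, 1.0, 0.0, 10.0)      # capped by max_delay
print(slowing_down, speeding_up, clamped)
```

In the three sample calls the delay first drops halfway towards the target, then jumps straight up to a higher target, and finally hits the configured maximum.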